Abstract

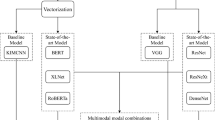

The goal of this research is to use social media to gain situational awareness in the wake of a crisis. With developments in information and communication technologies, social media has become the de facto norm for gathering and disseminating information. We present a method for identifying informative tweets within the massive volume of user tweets on social media. Once the informative tweets have been found, emergency responders can use them to gain situational awareness so that recovery actions can be carried out efficiently. The majority of previous research has focused on either the text or the images in tweets. A thorough review of the literature shows that text and images carry complementary information. The proposed method is a deep learning framework that utilizes multiple input modalities, specifically the text and image from a user-generated tweet. Our main focus was to devise an improved multimodal fusion strategy. The proposed system uses transformer-based image and text models. Its main building blocks are a fine-tuned RoBERTa model for text, a Vision Transformer model for images, a biLSTM, and an attention mechanism. We put forward a multiplicative fusion strategy for image and text inputs. Extensive experiments were carried out on various network architectures with seven datasets spanning different types of disasters, including wildfire, hurricane, earthquake and flood. Our system surpassed several state-of-the-art approaches, achieving accuracy in the range of 94–98%. The results show that identifying the interaction between multiple related modalities enhances the quality of a deep learning classifier.
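To make the idea of multiplicative fusion concrete, the following is a minimal sketch and not the authors' implementation. It assumes that the text and image features have already been projected to a common dimension and that fusion is an element-wise product followed by a linear classifier; the abstract does not specify these details. The function names, toy feature values, and weights are all hypothetical.

```python
import math


def multiplicative_fusion(text_vec, image_vec):
    """Element-wise (Hadamard) product of two equal-length feature vectors.

    Assumption: both modalities were projected to the same dimension upstream.
    """
    if len(text_vec) != len(image_vec):
        raise ValueError("text and image features must share one dimension")
    return [t * v for t, v in zip(text_vec, image_vec)]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(fused, weights, bias):
    """Linear layer followed by softmax; one weight row per class."""
    logits = [
        sum(w * f for w, f in zip(row, fused)) + b
        for row, b in zip(weights, bias)
    ]
    return softmax(logits)


# Toy 4-dimensional features standing in for projected text and image
# encoder outputs (hypothetical values, not taken from the paper).
text_features = [0.5, -1.0, 2.0, 0.0]
image_features = [2.0, 0.5, 1.0, 3.0]

fused = multiplicative_fusion(text_features, image_features)

# Hypothetical weights for two classes: [non-informative, informative].
weights = [
    [0.1, 0.2, -0.3, 0.0],
    [-0.1, 0.4, 0.5, 0.2],
]
bias = [0.0, 0.0]

probs = classify(fused, weights, bias)
```

In this sketch a feature dimension contributes to the fused vector only when both modalities are non-zero there, which is one way an element-wise product can capture interaction between modalities that plain concatenation would not.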

Data availability

The datasets used and analyzed during the current study are available online: https://crisisnlp.qcri.org/crisismmd.

Ethics declarations

Competing interests

The authors declare that they have no conflict of interest.

About this article

Cite this article

Koshy, R., Elango, S. Multimodal tweet classification in disaster response systems using transformer-based bidirectional attention model. Neural Comput & Applic 35, 1607–1627 (2023). https://doi.org/10.1007/s00521-022-07790-5
