Abstract

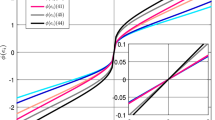

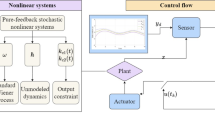

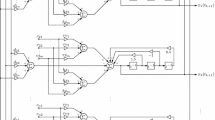

This paper is concerned with time-variant neural computing in a semi-global sense, taking into account initial conditions located within a region of definite, finite radius. Both the conventional single and double power-rate RNN models are characterized, and closed-form expressions of their settling-time functions are presented for given initial conditions, by which fixed/predefined-time convergence can be assured in the semi-global sense. Although it exhibits only asymptotic convergence, the conventional linear RNN model is also examined for comparison. Modified RNN models adopt the inverse of the settling-time bound obtained from the fixed-time convergence results, so that the prescribed time becomes an adjustable parameter. A novel two-phase RNN model with a pre-specified transition state is proposed; it achieves not only semi-global fixed/predefined-time stability but also a faster convergence rate than the conventional models. Through numerical simulation, the proposed models are applied to and compared on time-variant matrix inversion, linear equation solving, and repeatable motion planning of a redundant manipulator in the presence of initial errors.
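To make the settling-time notions above concrete, the following Python sketch compares two scalar error dynamics. It assumes, for illustration only, a linear model \(\dot e = -\gamma e\) and a single power-rate model \(\dot e = -\gamma |e|^{\alpha}\operatorname{sign}(e)\) with \(0<\alpha<1\); these forms and the names `gamma`, `alpha`, `R`, and `T_c` are assumptions, not the paper's model equations. For the assumed power-rate model the settling time from \(e(0)\) is \(|e(0)|^{1-\alpha}/(\gamma(1-\alpha))\), so over the region \(|e(0)| \le R\) it is bounded by \(R^{1-\alpha}/(\gamma(1-\alpha))\). Choosing \(\gamma = R^{1-\alpha}/((1-\alpha)T_c)\), i.e. the inverse of that bound scaled by a prescribed time \(T_c\), makes every trajectory starting in the region settle by \(T_c\); this is one hypothetical way to realize a predefined-time gain.

```python
import math
from typing import Callable, Optional

# Hypothetical scalar error dynamics used only for illustration;
# they are assumptions, not the RNN models defined in the paper.

def power_rate_settling_time(e0: float, gamma: float, alpha: float) -> float:
    """Closed-form settling time of de/dt = -gamma*|e|^alpha*sign(e), 0 < alpha < 1."""
    return abs(e0) ** (1.0 - alpha) / (gamma * (1.0 - alpha))

def predefined_gain(R: float, alpha: float, T_c: float) -> float:
    """Gain that makes the worst-case settling time over |e0| <= R equal to T_c."""
    return R ** (1.0 - alpha) / ((1.0 - alpha) * T_c)

def time_to_tolerance(rhs: Callable[[float], float], e0: float, dt: float = 1e-5,
                      t_end: float = 5.0, tol: float = 1e-6) -> Optional[float]:
    """Forward-Euler integration; returns the first grid time with |e| < tol, or None."""
    e, t = e0, 0.0
    while t < t_end:
        if abs(e) < tol:
            return t
        e += dt * rhs(e)
        t += dt
    return None

R, alpha, T_c = 10.0, 0.5, 1.0          # illustrative radius, exponent, prescribed time
gamma = predefined_gain(R, alpha, T_c)  # equals 2*sqrt(10) for these values

def power_rate(e: float) -> float:
    return -gamma * abs(e) ** alpha * math.copysign(1.0, e)

def linear(e: float) -> float:
    return -gamma * e

for e0 in (R, 1.0):
    print(f"e0={e0:4.1f}  closed-form T={power_rate_settling_time(e0, gamma, alpha):.4f}  "
          f"power-rate sim={time_to_tolerance(power_rate, e0):.4f}  "
          f"linear sim={time_to_tolerance(linear, e0):.4f}")
```

By construction, the closed-form settling time from \(e(0)=R\) equals \(T_c\), and the Euler estimate should agree up to discretization and the tolerance `tol`. The linear model with the same gain never reaches zero; it only reaches the tolerance after roughly \(\ln(|e(0)|/\mathrm{tol})/\gamma\).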

Funding

This work was supported by the National Natural Science Foundation of China under Grant 62073291.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Appendix A. The proof for Corollary 1

For model (8), it follows that

From \(\Lambda _{ij}(0) > 1\) to \(\Lambda _{ij}(t_{1})=1\), we have

since \(\Lambda_{ij}^{\alpha} \le \Lambda_{ij}\) for \(\Lambda_{ij} \ge 1\). Defining \(y = \Lambda_{ij}^{1-\beta}\) yields

Solving the above linear differential inequality, we obtain

Since \(y(t_1)=1\),

From \(\Lambda _{ij}(t_1)=1\) to \(\Lambda _{ij}(t_2)=0\), we have

since \(\Lambda_{ij}^{\beta} \le \Lambda_{ij}\) for \(0 \le \Lambda_{ij} \le 1\). Defining \(y = \Lambda_{ij}^{1-\alpha}\) leads to

Solving the above differential inequality for \(t \ge t_1\), we obtain

Since \(y(t_1) = 1\) and \(y(t_2)=0\),

Hence, the settling time function satisfies

This completes the proof.
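The two-phase split at \(\Lambda_{ij}=1\) used in the proof can be checked numerically. The following Python sketch assumes, hypothetically, the scalar decay \(\dot\Lambda = -k_1\Lambda^{\alpha} - k_2\Lambda^{\beta}\) with \(0<\alpha<1<\beta\); because the equations of model (8) are not shown here, this form, the gains `k1`, `k2`, and the helper names are illustrative assumptions. For each phase it evaluates the exact duration by quadrature after the substitutions \(y=\Lambda^{1-\beta}\) (phase 1) and \(u=\Lambda^{1-\alpha}\) (phase 2), and brackets it between two elementary estimates: dropping the non-dominant term gives an upper bound, while the inequalities \(\Lambda^{\alpha}\le\Lambda\) (for \(\Lambda\ge1\)) and \(\Lambda^{\beta}\le\Lambda\) (for \(\Lambda\le1\)) make the transformed equation linear and give a lower bound. These estimates are not claimed to be the expression of Corollary 1.

```python
import math
from typing import Callable, Dict, Tuple

def simpson(f: Callable[[float], float], a: float, b: float, n: int = 2000) -> float:
    """Composite Simpson rule on [a, b]; n must be even."""
    h = (b - a) / n
    s = f(a) + f(b)
    for i in range(1, n):
        s += (4.0 if i % 2 else 2.0) * f(a + i * h)
    return s * h / 3.0

def phase_times(lam0: float, k1: float, k2: float,
                alpha: float, beta: float) -> Dict[int, Tuple[float, float, float]]:
    """ASSUMED model dL/dt = -k1*L^alpha - k2*L^beta, 0 < alpha < 1 < beta, L(0) = lam0 > 1.
    Returns {phase: (lower, exact, upper)} for phase 1 (lam0 -> 1) and phase 2 (1 -> 0)."""
    y0 = lam0 ** (1.0 - beta)           # y = L^(1-beta) runs from y0 up to 1 in phase 1
    p = (beta - alpha) / (beta - 1.0)   # exponent of y after substitution (p > 1)
    q = (beta - alpha) / (1.0 - alpha)  # exponent of u = L^(1-alpha) in phase 2 (q > 1)

    # Exact phase durations in smooth variables (the L^-alpha singularity at 0 disappears).
    t1 = simpson(lambda y: 1.0 / ((beta - 1.0) * (k1 * y ** p + k2)), y0, 1.0)
    t2 = simpson(lambda u: 1.0 / ((1.0 - alpha) * (k1 + k2 * u ** q)), 0.0, 1.0)

    # Upper bounds: drop the non-dominant power term in each phase.
    t1_up = (1.0 - y0) / ((beta - 1.0) * k2)
    t2_up = 1.0 / ((1.0 - alpha) * k1)

    # Lower bounds: L^alpha <= L (phase 1) and L^beta <= L (phase 2)
    # turn the equations for y and u into linear ones with log-form solutions.
    t1_lo = math.log((k1 + k2) / (k1 * y0 + k2)) / ((beta - 1.0) * k1)
    t2_lo = math.log((k1 + k2) / k1) / ((1.0 - alpha) * k2)

    return {1: (t1_lo, t1, t1_up), 2: (t2_lo, t2, t2_up)}

result = phase_times(lam0=100.0, k1=1.0, k2=1.0, alpha=0.5, beta=2.0)
for phase, (lo, exact, up) in result.items():
    print(f"phase {phase}: {lo:.4f} <= {exact:.4f} <= {up:.4f}")
print(f"total settling time <= {result[1][2] + result[2][2]:.4f}")
```

Summing the two upper bounds gives \(\frac{1-\Lambda_0^{1-\beta}}{k_2(\beta-1)} + \frac{1}{k_1(1-\alpha)}\), which stays below the global fixed-time bound \(\frac{1}{k_2(\beta-1)} + \frac{1}{k_1(1-\alpha)}\) for every \(\Lambda_0>1\); restricting the initial condition to a finite radius therefore yields a tighter, semi-global estimate.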

About this article

Cite this article

Sun, M., Li, X. & Zhong, G. Semi-global fixed/predefined-time RNN models with comprehensive comparisons for time-variant neural computing. Neural Comput & Applic 35, 1675–1693 (2023). https://doi.org/10.1007/s00521-022-07820-2

DOI: https://doi.org/10.1007/s00521-022-07820-2