Abstract

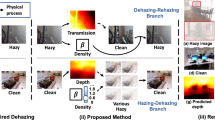

The performance of deep learning-based single image dehazing methods depends heavily on both the model design and the training dataset. Existing state-of-the-art methods focus on designing novel dehazing models or improving training strategies to obtain better dehazing results. In this work, instead of designing a new deep dehazing model, we aim to further improve dehazing performance by enriching the training data, exploring an intuitive yet efficient way to synthesize photo-realistic hazy images. It is well known that in a natural hazy image, the perceived haze density increases with scene depth. Motivated by this observation, we develop a depth-aware haze generation network, HazeGAN, which combines a Generative Adversarial Network (GAN), a depth estimation network, and the physical atmospheric scattering model to progressively synthesize hazy images. Specifically, a separate depth estimation network is embedded to extract multi-scale depth features, which the atmospheric scattering model uses to generate multi-scale hazy features. These hazy features are fused into the GAN generator, which outputs synthetic hazy images with depth-aware haze effects. Extensive experimental results demonstrate that HazeGAN can generate diverse training pairs of depth-aware hazy images and clear images, which effectively enrich existing benchmark datasets and improve the generalization capability of existing deep image dehazing models.
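
The depth dependence described above comes from the classical atmospheric scattering model (McCartney, 1976), usually written as I(x) = J(x)t(x) + A(1 - t(x)) with transmission t(x) = exp(-βd(x)), where J is the clear image, d the scene depth, β the scattering coefficient and A the global atmospheric light. Because t decays with d, distant pixels are pulled toward A, i.e. haze grows with depth. The NumPy sketch below applies this model directly to a clear image and a depth map. It illustrates the physics only and is not HazeGAN, which instead applies the model to learned multi-scale depth features inside the GAN generator. The function names, the 2x2 average-pooling pyramid used for the multi-scale variant, and the default values of β and A are hypothetical choices made for illustration.

```python
import numpy as np


def transmission(depth: np.ndarray, beta: float) -> np.ndarray:
    """Transmission map t(x) = exp(-beta * d(x)); decreases as depth grows."""
    return np.exp(-beta * depth)


def atmospheric_scattering(clear: np.ndarray, depth: np.ndarray,
                           beta: float = 1.0, airlight: float = 0.8) -> np.ndarray:
    """Synthesize a hazy image with I(x) = J(x) t(x) + A (1 - t(x)).

    clear: H x W x C image with values in [0, 1].
    depth: H x W depth map (arbitrary units, larger means farther).
    beta and airlight are illustrative defaults, not values from the paper.
    """
    t = transmission(depth, beta)[..., np.newaxis]  # H x W x 1, broadcasts over channels
    return clear * t + airlight * (1.0 - t)


def avg_pool2(x: np.ndarray) -> np.ndarray:
    """Hypothetical helper: 2x2 average pooling over the first two axes (H, W even)."""
    h, w = x.shape[:2]
    return x.reshape(h // 2, 2, w // 2, 2, *x.shape[2:]).mean(axis=(1, 3))


def multiscale_haze(clear: np.ndarray, depth: np.ndarray, levels: int = 3,
                    beta: float = 1.0, airlight: float = 0.8) -> list:
    """Hypothetical multi-scale variant: apply the scattering model on an
    image/depth pyramid, returning outputs from finest to coarsest."""
    outputs = [atmospheric_scattering(clear, depth, beta, airlight)]
    for _ in range(levels - 1):
        clear, depth = avg_pool2(clear), avg_pool2(depth)
        outputs.append(atmospheric_scattering(clear, depth, beta, airlight))
    return outputs
```

With zero depth the output equals the clear image, and as depth grows every pixel converges to the atmospheric light A. In HazeGAN the depth input is a learned feature rather than a ground-truth map, and the resulting hazy features are fused into the generator instead of being returned as images.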

Data Availability

The datasets generated during and/or analysed during the current study are available from the first author or the corresponding author on reasonable and motivated request.

Acknowledgements

This work is supported in part by the National Natural Science Foundation of China (61972143, 62172059), in part by the Scientific Research Fund of Hunan Provincial Education Department (21C0534), and in part by the Scientific Research Fund of Hunan Provincial Key Laboratory of Intelligent Information Processing and Application (2021HSKFJJ040).

Ethics declarations

Conflict of interest

The authors have no competing interests.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chen, J., Yang, G., Xia, M. et al. From depth-aware haze generation to real-world haze removal. Neural Comput & Applic 35, 8281–8293 (2023). https://doi.org/10.1007/s00521-022-08101-8

DOI: https://doi.org/10.1007/s00521-022-08101-8