Abstract

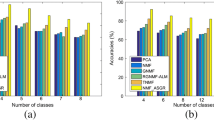

Nonnegative matrix factorization (NMF) is a crucial method for image clustering. However, NMF may yield inaccurate clustering results because its factorization results contain no information about the structure of the data. In this paper, we propose an algorithm for nonnegative matrix factorization under structure constraints (SNMF). The factorization results of SNMF preserve the global and local structure information of the data simultaneously. In SNMF, the global structure information is captured by the cosine measure under \(\ell _2\) norm constraints. The \(\ell _2\) norm constraints are also used to obtain more discriminative data representations. A graph regularization term is employed to preserve the local structure. Effective updating rules are given. Moreover, the effects of different normalizations on similarities are investigated through experiments. Numerical results on real datasets confirm the effectiveness of SNMF.

Data availability

All datasets analyzed in this study are available on the homepage of Deng Cai (http://www.cad.zju.edu.cn/home/dengcai/).

References

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444. https://doi.org/10.1038/nature14539

Guan Y, Fang J, Wu X (2020) Multi-pose face recognition using cascade alignment network and incremental clustering. Signal Image Video Process 1:1–9

Ren Y, Kamath U, Domeniconi C, Xu Z (2019) Parallel boosted clustering. Neurocomputing 351:87–100

Xie P, Xing EP (2015) Integrating image clustering and codebook learning. In: AAAI, pp 1903–1909

Chang J, Chen Y, Qi L, Yan H (2020) Hypergraph clustering using a new laplacian tensor with applications in image processing. SIAM J Imag Sci 13(3):1157–1178

Song K, Yao X, Nie F, Li X, Xu M (2021) Weighted bilateral k-means algorithm for fast co-clustering and fast spectral clustering. Pattern Recogn 109:107560

Ren Y, Wang N, Li M, Xu Z (2020) Deep density-based image clustering. Knowl-Based Syst 1:105841

Kumar N, Uppala P, Duddu K, Sreedhar H, Varma V, Guzman G, Walsh M, Sethi A (2018) Hyperspectral tissue image segmentation using semi-supervised NMF and hierarchical clustering. IEEE Trans Med Imaging 38(5):1304–1313

Belhumeur PN, Hespanha JP, Kriegman DJ (1997) Eigenfaces vs. fisherfaces: recognition using class specific linear projection. IEEE Trans Pattern Anal Mach Intell 19(7):711–720

Ji S, Ye J (2008) Generalized linear discriminant analysis: a unified framework and efficient model selection. IEEE Trans Neural Networks 19(10):1768–1782

Lee DD, Seung HS (1999) Learning the parts of objects by non-negative matrix factorization. Nature 401(6755):788

Cai D, He X, Han J, Huang TS (2010) Graph regularized nonnegative matrix factorization for data representation. IEEE Trans Pattern Anal Mach Intell 33(8):1548–1560

Shang F, Jiao L, Wang F (2012) Graph dual regularization non-negative matrix factorization for co-clustering. Pattern Recogn 45(6):2237–2250

Ding CH, Li T, Jordan MI (2008) Convex and semi-nonnegative matrix factorizations. IEEE Trans Pattern Anal Mach Intell 32(1):45–55

Hu W, Choi K-S, Wang P, Jiang Y, Wang S (2015) Convex nonnegative matrix factorization with manifold regularization. Neural Netw 63:94–103

Cui G, Li X, Dong Y (2018) Subspace clustering guided convex nonnegative matrix factorization. Neurocomputing 292:38–48

Kong D, Ding C, Huang H (2011) Robust nonnegative matrix factorization using l21-norm. In: Proceedings of the 20th ACM international conference on information and knowledge management, pp 673–682

Li Z, Tang J, He X (2017) Robust structured nonnegative matrix factorization for image representation. IEEE Trans Neural Netw Learn Syst 29(5):1947–1960

Zhang Z, Liao S, Zhang H, Wang S, Hua C (2018) Improvements in sparse non-negative matrix factorization for hyperspectral unmixing algorithms. J Appl Remote Sens 12(4):045015

Xing L, Dong H, Jiang W, Tang K (2018) Nonnegative matrix factorization by joint locality-constrained and l2,1-norm regularization. Multimed Tools Appl 77(3):3029–3048

Babaee M, Tsoukalas S, Babaee M, Rigoll G, Datcu M (2016) Discriminative nonnegative matrix factorization for dimensionality reduction. Neurocomputing 173:212–223

Liu H, Wu Z, Li X, Cai D, Huang TS (2011) Constrained nonnegative matrix factorization for image representation. IEEE Trans Pattern Anal Mach Intell 34(7):1299–1311

Fei W, Tao L, Changshui Z (2008) Semi-supervised clustering via matrix factorization. In: Proceedings of 2008 SIAM International Conference on Data Mining (SDM 2008), pp 1–12

Yang Y-J, Hu B-G (2007) Pairwise constraints-guided non-negative matrix factorization for document clustering. In: IEEE/WIC/ACM International Conference on Web Intelligence (WI’07). IEEE, pp 250–256

Yang Z, Hu Y, Liang N, Lv J (2019) Nonnegative matrix factorization with fixed l2-norm constraint. Circuits Syst Signal Process 38(7):3211–3226

Ahmed I, Hu XB, Acharya MP, Ding Y (2021) Neighborhood structure assisted non-negative matrix factorization and its application in unsupervised point-wise anomaly detection. J Mach Learn Res 22(34):1–32

Kuang D, Ding C, Park H (2012) Symmetric Nonnegative Matrix Factorization for Graph Clustering, pp 106–117. https://doi.org/10.1137/1.9781611972825.10

Samaria FS, Harter AC (1994) Parameterisation of a stochastic model for human face identification. In: Proceedings of 1994 IEEE workshop on applications of computer vision, pp 138–142. https://doi.org/10.1109/ACV.1994.341300

Hedjam R, Abdesselam A, Melgani F (2021) NMF with feature relationship preservation penalty term for clustering problems. Pattern Recogn 112:107814

Cai D, He X, Han J (2005) Document clustering using locality preserving indexing. IEEE Trans Knowl Data Eng 17(12):1624–1637

Wang Y, Chen L, Mei J-P (2014) Stochastic gradient descent based fuzzy clustering for large data. In: 2014 IEEE international conference on fuzzy systems (FUZZ-IEEE). IEEE, pp 2511–2518

Hubert L, Arabie P (1985) Comparing partitions. J Classif 2(1):193–218

Goldfarb D, Wen Z, Yin W (2009) A curvilinear search method for p-harmonic flows on spheres. SIAM J Imag Sci 2(1):84–109. https://doi.org/10.1137/080726926

Vese LA, Osher SJ (2002) Numerical methods for p-harmonic flows and applications to image processing. SIAM J Numer Anal 40(6):2085–2104. https://doi.org/10.1137/S0036142901396715

Acknowledgements

This work was supported by the National Natural Science Foundation of China (11961010, 61967004).

Ethics declarations

Conflict of interest

All authors disclosed no relevant relationships.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

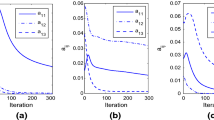

Because the updating rules (18) and (19) are both gradient descent methods, the objective (16) is non-increasing under them. The proof is given below.

Denote the objective function of (16) as F. The partial derivative of F with respect to \(U_{mk}\) is

The formulation of updating \(U_{mk}\) through the gradient descent method is the following:

When \(\tau _u={U_{mk}}/{(UV^TV)_{mk}}\), the nonnegative constraints on U hold, and (26) reduces to (18).
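The effect of this step size can be checked numerically. The following is a minimal sketch, not the authors' implementation: because (16) is not reproduced here, it assumes that the part of the objective depending on U is the standard Frobenius term \(\frac{1}{2}\Vert X-UV^T\Vert _F^2\), so that the gradient is \(UV^TV-XV\). The name `X`, the factor \(\frac{1}{2}\), and the small constant `eps` are assumptions made for illustration. Under these assumptions, a gradient step with the elementwise step size \(U_{mk}/(UV^TV)_{mk}\) coincides with the classical multiplicative update and keeps U nonnegative.

```python
import numpy as np

def objective(X, U, V):
    # Assumed U-dependent part of the objective: 0.5 * ||X - U V^T||_F^2.
    return 0.5 * np.linalg.norm(X - U @ V.T, "fro") ** 2

def gradient_step_U(X, U, V, eps=1e-12):
    # Gradient of the assumed term with respect to U.
    UVtV = U @ (V.T @ V)
    grad = UVtV - X @ V
    # Elementwise step size tau_u = U / (U V^T V); eps only guards division by zero.
    tau = U / (UVtV + eps)
    return U - tau * grad

def multiplicative_step_U(X, U, V, eps=1e-12):
    # Classical multiplicative update, written out directly for comparison.
    return U * (X @ V) / (U @ (V.T @ V) + eps)

rng = np.random.default_rng(0)
X = rng.random((6, 5))   # nonnegative data matrix (hypothetical sizes)
U = rng.random((6, 3))
V = rng.random((5, 3))

U_gd = gradient_step_U(X, U, V)
U_mu = multiplicative_step_U(X, U, V)
print(np.allclose(U_gd, U_mu), objective(X, U, V), objective(X, U_gd, V))
```

The subtraction in the gradient step cancels exactly against the \(UV^TV\) term, which is why no projection onto the nonnegative orthant is needed.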

For V, the updating rule (19) is also a gradient descent method. The proof is similar to that in [25].

Let \(x_r\) and h denote the rth row of V and \(\frac{\partial F}{\partial x_r}\), respectively, and let \(F_1(x_r)\) denote the part of (16) that depends on \(x_r\). Omitting the nonnegative constraints, the Lagrangian of \(x_r\) is the following:

Denote

Rewrite (27) as follows:

When \(\nabla _xL(x,\lambda )=0\), we have

Through the constraint \(x^Tx=1\), we obtain

Thus, rewrite \(\nabla _xL(x,\lambda )\) as follows:

Let A denote \(ax^T-xa^T\); A is therefore a skew-symmetric matrix. Since Ax is the gradient of (28), the gradient descent updating rule for x should be the following:

However, with this update it is difficult to satisfy the constraint \(y^Ty=1\). Following [33, 34], the modified update (30) is used instead.

The update (30) satisfies \(y^Ty=x^Tx=1\) for any skew-symmetric matrix A and any \(\tau\).
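The norm-preservation property can be illustrated with a short numerical sketch. Equation (30) is not reproduced here, so the code assumes that it takes the Cayley-transform form used in curvilinear search methods, \(y(\tau )=(I+\frac{\tau }{2}A)^{-1}(I-\frac{\tau }{2}A)x\). The function name `cayley_step` and the random test vectors are hypothetical. For a skew-symmetric A, the matrix \((I+\frac{\tau }{2}A)^{-1}(I-\frac{\tau }{2}A)\) is orthogonal, so the Euclidean norm of x is preserved for every \(\tau\).

```python
import numpy as np

def cayley_step(x, a, tau):
    # A = a x^T - x a^T is skew-symmetric by construction.
    A = np.outer(a, x) - np.outer(x, a)
    I = np.eye(x.size)
    # Assumed form of the update: solve (I + tau/2 A) y = (I - tau/2 A) x.
    return np.linalg.solve(I + 0.5 * tau * A, (I - 0.5 * tau * A) @ x)

rng = np.random.default_rng(1)
x = rng.random(5)
x /= np.linalg.norm(x)          # start on the unit sphere, x^T x = 1
a = rng.standard_normal(5)      # stands in for the vector a of the derivation

A = np.outer(a, x) - np.outer(x, a)
norms = [np.linalg.norm(cayley_step(x, a, t)) for t in (0.01, 0.5, 3.0)]
print(norms)
```

The linear system is always solvable because the eigenvalues of a real skew-symmetric matrix are purely imaginary, so \(I+\frac{\tau }{2}A\) is nonsingular for any real \(\tau\).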

From Lemma 1 (2) in [25], (30) can be expressed as:

Then, from Lemma 2 in [25], (31) can be rewritten as

where

The nonnegative constraints on y should be handled next.

Denote \(q=1-(\frac{\tau }{2})^2(a^Tx)^2+(\frac{\tau }{2})^2\Vert a\Vert ^2\Vert x\Vert ^2\). Rewrite (32) as follows:

where

Expanding \(\beta _1^{'}(\tau )(c_1-c_2)\) and \(\beta _2^{'}x\) in (33) through auxiliary variables (6), we get

Because \(y(\tau )\) is subject to nonnegative constraints, the step size \(\tau\) must satisfy

Denote \((a^Tx)^2-\Vert a\Vert ^2\Vert x\Vert ^2\) as M. Then \(q=1-(\frac{\tau }{2})^2M\). Therefore, we obtain

Using the auxiliary variables (6), we obtain the following series of equations:

(6) ensures that \(C_i\ge 0\) and \(D_i\le 0\). Thus, when \(\tau\) satisfies (36) and \(\tau >0\), there are three cases.

1. When \(B_i>0\),

$$\begin{aligned} \tau =\frac{\sqrt{C_i^2-4B_iD_i}-C_i}{2B_i}. \end{aligned}$$

2. When \(B_i=0\),

$$\begin{aligned} \tau =-\frac{D_i}{C_i}. \end{aligned}$$

3. When \(B_i<0\),

$$\begin{aligned} \tau =\frac{\sqrt{C_i^2-4B_iD_i}-C_i}{2B_i}. \end{aligned}$$
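The three cases can be collected into one small routine. This is a sketch under stated assumptions: (36) is not reproduced here, and the root formulas above are consistent with solving \(B_i\tau ^2+C_i\tau +D_i=0\) for a nonnegative \(\tau\) when \(C_i\ge 0\) and \(D_i\le 0\), which is what the code assumes. The function name `positive_step` and the choice to return `None` when no root exists are hypothetical and are not part of the original derivation.

```python
import math

def positive_step(B, C, D):
    """Nonnegative root of B*tau**2 + C*tau + D = 0, assuming C >= 0 and D <= 0."""
    if B == 0:
        # Case 2: the equation is linear, tau = -D / C.
        if C == 0:
            return None  # degenerate input, no step size is determined (assumption)
        return -D / C
    disc = C * C - 4.0 * B * D
    if disc < 0:
        # Can only happen when B < 0; no real root exists (handling is an assumption).
        return None
    # Cases 1 and 3 share the same closed form.
    return (math.sqrt(disc) - C) / (2.0 * B)

tau_pos = positive_step(1.0, 1.0, -2.0)    # case B > 0
tau_zero = positive_step(0.0, 2.0, -4.0)   # case B = 0
tau_neg = positive_step(-1.0, 3.0, -2.0)   # case B < 0
print(tau_pos, tau_zero, tau_neg)
```

When \(B_i>0\), the product \(-4B_iD_i\) is nonnegative, so the square root is at least \(C_i\) and the root is nonnegative. When \(B_i<0\), numerator and denominator are both nonpositive, and the formula selects the smaller of the two nonnegative roots.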

Thus, we obtain

(37) guarantees that (35) holds. Thus, the updating rule (19) guarantees that all constraints hold.

It has now been proved that (18) and (19) are gradient descent methods for (16). Therefore, (16) is non-increasing under (18) and (19).

About this article

Cite this article

Jia, M., Li, X. & Zhang, Y. An algorithm of nonnegative matrix factorization under structure constraints for image clustering. Neural Comput & Applic 35, 7891–7907 (2023). https://doi.org/10.1007/s00521-022-08136-x

DOI: https://doi.org/10.1007/s00521-022-08136-x