Abstract

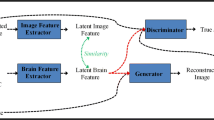

In this work, we propose an approach for processing electroencephalogram (EEG) signals recorded during a visual perception task in order to synthesize the visual stimulus shown during EEG acquisition (images of objects, digits, and characters). We demonstrate that a cross-modality encoder–decoder network performs well for image synthesis on simple images such as digits and characters but fails on complex natural object images. To address this issue, we propose a novel attention- and auxiliary-classifier-based GAN architecture in which the generator is itself a cross-modality encoder–decoder network. It generates images and also produces a class-specific EEG encoding as a latent representation. In addition to the traditional adversarial loss, we use a perceptual loss and attention modules to improve image quality. The performance of the proposed network is measured using two metrics, diversity score and inception score, which quantify the relevance and quality of the reconstructed images, respectively. Experimental results show that our approach outperforms the state of the art on both metrics.
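To make the inception score concrete, the following is a minimal sketch of its standard definition, exp(E_x[KL(p(y|x) || p(y))]), computed from per-image class probabilities. It is an illustration under stated assumptions, not the evaluation code used in this work: the function name `inception_score` is hypothetical, and the probability rows are toy values standing in for the outputs of a pretrained classifier applied to generated images.

```python
import math


def inception_score(probs):
    """Inception score from per-image class probabilities p(y|x).

    probs: list of rows; each row is a probability distribution over classes
           (assumed here to come from a pretrained image classifier).
    Returns exp(mean over images of KL(p(y|x) || p(y))).
    """
    n = len(probs)
    k = len(probs[0])
    # Marginal class distribution p(y): the average of all conditional rows.
    marginal = [sum(row[j] for row in probs) / n for j in range(k)]
    # Accumulate KL(p(y|x) || p(y)) over all images.
    total_kl = 0.0
    for row in probs:
        for p, m in zip(row, marginal):
            if p > 0.0:  # terms with p == 0 contribute nothing to the KL sum
                total_kl += p * math.log(p / m)
    return math.exp(total_kl / n)


# Toy inputs (hypothetical): confident and diverse predictions over 4 classes,
# versus completely uninformative predictions.
confident = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
uniform = [[0.25, 0.25, 0.25, 0.25] for _ in range(4)]

print(inception_score(confident))  # close to the number of classes
print(inception_score(uniform))    # close to 1, the minimum possible score
```

The score is high only when each image is classified confidently and the predicted classes are spread across the label set, which is why it is commonly read as a proxy for image quality.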

Data availability

The dataset used in this work is publicly available (https://github.com/ptirupat/ThoughtViz).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Mishra, R., Sharma, K., Jha, R.R. et al. NeuroGAN: image reconstruction from EEG signals via an attention-based GAN. Neural Comput & Applic 35, 9181–9192 (2023). https://doi.org/10.1007/s00521-022-08178-1

DOI: https://doi.org/10.1007/s00521-022-08178-1