Abstract

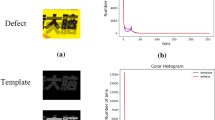

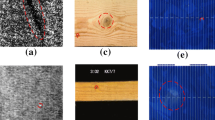

Different categories of surface defects affect cherry quality differently, so detecting these defects simultaneously is essential for grading. The task is difficult because it requires modeling the intrinsic dependencies among categories while accounting for category imbalance. We treat cherry defect recognition as a multi-label classification task and present a novel identification network, the Coupled Graph convolutional Transformer (CoG-Trans). Our proposed categorical representation extraction module models the relevance among categories both implicitly, through the self-attention mechanism, and explicitly, through static co-occurrence patterns. We also design a VI-Fusion module, based on the attention mechanism, to fuse the visible and infrared information sources. In addition, we employ an asymmetric-contrastive loss to correct the category imbalance and learn more discriminative features for each label. Our experiments are conducted on the VI-Cherry dataset, which consists of 9492 paired visible and infrared cherry images manually annotated with six defect categories and one normal category. The proposed method performs strongly compared with previous work, achieving 99.54% mAP on the VI-Cherry dataset.
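
To make the idea of static co-occurrence patterns concrete, the following is a minimal sketch of one common way to derive such statistics from multi-hot annotations: counting how often label j appears in samples that contain label i. The abstract does not state how CoG-Trans builds its graph, so the function name `cooccurrence_matrix`, the toy annotations, and the conditional-probability formulation are all illustrative assumptions rather than the authors' method.

```python
from typing import List


def cooccurrence_matrix(labels: List[List[int]]) -> List[List[float]]:
    """Estimate P(label j present | label i present) from multi-hot rows.

    Hypothetical helper for illustration; not taken from the paper.
    """
    if not labels:
        raise ValueError("labels must contain at least one sample")
    num_classes = len(labels[0])
    counts = [[0] * num_classes for _ in range(num_classes)]

    for row in labels:
        if len(row) != num_classes:
            raise ValueError("every sample needs one entry per class")
        present = [k for k, flag in enumerate(row) if flag]
        # Count every ordered pair of labels that appear together;
        # the diagonal counts[i][i] ends up as the frequency of label i.
        for i in present:
            for j in present:
                counts[i][j] += 1

    probs = []
    for i in range(num_classes):
        n_i = counts[i][i]
        # A label that never occurs gets an all-zero row.
        probs.append(
            [counts[i][j] / n_i if n_i else 0.0 for j in range(num_classes)]
        )
    return probs


# Toy annotations with three made-up labels (assumed data, not VI-Cherry).
toy_labels = [
    [1, 1, 0],
    [1, 0, 0],
    [0, 1, 1],
    [1, 1, 0],
]
matrix = cooccurrence_matrix(toy_labels)
```

The resulting matrix is generally asymmetric, because a rare label can almost always co-occur with a frequent one while the reverse is not true. That asymmetry is also one place where category imbalance shows up in the statistics.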

Data Availability

Data will be made available on reasonable request.

Acknowledgements

This work was supported by the Chinese Academy of Sciences Engineering Laboratory for Intelligent Logistics Equipment System (No. KFJ-PTXM-025).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Lin, M., Li, G., Hao, Y. et al. CoG-Trans: coupled graph convolutional transformer for multi-label classification of cherry defects. Neural Comput & Applic 35, 15365–15379 (2023). https://doi.org/10.1007/s00521-023-08521-0

DOI: https://doi.org/10.1007/s00521-023-08521-0
