Abstract

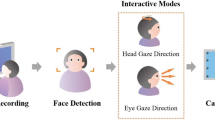

Because it is natural and fast, eye tracking has been widely adopted as an input modality for interaction in head-mounted displays. However, because of involuntary eye jitter and the limitations of eye-tracking devices, eye-based selection often performs worse in accuracy and stability than other input modalities, especially for small targets. To address this issue, we built a likelihood model of the gaze point distribution and combined it with Bayes' rule to infer the intended target probabilistically, as an alternative to the traditional selection criterion based on boundary judgment. Our investigation shows that our model significantly improves selection performance over both the conventional ray-casting selection method and the existing optimal likelihood model, especially for small targets.
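The contrast between boundary judgment and probabilistic inference can be illustrated with a minimal sketch. It is not the likelihood model fitted in this work: it assumes, purely for illustration, an isotropic 2D Gaussian gaze distribution centred on each target with a single hypothetical spread parameter `sigma`, circular targets of equal `radius`, and uniform priors unless others are given. All function names are hypothetical.

```python
import math


def gaussian_likelihood(gaze, center, sigma):
    """Density of a gaze point under an isotropic 2D Gaussian around a target center.

    Assumption: the real gaze distribution need not be isotropic or Gaussian.
    """
    dx = gaze[0] - center[0]
    dy = gaze[1] - center[1]
    norm = 2.0 * math.pi * sigma ** 2
    return math.exp(-(dx * dx + dy * dy) / (2.0 * sigma ** 2)) / norm


def posterior_over_targets(gaze, centers, sigma, priors=None):
    """Bayes' rule: P(target | gaze) is proportional to P(gaze | target) * P(target)."""
    n = len(centers)
    if priors is None:
        priors = [1.0 / n] * n  # assumed uniform prior
    joint = [p * gaussian_likelihood(gaze, c, sigma) for p, c in zip(priors, centers)]
    total = sum(joint)
    if total == 0.0:
        # Gaze is so far from every target that all densities underflow;
        # fall back to the prior.
        return list(priors)
    return [j / total for j in joint]


def bayes_select(gaze, centers, sigma, priors=None):
    """Return the index of the target with the highest posterior probability."""
    post = posterior_over_targets(gaze, centers, sigma, priors)
    return max(range(len(post)), key=post.__getitem__)


def boundary_select(gaze, centers, radius):
    """Boundary judgment: select a target only if the gaze point lies inside it."""
    for i, c in enumerate(centers):
        if math.hypot(gaze[0] - c[0], gaze[1] - c[1]) <= radius:
            return i
    return None


# Three small targets (units are arbitrary, e.g. degrees of visual angle).
centers = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0)]
gaze = (0.4, 0.1)  # jittered gaze sample that lands just outside target 0

print(boundary_select(gaze, centers, radius=0.25))  # None: no target boundary is hit
print(bayes_select(gaze, centers, sigma=0.5))       # 0: most probable intended target
```

In this toy case the gaze sample misses every target boundary, so the boundary criterion selects nothing, while the posterior still identifies the nearest target as the most probable intent. How much this helps in practice depends on how well the assumed likelihood matches the actual gaze point distribution.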

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Funding

The authors have not disclosed any funding.

Ethics declarations

Conflict of interest

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

About this article

Cite this article

Lei, T., Chen, J., Chen, J. et al. Modeling the gaze point distribution to assist eye-based target selection in head-mounted displays. Neural Comput & Applic 35, 25069–25081 (2023). https://doi.org/10.1007/s00521-023-08705-8

DOI: https://doi.org/10.1007/s00521-023-08705-8