Abstract

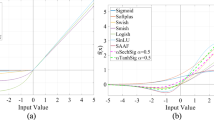

In Convolutional Neural Networks (CNNs), the choice of activation function is critically important. The Rectified Linear Unit (ReLU) is widely used in many CNN models, but recent studies indicate that some non-monotonic activation functions are gradually becoming the new standard for improving CNN performance. Non-monotonic activation functions such as Swish, Mish, Logish and Smish have produced successful results in various deep learning models, yet only a few of them have been widely adopted. Inspired by these functions, this study proposes a new activation function named Gish, defined by \(y=x\cdot \ln(2-e^{-e^{x}})\), whose properties allow it to outperform the other activation functions considered. The factor \(x\) contributes a strong regulation effect on negative outputs. The logarithm reduces the numerical range of the expression \(2-e^{-e^{x}}\). To evaluate Gish, various experiments were conducted on different network models and datasets. In these experiments, Gish achieved a success rate of 98.7% with the EfficientNetB4 model on the MNIST dataset, 86.5% with the EfficientNetB5 model on the CIFAR-10 dataset and 90.8% with the EfficientNetB6 model on the SVHN dataset. These results were higher than those obtained with Swish, Mish, Logish and Smish, confirming the effectiveness of Gish.
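As an illustration of the formula in the abstract, the following is a minimal NumPy sketch of Gish. It is not the authors' implementation. The function name `gish`, the clipping threshold of 50 and the use of `log1p`/`expm1` are assumptions made here for numerical stability; they rely on the identity \(\ln(2-e^{-u}) = \ln\bigl(1+(1-e^{-u})\bigr)\) with \(u=e^{x}\), which leaves the value of the function unchanged.

```python
import numpy as np


def gish(x):
    """Gish activation, y = x * ln(2 - exp(-exp(x))), as stated in the abstract.

    Illustrative sketch only: the clipping and the log1p/expm1 rewrite are
    assumptions added for numerical stability, not part of the paper.
    """
    x = np.asarray(x, dtype=np.float64)
    # u = exp(x). For x > 50, exp(-u) already underflows to 0 in float64,
    # so clipping the exponent avoids overflow warnings without changing y.
    u = np.exp(np.minimum(x, 50.0))
    # ln(2 - exp(-u)) == log1p(1 - exp(-u)) == log1p(-expm1(-u)),
    # which stays accurate when u is tiny (very negative x).
    return x * np.log1p(-np.expm1(-u))


if __name__ == "__main__":
    xs = np.linspace(-5.0, 5.0, 11)
    for xi, yi in zip(xs, gish(xs)):
        print(f"x = {xi:+.1f}  gish(x) = {yi:+.6f}")
```

Two limits follow directly from the formula: as \(x\to-\infty\) the logarithm tends to \(\ln 1 = 0\), so the output approaches zero from below, and as \(x\to+\infty\) it tends to \(\ln 2\), so the function grows approximately like \(x\ln 2\). Between these regimes the output dips below zero for negative inputs, which is the non-monotonic behaviour the abstract refers to.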

Data availability

The datasets were derived from public resources and are made available with the article.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kaytan, M., Aydilek, İ.B. & Yeroğlu, C. Gish: a novel activation function for image classification. Neural Comput & Applic 35, 24259–24281 (2023). https://doi.org/10.1007/s00521-023-09035-5

DOI: https://doi.org/10.1007/s00521-023-09035-5