Abstract

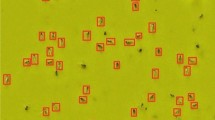

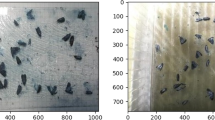

Pest surveillance and control have long been a challenge in precision agriculture. Traditional methods estimate pest density and distribution through manual scouting, which is time-consuming and labor-intensive. To address these challenges, this study proposes a farmland pest-detection system that combines UAVs, yellow sticky traps, and deep learning. The system uses a UAV built on UWB (ultra-wideband) communication and positioning technology to photograph sticky traps arranged across the field. A faster and more accurate Qpest-RCNN model then counts the insects on each sticky trap, and bivariate kernel density estimation turns the per-trap counts into a pest density distribution map. Dynamic monitoring of pest density in the field therefore involves four components: reaching the designated area, flight learning, image acquisition, and visual insect counting with density calculation. Experimental results show that, after flight learning, the UAV needs less time to adjust its flight posture during image acquisition, which produces more concise flight trajectories. Qpest-RCNN improves on Faster R-CNN by introducing several mechanisms tailored to the characteristics of the collected sticky-trap dataset. We trained the model separately on two datasets, collected from sticky traps placed in greenhouses and in open-air experimental fields. The greenhouse dataset is an open-source dataset provided by M. Deserno et al.; on it, the model's mAP, precision, and recall are 0.923, 0.989, and 0.919, respectively. When trained on the dataset collected in the experimental farmland, the model's mAP, precision, and recall are 0.781, 0.851, and 0.789, respectively. We also examined how interference from other species affects visual insect counting, and how much species hypotheses improve the counts in the two environments.
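The precision and recall values above are standard object-detection metrics. As a minimal sketch (not the authors' evaluation code), assuming boxes given as (x1, y1, x2, y2) pixel coordinates and greedy one-to-one matching at an IoU threshold of 0.5, the following shows how predicted boxes on one trap image can be scored against ground-truth annotations, and how a per-trap insect count follows from confidence-filtered detections. The function names `iou`, `precision_recall`, and `count_insects` and both thresholds are illustrative assumptions; mAP additionally averages precision over recall levels (and classes) and is not computed here.

```python
from typing import List, Tuple

# Axis-aligned box as (x1, y1, x2, y2) in pixel coordinates (assumed format).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds: List[Tuple[Box, float]], gts: List[Box],
                     iou_thr: float = 0.5) -> Tuple[float, float]:
    """Greedy one-to-one matching: highest-confidence predictions claim GT boxes first."""
    matched = set()
    tp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)  # each annotated insect can be matched only once
            tp += 1
    fp = len(preds) - tp  # predictions with no matching insect
    fn = len(gts) - tp    # annotated insects the detector missed
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall


def count_insects(preds: List[Tuple[Box, float]], score_thr: float = 0.5) -> int:
    """Per-trap count: number of detections whose confidence passes the threshold."""
    return sum(1 for _box, score in preds if score >= score_thr)


if __name__ == "__main__":
    gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
    preds = [((0, 0, 10, 10), 0.9), ((21, 21, 31, 31), 0.8), ((50, 50, 60, 60), 0.3)]
    print(precision_recall(preds, gts))  # (0.6666666666666666, 1.0)
    print(count_insects(preds))          # 2
```

Sorting by confidence before matching mirrors common detection benchmarks, where a high-scoring duplicate should take the ground-truth box and a lower-scoring duplicate should count as a false positive.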
In addition, the improved model runs about 25% faster at inference than the original Faster R-CNN, as measured in frames per second (FPS). With Qpest-RCNN, the number of insects captured on sticky traps can be counted quickly and accurately, and bivariate kernel density estimation is used to draw a pest density distribution map that visualizes how pests are distributed across the whole farmland. This farmland pest-density detection system can help farmers control pests, reduce crop damage, and increase yield and quality.
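To illustrate how per-trap counts can become a density map, here is a minimal sketch of a count-weighted bivariate Gaussian kernel density estimate. It assumes each trap's (x, y) position (for example in metres) and its insect count c_i are known, and that fixed bandwidths hx and hy are chosen by hand; the kernel, the fixed bandwidths, and the names `weighted_kde_2d` and `density_grid` are assumptions for illustration, and the paper's own bandwidth selection may differ (its reference list includes the diffusion-based estimator of Botev et al.). The surface computed is λ(x, y) = 1/(2π·hx·hy) · Σ_i c_i · exp(−½[((x − x_i)/hx)² + ((y − y_i)/hy)²]), which is the usual normalized KDE multiplied by the total count, so it reads as insects per unit area and integrates to the total number of insects trapped.

```python
import math
from typing import Callable, List, Sequence, Tuple


def weighted_kde_2d(traps: Sequence[Tuple[float, float]], counts: Sequence[float],
                    hx: float, hy: float) -> Callable[[float, float], float]:
    """Return intensity(x, y): pest density (insects per unit area) from trap counts.

    Uses a product Gaussian kernel with fixed bandwidths hx, hy (assumed, hand-picked).
    The count-weighted kernel sum is not divided by the total count, so the surface
    integrates to sum(counts) rather than to 1.
    """
    if hx <= 0 or hy <= 0:
        raise ValueError("bandwidths must be positive")
    if len(traps) != len(counts):
        raise ValueError("one count per trap is required")
    norm = 1.0 / (2.0 * math.pi * hx * hy)

    def intensity(x: float, y: float) -> float:
        total = 0.0
        for (tx, ty), c in zip(traps, counts):
            u = (x - tx) / hx
            v = (y - ty) / hy
            total += c * math.exp(-0.5 * (u * u + v * v))
        return norm * total

    return intensity


def density_grid(intensity: Callable[[float, float], float],
                 x_range: Tuple[float, float], y_range: Tuple[float, float],
                 nx: int, ny: int) -> List[List[float]]:
    """Evaluate the intensity on an ny-by-nx grid (rows follow y, columns follow x)."""
    if nx < 2 or ny < 2:
        raise ValueError("need at least 2 grid points per axis")
    xs = [x_range[0] + i * (x_range[1] - x_range[0]) / (nx - 1) for i in range(nx)]
    ys = [y_range[0] + j * (y_range[1] - y_range[0]) / (ny - 1) for j in range(ny)]
    return [[intensity(x, y) for x in xs] for y in ys]


if __name__ == "__main__":
    # Hypothetical layout: two traps 10 m apart with 30 and 10 insects counted.
    lam = weighted_kde_2d([(0.0, 0.0), (10.0, 0.0)], [30, 10], hx=2.0, hy=2.0)
    grid = density_grid(lam, (0.0, 10.0), (-5.0, 5.0), nx=5, ny=3)
    for row in grid:
        print(" ".join(f"{v:6.3f}" for v in row))
```

The bandwidths control how far each trap's count is spread: small values give isolated peaks at the traps, while large values smooth the map toward a field-wide average.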

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Shin YK, Kim SB, Kim D-S (2020) Attraction characteristics of insect pests and natural enemies according to the vertical position of yellow sticky traps in a strawberry farm with high-raised bed cultivation. J Asia-Pac Entomol 23(4):1062–1066

Pinto-Zevallos DM, Vänninen I (2013) Yellow sticky traps for decision-making in whitefly management: what has been achieved? Crop Prot 47:74–84

Qiao M et al (2008) Density estimation of Bemisia tabaci (Hemiptera: Aleyrodidae) in a greenhouse using sticky traps in conjunction with an image processing system. J Asia-Pac Entomol 11(1):25–29

Hall DG, Sétamou M, Mizell RF III (2010) A comparison of sticky traps for monitoring Asian citrus psyllid (Diaphorina citri Kuwayama). Crop Prot 29(11):1341–1346

Rodríguez LAR, Castañeda-Miranda CL, Lució MM, Solís-Sánchez LO, Castañeda-Miranda R (2021) Quaternion color image processing as an alternative to classical grayscale conversion approaches for pest detection using yellow sticky traps. Math Comput Simul 182:646–660

Sun Y et al (2017) A smart-vision algorithm for counting whiteflies and thrips on sticky traps using two-dimensional Fourier transform spectrum. Biosyst Eng 153:82–88

Lee H, Choi W, Eom S, Park J-J (2022) Rapid estimation of the density of whiteflies (Hemiptera: Aleyrodidae) on sticky traps in paprika greenhouses using the presence–absence model. J Asia-Pac Biodivers 15(2):225–230

Li W et al (2021) Field detection of tiny pests from sticky trap images using deep learning in agricultural greenhouse. Comput Electron Agric 183:106048

Boursianis AD et al (2022) Internet of things (IoT) and agricultural unmanned aerial vehicles (UAVs) in smart farming: a comprehensive review. Internet of Things 18:100187

Srivastava K, Bhutoria AJ, Sharma JK, Sinha A, Pandey PC (2019) UAVs technology for the development of GUI based application for precision agriculture and environmental research. Remote Sens Appl: Soc Environ 16:100258

Anand S, Sharma A (2022) AgroKy: an approach for enhancing security services in precision agriculture. Meas: Sens 24:100449

Coulibaly S, Kamsu-Foguem B, Kamissoko D, Traore D (2022) Deep learning for precision agriculture: a bibliometric analysis. Intell Syst Appl 16:200102

Cui M, Qian J, Cui L (2022) Developing precision agriculture through creating information processing capability in rural China. J Rural Stud 92:237–252

Hanson ED, Cossette MK, Roberts DC (2022) The adoption and usage of precision agriculture technologies in North Dakota. Technol Soc 71:102087

Ampatzidis Y, Partel V, Costa L (2020) Agroview: cloud-based application to process, analyze and visualize UAV-collected data for precision agriculture applications utilizing artificial intelligence. Comput Electron Agric 174:105457

Comba L, Biglia A, Aimonino DR, Gay P (2018) Unsupervised detection of vineyards by 3D point-cloud UAV photogrammetry for precision agriculture. Comput Electron Agric 155:84–95

Elmokadem T (2019) Distributed coverage control of quadrotor multi-UAV systems for precision agriculture. IFAC-PapersOnLine 52(30):251–256

Radoglou-Grammatikis P, Sarigiannidis P, Lagkas T, Moscholios I (2020) A compilation of UAV applications for precision agriculture. Comput Netw 172:107148

Su J, Zhu X, Li S, Chen W-H (2022) AI meets UAVs: a survey on AI empowered UAV perception systems for precision agriculture. Neurocomputing 518:242–270

Singh PK, Sharma A (2022) An intelligent WSN-UAV-based IoT framework for precision agriculture application. Comput Electr Eng 100:107912

Tetila EC et al (2020) Detection and classification of soybean pests using deep learning with UAV images. Comput Electron Agric 179:105836

Nieuwenhuizen AT, Hemming J, Suh HK (2018) Detection and classification of insects on stick-traps in a tomato crop using Faster R-CNN. In: The Netherlands Conference on Computer Vision

Nieuwenhuizen A et al (2019) Raw data from Yellow Sticky Traps with insects for training of deep learning convolutional neural network for object detection

Deserno M, Briassouli A (2021) Faster R-CNN and EfficientNet for accurate insect identification in a relabeled yellow sticky traps dataset. In: 2021 IEEE international workshop on metrology for agriculture and forestry (MetroAgriFor), IEEE, pp 209–214

Mo H, Zhang J, Ma Y (2019) Application and system design of DW1000 in UAV cluster. Electron World 2019(12):135–137

Zheng Y, Xue L, Dong L (2019) Formation control of mobile robots with UWB localization technology. Chin J Intell Sci Technol 1(1):83–87

Liu B, Zhang R, Li Y, Wang Y, Fu P (2019) An UWB-based wireless module set with transparent transmission. J China Acad Electron Inf Technol 14(2):168–176

Shi D, Liu C, She F (2022) Cooperation localization method based on location confidence of multi-UAV in GPS-denied environment. Comput Sci 49(4):302–311

Yu S (2020) Design and realization of flight control for UAV Group’s autonomous formation. Inner Mongolia University, Hohhot

Hu Z, Tong Q, Liu S (2020) Lane departure identification and early warning method for autonomous vehicle. J Chongqing Jiaotong Univ (Nat Sci) 39(10):118–125

Wang L (2017) Research and implementation of car lane departure warning system based on image processing. University of Electronic Science and Technology of China

Li G (2021) Design of UAV navigation system based on image recognition. J Agric Mech Res 43(1):114–118

Ibrahim MH (2021) ODBOT: outlier detection-based oversampling technique for imbalanced datasets learning. Neural Comput Appl 33(22):15781–15806

Ren S, He K, Girshick R, Sun J (2015) Faster R-CNN: towards real-time object detection with region proposal networks. In: Advances in neural information processing systems, vol 28

Girshick R (2015) Fast R-CNN. In: Proceedings of the IEEE international conference on computer vision, pp 1440–1448

Lin T-Y, Dollár P, Girshick R, He K, Hariharan B, Belongie S (2017) Feature pyramid networks for object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2117–2125

Yang C, Huang Z, Wang N (2022) QueryDet: cascaded sparse query for accelerating high-resolution small object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 13668–13677

Lin T-Y, Goyal P, Girshick R, He K, Dollár P (2017) Focal loss for dense object detection. In: Proceedings of the IEEE international conference on computer vision, pp 2980–2988

Bodla N, Singh B, Chellappa R, Davis LS (2017) Soft-NMS: improving object detection with one line of code. In: Proceedings of the IEEE international conference on computer vision, pp 5561–5569

Sønderby SK, Sønderby CK, Maaløe L, Winther O (2015) Recurrent spatial transformer networks. arXiv preprint arXiv:1509.05329

Xu Z (2021) Research on traffic target detection algorithm based on improved faster RCNN. https://doi.org/10.26976/dcnki.Gchau.2021.000610

Zhu X, Cheng D, Zhang Z, Lin S, Dai J (2019) An empirical study of spatial attention mechanisms in deep networks. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 6688–6697

Simonoff JS (2012) Smoothing methods in statistics. Springer, Berlin

Botev ZI, Grotowski JF, Kroese DP (2010) Kernel density estimation via diffusion. Ann Stat 38(5):2916–2957

Cheng C (2022) Real-time mask detection based on SSD-MobileNetV2. In: 2022 IEEE 5th international conference on automation, electronics and electrical engineering (AUTEEE), IEEE, pp 761–767

Redmon J, Farhadi A (2018) YOLOv3: an incremental improvement. arXiv preprint arXiv:1804.02767

Acknowledgements

This work was supported by the National Natural Science Foundation of China (61771034) under a project studying online detection technology for trace dissolved oxygen using a three-electrode balance.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Juan, Y., Ke, Z., Chen, Z. et al. Rapid density estimation of tiny pests from sticky traps using Qpest RCNN in conjunction with UWB-UAV-based IoT framework. Neural Comput & Applic 36, 9779–9803 (2024). https://doi.org/10.1007/s00521-023-09230-4

DOI: https://doi.org/10.1007/s00521-023-09230-4