Abstract

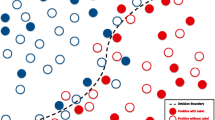

Standard probabilistic latent semantic analysis (pLSA) handles only discrete quantities. pLSA with Gaussian mixtures (GM-pLSA) extends it to continuous feature spaces by using a Gaussian mixture model to describe the feature distribution under each latent aspect. However, like pLSA, GM-pLSA overlooks the intrinsic interdependence between terms, which is an important clue for improving performance. In this paper, we present a graph regularized GM-pLSA (GRGM-pLSA) model, an extension of GM-pLSA that embeds this term correlation information into model learning. Specifically, following the manifold regularization principle, a graph regularizer is introduced to characterize the correlation between terms; it is imposed on the objective function of GM-pLSA, and the model parameters of GRGM-pLSA are derived via the corresponding expectation maximization algorithm. Furthermore, two applications to video content analysis are devised. The first is video categorization, where GRGM-pLSA serves for feature mapping, with two kinds of sub-shot correlations each incorporated separately. The second offers a new perspective on video concept detection by recasting the detection task as a GRGM-pLSA-based visual-to-textual feature conversion problem. Extensive experiments and comparisons with GM-pLSA and several state-of-the-art approaches in both applications demonstrate the effectiveness of GRGM-pLSA.
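The abstract does not give the exact form of the graph regularizer, so the following is only a minimal sketch of the general idea, assuming a Laplacian-style smoothness penalty of the kind used in manifold regularization: correlated terms are encouraged to have similar aspect posteriors. The function names, the `lam` trade-off weight, and the toy numbers are all hypothetical and are not taken from the paper.

```python
def graph_regularizer(posteriors, weights):
    """Smoothness penalty 0.5 * sum_ij w_ij * ||p_i - p_j||^2.

    posteriors[i] is an assumed aspect posterior P(z | term i);
    weights[i][j] is an assumed symmetric correlation between terms i and j.
    """
    n = len(posteriors)
    total = 0.0
    for i in range(n):
        for j in range(n):
            sq_dist = sum((a - b) ** 2
                          for a, b in zip(posteriors[i], posteriors[j]))
            total += weights[i][j] * sq_dist
    return 0.5 * total


def regularized_objective(log_likelihood, posteriors, weights, lam):
    """Hypothetical penalized objective: log-likelihood minus lam * penalty."""
    return log_likelihood - lam * graph_regularizer(posteriors, weights)


# Toy data: three terms, two latent aspects.
P = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9]]

# Graph linking terms 0 and 1, whose posteriors are already similar.
W_similar = [[0, 1, 0], [1, 0, 0], [0, 0, 0]]

# Graph linking terms 0 and 2, whose posteriors disagree.
W_dissimilar = [[0, 0, 1], [0, 0, 0], [1, 0, 0]]

small_penalty = graph_regularizer(P, W_similar)
large_penalty = graph_regularizer(P, W_dissimilar)
```

In this sketch, linking two terms with similar posteriors yields a small penalty, while linking two terms with conflicting posteriors yields a large one, which is the pressure that pushes correlated terms toward consistent aspect assignments during learning.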

Acknowledgments

This work is supported by the National Science Foundation of China (61273274, 4123104), National 973 Key Research Program of China (2011CB302203), Ph.D. Programs Foundation of Ministry of Education of China (20100009110004), National Key Technology R&D Program of China (2012BAH01F03) and Tsinghua-Tencent Joint Lab for IIT.

Additional information

Communicated by B. Huet.

About this article

Cite this article

Zhong, C., Miao, Z. Graph regularized GM-pLSA and its applications to video content analysis. Multimedia Systems 20, 429–445 (2014). https://doi.org/10.1007/s00530-014-0378-9

DOI: https://doi.org/10.1007/s00530-014-0378-9