Abstract

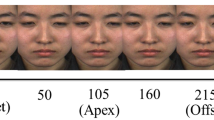

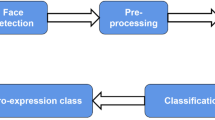

Micro-expressions (MEs) can reflect a person’s authentic internal thoughts and thus have significant research value in many fields. With the continuous development of computer technology, ME analysis methods have gradually shifted from psychology-based to computer vision-based approaches in recent years. ME spotting, a critical branch of ME analysis, has received increasing attention. Several review papers on MEs have been published in recent years, but most of them focus mainly on ME recognition and lack a detailed study of ME spotting. This paper therefore presents a comprehensive review of ME spotting. Specifically, we first summarize the research scope of ME spotting and introduce the current spontaneous ME datasets. Then, we review ME spotting methods from two aspects: apex detection and interval detection. We further classify ME interval detection methods into handcrafted and deep learning-based approaches, and discuss the characteristics and limitations of each technique in detail. After that, we present the evaluation metrics and an experimental comparison of these methods. In addition, we discuss the challenges in ME spotting and outline possible directions for future research. We hope this review will help researchers better understand ME spotting.

Data availability

The datasets used in our paper (CASME, CASME II, SMIC, SAMM-LV, CAS(ME)\(^2\)) are publicly available.

Acknowledgements

This work was supported in part by the National Natural Science Foundation of Changsha (kq2202176), in part by the Key R&D Program of Hunan (2022SK2104), in part by the Leading Plan for Scientific and Technological Innovation of High-Tech Industries of Hunan (2022GK4010), in part by the National Key R&D Program of China (2021YFF0900600), in part by the National Natural Science Foundation of China (61672222), and in part by the Hunan Provincial Innovation Foundation for Postgraduate (CX20220440 and CX20220439).

Author information

Contributions

HZ and LY wrote the main manuscript text. HZ proposed the conception of this work and revised the paper. LY prepared figures 1–7. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

About this article

Cite this article

Zhang, H., Yin, L. & Zhang, H. A review of micro-expression spotting: methods and challenges. Multimedia Systems 29, 1897–1915 (2023). https://doi.org/10.1007/s00530-023-01076-z

DOI: https://doi.org/10.1007/s00530-023-01076-z