Abstract

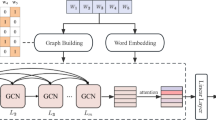

Recently, graph convolutional networks (GCNs) have demonstrated great success in text classification. However, a GCN trained for this task focuses only on the fit between the ground-truth labels and the predicted ones. It ignores the local intra-class diversity and local inter-class similarity that are implicitly encoded by the graph, which are important cues in machine learning. In this paper, we propose a local discriminative graph convolutional network (LDGCN) to boost the performance of text classification. Unlike the text GCN, which minimizes only the cross-entropy loss, the proposed LDGCN is trained by optimizing a new discriminative objective function. As a result, in the LDGCN feature space, texts from the same class are mapped close to each other and texts from different classes are mapped as far apart as possible. This ensures that the features extracted by the GCN are discriminative and that the samples are maximally separable. Experimental results demonstrate its superiority over the baselines.
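The abstract does not state the objective function, so the following is only an illustrative sketch of the general idea: a cross-entropy term combined with a local term that shrinks distances between connected same-class texts and grows distances between connected different-class texts. The function names, the edge list, the squared-distance form and the `weight` parameter are all assumptions made for this example and are not taken from the paper.

```python
import math

def cross_entropy(probs, labels):
    """Mean negative log-likelihood of the true labels."""
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)

def sq_dist(u, v):
    """Squared Euclidean distance between two feature vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def local_discriminative_term(features, labels, edges):
    """Hypothetical local term: summed squared distance over same-class
    edges minus summed squared distance over different-class edges."""
    within = 0.0
    between = 0.0
    for i, j in edges:
        d = sq_dist(features[i], features[j])
        if labels[i] == labels[j]:
            within += d   # same class: smaller is better
        else:
            between += d  # different classes: larger is better
    return within - between

def combined_loss(probs, features, labels, edges, weight=0.1):
    """Cross-entropy plus the weighted local term (weight is an assumed
    trade-off hyperparameter, not a value from the paper)."""
    local = local_discriminative_term(features, labels, edges)
    return cross_entropy(probs, labels) + weight * local

# Toy data: three texts, two classes, a fully connected neighbourhood graph.
features = [[0.0, 0.0], [0.0, 1.0], [3.0, 0.0]]
labels = [0, 0, 1]
edges = [(0, 1), (0, 2), (1, 2)]
probs = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]

local = local_discriminative_term(features, labels, edges)
loss = combined_loss(probs, features, labels, edges, weight=0.1)
```

In this toy case the local term is negative because the different-class pairs are already farther apart than the same-class pair. A difference of sums like this is unbounded below, so a practical objective would normally bound or normalize the between-class part, for example with a margin or a ratio.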

Acknowledgements

This work is supported by the National Key Research and Development Program of China (No. 2022YFC3301801) and the Fundamental Research Funds for the Central Universities (No. DUT22ZD205).

About this article

Cite this article

Wang, B., Sun, Y., Chu, Y. et al. Local discriminative graph convolutional networks for text classification. Multimedia Systems 29, 2363–2373 (2023). https://doi.org/10.1007/s00530-023-01112-y

DOI: https://doi.org/10.1007/s00530-023-01112-y