Abstract

Multi-view learning methods have achieved remarkable results in 3D shape recognition. However, most of them focus on visual feature extraction and feature aggregation, while the viewpoints (spatial positions of the virtual cameras) used to generate the multiple views are often ignored. In this paper, we explore the correlation between viewpoints and shape descriptors in depth and propose a novel viewpoint-guided prototype learning network (VGP-Net). We introduce a prototype representation for each class, consisting of a viewpoint prototype and a feature prototype. The viewpoint prototype is the average weight of each viewpoint, learned from a small support set via a Score Unit and stored in a weight dictionary. Our VGP model adaptively learns view-wise weights by dynamically assembling the viewpoint prototypes in the weight dictionary and applying an element-wise operation in the view pooling layer. Under the guidance of the viewpoint prototypes, important visual features are enhanced, while negligible features are eliminated. These refined features are then fused into a compact shape descriptor. All shape descriptors are clustered in the feature embedding space, and the center of each cluster serves as the feature prototype of the corresponding class. Classification can thus be performed by finding the nearest feature prototype. To boost the learning process, we further present a multi-stream regularization mechanism in both the feature space and the viewpoint space. Extensive experiments demonstrate that VGP-Net is efficient and that the learned deep features are more discriminative. As a result, it achieves better performance than state-of-the-art methods.
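The abstract describes the inference path only in words. As a rough illustration, the pure-Python sketch below shows one possible reading of it: viewpoint prototypes as per-class averages of per-view weights kept in a weight dictionary, weighted view pooling, feature prototypes as class means of shape descriptors, and nearest-prototype classification in the style of prototypical networks. It is a sketch under stated assumptions, not the authors' implementation. The Score Unit, the multi-stream regularization, and all learned components are not modeled (per-view scores are supplied as plain numbers), and the function names, the convex-combination "assembly" step, and the weighted-sum pooling are hypothetical choices.

```python
from collections import defaultdict


def mean_vectors(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def build_weight_dictionary(support_scores):
    """Map each class label to its viewpoint prototype.

    support_scores: {label: [per-view weights of each support shape]}.
    The per-view weights are assumed to come from a Score Unit,
    which is not modeled in this sketch.
    """
    return {label: mean_vectors(rows) for label, rows in support_scores.items()}


def assemble_view_weights(weight_dict, coeffs):
    """Hypothetical assembly step: a convex combination of viewpoint prototypes."""
    total = sum(coeffs.values())
    num_views = len(next(iter(weight_dict.values())))
    weights = [0.0] * num_views
    for label, c in coeffs.items():
        for v, w in enumerate(weight_dict[label]):
            weights[v] += (c / total) * w
    return weights


def view_pool(view_features, view_weights):
    """Scale each view's feature by its weight, then sum over views (assumed fusion)."""
    pooled = [0.0] * len(view_features[0])
    for feature, w in zip(view_features, view_weights):
        for d, x in enumerate(feature):
            pooled[d] += w * x
    return pooled


def feature_prototypes(descriptors, labels):
    """Feature prototype of each class = mean of its shape descriptors."""
    groups = defaultdict(list)
    for desc, label in zip(descriptors, labels):
        groups[label].append(desc)
    return {label: mean_vectors(ds) for label, ds in groups.items()}


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(descriptor, prototypes):
    """Return the label of the nearest feature prototype."""
    return min(prototypes, key=lambda label: squared_distance(descriptor, prototypes[label]))


if __name__ == "__main__":
    # Toy data: 3 viewpoints, 2-D view features, two classes.
    weight_dict = build_weight_dictionary({
        "chair": [[0.75, 0.25, 0.0], [0.25, 0.75, 0.0]],
        "table": [[0.0, 0.25, 0.75], [0.0, 0.75, 0.25]],
    })
    weights = assemble_view_weights(weight_dict, {"chair": 1, "table": 1})
    query = view_pool([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], weights)
    protos = feature_prototypes(
        [[1.0, 0.0], [0.5, 0.0], [0.0, 1.0], [0.0, 0.5]],
        ["chair", "chair", "table", "table"],
    )
    print(weights, query, classify(query, protos))  # [0.25, 0.5, 0.25] [0.5, 0.75] table
```

Squared Euclidean distance is used for the nearest-prototype search because it is the usual choice in prototypical networks; the distance metric actually used by VGP-Net is not stated in this section.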

Data availability

The datasets generated and/or analysed during the current study are available from the corresponding author on request.

Acknowledgements

We would like to thank the anonymous reviewers for their helpful comments, and thank Chuanming Song, Bo Fu, and Yang Liu for valuable discussion. The research presented in this paper is supported by a grant from NSFC (61702246), grants from research projects of Liaoning province (2019lsktyb-084, LJ2020015, 2020JH4/10100045, 2023020196-JH2/1013) and a fund of Dalian Science and Technology (2019J12GX038).

Author information

Contributions

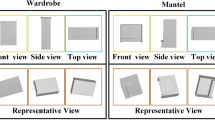

HL, HJ and DF wrote the main manuscript text. MH and XX provided some of the experimental results in Figs. 5, 6 and 7, and YW prepared Figs. 8 and 9. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

Additional information

Communicated by T. Li.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Han, L., He, J., Dou, F. et al. A viewpoint-guided prototype network for 3D shape classification. Multimedia Systems 29, 3531–3547 (2023). https://doi.org/10.1007/s00530-023-01177-9

DOI: https://doi.org/10.1007/s00530-023-01177-9