Abstract

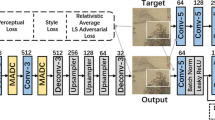

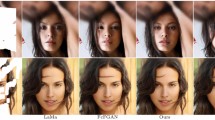

Deep-learning-based image outpainting performs well and has applications in many fields. Previous image outpainting methods mostly take a single image as input. In this paper, we take a left image and a right image as input and fill in the unknown region between them, generating a single complete, semantically coherent image that connects the two. We propose a cascaded refinement residual attention model for this task. The Residual Channel-Spatial Attention (RCSA) module is designed to learn image information from the known regions effectively. The Cascaded Dilated-conv (CDC) module captures deep features and obtains more semantic information through dilated convolutions with different rates. The Refine on Features Aggregation (RFA) module connects the encoder and decoder and refines the output image to make it clearer and smoother. Experimental results show that the proposed model can hallucinate more meaningful structures and more vivid textures, achieving satisfactory results.
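To illustrate why stacking dilated convolutions with different rates can widen the context each output position sees, the following is a minimal one-dimensional sketch in plain Python. It is not the CDC module itself: the rates (1, 2, 4), the kernel size of 3, the sequential stacking, and the function names `dilated_conv1d`, `cascade`, and `receptive_field` are all assumptions made for illustration, since the abstract does not specify them.

```python
def dilated_conv1d(signal, kernel, rate):
    """Same-length 1-D dilated convolution (cross-correlation) with zero padding."""
    k = len(kernel)
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, w in enumerate(kernel):
            # Kernel taps are spaced `rate` samples apart around position i.
            idx = i + (j - k // 2) * rate
            if 0 <= idx < len(signal):
                acc += w * signal[idx]
        out.append(acc)
    return out


def cascade(signal, kernel, rates):
    """Apply dilated convolutions one after another (assumed cascade order)."""
    for rate in rates:
        signal = dilated_conv1d(signal, kernel, rate)
    return signal


def receptive_field(kernel_size, rates):
    """Receptive field of stacked stride-1 dilated convolutions."""
    rf = 1
    for rate in rates:
        rf += (kernel_size - 1) * rate
    return rf


# Hypothetical settings, chosen only for this illustration.
rates = (1, 2, 4)
impulse = [0.0] * 31
impulse[15] = 1.0  # a single "known" sample in the middle

response = cascade(impulse, [1.0, 1.0, 1.0], rates)
touched = sum(1 for v in response if v != 0.0)
print(receptive_field(3, rates), touched)  # prints "15 15"
```

With these assumed settings, three 3-tap layers cover 15 positions, whereas three undilated 3-tap layers would cover only 7. This is the general motivation for using several dilation rates when a model has to relate distant known regions.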

Funding

Funding was provided by the Fundamental Research Funds for the Central Universities [Grant no. JZ2021HGQA0262] and the National Natural Science Foundation of China [Grant nos. 61674049 and U19A2053].

Author information

Contributions

Yizhong Yang supervised the project; Shanshan Yao and Changjiang Liu mainly conducted experiments, and collected and analyzed the data; Zhang Zhang and Guangjun Xie provided guidance in the algorithms and experiments; Yizhong Yang, Shanshan Yao and Changjiang Liu wrote the main manuscript. All authors discussed the results, commented on and revised the manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Communicated by B. Bao.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yang, Y., Yao, S., Liu, C. et al. Cascaded refinement residual attention network for image outpainting. Multimedia Systems 30, 68 (2024). https://doi.org/10.1007/s00530-024-01265-4

DOI: https://doi.org/10.1007/s00530-024-01265-4