Abstract

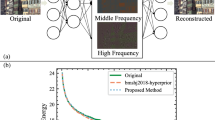

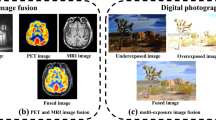

The past decade has seen significant advances in computer vision, leading to increasing consumption of images and videos by both humans and machines. Although machines are often the primary consumers, many applications still require human involvement. In this paper, we propose a novel image coding technique that targets machines while remaining compatible with human viewing. The proposed codec generates two distinct bitstreams: a reconstruction feature bitstream and an enhancement feature bitstream. The former supports both image reconstruction for human viewing and vision tasks for machines, while the latter is optimized for high-quality vision tasks. To this end, we introduce the Mask Multilayer Fusion Encoder (MMFE), which integrates multi-scale visual prior masks into a subset of the encoder's feature channels. Additionally, because features are heavily distorted at low bitrates, we propose a Local Feature Fusion Module (LFFM) that aggregates semantic information from the reconstruction features to obtain enhancement features, thereby improving vision-task performance. Experimental results show that our scalable codec achieves bitrate savings of 26–77% on machine vision tasks compared with state-of-the-art image codecs, while maintaining comparable image reconstruction performance. The proposed codec therefore has the potential to improve both human and machine consumption of visual media.
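The abstract does not state how the 26–77% savings are computed. Bitrate savings between codecs are conventionally reported as the Bjøntegaard delta rate (BD-rate), the average bitrate difference between two rate-quality curves at equal quality. Assuming that convention, with a task score such as detection mAP taking the place of PSNR on the quality axis, savings of 26–77% would correspond to BD-rates of about −26% to −77%. The following is a minimal Python/NumPy sketch of the classic cubic-fit BD-rate; the function name `bd_rate` and its arguments are our own illustration, not the authors' evaluation code.

```python
import numpy as np


def bd_rate(rate_anchor, quality_anchor, rate_test, quality_test):
    """Bjøntegaard delta rate (%) of a test codec relative to an anchor codec.

    Rates are bitrates (e.g. bpp); quality is any metric where higher is better
    (PSNR for humans, or, as assumed here, a task score such as mAP for machines).
    A negative result means the test codec needs less bitrate for equal quality.
    """
    r_a = np.log(np.asarray(rate_anchor, dtype=float))
    r_t = np.log(np.asarray(rate_test, dtype=float))
    q_a = np.asarray(quality_anchor, dtype=float)
    q_t = np.asarray(quality_test, dtype=float)
    if min(len(q_a), len(q_t)) < 4:
        raise ValueError("each curve needs at least 4 rate-quality points")

    # Fit log-rate as a cubic polynomial in quality, one fit per codec.
    p_a = np.polyfit(q_a, r_a, 3)
    p_t = np.polyfit(q_t, r_t, 3)

    # Integrate both fits over the quality range covered by both curves.
    lo = max(q_a.min(), q_t.min())
    hi = min(q_a.max(), q_t.max())
    if hi <= lo:
        raise ValueError("quality ranges of the two curves do not overlap")
    P_a, P_t = np.polyint(p_a), np.polyint(p_t)
    area_a = np.polyval(P_a, hi) - np.polyval(P_a, lo)
    area_t = np.polyval(P_t, hi) - np.polyval(P_t, lo)

    # Mean log-rate gap over the interval, mapped back to a percentage.
    mean_log_diff = (area_t - area_a) / (hi - lo)
    return float((np.exp(mean_log_diff) - 1.0) * 100.0)


if __name__ == "__main__":
    # Made-up illustrative curves (NOT results from this paper): the test codec
    # reaches the same mAP as the anchor at exactly half the bitrate.
    anchor_bpp = [0.1, 0.2, 0.4, 0.8]
    test_bpp = [0.05, 0.1, 0.2, 0.4]
    map_scores = [50.0, 60.0, 66.0, 70.0]
    print(round(bd_rate(anchor_bpp, map_scores, test_bpp, map_scores), 2))
```

Because the made-up test curve uses exactly half the anchor's bitrate at every quality point, the script prints -50.0. Fitting log-rate rather than raw rate is what makes the result a relative (percentage) saving that is comparable across bitrate ranges.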

Data availability

The data underlying this article is available from the corresponding author on reasonable request.

Acknowledgements

This work is supported in part by the National Natural Science Foundation of China [Grants 62071287, 62020106011, 61901252, 62171002] and by the Science and Technology Commission of Shanghai Municipality [Grants 20DZ2290100 and 22ZR1424300].

Author information

Contributions

Ying Wu wrote the main manuscript text. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Communicated by Q. Shen.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wu, Y., An, P., Yang, C. et al. Scalable image coding with enhancement features for human and machine. Multimedia Systems 30, 77 (2024). https://doi.org/10.1007/s00530-024-01279-y

DOI: https://doi.org/10.1007/s00530-024-01279-y