Abstract

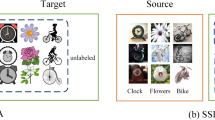

Due to data privacy concerns, a more practical task known as Source-free Unsupervised Domain Adaptation (SFUDA) has recently gained significant attention. SFUDA adapts a pre-trained source model to the target domain without access to the source domain data. Existing SFUDA methods typically rely on per-class cluster structure to refine pseudo-labels. However, these clusters often contain samples with different ground-truth labels, which leads to label noise. To address this issue, we propose a novel Multi-level Consistency Learning (MLCL) method. MLCL focuses on learning discriminative class-wise target feature representations, which yields more accurate cluster structures. Specifically, at the inter-domain level, we construct pseudo-source domain data based on an entropy criterion. We then introduce a prototype contrastive loss that aligns each pseudo-labeled target domain sample with the corresponding pseudo-source domain prototype, so that the model learns discriminative class-wise feature representations effectively. At the intra-domain level, we employ consistency-based self-training to enforce consistency among different views of the same image, which further enhances the feature representation ability of our model. Additionally, we apply information maximization regularization to facilitate target sample clustering and promote prediction diversity. Extensive experiments on four classification benchmark datasets demonstrate the superior performance of the proposed MLCL method. The code is here.
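
The inter-domain step can be illustrated with a minimal pure-Python sketch. It assumes that the "entropy criterion" means keeping, for each pseudo-class, the lowest-entropy fraction of target samples (a hypothetical `ratio`) as pseudo-source data, that each prototype is the normalized mean of the L2-normalized features of those samples, and that the prototype contrastive loss takes an InfoNCE form with an assumed temperature `tau`. All function names are hypothetical, and this is not the authors' released implementation.

```python
import math

def softmax(logits):
    # Numerically stable softmax over a list of logits.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    # Shannon entropy (natural log) of one prediction vector.
    return -sum(p * math.log(p) for p in probs if p > 0)

def argmax(values):
    return max(range(len(values)), key=values.__getitem__)

def l2_normalize(vec):
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]

def split_pseudo_source(probs, ratio=0.5):
    """Return indices of pseudo-source samples: for each pseudo-class,
    the `ratio` fraction of target samples with the lowest entropy.
    `ratio` is a hypothetical hyperparameter, not taken from the paper."""
    by_class = {}
    for i, p in enumerate(probs):
        by_class.setdefault(argmax(p), []).append((entropy(p), i))
    selected = []
    for items in by_class.values():
        items.sort()  # ascending entropy: most confident samples first
        keep = max(1, int(len(items) * ratio))
        selected.extend(i for _, i in items[:keep])
    return sorted(selected)

def class_prototypes(features, probs, source_idx):
    """Per pseudo-class prototype: normalized mean of the normalized
    features of the pseudo-source samples assigned to that class."""
    sums, counts = {}, {}
    for i in source_idx:
        c = argmax(probs[i])
        f = l2_normalize(features[i])
        acc = sums.setdefault(c, [0.0] * len(f))
        sums[c] = [a + b for a, b in zip(acc, f)]
        counts[c] = counts.get(c, 0) + 1
    return {c: l2_normalize([x / counts[c] for x in s]) for c, s in sums.items()}

def prototype_contrastive_loss(feature, label, prototypes, tau=0.1):
    """InfoNCE-style loss: pull a pseudo-labeled target feature toward the
    prototype of its pseudo-label and away from the other prototypes.
    `tau` is an assumed temperature; `label` must be a key of `prototypes`."""
    f = l2_normalize(feature)
    classes = sorted(prototypes)
    logits = [sum(a * b for a, b in zip(f, prototypes[c])) / tau for c in classes]
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[classes.index(label)]
```

In this sketch a target sample's pseudo-label is simply the `argmax` of its prediction, and the loss would be averaged over a batch; how often the prototypes are refreshed during adaptation is left open.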

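The intra-domain consistency term and the information maximization regularizer can be sketched in the same way. The consistency loss below is a FixMatch-style stand-in (pseudo-label from a weakly augmented view, cross-entropy on a strongly augmented view, masked by an assumed confidence `threshold`), and the IM loss follows the SHOT-style formulation (mean per-sample entropy minus the entropy of the batch-mean prediction). Both are assumptions about how MLCL instantiates these terms, and the function names are hypothetical.

```python
import math

def log_softmax(logits):
    # Numerically stable log-softmax over a list of logits.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum_exp for z in logits]

def consistency_loss(weak_logits, strong_logits, threshold=0.95):
    """FixMatch-style self-training: take the pseudo-label from the weak view,
    apply cross-entropy on the strong view, and ignore samples whose
    weak-view confidence is below `threshold` (an assumed value).
    Averaged over all samples in the batch, masked ones included."""
    total = 0.0
    for weak, strong in zip(weak_logits, strong_logits):
        weak_probs = [math.exp(v) for v in log_softmax(weak)]
        confidence = max(weak_probs)
        if confidence < threshold:
            continue
        pseudo_label = weak_probs.index(confidence)
        total -= log_softmax(strong)[pseudo_label]
    return total / len(weak_logits)

def information_maximization_loss(batch_probs, eps=1e-8):
    """SHOT-style IM regularizer: mean per-sample entropy (rewards confident
    predictions) minus the entropy of the batch-mean prediction (rewards
    diverse predictions across classes). Lower is better."""
    n, k = len(batch_probs), len(batch_probs[0])
    cond_entropy = -sum(p * math.log(p + eps) for probs in batch_probs for p in probs) / n
    mean_probs = [sum(probs[j] for probs in batch_probs) / n for j in range(k)]
    marginal_entropy = -sum(p * math.log(p + eps) for p in mean_probs)
    return cond_entropy - marginal_entropy
```

A batch of confident predictions spread evenly over the classes drives the IM loss toward its minimum of minus the log of the number of classes, whereas a batch collapsed onto one class scores about zero. In a full objective these terms would be combined with the prototype contrastive loss through trade-off weights, which this sketch does not attempt to guess.
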

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 62006094). Fund recipient: Dr. Jinjin Chi.

Author information

Contributions

Jihong Ouyang: Supervision, Writing-review-editing. Zhengjie Zhang: Conceptualization, Methodology, Code, Writing-original-draft. Qingyi Meng: Prepared figures, Writing-review-editing. Ximing Li: Writing-review-editing. Jinjin Chi: Writing-review-editing. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Communicated by Junyu Gao.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ouyang, J., Zhang, Z., Meng, Q. et al. Exploiting multi-level consistency learning for source-free domain adaptation. Multimedia Systems 30, 248 (2024). https://doi.org/10.1007/s00530-024-01444-3

DOI: https://doi.org/10.1007/s00530-024-01444-3