Abstract

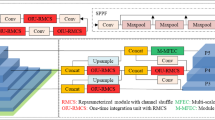

Compared with general object detection, research on small object detection has progressed slowly, mainly because appropriate features must be learned from the limited information that small objects provide. This is compounded by difficulties such as information loss during forward propagation through the neural network. To address these problems, this paper proposes an object detector named PS-YOLO, which: (1) reconstructs the C2f module to reduce the weakening or loss of small-object features as layers are stacked deep in the backbone network; (2) optimizes neck feature fusion using the PD module, which fuses features at different levels and sizes to improve the model’s multi-scale feature fusion capability; and (3) uses a newly designed multi-channel aggregate receptive field (MCARF) module for downsampling to extend the receptive field and capture more local information. Experimental results on three public datasets show that the method achieves satisfactory accuracy, precision, and recall.
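Recall, one of the metrics reported above, and its companion metric precision are conventionally computed from counts of true positives, false positives, and false negatives. The following is a minimal sketch of those standard definitions; the function name and the example counts are hypothetical and are not taken from the paper's experiments.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (precision, recall) from detection counts.

    tp: detections matched to a ground-truth object
    fp: detections with no matching ground-truth object
    fn: ground-truth objects that were not detected
    """
    # Guard against division by zero when there are no detections
    # (tp + fp == 0) or no ground-truth objects (tp + fn == 0).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical counts, for illustration only.
p, r = precision_recall(tp=80, fp=20, fn=40)
print(f"precision={p:.3f}, recall={r:.3f}")
```

For small objects, missed detections (false negatives) tend to dominate, so recall is often the metric that benefits most from better feature preservation.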

Data availability

This study uses three publicly available datasets: (1) VisDrone, available at https://github.com/VisDrone/VisDrone-Dataset; (2) TinyPerson, available at https://opendatalab.com/OpenDataLab/TinyPerson; and (3) PASCAL VOC, available at http://host.robots.ox.ac.uk/pascal/VOC/.

Acknowledgements

This work is supported by the Natural Science Foundation of Tianshan Talent Training Program (No. 2023TSYCLJ0023), the Major Science and Technology Programs in the Autonomous Region (No. 2023A03001), Xinjiang Uygur Autonomous Region (No. 2023D01C176), and the Xinjiang Uygur Autonomous Region Universities Fundamental Research Funds Scientific Research Project (No. XJEDU2022P018).

Author information

Contributions

Shifeng Peng: Conceptualization, methodology, data organization, writing—original manuscript preparation, visualization. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Communicated by Junyu Gao.

About this article

Cite this article

Peng, S., Fan, X., Tian, S. et al. PS-YOLO: a small object detector based on efficient convolution and multi-scale feature fusion. Multimedia Systems 30, 241 (2024). https://doi.org/10.1007/s00530-024-01447-0

DOI: https://doi.org/10.1007/s00530-024-01447-0