Abstract

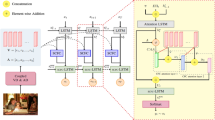

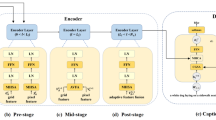

Text-based image captioning is an important task that aims to generate descriptions by reading and reasoning about the scene text in images. Text-based images contain both textual and visual information, which makes them difficult to describe comprehensively. Recent works fail to adequately model the relationships between features of different modalities and their fine-grained alignment. Because scene text is inherently multimodal, its representations usually come from multiple visual and textual encoders, which leads to heterogeneous features. Although many works have focused on fusing features from different sources, they ignore the direct correlation between heterogeneous features, and the coherence within scene text has not been fully exploited. In this paper, we propose a Heterogeneous Attention Module (HAM) to enhance the cross-modal representations of OCR tokens and apply it to text-based image captioning. The HAM is designed to capture the coherence between different modalities of OCR tokens and to provide context-aware scene text representations for generating accurate image captions. To the best of our knowledge, we are the first to apply a heterogeneous attention mechanism to explore the coherence in OCR tokens for text-based image captioning. By calculating the heterogeneous similarity, we interactively enhance the alignment between the visual and textual information of OCR tokens. We conduct experiments on the TextCaps dataset. Under the same setting, the results show that our model achieves competitive performance compared with advanced methods, and the ablation study demonstrates that our framework improves the original model on all metrics.

Data availability

No datasets were generated or analysed during the current study.

Acknowledgements

This work was supported by the NSFC under Grant Nos. 62172138 and 61932009.

Author information

Contributions

Yao Zhang conducted the experiments and wrote the manuscript. Zijie Song proposed the research topic, designed the algorithm, and supervised the work. Zhenzhen Hu reviewed the manuscript and provided guidance on the topic.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

About this article

Cite this article

Zhang, Y., Song, Z. & Hu, Z. Exploring coherence from heterogeneous representations for OCR image captioning. Multimedia Systems 30, 262 (2024). https://doi.org/10.1007/s00530-024-01470-1

DOI: https://doi.org/10.1007/s00530-024-01470-1