Abstract

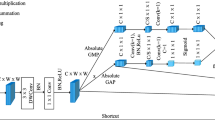

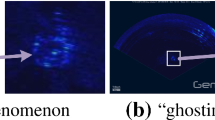

Sonar image classification is crucial in salvage operations and submarine pipeline detection. However, multipath interference and the difficulty of data collection leave sonar datasets with low resolution, few samples per class, and long-tailed class distributions. Current methods address these challenges with transfer learning, resampling, and adversarial attacks. Nonetheless, knowledge transfer from optical to sonar images is often inefficient because the two domains differ significantly. Furthermore, the large receptive fields of existing models make it difficult to extract local details from low-resolution sonar images. Additionally, cross-entropy loss excessively suppresses the gradients of tail classes, biasing the model towards head classes. To address these problems, this paper proposes Hierarchical Transfer Progressive Learning based on the Jigsaw puzzle and Block Convolution (HTPL-JB). First, we introduce a hierarchical pre-training strategy that incorporates a source pre-training phase into the transfer learning phase, making knowledge transfer from optical to sonar images more efficient. Second, in the fine-tuning phase, we employ a progressive training strategy that extracts information at successively different levels of granularity, enhancing the model’s ability to capture fine details from sonar images. Finally, we introduce a key point sensitive loss (KPSLoss), which uses a larger margin distance and a smaller slope factor for the tail classes to improve accuracy and the separability of key points. Extensive experiments on the NKSID datasets demonstrate that HTPL-JB significantly outperforms existing methods. Our code will be available at https://github.com/leeAndJim/JBHTPL.
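The jigsaw-puzzle step behind the progressive training strategy can be illustrated with a short sketch. It is not taken from the paper's code: the function name `jigsaw_shuffle`, the grid size `n`, and the use of NumPy are assumptions made for illustration. The sketch only shows the general idea of cutting an image into an n x n grid and shuffling the patches, so that a model trained on the result has to rely on features no larger than a single patch.

```python
import numpy as np


def jigsaw_shuffle(image, n, rng=None):
    """Split an H x W (x C) image into an n x n grid and shuffle the patches.

    Hypothetical helper for illustration only; not the authors' implementation.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]
    if h % n or w % n:
        raise ValueError("image height and width must be divisible by n")
    ph, pw = h // n, w // n

    # Cut the image into n*n patches in row-major order.
    patches = [
        image[r * ph:(r + 1) * ph, c * pw:(c + 1) * pw]
        for r in range(n)
        for c in range(n)
    ]

    # Draw a random permutation of patch positions.
    order = rng.permutation(n * n)

    # Reassemble the shuffled patches row by row.
    rows = [
        np.concatenate([patches[order[r * n + c]] for c in range(n)], axis=1)
        for r in range(n)
    ]
    return np.concatenate(rows, axis=0)


if __name__ == "__main__":
    img = np.arange(64, dtype=np.float32).reshape(8, 8)
    # Assumed schedule: finer grids first, the intact image (n = 1) last.
    for n in (4, 2, 1):
        shuffled = jigsaw_shuffle(img, n, np.random.default_rng(0))
        print(n, shuffled.shape)
```

A progressive schedule would feed the finest grid to the earliest training step and the unshuffled image (`n = 1`) to the last one. The specific grid sizes used here are placeholders, not values reported in the abstract.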

Data availability

No datasets were generated or analysed during the current study.

Acknowledgements

This work was partly supported by the National Natural Science Foundation of China (No. 62102320), and the Key Research and Development Program of Xianyang City (No. L2024-ZDYF-ZDYF-SF-0037). (Corresponding author: Huanjie Tao).

Author information

Contributions

X.C. and H.T. conceptualized the study. X.C. and H.T. developed the methodology. X.C., H.Z., and H.T. performed formal analysis and investigation. X.C. prepared the original draft. X.C., H.Z., P.Z., Y.D., and H.T. reviewed and edited the manuscript. H.T. acquired funding, provided resources, and supervised the project.

Ethics declarations

Competing interests

The authors declare no competing interests.

About this article

Cite this article

Chen, X., Tao, H., Zhou, H. et al. Hierarchical and progressive learning with key point sensitive loss for sonar image classification. Multimedia Systems 30, 380 (2024). https://doi.org/10.1007/s00530-024-01590-8

DOI: https://doi.org/10.1007/s00530-024-01590-8