Abstract

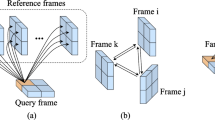

Unlike traditional video-based HOI detection, which is limited to segment labeling, the task of joint segmentation and labeling for video HOI requires predicting human sub-activity and object affordance labels while also delineating their segment boundaries. Previous methods mainly rely on frame-level and segment-level features to predict segment boundaries and labels. However, inter-frame and long-term temporal information is also essential for this task. Therefore, to address this task and explore the temporal dynamics of human–object interactions in more depth, we propose a novel Hierarchical spatial-temporal network with Graph And Transformer (HierGAT). This framework integrates two branches, a temporal-enhanced recurrent graph network (TRGN) and parallel transformer encoders (PTE), to extract hierarchical temporal features from video data. We first strengthen the temporal modeling of the recurrent graph network by incorporating inter-frame interactions, capturing spatial-temporal information both within and across frames. Since adjacent frames play an auxiliary role, we also propose a grouped fusion mechanism to fuse the resulting interaction information. The PTE branch consists of two transformer encoders running in parallel to extract spatial and long-term temporal information from the video. By combining the outputs of these branches, our model fully exploits spatial-temporal information to predict segment boundaries and labels. Experimental results on three datasets demonstrate the effectiveness of our approach. All code and data are available at https://github.com/wjx1198/HierGAT.
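The abstract describes the parallel transformer encoders branch only at a high level. To illustrate what attending over the "spatial" axis versus the "temporal" axis means for per-frame entity features, here is a minimal Python sketch; it is not the HierGAT implementation. It assumes the input is a nested list of shape [T][N][D] (T frames, N entities such as the human and the objects, D feature dimensions), uses parameter-free scaled dot-product self-attention (no learned projections, heads, feed-forward layers or normalization), and fuses the two branches by element-wise sum. The function names and the sum fusion are hypothetical choices made for illustration.

```python
import math
from typing import List

# Hypothetical toy types: a video is [T frames][N entities][D features].
Vector = List[float]
Frame = List[Vector]
Video = List[Frame]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Parameter-free scaled dot-product self-attention with Q = K = V = tokens.

    A real transformer encoder would add learned Q/K/V projections, multiple
    heads, a feed-forward layer, residual connections and layer normalization.
    """
    d = len(tokens[0])
    scale = 1.0 / math.sqrt(d)
    out: List[Vector] = []
    for q in tokens:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in tokens]
        weights = softmax(scores)
        # Each output token is a convex combination of all input tokens.
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return out


def spatial_branch(video: Video) -> Video:
    """Attend across entities (e.g. human and objects) within each frame."""
    return [self_attention(frame) for frame in video]


def temporal_branch(video: Video) -> Video:
    """Attend across all frames, separately for each entity."""
    num_frames, num_entities = len(video), len(video[0])
    per_entity = [
        self_attention([video[t][n] for t in range(num_frames)])
        for n in range(num_entities)
    ]
    # Transpose back from [N][T][D] to [T][N][D].
    return [[per_entity[n][t] for n in range(num_entities)] for t in range(num_frames)]


def parallel_encoders(video: Video) -> Video:
    """Run both branches on the same input; fuse by element-wise sum (an assumption)."""
    spatial, temporal = spatial_branch(video), temporal_branch(video)
    return [
        [[a + b for a, b in zip(sv, tv)] for sv, tv in zip(s_frame, t_frame)]
        for s_frame, t_frame in zip(spatial, temporal)
    ]


if __name__ == "__main__":
    # Toy input: 3 frames, 2 entities (human, object), 2-dim features.
    video = [
        [[1.0, 0.0], [0.0, 1.0]],
        [[0.9, 0.1], [0.1, 0.9]],
        [[0.0, 1.0], [1.0, 0.0]],
    ]
    fused = parallel_encoders(video)
    print(len(fused), len(fused[0]), len(fused[0][0]))  # prints: 3 2 2
```

Both branches read the same input and preserve its [T][N][D] shape, which is what allows them to run in parallel and be fused afterwards; the spatial branch only mixes features within a frame, while the temporal branch only mixes each entity's features across the whole clip.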

Availability of data and materials

The data supporting the results of this article can be found at https://github.com/wjx1198/HierGAT.

Code Availability

All code is available at https://github.com/wjx1198/HierGAT.

Funding

This work was supported by the International Partnership Program of the Chinese Academy of Sciences (Grant no. 104GJHZ2023053FN) and the National Natural Science Foundation of China (Grant nos. 62103410 and 62203438).

Author information

Contributions

Junxian Wu: conceived and designed the study, developed the methodology, performed the experiments, analyzed the data, and wrote the manuscript. Yujia Zhang and Michael Kampffmeyer: assisted in developing the methodology, provided critical feedback, and helped revise the manuscript. Yi Pan and Chenyu Zhang: assisted in developing the methodology and conducted preliminary analysis. Shiying Sun, Hui Chang, and Xiaoguang Zhao: supervised the project, provided funding and resources, and critically reviewed the manuscript.

Ethics declarations

Conflict of interest

This work was also supported by the Excellent Youth Program of the State Key Laboratory of Multimodal Artificial Intelligence Systems. The authors have no conflicts of interest to declare that are relevant to the content of this article.

Ethics approval

Not applicable.

Additional information

Communicated by Teng Li.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wu, J., Zhang, Y., Kampffmeyer, M. et al. HierGAT: hierarchical spatial-temporal network with graph and transformer for video HOI detection. Multimedia Systems 31, 13 (2025). https://doi.org/10.1007/s00530-024-01604-5

DOI: https://doi.org/10.1007/s00530-024-01604-5