Abstract

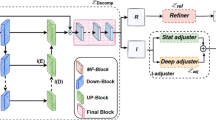

Most existing low-light image enhancement methods suffer from excessive noise: both the noise that is inevitably present in images captured under low-light conditions and the noise amplified during the enhancement process. This noise degrades the enhancement result and subsequent downstream visual tasks. To balance enhancement and noise suppression, we propose a zero-reference Low-light Image Enhancement and Denoising network that uses implicit and explicit priors, named Zero-LIED. Specifically, the Image Decomposition and Denoising Network (IDDN) decomposes the input image into illumination and reflectance components based on Retinex theory, and applies a deep image prior to achieve implicit denoising during training. The Illumination Adjustment Network (IAN) achieves pixel-wise exposure adjustment of the illumination component by estimating gamma correction parameter maps, rather than using a single parameter for the entire image. The training of IDDN and IAN is guided by five loss functions that serve as explicit priors. The final enhanced image is obtained by fusing the reflectance component decomposed by IDDN with the illumination component enhanced by IAN. Extensive quantitative and qualitative experiments show that the proposed Zero-LIED achieves enhancement comparable to that of state-of-the-art methods with less data and a simpler network structure.
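To make the pixel-wise adjustment and the final fusion step concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes the usual Retinex image model, in which an image is the element-wise product of reflectance and illumination, a single-channel illumination map with values in [0, 1], and a gamma map of the same spatial size. The function name `enhance`, the `eps` floor, and the toy values are hypothetical; in Zero-LIED the reflectance, illumination, and gamma maps would come from IDDN and IAN rather than being written by hand.

```python
import numpy as np


def enhance(reflectance, illumination, gamma_map, eps=1e-6):
    """Fuse reflectance with a pixel-wise gamma-corrected illumination map.

    reflectance:  (H, W, 3) array in [0, 1]
    illumination: (H, W) array in [0, 1]
    gamma_map:    (H, W) array of per-pixel exponents (assumed > 0)
    """
    # Keep illumination strictly positive so the power is well behaved.
    illum = np.clip(illumination, eps, 1.0)
    # Each pixel gets its own exponent instead of one global gamma.
    adjusted = illum ** gamma_map
    # Retinex-style fusion: broadcast illumination over the colour channels.
    return np.clip(reflectance * adjusted[..., None], 0.0, 1.0)


# Toy 2x2 example with hand-picked values (illustrative only).
reflectance = np.full((2, 2, 3), 0.8)
illumination = np.array([[0.04, 0.25],
                         [0.25, 0.81]])
gamma_map = np.array([[0.5, 0.5],
                      [1.0, 0.5]])

enhanced = enhance(reflectance, illumination, gamma_map)
```

With an exponent below 1, dark pixels are brightened more strongly than bright ones, while an exponent of 1 leaves a pixel's illumination unchanged; this is what a per-pixel map allows and a single global gamma does not.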

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Nos. 62472145 and 62273292), the Natural Science Foundation of Henan Province (No. 242300420284), and the Fundamental Research Funds for the Universities of Henan Province (No. NSFRF240820).

Author information

Contributions

Author contribution. J.Y.: Conceptualization, Methodology, Software, Investigation, Writing; F.X.: Data Curation, Software, Writing; Z.H.: Visualization, Investigation, Writing; Y.Q.: Investigation, Formal Analysis.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Communicated by Fei Wu.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yu, J., Xue, F., Huo, Z. et al. Combining implicit and explicit priors for zero-reference low-light image enhancement and denoising. Multimedia Systems 31, 112 (2025). https://doi.org/10.1007/s00530-025-01708-6

DOI: https://doi.org/10.1007/s00530-025-01708-6