Abstract

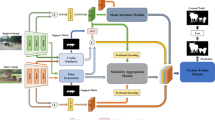

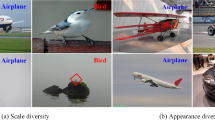

Existing Few-Shot Semantic Segmentation (FSS) methods mostly focus on extracting semantic information from support images to guide the segmentation of query images and pay less attention to the query branch. However, because only a few support images are available, there is significant intra-class variance between support and query images. In addition, relying on a single support prototype to guide query segmentation often yields inaccurate segmentation boundaries, which can degrade the model’s performance. In this study, we extract information from both the support and query branches and propose a Dual-branch Aggregation and Edge Refinement (DAER) network for accurate query image segmentation. Specifically, to better exploit the query branch, we introduce an Initial Mask Generation Module (IMGM) that generates an initial mask for the query image. We further propose a Dual-Branch Aggregation Module (DBAM) that captures information from the support and query branches simultaneously. Finally, an Edge Refinement Module (ERM) integrates more query-specific positional information into the network. Extensive experiments on standard few-shot semantic segmentation benchmarks, including PASCAL-\(5^i\) and COCO-\(20^i\), demonstrate the effectiveness of the proposed method.
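For readers unfamiliar with the single-prototype guidance mentioned above, the following is a minimal sketch of that generic baseline, not of the DAER modules themselves. It assumes masked average pooling for the support prototype and thresholded cosine similarity for a coarse query mask. The function names, the toy 2x2 feature maps, and the 0.5 threshold are all hypothetical choices made for illustration.

```python
import math


def masked_average_pooling(features, mask):
    """Average the feature vectors at positions where mask == 1.

    features: H x W grid of C-dimensional vectors (nested lists).
    mask:     H x W grid of 0/1 labels for the support image.
    """
    channels = len(features[0][0])
    total = [0.0] * channels
    count = 0
    for feat_row, mask_row in zip(features, mask):
        for vec, m in zip(feat_row, mask_row):
            if m:
                count += 1
                for c in range(channels):
                    total[c] += vec[c]
    if count == 0:
        raise ValueError("support mask contains no foreground pixels")
    return [t / count for t in total]


def cosine_similarity(a, b, eps=1e-8):
    """Cosine similarity between two vectors; eps avoids division by zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + eps)


def coarse_query_mask(query_features, prototype, threshold=0.5):
    """Label a query position as foreground if it is similar to the prototype.

    The threshold is an illustrative assumption, not a value from the paper.
    """
    return [
        [1 if cosine_similarity(vec, prototype) > threshold else 0 for vec in row]
        for row in query_features
    ]


# Toy data (hypothetical): 2 x 2 feature maps with 2 channels each.
support_features = [[[1.0, 0.0], [0.9, 0.1]],
                    [[0.0, 1.0], [0.1, 0.9]]]
support_mask = [[1, 1],
                [0, 0]]
query_features = [[[0.8, 0.2], [0.0, 1.0]],
                  [[1.0, 0.1], [0.2, 0.9]]]

prototype = masked_average_pooling(support_features, support_mask)
predicted = coarse_query_mask(query_features, prototype)
print(predicted)  # [[1, 0], [1, 0]]
```

Because every query position is compared against one averaged vector, positions near object boundaries receive no location-specific treatment, which is one way to see why a single prototype can produce imprecise edges.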

Data Availability

Data will be made available on request.

Funding

No funding was obtained for this study.

Author information

Contributions

Qingsong Tang: Methodology, Investigation, Writing-original draft and review. Yalei Ren: Investigation, Code, Writing-review and editing. Zhanghui Han: Investigation, Data curation, Visualization. Chenyan Bao: Data curation, Code. Yang Liu: Investigation, Writing-review and editing.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this study.

Additional information

Communicated by Junyu Gao.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Tang, Q., Ren, Y., Shan, Z. et al. Dual-branch aggregation and edge refinement network for few shot semantic segmentation. Multimedia Systems 31, 142 (2025). https://doi.org/10.1007/s00530-025-01718-4

DOI: https://doi.org/10.1007/s00530-025-01718-4