Abstract

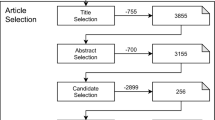

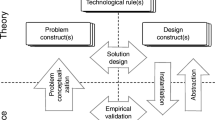

This paper was triggered by concerns about the methodological soundness of many requirements engineering (RE) papers. We present a conceptual framework that distinguishes design papers from research papers, and show that in this framework, what is called a research paper in RE is often a design paper. We then present and motivate two lists of evaluation criteria, one for research papers and one for design papers. We apply both lists to two samples drawn from the set of all submissions to the RE’03 conference. Analysis of these two samples shows that most submissions to the RE’03 conference are design papers, not research papers, and that most design papers present a solution to a problem but neither validate this solution nor investigate the problems that can be solved by this solution. We conclude with a discussion of the soundness of our results and of their possible impact on RE research and practice.

Acknowledgements

This paper benefited from discussions with Klaas van den Berg and the participants of the CERE04 workshop and from the comments by anonymous reviewers.

Appendices

Appendix 1. Checklist for papers about solutions to world problems

An engineering paper does not need to satisfy all the criteria listed below. Which criteria are relevant depends on the task in the engineering cycle that the paper reports on.

1. Which world problem is solved?
    - 1.1 Phenomena
    - 1.2 Norms
    - 1.3 Relation between norms and phenomena
    - 1.4 Stakeholders
    - 1.5 Problem-solver’s priorities
2. How was the problem solved?
    - 2.1 Problem investigation
        - 2.1.1 Causal relationships between phenomena
    - 2.2 Solution design
        - 2.2.1 Source of the solution. Is it borrowed or adapted from some published source, or is it totally new?
        - 2.2.2 Solution specification
    - 2.3 Design validation
        - 2.3.1 Solution properties
        - 2.3.2 Solution evaluation against the criteria identified in problem investigation
    - 2.4 Choice of a solution
        - 2.4.1 Alternatives considered
    - 2.5 Implementation description
    - 2.6 Implementation evaluation
        - 2.6.1 Observations
        - 2.6.2 Relating observations to the criteria relevant for this implementation
3. Is the solution relevant?
    - 3.1 Novelty of the solution
    - 3.2 Relevance for classes of world problems
    - 3.3 Relevance for theory
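
The checklist for engineering papers can be read as a tree whose leaves are the individual criteria, from which only the parts relevant to the reported engineering task are selected. The Python sketch below illustrates this reading under stated assumptions: the `Criterion` class, the `relevant_criteria` function, and especially the selection rule (sections 1 and 3 always apply, and of section 2 only the subsection for the reported task) are hypothetical and are not given in the paper, which leaves the choice of relevant criteria to the reviewer.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Criterion:
    """One numbered checklist item; an item without children is a leaf."""
    number: str
    text: str
    children: list[Criterion] = field(default_factory=list)

    def leaves(self):
        """Yield the leaf criteria at or below this item, in checklist order."""
        if not self.children:
            yield self
        for child in self.children:
            yield from child.leaves()


# Appendix 1 transcribed as a tree (item texts abbreviated).
WORLD_PROBLEM_CHECKLIST = [
    Criterion("1", "Which world problem is solved?", [
        Criterion("1.1", "Phenomena"),
        Criterion("1.2", "Norms"),
        Criterion("1.3", "Relation between norms and phenomena"),
        Criterion("1.4", "Stakeholders"),
        Criterion("1.5", "Problem-solver's priorities"),
    ]),
    Criterion("2", "How was the problem solved?", [
        Criterion("2.1", "Problem investigation", [
            Criterion("2.1.1", "Causal relationships between phenomena"),
        ]),
        Criterion("2.2", "Solution design", [
            Criterion("2.2.1", "Source of the solution"),
            Criterion("2.2.2", "Solution specification"),
        ]),
        Criterion("2.3", "Design validation", [
            Criterion("2.3.1", "Solution properties"),
            Criterion("2.3.2", "Solution evaluation against the criteria"),
        ]),
        Criterion("2.4", "Choice of a solution", [
            Criterion("2.4.1", "Alternatives considered"),
        ]),
        Criterion("2.5", "Implementation description"),
        Criterion("2.6", "Implementation evaluation", [
            Criterion("2.6.1", "Observations"),
            Criterion("2.6.2", "Relating observations to the criteria"),
        ]),
    ]),
    Criterion("3", "Is the solution relevant?", [
        Criterion("3.1", "Novelty of the solution"),
        Criterion("3.2", "Relevance for classes of world problems"),
        Criterion("3.3", "Relevance for theory"),
    ]),
]


def relevant_criteria(checklist, task_number):
    """Hypothetical selection rule (an assumption, not from the paper):
    keep all leaves of sections 1 and 3, and of section 2 keep only the
    leaves of the subsection for the reported task, e.g. "2.3"."""
    selected = []
    for top in checklist:
        if top.number == "2":
            for sub in top.children:
                if sub.number == task_number:
                    selected.extend(sub.leaves())
        else:
            selected.extend(top.leaves())
    return [c.number for c in selected]
```

For a paper that reports only on design validation, `relevant_criteria(WORLD_PROBLEM_CHECKLIST, "2.3")` selects items 1.1 to 1.5, 2.3.1, 2.3.2, and 3.1 to 3.3; the remaining items of section 2 are not held against it.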

Appendix 2. Checklist for papers about solutions to knowledge problems

A research paper must satisfy all criteria.

1. Which knowledge problem is solved?
    - 1.1 Phenomena
    - 1.2 Variables
    - 1.3 Relationships among the variables
    - 1.4 Research questions
    - 1.5 Priorities
2. How was the problem solved?
    - 2.1 Research design
        - 2.1.1 Population
        - 2.1.2 Measurement procedure
        - 2.1.3 Analysis method
    - 2.2 Validity
        - 2.2.1 Construct validity
        - 2.2.2 Internal validity
        - 2.2.3 External validity
    - 2.3 Measurements
    - 2.4 Analysis
        - 2.4.1 Answers to research questions
        - 2.4.2 Theoretical explanations
        - 2.4.3 Possible fallacies
3. What is the relevance of this solution?
    - 3.1 Relevance for theory
    - 3.2 Relevance for engineering practice
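
Because a research paper must satisfy all criteria, checking it against this checklist reduces to finding the leaf items it leaves unaddressed. The following Python sketch assumes that a reviewer's assessment of a paper is recorded as the set of item numbers judged to be addressed; the names `RESEARCH_CHECKLIST`, `missing_criteria`, and `satisfies_research_checklist` are hypothetical illustrations, not part of the paper.

```python
# Leaf items of Appendix 2, in checklist order (dicts preserve insertion order).
RESEARCH_CHECKLIST = {
    "1.1": "Phenomena",
    "1.2": "Variables",
    "1.3": "Relationships among the variables",
    "1.4": "Research questions",
    "1.5": "Priorities",
    "2.1.1": "Population",
    "2.1.2": "Measurement procedure",
    "2.1.3": "Analysis method",
    "2.2.1": "Construct validity",
    "2.2.2": "Internal validity",
    "2.2.3": "External validity",
    "2.3": "Measurements",
    "2.4.1": "Answers to research questions",
    "2.4.2": "Theoretical explanations",
    "2.4.3": "Possible fallacies",
    "3.1": "Relevance for theory",
    "3.2": "Relevance for engineering practice",
}


def missing_criteria(addressed):
    """Return the item numbers the paper does not address, in checklist order."""
    return [number for number in RESEARCH_CHECKLIST if number not in addressed]


def satisfies_research_checklist(addressed):
    """A research paper must satisfy all criteria, so any gap fails it."""
    return not missing_criteria(addressed)
```

For example, a hypothetical paper assessed as `set(RESEARCH_CHECKLIST) - {"2.2.3"}` yields `["2.2.3"]` from `missing_criteria` and fails the check, whereas under the Appendix 1 reading an engineering paper with a comparable gap could still be acceptable if that item is irrelevant to its task.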

Cite this article

Wieringa, R.J., Heerkens, J.M.G. The methodological soundness of requirements engineering papers: a conceptual framework and two case studies. Requirements Eng 11, 295–307 (2006). https://doi.org/10.1007/s00766-006-0037-6
