Abstract

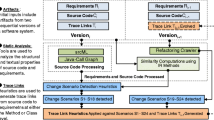

Because manually tracing requirements takes a large amount of effort, automated traceability methods, which map textual software engineering artifacts to each other and generate candidate links, have received increased attention over the past 15 years. Automatically generated links, however, remain candidates until human analysts confirm or reject them for the final requirements traceability matrix. Studies have shown that analysts are fallible but necessary participants in the tracing process. Two key measures guide analyst work on the evaluation of candidate links: the accuracy of analyst decisions and the efficiency of their work. Intuitively, the more effort the analyst spends on candidate link validation, the more accurate the final traceability matrix is likely to be, although the exact nature of this relationship may be difficult to gauge outright. To help analysts make the best use of their time when reviewing candidate links, prior work simulated four possible analyst behaviors and showed that more structured approaches reduce the time and effort analysts need to achieve given levels of accuracy. However, these behavioral simulations are complex to run, and their results are difficult to interpret and use in practice. In this paper, we present a mathematical model for evaluating analyst effort during requirements tracing tasks. We apply this model in a simulation study of 12 candidate link validation approaches, conducted on several different datasets. In each study, we assume perfect analyst behavior (i.e., the analyst is always correct when making a determination about a link). Under this assumption, we estimate the analyst's effort and plot it against the accuracy of the recovered traceability matrix. 
The effort estimation model is governed by a parameter that specifies the relationship between the time it takes an analyst to evaluate a presented link and the time it takes her to discover a link that was not presented to her. We construct a series of effort estimates based on different values of this parameter. We found that the analysts’ approach to candidate link validation, essentially the order in which the analyst examines the presented candidate links, does affect the effort. We also found that the lowest ratio of the cost of finding a correct link from scratch to the cost of recognizing a correct link yields the lowest effort for all datasets, but that the lowest effort does not always yield the highest-quality matrix. Finally, we observed that effort varies by dataset. We conclude that the link evaluation approach we call “Top 1 Not Yet Examined Feedback Pruning” was the overall winner in terms of both effort and matrix quality and, thus, should be followed by human analysts where possible.
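The abstract does not spell out the model's equations, so the following is only a toy illustration of how such a parameter can shape effort estimates, not the authors' formula. It assumes a simple linear cost model: evaluating one presented candidate link costs one unit, and discovering a correct link that was never examined costs `ratio` units (`ratio`, `effort_curve`, and `min_effort` are hypothetical names). It also assumes a perfect analyst who examines candidates in a given order, stops after the first k, and then finds all remaining correct links by hand.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class EffortPoint:
    examined: int   # number of presented candidate links examined so far
    recall: float   # fraction of all correct links confirmed from the presented list
    effort: float   # estimated total effort, in units of "evaluate one presented link"


def effort_curve(is_true: Sequence[bool], total_true: int, ratio: float) -> List[EffortPoint]:
    """Assumed linear effort model (illustrative only, not the paper's model).

    is_true    -- candidate links in the order the analyst examines them;
                  True marks a correct trace link. A perfect analyst is assumed,
                  so every correct link examined is confirmed.
    total_true -- number of correct links in the answer set, including links
                  the automated method never presented.
    ratio      -- hypothetical cost of finding a correct link from scratch
                  divided by the cost of evaluating one presented link.
    """
    if total_true <= 0:
        raise ValueError("total_true must be positive")
    points = []
    found = 0
    for k, correct in enumerate(is_true, start=1):
        found += int(correct)
        missed = total_true - found          # correct links left to find by hand
        effort = k * 1.0 + missed * ratio    # vetting cost + manual discovery cost
        points.append(EffortPoint(k, found / total_true, effort))
    return points


def min_effort(is_true: Sequence[bool], total_true: int, ratio: float) -> EffortPoint:
    """Stopping point with the lowest estimated effort (earliest one on ties)."""
    return min(effort_curve(is_true, total_true, ratio), key=lambda p: p.effort)
```

For example, with `total_true=3` and `ratio=2.0`, the ordering `[True, True, False, False]` reaches a minimum estimated effort of 4.0 after two links, while `[False, False, True, True]` bottoms out at 6.0 after four. In this toy setting, examination order changes the effort, and lowering `ratio` to 1.0 lowers the first ordering's minimum to 3.0.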

Notes

Making the best use of analysts’ effort is difficult, which is why “Cost-Effective” was named the #2 Grand Challenge [4] facing requirements practitioners and researchers. The challenge reads “2. Cost-Effective—The return from using traceability is adequate in relation to the outlay of establishing it.”

An automated method returns a link if the similarity it computes between the high-level and low-level elements forming the link is above a threshold value predefined in the method.
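To illustrate this thresholding, the sketch below scores every high-level/low-level pair and keeps only the pairs whose score exceeds the threshold, ranked from most to least similar. It assumes a plain term-frequency cosine similarity over lowercased, whitespace-separated tokens; tracing tools often use weighted schemes such as TF-IDF plus stemming and stop-word removal, so the scoring here is a simplification, and `cosine` and `candidate_links` are hypothetical names.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def cosine(a: str, b: str) -> float:
    """Cosine similarity of raw term-frequency vectors (simplified scoring)."""
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[t] * vb[t] for t in va)
    norm = math.sqrt(sum(v * v for v in va.values())) * math.sqrt(sum(v * v for v in vb.values()))
    return dot / norm if norm else 0.0


def candidate_links(high: Dict[str, str], low: Dict[str, str],
                    threshold: float) -> List[Tuple[str, str, float]]:
    """Return (high_id, low_id, score) for every pair scoring above the threshold,
    sorted by descending score, i.e., the order in which candidates are shown."""
    links = []
    for hid, htext in high.items():
        for lid, ltext in low.items():
            score = cosine(htext, ltext)
            if score > threshold:
                links.append((hid, lid, score))
    links.sort(key=lambda t: t[2], reverse=True)
    return links
```

With `high = {"R1": "log user login"}` and `low = {"D1": "user login screen", "D2": "print report"}`, a threshold of 0.1 returns only the pair `("R1", "D1")`, scored at about 0.667; `D2` shares no terms with `R1` and is filtered out.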

These parameters were labeled α, β, and γ in prior work [5]. We rename them in this paper to avoid confusion with the Rocchio feedback parameters.
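For readers unfamiliar with the Rocchio parameters referred to here, the sketch below shows the textbook Rocchio relevance-feedback update. The query vector keeps weight α, moves toward the mean of documents judged relevant (weight β), and moves away from the mean of those judged irrelevant (weight γ). In a tracing setting, the query would typically be a high-level element and the documents the low-level elements the analyst has accepted or rejected. Vectors are assumed to be sparse dicts of term weights, negative weights are clipped as is common practice, and the exact variant used in the paper may differ.

```python
from collections import defaultdict
from typing import Dict, List

Vector = Dict[str, float]  # sparse term -> weight vector


def rocchio(query: Vector, relevant: List[Vector], nonrelevant: List[Vector],
            alpha: float, beta: float, gamma: float) -> Vector:
    """Textbook Rocchio update:
        q' = alpha * q + beta * mean(relevant) - gamma * mean(nonrelevant)
    Terms whose resulting weight is not positive are dropped."""
    updated: Dict[str, float] = defaultdict(float)
    for term, w in query.items():
        updated[term] += alpha * w
    for doc in relevant:                    # pull toward links the analyst accepted
        for term, w in doc.items():
            updated[term] += beta * w / len(relevant)
    for doc in nonrelevant:                 # push away from links the analyst rejected
        for term, w in doc.items():
            updated[term] -= gamma * w / len(nonrelevant)
    return {term: w for term, w in updated.items() if w > 0}
```

For instance, `rocchio({"a": 1.0}, [{"a": 1.0, "b": 2.0}], [{"c": 1.0}], 1.0, 0.5, 0.5)` returns `{"a": 1.5, "b": 1.0}`: the accepted element reinforces `a` and adds `b`, while `c` from the rejected element ends up negative and is dropped.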

Note that “requirements tracing” is often the moniker even when requirements are not being traced.

Note that many of the 48 scenarios resulted in ties for the minimum effort value; thus, far fewer than 48 scenarios were “in the running” for best and worst.

References

Gotel O, Finkelstein A (1997) Extended requirements traceability: results of an industrial case study. In: Proceedings of the 3rd IEEE international symposium on requirements engineering (RE’97). IEEE Computer Society, p 169

Hayes JH, Dekhtyar A, Sundaram S (2006) Advancing candidate link generation for requirements tracing: the study of methods. IEEE Trans Softw Eng 32:4–19

U.S. Department of Health and Human Services, Food and Drug Administration (2002) General principles of software validation; Final Guidance for Industry and FDA Staff

Gotel O, Huang JC, Hayes JH, Zisman A, Egyed A, Grünbacher P, Dekhtyar A, Antoniol G, Maletic J, Mäder P (2012) Traceability fundamentals. In: Huang JC, Gotel O, Zisman A (eds) Software and systems traceability. Springer. ISBN: 1447122380

Dekhtyar A, Hayes JH, Smith M (2011) Towards a model of analyst effort for traceability research: a position paper. In: Proceedings of traceability of emerging forms of software engineering (TEFSE)

Hayes JH, Dekhtyar A, Osborne J (2003) Improving requirements tracing via information retrieval. In: International conference on requirements engineering, Monterey, California, pp 151–161

Sundaram S, Hayes J, Dekhtyar A, Holbrook E (2010) Assessing traceability of software engineering artifacts. Requir Eng 15:313–335

Axiom. http://www.iconcur-software.com/. Last accessed 29 June 2016

Baeza-Yates R, Ribeiro-Neto B (1999) Modern information retrieval. ACM Press, Addison-Wesley

Hayes JH, Dekhtyar A, Sundaram S (2005) Improving after the fact tracing and mapping to support software quality predictions. IEEE Softw 22:30–37

Antoniol G, Canfora G, Casazza G, Lucia AD, Merlo E (2002) Recovering traceability links between code and documentation. IEEE Trans Softw Eng 28:970–983

Antoniol G, Caprile B, Potrich A, Tonella P (1999) Design-code traceability for object oriented systems. Ann Softw Eng 9:35–58

Marcus A, Maletic J (2003) Recovering documentation-to-source code traceability links using latent semantic indexing. In: Proceedings of the twenty-fifth international conference on software engineering 2003, pp 125–135

Hayes JH, Dekhtyar A (2005) Humans in the traceability loop: can’t live with ‘em, can’t live without ‘em. In: Proceedings of the 3rd international workshop on traceability in emerging forms of software engineering. ACM, New York, NY, USA, pp 20–23

Cuddeback D, Dekhtyar A, Hayes J (2010) Automated requirements traceability: the study of human analysts. In: Requirements engineering, IEEE international conference on, vol 0, 2010, pp 231–240

Dekhtyar A, Hayes JH, Larsen J (2007) Make the most of your time: how should the analyst work with automated traceability tools? In: Proceedings of the third international workshop on predictor models in software engineering. IEEE Computer Society, Washington, DC, USA

Kong W-K, Hayes JH, Dekhtyar A, Dekhtyar O (2012) Process improvement for traceability: a study of human fallibility. In: Requirements engineering conference (RE), 2012 20th IEEE international, pp 31–40, 24–28 Sept. 2012

Ali N, Guéhéneuc Y-G, Antoniol G (2013) Trustrace: mining software repositories to improve the accuracy of requirement traceability links. IEEE Trans Softw Eng 39(5):725–741

“GanttProject Home”

G. SBRS MODIS science data processing software requirements specification version 2

Cleland-Huang J, Chang C, Sethi G, Javvaji K, Hu H, Xia J (2002) Automating speculative queries through event-based requirements traceability. In: Proceedings of the IEEE joint international requirements engineering conference (RE ‘02), 2002, pp 289–296

Hayes JH, Dekhtyar A, Sundaram S, Howard S (2004) Helping analysts trace requirements: an objective look. In: International conference on requirements engineering (RE’2004)

Hayes J, Dekhtyar A, Sundaram S, Holbrook E, Vadlamudi S, April A (2007) REquirements TRacing On target (RETRO): improving software maintenance through traceability recovery. Innov Syst Softw Eng 3:193–202

Egyed A, Graf F, Grünbacher P (2010) Effort and quality of recovering requirements-to-code traces: two exploratory experiments. In: Requirements engineering, IEEE international conference on, vol 0, 2010, pp 221–230

Zhang Z, Liu C, Zhang Y, Zhou T (2010) Solving the cold-start problem in recommender systems with social tags. CoRR, abs/1004.3732

Lika B, Kolomvatsos K, Hadjiefthymiades S (2014) Facing the cold start problem in recommender systems. Expert Syst Appl 41(4, Part 2):2065–2073

Bostandjiev S, O’Donovan J, Höllerer T (2012) TasteWeights: a visual interactive hybrid recommender system. In: Proceedings of the sixth ACM conference on recommender systems (RecSys ‘12). ACM, New York, NY, USA, pp 35–42

Hariri N, Mobasher B, Burke R (2014) Context adaptation in interactive recommender systems. In: Proceedings of the 8th ACM conference on recommender systems (RecSys ‘14). ACM, New York, NY, USA, pp 41–48

CM1 DataSet, Metrics data program website, CM-1 project

Acknowledgements

We thank Matt Smith and Chelsea Hayes for their work on the spreadsheets, charts, and tables in this paper. This work was partially sponsored by NASA under Grant NNX06AD02G. It was also funded in part by the National Science Foundation under NSF Grant CCF 0811140 and by a Lockheed Martin grant.

Cite this article

Hayes, J.H., Dekhtyar, A., Larsen, J. et al. Effective use of analysts’ effort in automated tracing. Requirements Eng 23, 119–143 (2018). https://doi.org/10.1007/s00766-016-0260-8

DOI: https://doi.org/10.1007/s00766-016-0260-8