Abstract

In the growing field of sonic interaction design, increasing emphasis is being placed on walking-based interactions within an array of applications, including virtual reality, interactive media, and rehabilitation. Our study focuses on recreating the aural experience of wading through water, specifically the challenge of eliciting accurate and natural movement-sound associations for wading, both in interactive and listening-only contexts. We engineered a real-time wading simulation using a digital Foley technique that maps lower limb angular velocity contours to the intensity of steady-state flowing sounds. Our first iteration was evaluated in a perceptual experiment involving 16 participants, as well as an interactive test with 9 participants, which revealed the need for additional sensors along with time-domain preprocessing to ensure a consistently natural sound envelope across walking cadences (step tempi). We then refined the mapping function and incorporated more sound layers. In our subsequent listening test, 55 participants compared the realism of the initial and refined versions with real-life wading sounds at various step cadences. While the refined version demonstrated a notable improvement over the initial one and was deemed fairly realistic overall, it fell just short of the authenticity of the real-life recordings at faster cadences, suggesting room for further improvement of our simulation. Nevertheless, this research marks a stride in the evolution of walking-based sonic interactions, instigating wider acceptance and application of such systems in the future.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Rapid advancements in body sensing and real-time audio technology have opened up a whole new realm of possibilities for human interaction with digital sounds, unveiling a rich landscape of multimodal experiences [1]. The emergence of inexpensive sensor technologies [2] along with significant improvements in computing power have greatly augmented the accessibility of realistic and captivating sonic interaction paradigms [3]. As a result, the application domains of these technologies have expanded to encompass virtual reality [4], museum installations [5], collaborative music composition [6], digital Foley [7, 8], and motor rehabilitation [9], to name just a few. One of the most remarkable aspects of these technologies lies in their ability to transcend the limitations of the physical world to create previously unimaginable experiences capable of influencing and shaping user perceptions and behaviors. For instance, digitally manipulating the natural sounds of walking [10], striking one’s hand with a hammer [11], or rubbing one’s hands together [12] can dramatically alter perceptions of weight, hardness, and dryness respectively. By harnessing the mechanisms of multimodal cognition, these interactive sonic experiences have the potential to profoundly mediate the way users engage with their surroundings, although the art of designing sonic interactions is a relatively new research field [13, 14]. In practice, designing sonic interactions involves devising mappings between interactive input data and digitally generated sounds, a challenging task in its own right. It exhibits considerable overlap with the field of sonification (use of nonspeech audio for communication and interpretation purposes); in both cases, the mapping design challenge involves generating sonic responses that effectively convey information about a state or interaction through sound [15].

Our current work focuses on the act of walking (gait), which, alongside its fundamental role in navigating natural environments, is becoming increasingly prevalent in our interactions with computational systems [16]. Gait-based sonic interactions have been explored to a considerable degree in recent years in a variety of contexts from virtual reality to rehabilitation [17,18,19,20,21,22]. Walking, an essential, and often subconscious daily human activity, presents a fascinating conduit for sonic interaction research. It is a highly complex combination of movements whose biomechanical characteristics are mediated by visual, auditory, proprioceptive, and cutaneous sensory feedback [23,24,25]. Gait naturally generates a variety of sounds depending on the walking kinematics, environment, walker characteristics, etc. [16]. Sonic interaction endeavors across application domains have focused on recreating or altering these sound morphologies with a view toward emphasizing, manipulating, or even redefining our relationship to our surroundings [16, 18, 26]. An example of a natural walking sound that has received relatively little attention from the sonic interaction design community is that of wading through water. Our work aims to contribute to the burgeoning body of sonic interaction design research by digitally recreating this in real-time.

When wading, the physical disturbance of the liquid mass by the swinging limb leads to bubble generation, resulting in a sound that reflects the properties of both the liquid mass and the physical excitation, i.e., the pushing force of the swinging limb [27]. A digital simulation that accurately reproduces the auditory experience of wading through water can have significant applications in various contexts. In the realm of virtual reality, where presence and navigation are important aspects, the incorporation of realistic wading sounds can greatly enhance the sense of immersion and authenticity [28]. As users walk through water-filled landscapes, the accompanying movement-synchronized sound effects can contribute to a more authentic and engaging experience, heightening the overall impact of the virtual world [29]. Furthermore, the auditory feedback provided by the wading simulation can play a crucial role in rehabilitation settings. By leveraging this technology, individuals undergoing rehabilitation programs can receive enhanced feedback on their gait symmetry and coordination [30, 31]. As it is an ecological sound morphology, the audio cues associated with wading can help these individuals develop a better sense of bodily awareness and spatial orientation, ultimately improving their motor skills and facilitating the recovery process [32, 33].

In addition to rehabilitation, the calming and soothing sounds of wading have proven to be beneficial in therapeutic and relaxa- tion contexts [34]. Splashing sounds have previously been explored to signify the start and end of a movement, and shown to enhance motivation and relaxation [35]. These sounds can be utilized in stress reduction techniques, meditation practices, and mindfulness exercises [36]. By incorporating the auditory simulation of wading, individuals can experience a greater sense of tranquility and serenity, allowing for a deeper level of relaxation and mental rejuvenation [37]. The accessibility aspect of the wading simulation is another significant application. For individuals who face physical limitations that prevent them from participating in water-based activities, such a simulation can provide a means to engage in and enjoy such experiences in virtual reality [38]. The wading sound simulation also holds potential in virtual training scenarios. Stakeholders like the military, police, emergency response, and maritime operations can leverage virtual training technology [39], and the wading simulation can enhance the fidelity of their training environments [28]. By incorporating virtual scenarios that mimic water-based challenges, trainees can develop and refine their situational awareness, decision-making, and problem-solving skills in a setting that is immersive and realistic but also safe and controlled. Finally, such a simulation can help accelerate Foley workflows in film production [40] by reducing the required amount of manually synchronized movement recording and editing.

As all these applications have different specific criteria that the digital wading sound simulation must satisfy, our goal was to create a perceptually robust starting point that can later be honed to cater to the needs of specific applications. In this study, we investigated the specific problem of designing and developing a real-time algorithmic simulation of the auditory experience of wading through water. Our main requirement was that the simulation should elicit compelling perceptions of variables such as depth, speed, effort, realism, agency, and naturalness. We finally studied how these synthesized sounds compared in terms of perceived realism to real recorded wading sounds across various simulated conditions. This article is a modified and extended version of [41] where Experiments 1 and 2 were presented. A thorough overview of established methodologies and pertinent theories which shaped the strategies of this project is provided in the following section.

2 Related work

Many existing systems have sonified human movement parameters related to ground reaction force and limb velocity [42, 43] by using these variables to control the real-time generation and manipulation of footstep sounds, with several researchers using physics-based models for sound synthesis. As early as 2002, Cook developed a model for gravel walking [44] by applying signal processing to audio recordings of gait. Fontana et al. [45] created several models for walking and running based on parameters representing size, force, and softness. Using mic-captured gait sounds, Turchet et al. [46] generated footstep sounds for a range of solid and aggregate surface sounds (e.g., gravel, sand, wood). Encouragingly, many of these studies found that the surfaces were easy for listeners to identify and classify based on the sounds. Bresin et al. [17] achieved similar results with force sensor-based measurements. More recent studies have shown that digital manipulation of footstep sounds can alter both body perception and gait characteristics. Tajadura-Jimenez et al. [10] found that emphasizing certain frequency ranges in footstep sounds changed walker perceptions of weight and speed. Gomez-Andres et al. [47] found that these spectral manipulations could also reduce gait asymmetries in chronic stroke patients. Turchet et al. [18] found that providing footstep sounds that were incongruent with the walkers’ haptic perceptions led to slower walking and greater levels of departure from normal gait parameters than when congruent sounds were provided. These approaches only generate sound when the foot is in contact with the ground (stance phase) and not during the non-contact phase of gait where the limb rotates and displaces through the air (swing phase). The swing phase does not generate sound during the course of normal over-ground walking, but it does generate sound during wading. In fact, very few studies (e.g., [42, 48]) have even tackled the challenge of designing interactions around the swing phase [20]. Existing examples of water-based walking interactions have not considered the continuous trajectories of the swing phase either. We came across two notable examples in the literature. Ley-Flores et al. [35] developed a simulation that triggered splashing sounds at the start and end of limb movement during the gait cycle, and Groß-Vogt et al. [49] triggered splashing sound simulations using pressure sensor readings that reflected foot contact. Despite the seemingly inaccurate excitation signals used, the simulations were perceived to sound realistic by participants in both studies [35, 49]. Given the nature of wading, the integration of swing phase kinematics into the sonification design is a logical next step toward improving the plausibility of these simulations.

One potential approach to simulate wading sounds is to capture and map swing phase kinematic variables to physics-based liquid sound models. In the last two decades, physics-based simulations of liquid sounds have seen significant progression, although none of them have been controlled in real time by kinematic signals corresponding to walking. Van Den Doel [27] laid the groundwork, emphasizing the central role of bubbles in acoustic emission, while suggesting the necessity for a parameterized model that combines individual bubble physics with an empirical model for bubble statistics. Expanding upon this, Moss et al. [50] introduced two fluid simulators — shallow water and hybrid grid smoothed particle hydrodynamics method — which added complexity through the inclusion of nonspherical bubbles. This model also highlighted the importance of audiovisual synchronization in augmenting the perceived realism of sounds in a multimodal context. Giordano et al. [51] underscored the complexity of liquid synthesis, noting that much of the existing research concentrated on simulating bubble formation. Lastly, Cheng et al. [52] made substantial advancements by focusing on liquid–solid interaction sound synthesis. This approach maintained practical computation times while enhancing realism through a blend of recorded and synthesized sounds. Overall, perceptual tests conducted during several of these studies [27, 50, 52] revealed that the generated sounds were perceived to be moderately realistic by listeners (although no experimental comparison of these models has been conducted to our knowledge), implying that this might be worth exploring in the current context.

The inherent spontaneity of physics-based synthesis can offer an embodied experience through the natural alignment of sound feedback and action [53]. Yet, for the effectiveness of physical models, it is important to balance sound realism with interactive aspects [45]. A critical consideration is the dynamic relationship between the user’s actions and the subsequent functional or meaningful outcomes, which lies at the heart of interaction design [53]. To bridge this dynamic, it is necessary to have an independent mapping layer that facilitates intimate interaction with sound [8, 54]. Such a structure can appropriately leverage the potential of synthesis, enabling users to directly control and interact with the sound [8]. Software packages such as the Sound Design Toolkit [53] have been developed for the purpose of facilitating the creation of meaningful interactive mappings.

Contrary to the impulsive nature of regular footsteps, the forward limb swing responsible for exciting the sound of wading is a continuous phenomenon. When a person interacts with a sonic medium, a tight motor coupling is necessary for a sense of embodiment, which entails that the stimuli presented to the various sensory modalities involved are synchronized [4, Chapter 10]. As alluded to above, reliable sonic feedback depends on how movement variables are mapped to the digital synthesis engine [55], and “correct” parameter values and ranges are crucial to obtaining a resulting sound similar to the acoustic counterpart [14]. Indeed, sound effects (non-speech / non-music sounds used in audiovisual media to accompany observable sound-producing events) are always approximations of natural sound events [55], just as physics-based sound models are approximations of real phenomena [4, Chapter 10]. More detailed sound models can increase sonic accuracy but also decrease responsiveness, and a key design challenge is that of balancing these two aspects [4, Chapter 10]. To address this, it has been proposed that a combination of synthesized sounds and recorded samples may be worth exploring [56], although this can be complex due to differences in approach when dealing with either type; sample-based processing requires a sculptural approach (shaping the time and frequency domain evolution), while abstract synthesis requires complex control layers [53]. Moreover, designing continuous interactions such as wading is challenging as such interactions are far more prone to expressive manipulation than simple button-triggering, and the problem is hence one of creating a compelling (albeit fictional) sense of causality [57]. To the best of our knowledge, this has never been done for wading sounds.

Standard methods for creating sound effects are an essential source of knowledge for sonic interaction design [55]. We refer specifically to the technique of Foley sound, a standard practice in film sound production for adding concreteness and materiality to what is shown onscreen [7, 40]. Here, onscreen sounds are generated by performers (Foley artists) who generate sounds by performing objects that model the intended sound rather than reproducing the original process [8, 14]. For instance, the sound of an onscreen clinking chain may be simulated by a Foley artist shaking a bunch of keys. The success of Foley goes to show that sound effects do not have to sound absolutely real, only convincing enough to be plausible within their context [8]. In recent years, digital Foley tools have been explored as a means to automate parts of the workflow [7]. Using microcontrollers coupled with motion sensors, one can intuitively map gestures to sound by deriving synthesis parameters from physical parameters [8] Several digital Foley tools have been built as well. Van den Doel et al.’s FoleyAutomatic [58] produced sound effects for interactive simulations and animation automatically from 3D models. More recently, Aly et al. [7] developed DiGIT, which adopted a granular synthesis approach based on gestural input to a tangible interface. However, none of these tools appear to support wading sound generation. Foley artists consider two key factors — how realistically a Foley tool simulates the intended sound, and how performable it is [14, 55]; these factors formed the basis for our own success criteria as outlined next.

2.1 Design approach

We primarily opted to see the interaction design problem of real-time wading sounds as one of interactive parameter mapping sonification [59]. The first consideration was that of capturing a continuous trajectory of motion data that faithfully represented the swinging limb, which in turn could control sound generation. In previous work, velocity profiles of the movement effector (e.g., finger in the case of pointing) have been successfully used to evoke the underlying movement through sound mapping [60, 61]. More largely speaking, velocity is a common physical dimension to map to sound in sonification systems, and it is very common for it to be mapped to loudness-related sound features [62, 63], with research having shown that this is an ecological and understandable mapping [61, 64]. This notion lends itself well to the case of wading sound generation, where a fast limb swing typically generates a louder and more energetic sound than a slow swing. To capture the relevant swing characteristics, we deemed it more appropriate to measure angular velocity of the leg segments than linear velocity. This is because the motion of the thigh and shank about the hip and knee joint during gait is circular rather than translational [65]. Angular velocity is readily and inexpensively measurable using gyroscopes, which, although seldom used [63], have been suggested as an intuitive tool to facilitate the mapping of movements to sound in a 2-D plane [66]. Moreover, gyroscopes are integrated into inertial measurement units, which have been suggested for movement sonification applications for their easy accessibility and low cost [62].

The overall block diagram of the system. The normalization was carried out such that 0–100 \(^\circ \)/s (empirically chosen range) of thigh rotation velocity in the positive (forward) direction was represented as a 0–1 range. LPF, lowpass filter; Pol Invert, polarity invert; OSC, open sound control. The mapped audio processing parameters in REAPER are shown in dark yellow

Our rationale for sound generation combined knowledge from the aforementioned literature on liquid sound synthesis [27, 51], sonic interaction design [4, 67], Foley sound [8, 14, 40, 55], and interactive movement sonification [60,61,62,63]. Specifically, it involved mapping gyroscope-captured angular velocity trajectories from the lower limbs to a digital synthesis engine combining pre-recorded liquid sounds and the physics-based model of Van Den Doel [27]. At the outset, we framed the following research questions:

-

RQ1: How accurately can liquid sounds modulated by angular velocity signals evoke real-life movement-sound associations while naturally simulating the sound of wading?

-

RQ2: How do the resulting movement-sound associations manifest in various aspects of interactive user experience in a real-time use case scenario?

-

RQ3: How does the simulation compare to real wading sounds in terms of perceived naturalness over a range of walking cadences?

The research questions RQ1-3 were sequentially addressed over the three stages of this iterative study, each of which involved a separate evaluation protocol. Improvements were made to the developed wading sound simulation at each stage based on literature and, in part, the findings from previous evaluation procedures. We evaluated evoked movement-sound associations (16 participants) after the first stage, interactive user experience (9 participants) after the second, and perceived naturalness (55 participants) after the third. Akin to the criteria used by Foley artists [14, 55], we aimed to maximize perceptual realism as well as a set of variables tied to overall user satisfaction. The following sections detail the design and evaluation processes followed by a discussion of key results at each stage.

3 System design and implementation

At the outset, we made the following simplifying assumptions about the sound generation process:

-

1.

The sound of wading is spectrally similar to the sound of flowing water.

-

2.

Sound is only generated during the forward swing of the limb (sufficiently captured by thigh rotation about the hip).

-

3.

The intensity and timbre of the wading sound are directly correlated to the angular velocity trajectory of the limb.

3.1 Design tools and methodology

As such, our system (shown in Fig. 1) was built to track the thigh’s angular velocity in real-time and map it to the amplitude envelope and textural parameters of flowing water sounds. This was done to create a direct and synchronous association between the temporal characteristics of the motion and the evolution of the sound texture, which is the working principle of Foley [14]. It comprised (A) two wireless inertial measurement units (IMUs) to track thigh rotation bilaterally, (B) custom PC software to process and map the IMU data in real-time (upgraded version of the technical framework described in [68]), and (C) a REAPERFootnote 1 session with a custom-built signal chain for audio generation. The components communicated via Open Sound Control (OSC) messages at a fixed data sampling rate of 100 Hz, and the final audio output was 44.1 kHz/24-bit stereo.

3.2 Sensing

For motion sensing and wireless transmission to the software, we used thigh-mounted (both legs, lateral placement) M5Stack GreyFootnote 2 microcontrollers equipped with MPU-9250 IMU units and enclosed in bespoke silicone casing. These devices transmitted motion data as per the 802.11n wireless local area network standard to our custom JUCE software.

3.3 Real-time data processing

As shown in Fig. 1, the gyroscope signals from each thigh were first smoothed using 2nd-order Butterworth low-pass filters with a 5 Hz cutoff frequency and Q-factor of 0.7, which we found to be the best tradeoff between smoothing and added latency. The angular velocity signal typically shows large positive excursions during the swing phase, whereas the stance phase is characterized by small negative excursions due to the slow backward rotation. The smoothed left and right signals were then processed through a real-time mapping architecture in the software. Specifically, the positive rotation velocity range was normalized to a 0–1 range in such a way that negative values (backward limb rotations) were pinned to zero, forward rotation values > 100 \(^\circ \)/s were pinned at one, and values in between were in a 0–1 range. As only one limb rotates forward at any given time during walking gait, the normalized angular velocities were summed to a single control signal representing the overall gait. This was split and scaled to the respective parameter ranges of interest as shown in Fig. 1 to yield two control signals for sound generation. These were transmitted to REAPER using OSC for further processing.

3.4 Sound mapping and generation

We adopted a hybrid approach combining pre-recorded flowing water samples with the synthesized output of van den Doel’s physical model [27]. Our aim was to leverage the textural realism of recorded sounds as well as the parametric control provided by physical models. The core of their algorithm calculates the impulse response of a radially oscillating bubble as a damped oscillation that simulates the sound of a single bubble suspended in water. Details on how to stochastically concatenate several virtual bubbles to create a stream of water are explained in [27].

The physical model component was achieved using the WaterFootnote 3 VST plugin (32 bit), which is a real-time implementation of the liquid synthesis algorithm described in [27]. As shown in Fig. 1, we mapped one control signal to the bubble generation rate parameter. The bounds were selected such that stationary standing (and double support periods during walking) resulted in no bubble generation, slow limb swing led to slow bubble generation, and fast limb swing led to rapid bubble generation. Audio examples of the physical model in isolation are provided here.

The recorded sample component was achieved using recorded sound clips of flowing water from the FreeSound online library.Footnote 4 These clips were edited, crossfaded, looped, and summed with the physical model component. The second control signal, as shown in Fig. 1, was linearly mapped to a gain control applied to the summed physical model and recorded sample components. The control signal value ranges were tuned by an analysis-by-synthesis approach. Separate REAPER sessions were created for shallow (ankle-deep) and deep (thigh-deep) wading simulations, and sound examples at different speeds are provided in the Supplementary Materials. The system had an approximate round-trip latency of 100 ms.

4 Experiment 1: sound quality assessment

The purpose of this assessment was to test whether the developed wading sound simulations were capable of eliciting in listeners the intended perceptions of depth, walking speed, naturalness, and effort. To evaluate this, we carried out a web-based perceptual evaluation of the two-depth simulations recorded at various walking speeds. At the outset, we hypothesized that perceived depth and effort would increase with increasing simulated depth, perceived speed would increase with actual speed, and perceived naturalness would not vary with either factor.

4.1 Stimuli

We captured IMU recordings at the thigh from one healthy 30 y/o male walker (author PRK) during overground back-and-forth walking on a straight 4 m path and sampled them at 100 Hz. This was carried out at three walking speeds — slow (minimal speed), normal (brisk), and fast (threshold of running). Using our setup, we generated sonified sequences from the data corresponding to each of the simulated depths (shallow, deep). The sequences were adjusted by ear to have roughly equal loudness. We rendered a set of 7-s excerpts (including turning pauses) as stereo 320 kbit MP3 files. Hence, the complete set of stimuli comprised six audio clips (2 simulated depths \(\times \) 3 walking speeds) (link in footnote).

4.2 Participants

A convenience sample of 16 participants (9 men, 7 women) aged 42 ± 15.15 years (ranging from 24 to 69) were invited to participate via mailing lists and social media. The assessment was conducted anonymously, and no sensitive information was collected from them. The social media invitation mentioned the purpose of the research and the planned use of their data.

Boxplots visualizing the results of the survey for all factor levels and outcomes. Within each plot, the ratings are clustered vertically based on simulated depth (dark blue = deep, light blue = shallow). In each case, the boxes represent the interquartile range (IQR), and the notches within the boxes represent the median. The whiskers indicate variability outside the IQR. The small circles represent potential outliers (> 1.5 IQR but \(<=\) 3 IQR above (below) the upper (lower) quartile. The dots denote extreme values (\(>3\) IQR above (below) the upper (lower) quartile). Significant differences are indicated by the asterisks between levels. *p < 0.05, **p < 0.01

4.3 Experimental procedure and outcomes

The evaluation was set up as a survey on Google Forms. Participants were initially briefed about the purpose of the research as well as the structure of the survey, but not about our hypotheses. They were encouraged to use good quality speakers or headphones if possible but were free to use any available listening device. After collecting basic information about the participants’ age and gender, the survey was divided into four parts addressing the four outcomes of the study that took a total of 5–10 min to complete:

-

Perceived water depth: Participants listened to each of the six audio clips (presented in a random order) and were asked to rate on an 11-point scale (0–10) their perceived depth of the water (0 = 0 m / 0 ft, 10 = 1 m / 3.28 ft).

-

Perceived walking speed: They then listened to the same clips in a different random order and were asked to rate the perceived walking speed of the walker (0 = slowest possible, 10 = almost running).

-

Perceived effort: The procedure was repeated and participants were asked to rate the clips with regard to the physical activity of the walker in terms of perceived effort (0 = minimal effort, 10 = maximal effort).

-

Perceived naturalness: The participants were asked to assess the extent to which the sounds resembled those of walking through water in real life (0 = highly unrealistic, 10 = highly realistic).

4.4 Data analysis

The age and gender data were aggregated, the rating data were exported from Google Forms in a comma-separated format, rearranged, and statistically analyzed in SPSS 27.0. In correspondence with the stated hypotheses, we aimed to analyze the effects of (a) simulated depth (deep, shallow), and (b) actual walking speed (slow, medium, fast) on perceived depth, walking speed, effort, and naturalness ratings. We first checked all data for normality (Shapiro-Wilks test) and homogeneity of variance (Levene’s test) for each set of factors, and found the distributions to both exhibit significant deviations from normality and significantly non-homogeneous variance between factor levels. Therefore, we chose to adopt a non-parametric repeated measures analysis for each outcome. We first checked for the main effects of simulated depth and actual walking speed using Friedman tests. If significant effects were detected, planned pairwise comparisons specific to the hypotheses were carried out using Wilcoxon signed-rank tests. A significance criterion \(\alpha = 0.05\) was used for all statistical analyses. The reported p-values are those obtained post Holm-Bonferroni correction(p-values multiplied by correction factor). The SPSS datasets are provided in the supplementary materials.

4.5 Results

A significant main effect of simulated depth and actual walking speed on perceived depth (\({\chi }^2(7)=43.68\), \(p<\) 0.001), walking speed (\({\chi }^2(7)=50.31\), \(p<\) 0.001), and effort (\({\chi }^2(7)=17.33\), \(p =\) 0.004), but not for naturalness (\({\chi }^2(7)=7.19\), p = 0.207) were shown. The results of the planned Wilcoxon signed-rank comparisons are depicted in Fig. 2.

There was a clear effect of simulated depth on perceived depth at all three walking speeds; as hypothesized, the clips corresponding to the deep water simulation were rated as deeper than those of the shallow simulation for slow (Z = \(-\)3.192, p = 0.009), medium (Z = \(-\)3.246, p = 0.009), and fast (Z = \(-\)3.335, p = 0.007) walking. There were no significant differences in perceived depth ratings between the actual walking speeds within either of the simulated depths.

There was an effect of actual walking speed on perceived walking speed; as hypothesized, the medium walking clips were rated as significantly faster than the slow clips for both deep (Z = \(-\)3.313, p = 0.003) and shallow (Z = \(-\)3.255, p = 0.003) simulated depths. The fast clips, on the other hand, were rated significantly higher in terms of perceived walker speed than the medium clips for only the deep water simulation (Z = \(-\)2.259, p = 0.047) but not the shallow simulation (Z = \(-\)1.349, p = 0.177).

There was an effect of simulated depth on perceived effort; as per our hypothesis, the clips corresponding to the deep water simulation were rated significantly higher than their shallow counterparts for each of the walking speeds — slow (Z = \(-\)2.214, p = 0.026), medium (Z = \(-\)2.381, p = 0.034), and fast (Z = \(-\)2.778, p = 0.016).

Although there was no significant main effect on perceived naturalness across the depth/speed combinations (\({\chi }^2(7)=\)7.19, \(p = \) 0.207) in line with our hypothesis, it is worth noting that both simulated depths received high ratings of naturalness for slow walking (median rating of 8/10 in both cases). However, it is also evident in Fig. 2 that there was a lot of individual variability in the ratings, and the naturalness ratings tend to be somewhat worse for the faster walking speeds compared to the slowest.

4.6 Interim discussion

The results indicate that the respondents were able to distinguish between the clips representing different walking speeds and simulated water depths. For each walking speed, they rated the perceived effort of walking to be greater for deep water, which is logical given the greater drag force that exists in real-life [69], although there was interestingly no difference between the walking speeds themselves, even though drag force is known to increase with speed [69]. In terms of perceived naturalness, we estimated that the fast-walking clips received somewhat low ratings primarily due to the shape of their amplitude envelopes. Specifically, the slow walking sounds had a slow onset and decay analogous to the relatively gradual thigh acceleration and deceleration compared to fast walking, where the sounds sharply increased and decreased in amplitude. The latter, although in line with our second simplifying assumption, may have been perceived to be unnatural as real liquid sounds take some time to dissipate after the initial excitation has died away (due to the physics of bubble generation [27]). Figure 3 (top pane) shows how the swing phase is characterized by rotations of the thigh as well as the shank. With no shank information used for sonification purposes, the wading sound was in its decay phase when the shank rotation reached its peak velocity (unnatural). To satisfy our overall aim of creating a plausible real-time interactive wading simulation, this would require immediate addressal before proceeding. An important limitation of the test protocol was that there was no learning phase to help respondents form a frame of reference for the relatively subject aspects of effort and naturalness. Yet, since the sound quality assessment validated the majority of our hypotheses and provided positive early evidence in support of our basic assumptions, our next step was to test the system in an interactive setting.

An illustration of the swing phase shown in context with smoothed thigh and shank angular velocity trajectories as well as the temporal extent and amplitude envelope of the resulting wading sound for two smoothing filter cutoff frequencies (fc = 5 Hz and fc = 0.8 Hz). Shank information was not used for sonification purposes and is plotted for context only

LEFT: A top-view illustration of the designated walking path in Exp. 2. An inner square (white) was marked on the floor using tape to demarcate the walking path (yellow) and start/end point of each lap. A pair of wired headphones was suspended from the ceiling. RIGHT: A participant during the task, with the wired headphones as well as the thigh sensors visible

5 Experiment 2: user experience evaluation

The purpose of this experiment was to assess the experience of users interacting with the wading simulation system in real time with a particular focus on the impact of time-domain sound envelope manipulation on user agency, comfort, self-reported effort, motor change, and perceived naturalness of the interaction. To this end, we added a new mapping configuration by modifying the smoothing filter applied to the gyroscope signals to have its \(f_c = 0.8 Hz\) (empirically set for a natural-sounding decay) followed by a gain factor to compensate for the resulting amplitude decrease. The result of this is shown in the bottom pane of Fig. 3. As shown, the wading sound is now more temporally aligned with the shank rotation due to phase delay and takes substantially longer to smoothly die out due to the attenuation of high-frequency components in the angular velocity signal. The filtering was designed to address the rapid decay issue previously alluded to in the Interim Discussion. To gauge the effect of usings clearly movement-incongruent sound envelope shapes on user perception, we, in addition to the configuration with 5 Hz cutoff (S5.0) and 0.8 Hz cutoff (S0.8), created an asymmetric configuration (AS) which used different cutoff frequencies for the left and right thigh signals as summarized in Table 1. In all conditions, the sounds corresponding to the left and right limb were respectively panned 50% left and right in the stereo field. We proceeded to test user experience when walking with AS, S5.0, and S0.8 in a brief pilot study. Audio examples of each are provided in the supplementary materials.

5.1 Participants

A convenience sample of 9 participants (8 men, 1 woman) aged 29.66 ± 4.24 y/o from the student and staff population of Aalborg University, Copenhagen, volunteered to participate. None of them reported having any form of documented hearing loss. All of them were briefed beforehand about the purpose and length of the experiment and informed that they could withdraw at any time. Participants were told that there would be three identically structured experimental conditions, and the walking task details were explained to them verbally by the experimenter. Each of them provided informed consent prior to participation, and the experiment was carried out according to the Helsinki declaration. Following this, they were asked to fill out basic information about themselves (age, gender, and documented hearing loss).

5.2 Setup

The test was carried out in a quiet laboratory at Aalborg University, Copenhagen. The laboratory has an approximately 6 m \(\times \) 6 m vacant space with black synthetic flooring and surrounded on three sides by black curtains. An inner square was marked on the floor using tape to demarcate an approximately 1 m wide walking path around its outer perimeter. The start/end point for each walking lap was also marked. A pair of AirSonic wired headphones was suspended loosely from the center of the ceiling. We used headphones to ensure that participants perceived the stereo field consistently irrespective of head orientation or their physical location in the space (Fig. 4).

Motion data streaming, mapping, and sound generation (simulated depth: deep) were carried out on a Dell Inspiron 15 7000 Windows laptop computer. The computer and experimenter were set up adjacent to the path. For low-latency sound output, an M-Audio M-Track SoloFootnote 5 audio interface was used.

5.3 Procedure

The IMU sensors were calibrated for static bias compensation and mounted laterally on the participants’ thighs. During each of the three conditions (order counterbalanced across participants), they were asked to walk a total of six laps of the square path with the sensors and headphones on while their movements were recorded. They were told that during the first three laps (baseline), no sound would be audible whereas there would be some walking-generated feedback during the last three (feedback). No information was given to them about the specifics of the sound mapping. During each lap, they started walking from the start point (alternating clockwise and anticlockwise paths around the space) until they reached the start point again. They then paused for \(\sim \)2 s, turned around, and started in the opposite direction. After three laps, the water sound feedback was provided at a standardized volume (approximately 80 dB SPL), and the participant walked three more laps. Once each condition was complete, the participant filled out a Google Forms survey about various aspects of user experience — perceived agency, naturalness, effort, speed, comfort, change in movement — and proceeded to the next condition. The survey, which was identical for S5.0, S0.8 and slightly different for AS as not all items were deemed relevant to this condition, had a series of 7-point rating scale items:

-

(S5.0, S0.8) Whether the sound perfectly followed/respon-ded to their movements (1 = strongly disagree, 7 = strongly agree).

-

(S5.0, S0.8, AS) Whether they noticed a change in their walking after the sounds started (1 = no change, 7 = extremely drastic change).

-

(S5.0, S0.8) How natural they felt the sounds were (1 = highly unnatural, 7 = highly natural).

-

(S5.0, S0.8) Whether the sounds changed their perception of the muscular effort they applied in order to walk (1 = much less effort, 4 = no change, 7 = much more effort).

-

(S5.0, S0.8) How disturbing the sounds were while walking (1 = not at all disturbing, 7 = highly disturbing).

-

(S5.0, S0.8, AS) How comfortable they felt when walking with the sounds (1 = highly uncomfortable, 7 = highly comfortable).

-

(S5.0, S0.8, AS) Whether there was a change in their walking speed after the sounds started (1 = major decrease, 4 = no change, 7 = major increase).

Some participants also provided spontaneous verbal comments, which were noted. In all, the experiment took \(\sim \)25 min per participant.

5.4 Data analysis

Due to the small sample size (n = 9), we used non-parametric tests for all statistical analyses. The survey responses were exported to IBM SPSS 27.0 for analysis. Each rating scale was compared between conditions using (a) Wilcoxon Signed-Rank (WSR) tests where only S5.0 and S0.8 were assessed, and (b) Friedman’s ANOVA conditionally followed by post-hoc WSR tests if all conditions were applicable. Additionally, Spearman correlation coefficients were computed on the long-form Likert data for all responses between each pair of items. A significance criterion \(\alpha = 0.05\) was used for all statistical analysis. The SPSS datasets are provided in the supplementary materials.

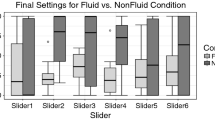

Boxplots visualizing the rating results. In each case, the boxes represent the interquartile range (IQR), and the notches within the boxes represent the median. The whiskers indicate variability outside the IQR. The small circles represent potential outliers (> 1.5 IQR but < = 3 IQR above (below) the upper (lower) quartile. The dots denote extreme values (> 3 IQR above (below) the upper (lower) quartile)

5.5 Results

The survey results are depicted in Fig. 5. In terms of perceived naturalness, the WSR test revealed no significant difference (Z = \(-\)1.781, p = 0.075) between S0.8 and S5.0. There was no significant difference between disturbance level ratings either (Z = \(-\)1.725, p = 0.084). In terms of comfort level ratings, the Friedman test did not reveal a significant main effect across conditions (\({\chi }^2(2)=\) 4.067, \(p=\) 0.131) However, as seen in the figure, the sample median ratings differed between the conditions for each of these variables. We found significant negative correlations between disturbance and comfort ratings (\(\rho \) = \(-\)0.688, \(p=\) 0.002) and between disturbance and naturalness ratings (\(\rho \) = \(-\)0.504, \(p=\) 0.033).

For both S0.8 and S5.0, the participants tended to agree with relative uniformity that the sound followed their movement (Z = \(-\)0.171, p = 0.865). They also expressed that the sound feedback, in general, led to changes in their walking compared to the baseline laps (mid-bottom of Fig. 5), although their estimated magnitude of walking change did not significantly vary between conditions (\({\chi }^2(2) = \)0.074, \(p=\) 0.964). However, we did observe that S0.8 seemed to elicit somewhat lower walking change ratings than the other conditions. There was no significant difference in terms of speed changes across conditions (\({\chi }^2(2)\) = 4.727, \(p=\) 0.094). Between S0.8 and S5.0, there were no significant differences in terms of changes in muscular effort (Z = \(-\)1.0, p = 0.317), where the median rating was 4 (no change) in both cases. There were significant positive correlations between rated walking change and speed change (\(\rho \) = 0.543, p = 0.003) and between rated walking change and whether the sound was perceived to follow the movement (\(\rho \) = 0.528, \(p=\) 0.024).

Three participants commented verbally that they tried to adapt their walking to attain a more comfortable sound. Two others stated that they felt a greater awareness of their walking and sense of rhythm during the S5.0 condition, whereas they felt a sense of imbalance in the AS condition that they needed to compensate for.

5.6 Interim discussion

Although the user experience assessment did not yield significant differences between the data filtering conditions, we believe that this was due to the small sample size and conservative nonparametric analysis. Our use of a lower resolution 7-point Likert scale in Experiment 2 (as opposed to the 11-point scale in Experiment 1) may have partially contributed to the observed lack of significant differences between conditions. Our rationale for implementing S0.8 was to give the sound a more natural decay, and the naturalness, comfort, and disturbance ratings show minor tendencies that support this notion, albeit seemingly at the cost of a slightly weaker sense of agency (median rating 5.0 v/s 6.0 for S5.0). We attribute the latter to the onset delay (i.e., \(\sim \)200 ms latency) brought about by the more aggressive filtering applied in S0.8, indicating that filtering may not be the optimal method for envelope shaping, and that separate control over the rising and falling edge of the sound might be necessary in the algorithm. While these observed tendencies in the results should be interpreted with caution, they yield a set of interesting insights about the interaction design problem at hand. The participants’ overall agreement that S0.8 and S5.0 sounds followed their movements (i.e., agency) supports the notion that the wading simulation was movement-congruent in terms of spatial and temporal contiguity [70]. This, coupled with the high ratings of naturalness in both experiments, suggests that the movement and sound were also perceived as semantically congruent. This is further supported by how effectively the participants in Exp. 1 were able to distinguish between the actual walking speeds and depths based on sound alone.

Based on the results of Menzer et al. [71], one may have expected S0.8 to elicit considerably lower ratings of agency than S5.0 due to the delayed onset of sound relative to the start of the swing phase, but no such tendency was evident. This could be because Menzer et al. used delayed footstep sounds (impulsive events by nature) where temporal discrepancies are more easily perceptible than with gradual onset sounds such as wading [72, 73]. The negative correlations seen between disturbance and both naturalness and comfort ratings, while not directly indicative of causal relations, may point to the importance of sonic naturalness when designing an application for long-term use. One interpretation is that unnatural sounds are perceived as less semantically congruent with the movement, which in turn causes users to be less comfortable and find them more disturbing than natural sounds [18, 74]. This would also explain why the explicitly incongruent AS configuration elicited the lowest median comfort ratings.

Across conditions, the participants reported only minimal change in perceived walking speed and muscular effort during the feedback laps versus the baseline. Even though wading entails greater effort due to fluid drag forces [69], the participants carrying out the task also received contradictory information from other modalities (vision, touch, proprioception). Based on known mechanisms of intersensory bias [75], the auditory information was most likely suppressed in their overall perception of effort. This is, however, at odds with similar studies that have demonstrated changes in body and movement perception due to altered auditory feedback [10, 35]. A follow-up experiment with a larger sample is necessary to confirm this finding. Participants did, however, report some amount of perceived change in their walking over the baseline in each of the feedback conditions. The positive correlation between agency and perceived walking change ratings indicates that a strong feeling of agency is necessary in order for the digital simulation to actually affect walking characteristics, a notion well aligned with the existing literature [76, 77]. However, a limitation of the current work is that it did not include a detailed kinematic analysis of the participants’ gait during the various conditions. It is therefore not possible for us to draw conclusions on the precise nature of conscious or subsconscious kinematic changes resulting from the sounds. Although this was not the focus of the current work, this aspect is an important part of user experience and should be investigated in future studies.

In light of the findings from Experiments 1 and 2, we discerned multiple avenues to enhance our simulation. These improvements predominantly pertain to the amplitude envelope and the spectral evolution of the sound. This provided a clear direction for refining our algorithm in its final iteration, concentrating on enhancing its perceptual authenticity.

6 System modification

We undertook a final design iteration to improve the realism of the algorithm output. Our first focus was on integrating the kinematics of the shank, a critical factor in more faithfully representing the limb’s trajectory during the swing phase [78] to ensure tighter movement-sound correspondence. The second area identified for enhancement revolved around the falling edge of the sound, which, based on the experience of GroSS-Vogt et al. [49] needed to be less abrupt while maintaining the temporal integrity of the initial, rising phase of the sound (which was time-smeared by the aggressive filtering applied in S0.8). Thirdly, we sought to enrich our simulation with spectral changes to amplify the sounds associated with splashing and pronounced bubble generation, specifically at higher swing velocities so as to mimic the real-world variance in wading sounds with changes in velocity. Lastly, we decided to incorporate an additional sound layer to correspond with splashes resulting from displacing the liquid body. The resulting implementation underwent a final perceptual evaluation where it was compared with real wading sounds and the prior version of the algorithm at various walking cadences.

6.1 Modified architecture

We carried out the following technical upgrades during the development of the final version of the mapping algorithm:

-

Addition of shank sensors: Additional IMU sensors (identical to the thigh sensors) were added in order to measure the angular velocity trajectories of the shanks. The data underwent identical filtering and normalization to that of the thigh sensors.

-

Envelope followers: In order to make the falling edges of the normalized gyroscope signals more gradual while leaving the rising edges intact, we took inspiration from [49] and implemented envelope followers as described in [79, Chapter 12] and depicted in Fig. 6. The time constants TC, \(\tau _a\), \(\tau _b\), and output signal env[t] were calculated as follows:

$$\begin{aligned} TC = log (0.01) \end{aligned}$$(1)$$\begin{aligned} \tau _a = e^{TC / (attack \cdot f_s \cdot 0.001)} \end{aligned}$$(2)$$\begin{aligned} \tau _r = e^{TC / (release \cdot f_s \cdot 0.001)} \end{aligned}$$(3)$$\begin{aligned} env[t] = x[t] + \tau _a \cdot (env[t-1] - x[t]) \quad \qquad x[t] > env[t-1] \end{aligned}$$(4)$$\begin{aligned} env[t] = x[t] + \tau _r \cdot (env[t-1] - x[t]) \quad \qquad x[t] \le env[t-1] \end{aligned}$$(5) -

Pre-rendering of physical model output: So as to improve real-time efficiency, we decided to use a pre-rendered clip of the physical model output (steady-state bubbling) instead of synthesizing it in real-time. Although this is not the same as dynamically varying the bubble generation rate, we judged the perceptual difference between the two approaches to be negligible when layered with other broadband sounds.

-

Nonlinear shaping: To achieve the desired time-domain shape of the amplitude envelopes of the water sounds for optimal perceptual scaling of intensity, we implemented simple exponential functions as:

$$\begin{aligned} y = x^{k} \qquad \qquad \qquad \qquad x \in [0, 1]\qquad k \in [0.35, 6] \end{aligned}$$(6)Values of k > 1 led to exponential transfer function shapes, while k values < 1 led to logarithmic shapes.

-

ReaLearn for OSC mapping: In order to obtain greater flexibility and real-time control during the mapping design process, we integrated the REAPER extension ReaLearnFootnote 6 into the mapping workflow in conjunction with our custom software framework. Using ReaLearn, we were able to define multiple parallel parameter mappings using the same control data and experiment with and realize more complex configurations.

6.2 Upgraded algorithm

A general schematic of the final algorithm is shown in Fig. 7. As shown, the custom framework was configured to process the filtered angular velocity signals to yield three normalized control signals \(C_1\), \(C_2\), and \(C_3\) (0–1 range) that, using ReaLearn, were scaled and routed to audio processors applied to a set of relatively stationary audio clips corresponding to flowing, bubbling, and continuous splashing sounds. The overall principle was similar to the algorithms employed in previous experiments, but the mappings were more detailed and complex overall.

Sound generation and processing

The pre-rendered audio clips were placed on parallel REAPER track channels, looped, and crossfaded. Their fader (volume) controls were set at the threshold of silence (approx. -35 dBFS). As shown in Fig. 7, each grouped sound type (flowing, bubbling, splashing) was processed using the standard REAPER parametric equalizer ReaEQ for tone adjustment, following which the signals were gain-adjusted using the track fader controls and summed to a stereo signal, which also underwent equalization prior to final output. As shown, the wading simulation was achieved by manipulating the fader controls and equalization parameters in real-time.

Control signal generation

As shown on the left of Fig. 7, filtered gyroscope signals were normalized between 0 and 1 in a manner similar to Fig. 1. The normalization bounds for the positive half-cycle of the gyroscope signal were adjustable in real-time to tailor the sensitivity of the sound control. The control signals \(C_1\)–\(C_3\) were also constrained to a 0–1 range. \(C_1\) controlled the gain and spectral shape of two summed sound recordings of steady-state flowing. It was obtained by computing the weighted sum of the normalized angular velocities in the forward direction, followed by an envelope follower (attack = 0 ms, release = 890 ms, empirically chosen for immediate responsiveness but gradual sound decay) and nonlinear transformation (k = 1.93). \(C_1\) was then scaled and positively mapped to the gain of two parametric equalizer bands centred at 260 Hz and 9250 Hz (0 to +9 dB range, frequencies empirically chosen to emphasize bubbling and splashing respectively) as well as the fader control (-33 dB to 0 dB range) to control the overall volume of the composite sound. \(C_2\) controlled the gain of a steady-state bubbling sound clip generated by the Water physical modelling VST plugin. The normalized and summed shank angular velocities were processed by an envelope follower (attack = 0 ms, release = 2230 ms, empirically chosen for immediate responsiveness and natural-sounding decay) and nonlinear transformation (k = 2.56). \(C_2\) was subsequently scaled and positively mapped to the fader control of the corresponding audio track in REAPER (-38 to -5 dB range). \(C_3\), which controlled a sound clip of continuous light splashes, was generated in a similar fashion from the normalized shank angular velocities, which were passed through a 150 ms delay (as water splashes typically occur slightly after the initial movement excitation). Thereafter, it went through an envelope follower (attack = 0 ms, release = 890 ms, similar rationale to \(C_1\)) and transformation (k = 1, effectively a linear transfer function). Finally, it was scaled (-40dB to +5 dB range) and positively mapped to the audio track fader. The mapping preset and REAPER project files are available in the supplementary materials.

7 Experiment 3: final perceptual evaluation

The purpose of this evaluation was to experimentally compare the perceived naturalness/realism of the algorithm output (S5.0 tested in Experiment 2 = Algo V1, and final version = Algo V2) with real-life wading sounds at different walking cadences (steps/min) obtained from professional sound libraries. As the evaluation was planned as an online listening test, we anticipated that participants’ ratings might be affected by their individual levels of expertise in music and audio as well as the listening device they used when performing the test. As these differences could have implications for the real-life utility of the algorithm, we opted to study the overall effects of these individual variables on ratings of perceived realism. We hypothesized that:

-

H1: Algo V2 will receive higher ratings of perceived realism than Algo V2 at all cadences.

-

H2: There will be no difference in perceived realism betweenAlgo V2 and real wading sounds.

-

H3: Cadence will affect perceived realism (higher caden-ce perceived to sound less realistic) for Algo V1 but not Algo V2.

-

H4: Audio expertise, music expertise, and listening device type will affect perceived realism, with higher expertise and better listening equipment leading to lower ratings.

7.1 Stimulus generation

We carried out an extensive online search in royalty-free sound libraries such as Pixabay, Pond5, and FreeSound to obtain wading simulations at various walking cadences. Our criteria were: (1) high-fidelity, full-spectrum sounds with no audible background noise, (2) no background sounds in the recordings (flowing water, wind, etc.), (3) based on the text description and sound of the clip, the water body was not in motion (e.g., lake), (4) the wading gait had minimal instances of foot impact with the water surface (i.e., the foot remained below the water surface), and (5) the wading sounds at different cadences had comparable timbres. We obtained three clips from the Pond5 sound libraryFootnote 7 that satisfied the above criteria. We then imported the recordings into a REAPER session and ascertained the average walking cadence of each as — 27 steps/min (slow), 63 steps/min (medium), and 118 steps/min) (fast). Next, we recorded overground normal walking gait data (thigh and shank sensors) from author PRK at each cadence. The walker (a trained musician) synchronized his gait with a metronome at each respective tempo. We then “played” the gait data through the sound generation algorithm and recorded the output of Algo V1 and Algo V2. Next, we temporally aligned short excerpts of these clips with the respective real-life recordings and prepared a total of nine 10-s excerpts (3 walking cadences \(\times \) 3 sound types).

7.2 Participants

A convenience sample of 55 participants (38 men, 16 women, 1 preferred not to say) aged 44 ± 15.12 years (ranging from 22 to 83) were invited to participate via mailing lists and social media. The assessment was conducted anonymously, and no sensitive information was collected from the participants. The social media invitation mentioned the purpose of the research and the planned use of the data, specifying that proceeding to the survey implied that they provided their informed consent to participate.

7.3 Experimental procedure and outcomes

The evaluation was set up as a survey on Google Forms in a similar manner to Experiment 1. The participants were asked to rate on an 11-point scale the audio clips in terms of their perceived realism, i.e., the extent to which the sounds resembled those of walking through water in real life (0 = highly unrealistic, 10 = highly realistic). The clips were presented in random order and each clip was rated twice by each participant. Finally, the participants filled out basic information about age, gender, and self-reported expertise in music and audio as well as the listening device they used. The survey took a total of 5–10 min to complete.

7.4 Data analysis

The age and gender data were aggregated, the rating data were exported from Google Forms in a comma-separated format, rearranged, and statistically analyzed in SPSS 27.0. We started by calculating the median and upper/lower quartiles of the raw ratings for each sound type across cadences. We then checked all data for normality (Shapiro-Wilks test) and homogeneity of variance (Levene’s test); we found that neither condition was satisfied for the factor combinations. Therefore, we chose to adopt a nonparametric statistical analysis approach.

Within-participant analysis

To check for overall rating differences between the sound types, we found each participant’s median rating across the three cadences for every sound type and checked for an overall main effect of Sound Type (Real, Algo V1, Algo V2) using Friedman’s ANOVA tests. If significant effects were detected, we carried out pairwise comparisons using WSR tests. We then carried out a similar analysis for all nine-factor combinations (3 Sound Types \(\times \) 3 Cadences) after performing within-participant averaging of the two ratings collected for each clip. Planned pairwise comparisons corresponding to H1-3 were conducted using WSR tests.

Between-participant analysis

The participants were grouped based on their self-reported music expertise, audio expertise, and listening device type, and their median rating across factor combinations was computed. For each of these variables, we checked for main effects using Kruskal-Wallis tests followed by post-hoc pairwise Mann–Whitney U tests in case of significant differences.

A significance criterion \(\alpha \) = 0.05 was used for all statistical analyses. The reported p-values are those obtained post Holm-Bonferroni correction (p-values multiplied by correction factor). The SPSS datasets are provided in the supplementary materials.

Boxplots visualizing the results of the survey for all factor levels and outcomes. The ratings are clustered vertically based on cadence. The statistically significant differences between the sound types and cadences are separated horizontally. For each factor combination, the box represents the interquartile range (IQR), and the notch within the box represents the median. The whiskers indicate variability outside the IQR. The small circles represent potential outliers (> 1.5 IQR but \(<=\) 3 IQR above (below) the upper (lower) quartile. Significant differences are indicated by the asterisks between levels. *p < 0.05, **p < 0.01, ***p < 0.001

7.5 Results

7.5.1 Within-participant effects based on sound type and cadence

The Friedman’s ANOVA revealed a significant main effect of Sound Type across the cadence-aggregated ratings (\({\chi }^2(7)\) = 22.03, \(p<\) 0.001). Post-hoc WSR tests showed that the Real clips (median 7.0, IQR 5.0–\(-\)8.0) were rated to be significantly more realistic than both Algo V1 (median 5.0, IQR 3.0–\(-\)7.0) (Z = \(-\)4.412, p < 0.001) and Algo V2 (median 6.0, IQR 4.0–\(-\)8.0) (Z = \(-\)3.079, p = 0.004), thereby violating H2. Algo V2 was rated as significantly more realistic than Algo V1 (Z = \(-\)3.039, p = 0.002), supporting H1. We next examined the disparate effects of Cadence and Sound Type by appraising the differences between the nine-factor combinations (descriptive statistics in Table 2), which are depicted in Fig. 8. The Friedman’s ANOVA revealed a significant main effect (\({\chi }^2(7)\) = 108.01, \(p<\) 0.001) across combinations, and the post-hoc WSR results were as follows:

Effect of sound type

For medium and fast cadence, the Real clips were rated significantly more realistic than those corresponding to Algo V1 (medium: Z = \(-\)4.609, p < 0.001, fast: Z = \(-\)5.558, p < 0.001) as well as Algo V2 (medium: Z = \(-\)2.818, p = 0.039, fast: Z = \(-\)2.887, p = 0.035). Additionally, Algo V2 was rated as significantly more realistic than Algo V1 (medium: Z = \(-\)3.168, p = 0.015, fast: Z = \(-\)5.837, p < 0.001). No significant differences were detected between the sound types for the slow cadence clips. H2 was hence violated, while H1 was partially validated.

Effect of cadence

For Algo V1, the fast clips were rated significantly worse than both the slow clips (Z = \(-\)5.537, p < 0.001) and the medium clips (Z = \(-\)5.400, p < 0.001). Algo V2 performed more consistently across cadences than Algo V1, with no significant differences between the slow and medium clip ratings (Z = \(-\)1.946, p < 0.258) and a relatively small, albeit significant difference between the medium and fast clips (Z = \(-\)3.762, p = 0.002). Hence, H3 was partially validated. For the Real sound type, the medium cadence clips were rated as significantly more realistic than the slow clips (Z = \(-\)4.275, p < 0.001) as well as the fast clips (Z = \(-\)4.133, p < 0.001).

7.5.2 Between-participant effects based on audio expertise, music expertise, and listening device type

The distribution of participants in each category was as follows:

-

Music expertise: no expertise - 9/55, casual amateurs - 24/55, serious amateurs - 13/55, professionals - 9/55.

-

Audio expertise: no expertise - 12/55, casual amateurs - 22/55, serious amateurs - 8/55, professionals - 13/55.

-

Listening device: phone/tablet speakers - 15/55, laptop speakers - 12/55, consumer headphones/speakers - 16/55, professional headphones/speakers - 12/55.

The Kruskal-Wallis tests revealed no significant differences between the overall median ratings of participants grouped on the basis of audio expertise (H = 3.330, p = 0.344), music expertise (H = 1.440, p = 0.696), or listening device type (H = 1.739, p - 0.628), violating H4.

7.6 Interim discussion

We summarize the key findings from Experiment 3:

-

The added algorithmic complexity in Algo V2 improved the perceived realism ratings across cadences and reduced the stark inter-cadence differences seen for Algo V1 (H1 partially validated).

-

The final version of the algorithm (Algo V2) was unable to match real recordings in terms of perceived realism, although the median differences were not very large (1\(-\)1.5 scale points) (H2 not validated).

-

The perceived realism of the algorithmic sounds depended on the walking cadence, with the differences between the sound types becoming starker with increasing cadence (H3 validated).

-

There was a large degree of inter-rater variability, although this could not be conclusively attributed to self-reported music/audio expertise or the listening device used (H4 not validated).

Across sound types and cadences, the participants exhibited considerable variability in their rating behaviors. This was akin to the results of past liquid sound synthesis work [27, 52], and although we could not detect a measurable effect of any of the between-participant variables individually (likely due to the relatively small number of participants in each category), it is possible that they contributed to this result. Algo V2 was considerably more intricate than Algo V1, and the results of Expt. 3 reflected the greater level of realism it achieved, particularly at the faster cadences. As there were many changes made between versions, it is not possible to ascertain precisely how the various signal processing operations each contributed to improving the perceived realism, but we believe that it was the addition of the shank sensor combined with the time-domain amplitude shaping carried out by the envelope follower and nonlinear functions that made the bulk of the difference. The result aligns with existing theories on the importance of the transfer function between data and sound in achieving effective mappings [8, 59].

Despite the improvement between versions, Algo V2 did not succeed in eliciting an equal perception of realism to real wading sounds at the medium and high cadences, no doubt due to the limitations of the algorithm. Considering that the foot contributes substantially to gait [65, 80], it may significantly influence real-life wading sounds. Our algorithm is a cartoonification / simplification of the underlying physics [53] based on a crude approximation of the lower body into two linear segments - thigh and shank. The current version accounted neither for the foot contact with the water surface nor for lateral or backward leg movements while walking. The simulations were for fixed depths and did not take into account the height and weight of the walker. The water body was assumed to be static and not flowing, although the latter can often be the case in real life (e.g., when wading through a river). Ultimately, Algo V2 is a highly simplified approximation of the real-life sound that does not account for the majority of the nonlinearities and nuances associated with liquid sound generation [27, 50] or solid–liquid interaction [52]. Lastly, the stereo image remained static throughout, in contrast to the organically varying spatial signatures of real-life sounds [81]. As a result, it is likely that prolonged and varied interaction with the current algorithm will, to a greater or lesser extent, expose its inaccuracies to most users who have waded before.

The median ratings across cadences for Algo V1 (5.0/10) and Algo V2 (6.0/10) corresponded to neither extreme of realism nor unrealism. Considering that even the real recordings only received a median rating of 7.0/10, we inferred that participants perceived Algo V2 to be fairly realistic. It was indeed interesting to note the relatively low median rating given to the real recordings. This may have occurred due to the quality of the sound files chosen or, as noted in [8], a degree of inaccuracy in the participants’ mental image of a real wading sound. The stimuli were created from IMU recordings of normal overground walking, hence not accounting for spontaneous gait changes that may occur when a user simulates wading in an immersive virtual environment. For instance, the fast cadence may simply be an unrealistic gait pattern when walking in thigh-deep water. Lastly, the use of an online survey reduced the amount of control over listening environments and devices used, which may have added noise to the data and contributed to the variability observed in the ratings. The influence of the listening test paradigm may have also played a significant role in their perceptions as well, and it is plausible that they may have rated the sounds differently in an interactive setting due to the influence of the sensory feedback loop as well as multisensory integration [14, 55, 67, 70]. Due to the multitude of changes made between Algo V1 and Algo V2, it was not easy to pinpoint precisely how the various modifications (shank sensing, mapping topology, envelope following, nonlinear transform, sound layering) individually contributed to the observed difference in perceived realism between the versions. We did not ask participants to verbally describe their perceptions of the various sounds, which is a step that would have made it easier to interpret their rating behaviors.

8 General discussion

In this study, we developed a real-time gait sonification algorithm to simulate wading sounds with the aim of creating a perceptually robust starting point for specific applications. We evaluated it in terms of perceptual characteristics and interactive user experience. Ultimately, the basic premise of mapping angular velocity to the intensity-related parameters of steady-state flowing sounds proved capable of creating a fairly realistic simulation of real-life wading sounds that evoked various water depths and walking speeds. The layering of real recordings and physical models turned out to be a feasible approach for sound generation that balanced textural realism and computational complexity. Based on [4, Chapter 10] and the positive ratings of naturalness and agency obtained during Experiment 2, Algo V2 achieves a good balance between responsiveness and sonic accuracy despite its aforementioned limitations.

Concerning our evaluation protocols, we focused more on the perceptual qualities of the sound and conducted only one experiment in an interactive setting (none in the context of real-life applications like virtual reality or accessibility). We also ended up switching to a 7-point rating scale for Experiment 2 because we did not deem the higher resolution of an 11-point scale necessary in that context, but ultimately, we found that the resolution loss may have contributed to the lack of observed significant differences in that experiment. Therefore, we reverted to the 11-point scale for Experiment 3. Even so, the overall results of the study have several positive implications. We successfully showed how inexpensive gyroscopes can be used to capture limb angular velocity in a 2-D plane (in line with [66]). With some improvements, such a simulation may effectively reduce the work required to create movement-synchronized Foley sounds during film sound design. The basic principle of mapping limb velocity to the intensity of steady-state stochastic sounds can be generalized to the auditory simulation of wading through grass or even entirely different movement types that have rotational components. At the time of writing, we have successfully used a version of the simulation to provide intuitive and ecological feedback on swing phase kinematics to patients suffering from gait asymmetry in a separate study [82]. Given the relative simplicity of the underlying algorithm, there is considerable potential for plausibly simulating complex real-world acoustic interactions on low-power embedded systems in various sonic interaction paradigms.

As far as the design approach was concerned, we were able to use our distributed software framework to undertake extensive real-time sonic experimentation, much like how a music producer experiments with mixer settings to achieve a desired sound. Real-time tweaking of data-sound mappings is known to be an important characteristic of design platforms for sonification [15, 83], and the ability to tweak the normalization parameters, envelope follower time constants, and exponential coefficients of Algo V2 on the fly certainly accelerated the design process. REAPER and ReaLearn afforded us similar flexibility in the audio signal domain. It is worth highlighting the crucial importance of the mapping layer in sculpting realistic-sounding envelope shapes for this form of sonic interaction. Inspired by similar work with other movement types [61, 64], gyroscope-measured thigh and shank angular velocity proved effective as a means to capture the continuously varying nuances of the swing phase as far as wading goes. In terms of our design philosophy, we were successfully able to transfer Foley sound principles [8, 14, 40, 55] to the digital domain within the context of our application, which allowed us to acoustically model a complex real-world sonic phenomenon using a relatively simple set of mappings of angular velocity data to audio envelopes to obtain a fairly realistic output. As our design decisions were primarily guided by our past wading experiences (first-person design perspective [84]), we believe that our design process may have benefited from firsthand sound-focused experimentation with wading in water over the course of the study. But overall, we believe that by focusing on the swing phase, our work serves as an interesting complement to existing research on footstep-centric sonic interaction design [17,18,19].

The next steps will be to apply the simulation in a range of real-life application domains. Our immediate focus will be to test Algo V2 in an interactive setting, perhaps a virtual environment that also provides compelling visual feedback. When using it in conjunction with visual feedback, the key challenge will be that of achieving good correspondence between the modalities [70] so as to maximize the authenticity of the overall audiovisual experience [28]. Testing in the contexts of meditation [37], accessibility [38], and virtual training [39] should also be conducted. Finally, it would be highly interesting to study the effects of the real-time simulations on gait quality as well as user perceptions of movement, especially given the documented effects of manipulating footstep sound characteristics [18, 26, 35].

9 Conclusion

In this study, we successfully developed a real-time simulation of fairly realistic wading sounds that we deem a worthy starting point for use in specific applications. Despite only being an approximation of the natural processes involved in wading sound generation, our digital Foley approach proved effective and the resulting algorithm was usable in an interactive setting. Moreover, the technique of modulating environmental textures based on velocity contours can be applied to simulate other terrains, such as grass. Our future work will involve improving the model and conducting comprehensive testing in interactive use cases, particularly in the context of gait rehabilitation and virtual reality. Overall, we believe this research contributes to the advancement of walking-based sonic interactions across various domains, paving the way for the broader adoption of these systems in the years to come.

10 Supplementary information