Abstract

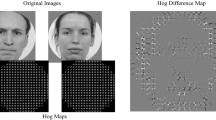

Under natural viewing conditions, human observers selectively allocate their attention to subsets of the visual input. Because overt allocation of attention appears as eye movements, the mechanism of selective attention can be investigated through computational studies of eye-movement prediction. Top-down attentional control in a task is expected to modulate eye movements significantly, so models that take a bottom-up approach based on low-level local properties are not expected to suffice for prediction. In this study, we introduce two representative models, apply them to a facial discrimination task with morphed face images, and evaluate their performance by comparing their predictions with human eye-movement data. The results show that the models predict eye movements poorly in this task.

Additional information

This work was presented in part at the 14th International Symposium on Artificial Life and Robotics, Oita, Japan, February 5–7, 2009

About this article

Cite this article

Nishida, S., Shibata, T. & Ikeda, K. Prediction of human eye movements in facial discrimination tasks. Artif Life Robotics 14, 348–351 (2009). https://doi.org/10.1007/s10015-009-0679-9

DOI: https://doi.org/10.1007/s10015-009-0679-9