Abstract

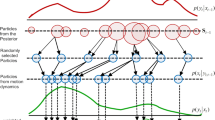

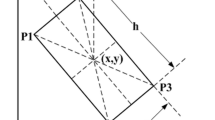

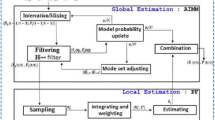

Recently, the particle filter has been applied to many visual tracking problems, and it has been modified in order to reduce computation time or memory usage. One such modification is the Mean-Shift embedded particle filter (MSEPF), which was further extended to the Randomized MSEPF. These methods can reduce the number of particles without loss of tracking accuracy. However, the accuracy may depend on the definitions of the likelihood function (observation model) and of the prediction model. In this paper, the authors propose an extension of these models in order to increase tracking accuracy. Furthermore, the expansion resetting method, originally proposed for mobile robot localization, and changing the size of the Mean-Shift search window are applied selectively in order to handle occlusion or rapid changes in movement.
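The abstract combines several ingredients: a prediction model, a Mean-Shift search applied to each particle, likelihood-based weighting, and expansion resetting with an enlarged search window that is used only when tracking degrades. The Python sketch below strings these together on a 1-D toy problem to show how they interact. It is not the authors' multimodal MSEPF. The triangular `likelihood` (standing in for a colour-histogram similarity), the random-walk prediction, the use of mean likelihood below `reset_threshold` as the reset trigger, all parameter values, and the function names `mean_shift` and `msepf_step` are illustrative assumptions.

```python
import math
import random


def likelihood(x, target, radius=6.0):
    """Toy observation model (assumption): a triangular similarity that is
    1 at the target and falls to 0 at `radius` pixels away. A real tracker
    would compare colour histograms instead."""
    return max(0.0, 1.0 - abs(x - target) / radius)


def mean_shift(x, target, half_window, max_iter=20, tol=1e-3):
    """Move x to the likelihood-weighted mean of the integer pixel positions
    inside [x - half_window, x + half_window] until it stops moving."""
    for _ in range(max_iter):
        pts = range(math.ceil(x - half_window), math.floor(x + half_window) + 1)
        w = [likelihood(p, target) for p in pts]
        total = sum(w)
        if total == 0.0:
            break  # no observation support inside the window: stay put
        new_x = sum(p * wi for p, wi in zip(pts, w)) / total
        if abs(new_x - x) < tol:
            return new_x
        x = new_x
    return x


def msepf_step(particles, target, rng, motion_std=3.0, half_window=5.0,
               reset_threshold=0.05, expand_std=15.0, wide_window=20.0):
    """One filtering step; returns (particles, estimate or None, reset flag)."""
    # Prediction model (assumed): Gaussian random walk.
    predicted = [p + rng.gauss(0.0, motion_std) for p in particles]
    # Mean-Shift each predicted particle, then weight it by the likelihood.
    shifted = [mean_shift(p, target, half_window) for p in predicted]
    weights = [likelihood(p, target) for p in shifted]
    # Low average likelihood is taken as a sign of lost track (assumed trigger).
    reset = sum(weights) / len(weights) < reset_threshold
    if reset:
        # Expansion resetting: scatter the particles more widely, then search
        # again with an enlarged Mean-Shift window.
        expanded = [p + rng.gauss(0.0, expand_std) for p in predicted]
        shifted = [mean_shift(p, target, wide_window) for p in expanded]
        weights = [likelihood(p, target) for p in shifted]
    total = sum(weights)
    if total == 0.0:
        return shifted, None, reset  # still lost; keep particles as they are
    estimate = sum(p * w for p, w in zip(shifted, weights)) / total
    # Multinomial resampling in proportion to the weights.
    resampled = rng.choices(shifted, weights=weights, k=len(shifted))
    return resampled, estimate, reset


if __name__ == "__main__":
    rng = random.Random(0)
    particles = [0.0] * 200
    for target in [0.0, 0.0, 0.0, 40.0, 40.0]:  # abrupt jump at step 4
        particles, estimate, reset = msepf_step(particles, target, rng)
        print(f"target={target:5.1f} estimate={estimate} reset={reset}")
```

In the demo the target sits at 0 and then jumps to 40, a crude stand-in for rapid motion. With the small window no particle can see the new position, so the reset branch should fire on the jump step; the widely scattered particles, searched with the enlarged window, then lock back on, and later steps return to the cheap small-window mode. Keeping the expensive branch conditional mirrors the abstract's point that resetting and window resizing are applied only selectively.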

References

Kitagawa G (1996) Monte–Carlo filter and smoother for non-Gaussian nonlinear state space models. J Comput Graphical Stat 5(1):1–25

Gordon NJ, Salmond D, Smith AFM (1993) Novel approach to nonlinear/non-Gaussian Bayesian state estimation. IEE Proc F 140(2):107–113

Isard M, Blake A (1998) CONDENSATION: conditional density propagation for visual tracking. Int J Comput Vis 29(1):5–28

Shan C, Wei Y, Tan T, Ojardias F (2004) Real time hand tracking by combining particle filter and mean shift. In: Proceedings of 6th IEEE international conference on automatic face and gesture recognition, pp 669–674

Nakagama Y, Yokomichi M (2011) An improvement of MSEPF for visual tracking. Int J Artif Life Robot 15(4):534–538

Boers Y, Driessen JN (2003) Interacting multiple model particle filter. IEE Proc Radar Sonar Navig 150(5):344–349

Wang J, Zhao D, Gao W, Shan S (2004) Interacting multiple model particle filter to adaptive visual tracking. In: Proceedings of international conference on image and graphics, pp 568–571

Ueda R, Arai T, Sakamoto K, Kikuchi T, Kamiya S (2004) Expansion resetting for recovery from fatal error in Monte Carlo localization—comparison with sensor resetting methods. In: Proceedings of International Conference on Intelligent Robots and Systems (IROS) 2004, vol 3, pp 2481–2486

Yang C et al (2005) Efficient Mean-Shift tracking via a new similarity measure. In: Proceedings of IEEE computer society conference on computer vision and pattern recognition (CVPR’05), vol 1, pp 176–183

About this article

Cite this article

Yokomichi, M., Nakagama, Y. Multimodal MSEPF for visual tracking. Artif Life Robotics 17, 257–262 (2012). https://doi.org/10.1007/s10015-012-0050-4

DOI: https://doi.org/10.1007/s10015-012-0050-4