Abstract

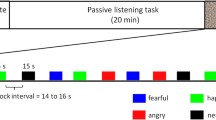

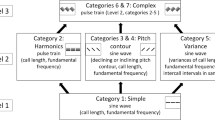

Emotions play an important role in human communication. We conducted a study to examine how emotion carried in language sounds affects brain function. The sounds of Japanese sentences spoken with and without emotion were reversed to eliminate any semantic influence on the subjects’ emotional perception. Three sets of sentences, spoken in a non-emotional tone and in happy, sad, and angry emotional tones, were recorded and reversed. The brain activity of 20 native Japanese speakers in their twenties was monitored by near-infrared spectroscopy (NIRS) while they listened to the reversed Japanese sounds with and without emotion. Our analysis showed that almost all the brain areas monitored by the NIRS probes were more strongly activated when the subjects listened to emotional language sounds than when they listened to non-emotional sounds. In particular, the frontopolar cortex, which is associated with short-term memory, was significantly activated. Since short-term memory is known to provide important information for communication, these results suggest that the emotional aspects of language sounds are essential for successful communication and should therefore be implemented in human–robot communication systems.

Cite this article

Mohd Anuardi, M.N.A., Yamazaki, A.K. Effects of emotionally induced language sounds on brain activations for communication. Artif Life Robotics 24, 312–317 (2019). https://doi.org/10.1007/s10015-019-00529-x
