Abstract

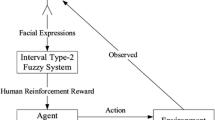

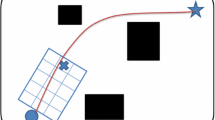

Although the design of the reward function is important in reinforcement learning, it is difficult to design one that adapts to a variety of environments and tasks. We therefore propose a method that autonomously generates rewards from sensor values, enabling task- and environment-independent reward design. In this approach, environmental hazards are recognized by evaluating sensor values, and the evaluation used for learning is obtained by integrating all sensor evaluations that indicate danger. Prior studies integrated sensor evaluations using weighted averages, but this approach does not reflect the increased danger that arises when more sensor evaluations indicate danger. Instead, we propose integrating sensor evaluations using a logarithmic transformation. In a path-learning experiment, the proposed method was evaluated by comparing the rewards it generated with those obtained from manual reward setting and from prior approaches.
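The abstract's argument against weighted averaging can be illustrated with a small sketch. It assumes each sensor evaluation is a danger score in [0, 1) and uses the sum of -log(1 - e_i) as a stand-in logarithmic integration. That formula, the function names `weighted_average` and `log_integration`, and the uniform weights are illustrative assumptions, not the paper's published definitions.

```python
import math
from typing import Sequence


def weighted_average(evals: Sequence[float], weights: Sequence[float]) -> float:
    """Integrate sensor evaluations with a weighted average (prior-work style).

    The result never exceeds the largest single evaluation, so adding more
    sensors that report the same danger level does not raise it.
    """
    total_weight = sum(weights)
    return sum(w * e for w, e in zip(weights, evals)) / total_weight


def log_integration(evals: Sequence[float], eps: float = 1e-9) -> float:
    """Hypothetical log-based integration: sum of -log(1 - e_i).

    Assumption: each e_i is a danger score in [0, 1). Every term is
    non-negative, so each additional sensor indicating danger can only
    increase the integrated value. `eps` guards against log(0) when e_i == 1.
    """
    return sum(-math.log(max(1.0 - e, eps)) for e in evals)


if __name__ == "__main__":
    # Compare both integrations as more sensors report the same danger level.
    for n in (1, 2, 4):
        evals = [0.8] * n
        uniform_weights = [1.0] * n
        print(n, weighted_average(evals, uniform_weights), log_integration(evals))
```

With every sensor reporting 0.8, the weighted average stays at 0.8 no matter how many sensors agree, while the log-based sum grows with the number of sensors indicating danger, which is the qualitative behavior the abstract motivates.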

Additional information

Yuya Ono is the presenter of this paper.

This work was submitted and accepted for the Journal Track of the joint symposium of the 28th International Symposium on Artificial Life and Robotics, the 8th International Symposium on BioComplexity, and the 6th International Symposium on Swarm Behavior and Bio-Inspired Robotics (Beppu, Oita, January 25–27, 2023).

About this article

Cite this article

Ono, Y., Kurashige, K., Hakim, A.A.B.M.N. et al. Self-generation of reward by logarithmic transformation of multiple sensor evaluations. Artif Life Robotics 28, 287–294 (2023). https://doi.org/10.1007/s10015-023-00855-1

DOI: https://doi.org/10.1007/s10015-023-00855-1