Abstract

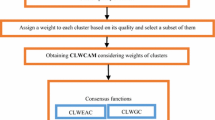

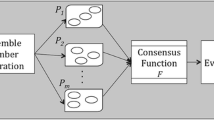

Each clustering algorithm usually optimizes a qualification metric during its learning process. The qualification metric in traditional clustering algorithms considers all the features of under-consideration dataset equally; it means each feature participates in the clustering process equivalently. Considering that some features have more information than the others in a dataset (due to their lower information or their higher variances, etc.), we proposes a fuzzy weighted clustering algorithm. We name this new clustering algorithm, Fuzzy Weighted Locally Adaptive Clustering (FWLAC) algorithm. The proposed FWLAC algorithm is capable of handling imbalanced clustering. However, FWLAC algorithm suffers from its sensitivity to the two parameters that should be tuned manually. The performance of FWLAC algorithm is affected by well-tuning of its parameters. So the paper proposes two solutions to well-tuning of its two parameters. In the first solution, we propose a simple clustering ensemble framework to show the sensitivity of the WLAC algorithm to its manual well-tuning. Although it is not a try-and-error procedure, it is like a grid search, where we use different pairs of values for both parameters h 1 and h 2. Per each pair of values for parameters h 1 and h 2, the algorithm produces a partitioning. So after the grid search, we obtain a large number of partitionings. We break any of the partitionings into its clusters, and then they form an ensemble of clusters. Finally the consensus partitioning is extracted from them by a consensus function. The algorithm is not data dependent at all. For all datasets, we use a similar grid search and a similar set of values for the parameters h 1 and h 2. In this way we have proposed an alternative solution to parameter selection. We use a selection phase to select/remove some clusters from our ensemble of clusters to obtain an elite ensemble of clusters. To do this, a stability measure, normalized mutual information (NMI), was used to validate a cluster. The paper shows the effectiveness of the proposed clustering frameworks both theoretically and experimentally.

Similar content being viewed by others

References

Agrawal R, Gehrke J, Gunopulos D, Raghavan P (1998) Automatic subspace clustering of high dimensional data for data mining applications. In: Proceedings of the 1998 ACM SIGMOD international conference on management of data, pp 94–10

Alizadeh H, Parvin H, Parvin S (2012) A framework for cluster ensemble based on a max metric as cluster evaluator. IAENG Int J Comp Sci 363(39):1

Alizadeh H, Minaei-Bidgoli B, Parvin H (2011) A new criterion for clusters validation. Artif Intell Appl Innov 364:240–246

Alizadeh H, Minaei-Bidgoli B, Parvin H (2013a) Optimizing fuzzy cluster ensemble in string representation. Int J Pattern Recognit. doi:10.1142/S0218001413500055

Alizadeh H, Minaei-Bidgoli B, Parvin H (2013b) To improve the quality of cluster ensembles by selecting a subset of base clusters. J Exp Theor Artif Intell. doi:10.1080/0952813X.2013.813974

Alizadeh H, Minaei-Bidgoli B, Parvin H (2014) Cluster ensemble selection based on a new cluster stability measure. Intell Data Anal 18(3):1–19

Alizadeh H, Minaei-Bidgoli B, Parvin H, Moshki M (2011b) An asymmetric criterion for cluster validation. Dev Concepts Appl Intell Stud Comput Intell 363:1–14

Blum A, Rivest R (1992) Training a 3-node neural network is NP-complete. Neural Netw 5:117–127

Chang JW, Jin DS (2002) A new cell-based clustering method for large-high dimensional data in data mining applications. In: Proceedings of the ACM symposium on applied computing, pp 503–507

Cheng CH, Fu AW, Zhang Y (1999) Entropy-based subspace clustering for mining numerical data. In: Proceedings of the fifth ACM SIGKDD international conference on knowledge discovery and data mining, pp 84–93

Domeniconi C, Al-Razgan M (2009) Weighted cluster ensembles: methods and analysis. ACM Trans Knowl Discov Data 10:1145/1460797.1460800

Domeniconi C, Gunopulos D, Ma S, Yan B, Al-Razgan M, Papadopoulos D (2007) Locally adaptive metrics for clustering high dimensional data. Data Min Knowl Discov 14:63–97

Duda RO, Hart PE, Stork DG (2000) Pattern classification. Wiley, London

Dudoit S, Fridlyand J (2003) Bagging to improve the accuracy of a clustering procedure. Bioinformatics 19(9):1090–1099

Faceli K, Marcilio CP, Souto D (2006) Multi-objective clustering ensemble. In: Proceedings of the sixth international conference on hybrid intelligent systems (HIS’06)

Fred A (2001) Finding consistent clusters in data partitions. Second international workshop multiple classifier systems, pp 309–318

Fred A, Jain AK (2002a) Data clustering using evidence accumulation. In: Proceedings of the 16th international conference on pattern recognition, pp 276–280

Fred A, Jain AK (2002b) Evidence accumulation clustering based on the k-means algorithm. Structural, syntactic, and statistical pattern recognition, joint IAPR international workshops, pp 442–451

Fred A, Jain AK (2005) Combining multiple clusterings using evidence accumulation. IEEE Trans Pattern Anal Mach Intell 27:835–850

Fern XZ, Lin W (2008) Cluster ensemble selection. SIAM International Conference on Data Mining, pp 128–141

Jain AK, Dubes RC (1988) Algorithms for clustering data. Prentice Hall, Englewood Cliffs, NJ

Kohavi R, John RG (1997) Wrappers for feature subset selection. Artif Intell 97:273–324

Liu B, Xia Y, Yu PS (2000) Clustering through decision tree construction. In: Proceedings of the ninth international conference on information and knowledge management, pp 20–29

Miller R, Yang Y (1997) Association rules over interval data. In: Proceedings of ACM SIGMOD international conference on management of data, pp 452–461

Mirzaei A, Rahmati M, Ahmadi M (2008) A new method for hierarchical clustering combination. Intell Data Anal 12:549–571

Minaei-Bidgoli B, Parvin H, Alinejad H, Alizadeh H, Punch W (2011) Effects of resampling method and adaptation on clustering ensemble efficacy. Artif Intell Rev Int Scie Eng J. doi:10.1007/s10462-011-9295-x

Munkres J (1957) Algorithms for the assignment and transportation problems. J Soc Ind Appl Math 5:32–38

Newman CBDJ, Hettich S, Merz C (1998) UCI repository of machine learning databases. http://www.ics.uci.edu/~mlearn/MLSummary.html

Parsons L, Haque E, Liu H (2004) Subspace clustering for high dimensional data: a review. ACM SIGKDD Explor Newsl 6:90–105

Parvin H, Beigi A, Mozayani N (2012) A clustering ensemble learning method based on the ant colony clustering algorithm. Int J Appl Comput Math 11:286–302

Parvin H, Minaei-Bidgoli B, Parvin S, Alinejad H (2012b) A New Classifier ensemble methodology based on subspace learning. J Exp Theor Artif Intell. doi:10.1080/0952813X.2012.715683

Parvin H, Minaei-Bidgoli B, Alinejad H (2013) Data weighing mechanisms for clustering ensembles. Comput Electr Eng. http://dx.doi.org/10.1016/j.compeleceng.2013.02.004

Procopiuc CM, Jones M, Agarwal PK, Murali TM (2002) A Monte Carlo algorithm for fast projective clustering. In: Proceedings of the ACM SIGMOD conference on management of data, pp 418–427

Srikant R, Agrawal R (1996) Mining quantitative association rules in large relational tables. In: Proceedings of the ACM SIGMOD conference on management of data

Strehl A, Ghosh J (2002) Cluster ensembles-a knowledge reuse framework for combining multiple partitions. J Mach Learn Res 3:583–617

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Parvin, H., Minaei-Bidgoli, B. A clustering ensemble framework based on selection of fuzzy weighted clusters in a locally adaptive clustering algorithm. Pattern Anal Applic 18, 87–112 (2015). https://doi.org/10.1007/s10044-013-0364-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10044-013-0364-4