Abstract

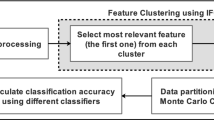

With the continuous development of Internet technology, data are becoming increasingly complex and high-dimensional. High-dimensional data contain many redundant and irrelevant features, which pose great challenges to existing machine learning algorithms. Feature selection is an important research topic in machine learning, pattern recognition and data mining, and an important step in data preprocessing. Its goal is to find the optimal feature subset of the original feature set, which improves classification accuracy and reduces learning time. Traditional feature selection algorithms tend to ignore features that have weak discriminative power individually but strong discriminative power as a group. Therefore, this paper proposes a new dynamic interaction feature selection (DIFS) algorithm. First, within the theoretical framework of interaction information, it redefines the relevance, irrelevance and redundancy of features. Second, it gives the formulas for computing interaction information. Finally, on eleven UCI data sets and with three different classifiers (KNN, SVM and C4.5), the DIFS algorithm improves on the classification accuracy of the FullSet by 3.2848% and decreases the number of selected features by 15.137 on average. Hence, the DIFS algorithm can effectively identify not only relevant features but also irrelevant and redundant ones, improving classification accuracy while reducing the feature dimension of the data sets.
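The kind of feature the abstract describes, weak alone but strong in a group, can be illustrated with a small sketch. It uses the standard textbook definition of interaction gain, I(f1, f2; C) - I(f1; C) - I(f2; C), which is an assumption here: the abstract does not state the DIFS formulas, so this is not the paper's method. The function names and the XOR toy data are hypothetical.

```python
from collections import Counter
from math import log2


def entropy(*columns):
    """Joint Shannon entropy (in bits) of one or more discrete columns."""
    joint = list(zip(*columns))
    n = len(joint)
    return -sum((count / n) * log2(count / n) for count in Counter(joint).values())


def mutual_information(xs, ys):
    """I(X; Y) = H(X) + H(Y) - H(X, Y), where xs and ys are lists of columns."""
    return entropy(*xs) + entropy(*ys) - entropy(*xs, *ys)


def interaction_gain(f1, f2, label):
    """Textbook interaction gain (an assumption, not the DIFS formula):
    I(f1, f2; C) - I(f1; C) - I(f2; C). Positive values indicate synergy."""
    return (mutual_information([f1, f2], [label])
            - mutual_information([f1], [label])
            - mutual_information([f2], [label]))


# Toy data: the class label is the XOR of two binary features.
f1 = [0, 0, 1, 1]
f2 = [0, 1, 0, 1]
label = [a ^ b for a, b in zip(f1, f2)]

mi_f1 = mutual_information([f1], [label])        # each feature alone
mi_f2 = mutual_information([f2], [label])
mi_pair = mutual_information([f1, f2], [label])  # both features together
gain = interaction_gain(f1, f2, label)

print(f"I(f1;C)={mi_f1:.3f}  I(f2;C)={mi_f2:.3f}  "
      f"I(f1,f2;C)={mi_pair:.3f}  gain={gain:.3f}")
```

In this toy case each feature alone carries no information about the class, while the pair determines it completely. A criterion that scores features one at a time would therefore rank both as irrelevant.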

Acknowledgements

The experiments use data sets from the well-known UCI Machine Learning Repository (https://archive.ics.uci.edu/ml/datasets.html).

Funding

This work is supported by the National Science and Technology Basic Work Special Project of China under Grant 2015FY111700-6.

Author information

Contributions

The author wrote the manuscript and read and approved the final version.

Ethics declarations

Conflict of interest

The author declares that he has no competing interests.

Ethics approval and consent to participate

This study does not involve any ethical issues.

About this article

Cite this article

Li, Z. A new feature selection using dynamic interaction. Pattern Anal Applic 24, 203–215 (2021). https://doi.org/10.1007/s10044-020-00916-2

DOI: https://doi.org/10.1007/s10044-020-00916-2