Abstract

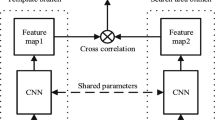

Many Siamese tracking algorithms attempt to enhance the target representation through target awareness. However, their tracking results are often disturbed by target-like background. In this paper, we propose a multi-view confidence-aware method for adaptive Siamese tracking. First, a shrink-enhancement loss is designed to select channel features that are more sensitive to the target. It reduces the effect of easy background negative samples and enhances the contribution of hard background negative samples, thereby balancing the sample data. Second, to improve the reliability of the confidence map, a multi-view confidence-aware method is constructed. It integrates the response maps of the template, foreground, and background through a Multi-view Confidence Guide to highlight target features and suppress background interference, yielding a more discriminative target response map. Finally, to better accommodate varying tracking scenarios, we design a state estimation criterion for the tracking results and adaptively update the template. Experimental results on six benchmark datasets, OTB-2015, TC-128, UAV-123, DTB, VOT2016, and VOT2019, show that the proposed tracker performs well.
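
The abstract describes the three components only at a high level, so the following is not the authors' method but a minimal NumPy sketch under stated assumptions. The shrinkage loss of Lu et al. stands in for the shrink-enhancement loss, whose exact form is not given here. The average peak-to-correlation energy (APCE) stands in for the confidence measure. `fuse_responses` and `TemplateUpdater` are hypothetical names for a confidence-weighted fusion of the template, foreground, and background response maps and for a confidence-gated template update.

```python
import numpy as np


def shrinkage_loss(pred, label, a=10.0, c=0.2):
    """Shrinkage loss (Lu et al.), used here only as a stand-in.

    l = |pred - label|. The factor 1 / (1 + exp(a * (c - l))) is small for
    easy samples (small l) and close to 1 for hard samples, so easy
    negatives are suppressed while hard negatives keep almost full L2 weight.
    a and c are assumed defaults, not values taken from this paper.
    """
    l = np.abs(np.asarray(pred, dtype=float) - np.asarray(label, dtype=float))
    return float(np.mean(l ** 2 / (1.0 + np.exp(a * (c - l)))))


def apce(response, eps=1e-12):
    """Average peak-to-correlation energy: high for one sharp peak, lower
    when the map has several peaks (e.g. target-like distractors)."""
    f_max, f_min = response.max(), response.min()
    return float((f_max - f_min) ** 2 / (np.mean((response - f_min) ** 2) + eps))


def fuse_responses(r_template, r_fg, r_bg, eps=1e-12):
    """Hypothetical multi-view fusion: weight each view by its normalized APCE,
    add template and foreground evidence, subtract background evidence."""
    w = np.array([apce(r_template), apce(r_fg), apce(r_bg)])
    w = w / (w.sum() + eps)
    fused = w[0] * r_template + w[1] * r_fg - w[2] * r_bg
    return np.clip(fused, 0.0, None)  # keep the fused confidence map non-negative


class TemplateUpdater:
    """Hypothetical state-estimation criterion: the template is updated only
    when the current peak and APCE both reach `ratio` times their running
    means over previously accepted frames."""

    def __init__(self, template, lr=0.01, ratio=0.6):
        self.template = np.array(template, dtype=float)
        self.lr, self.ratio = lr, ratio
        self.peaks, self.apces = [], []

    def step(self, response, new_template):
        peak, conf = float(response.max()), apce(response)
        reliable = bool(
            not self.peaks
            or (peak >= self.ratio * np.mean(self.peaks)
                and conf >= self.ratio * np.mean(self.apces))
        )
        if reliable:
            # Linear interpolation keeps most of the old appearance model.
            new = np.asarray(new_template, dtype=float)
            self.template = (1 - self.lr) * self.template + self.lr * new
            self.peaks.append(peak)
            self.apces.append(conf)
        return reliable
```

Gating the update on both the peak value and APCE relative to their running means is one common way to avoid absorbing a distractor into the template; `lr` and `ratio` are illustrative values, not tuned ones.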

Data availability

All data generated or analyzed during this study are included in this published article [and its supplementary information files].

Funding

National Natural Science Foundation of China (Grant Nos. 62273243, 62072416, 62102373, 62006213); Science and Technology Innovation Talents in Universities of Henan Province (Grant No. 21HASTIT028); Natural Science Foundation of Henan Province (Grant No. 202300410495); and Zhongyuan Science and Technology Innovation Leadership Program (Grant No. 214200510026).

Ethics declarations

Conflict of interest

We declare that we have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, H., Ma, Z., Zhang, J. et al. Multi-view confidence-aware method for adaptive Siamese tracking with shrink-enhancement loss. Pattern Anal Applic 26, 1407–1424 (2023). https://doi.org/10.1007/s10044-023-01169-5
