Abstract

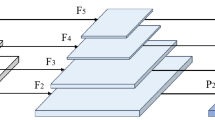

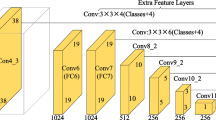

To improve the performance of object detection algorithms, this paper proposes a segmentation attention feature pyramid network (SAFPN) that addresses the loss of semantic information. Unlike prior work, SAFPN discards the original \(1\times 1\) convolutions and instead reduces the feature dimension through a segmentation-and-accumulation architecture, thereby fully preserving the semantic information of high-dimensional features. To capture fine-grained semantic details, it integrates channel attention and spatial attention mechanisms that strengthen the network's focus on important information. Extensive experiments on multiple public datasets show that SAFPN achieves favorable results and improves object detection performance.
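The abstract does not specify the exact layer layout, so the following is only a minimal NumPy sketch of one plausible reading of the two ideas above: reducing channels by splitting them into segments and summing, instead of projecting with a \(1\times 1\) convolution, and then re-weighting the result along the channel and spatial axes. The function names `segment_accumulate` and `channel_spatial_gate`, the equal-sized segments, and the parameter-free sigmoid gating are all assumptions made for illustration; they are not taken from the paper, whose attention modules presumably contain learned weights.

```python
import numpy as np


def segment_accumulate(x, out_channels):
    """Reduce channels by splitting them into equal segments and summing.

    x has shape (C, H, W). C must be a multiple of out_channels.
    No weights are involved, so no input channel is discarded or mixed
    through a learned projection (assumed reading of the abstract).
    """
    c, h, w = x.shape
    if c % out_channels != 0:
        raise ValueError("C must be divisible by out_channels")
    groups = c // out_channels
    # Segment g holds channels [g * out_channels, (g + 1) * out_channels).
    segments = x.reshape(groups, out_channels, h, w)
    # Accumulate: output channel k is the sum of channel k of every segment.
    return segments.sum(axis=0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_spatial_gate(x):
    """Hypothetical, parameter-free stand-in for channel + spatial attention."""
    # Channel gate: one weight per channel from global average pooling.
    channel_weights = sigmoid(x.mean(axis=(1, 2), keepdims=True))  # (C, 1, 1)
    x = x * channel_weights
    # Spatial gate: one weight per location from the channel-wise mean.
    spatial_weights = sigmoid(x.mean(axis=0, keepdims=True))  # (1, H, W)
    return x * spatial_weights


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    high_dim = rng.standard_normal((2048, 8, 8))   # e.g. a deep backbone stage
    reduced = segment_accumulate(high_dim, 256)    # 8 segments of 256 channels
    refined = channel_spatial_gate(reduced)
    print(reduced.shape, refined.shape)
```

Under these assumptions the reduction step has no parameters, so every input channel contributes to exactly one output channel with unit weight; a \(1\times 1\) convolution would instead learn a dense mixing matrix.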

Data availability statements

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Funding

This work was supported by the National Key R&D Program of China (Grant number 2017YFB1302400).

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection, and analysis were performed by Gaihua Wang, Nengyuan Wang, and Hong Liu. The first draft of the manuscript was written by Qi Li, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Consent to publish

The authors affirm that informed consent was obtained from all participants.

Competing interests

The authors have no relevant financial or non-financial interests to disclose.

Research involving human participants and/or animals

Not applicable.

Informed consent

Not applicable.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, G., Li, Q., Wang, N. et al. SAFPN: a full semantic feature pyramid network for object detection. Pattern Anal Applic 26, 1729–1739 (2023). https://doi.org/10.1007/s10044-023-01200-9

DOI: https://doi.org/10.1007/s10044-023-01200-9