Abstract

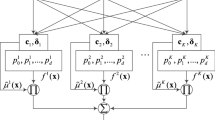

Fuzzy broad learning system (FBLS) is a recently proposed fuzzy system that introduces the Takagi–Sugeno fuzzy model into the broad learning system. It has been shown that FBLS has better nonlinear fitting ability and faster computation than most earlier fuzzy neural networks. Compared with other fuzzy neural networks, FBLS also uses fewer rules and requires less training time. However, erroneous or missing labels are common in large-scale datasets and can greatly reduce the performance of FBLS. How to train a powerful classifier from limited label information is therefore an important challenge. To address this problem, we introduce the Mean-Teacher model into the fuzzy broad learning system. We use the Mean-Teacher model to rebuild the weights of the output layer of FBLS, and use the Teacher–Student model to train FBLS. The proposed model is an implementation of semi-supervised learning that integrates fuzzy logic and the broad learning system in a Mean-Teacher-based knowledge distillation framework. Finally, extensive experiments demonstrate the strong performance of the Mean-Teacher-based fuzzy broad learning system (MT-FBLS).
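
The abstract does not state the update equations, so the following is only a minimal sketch of the generic Mean-Teacher idea it refers to: the teacher's weights track an exponential moving average of the student's weights, and a consistency term penalizes disagreement between student and teacher predictions on unlabeled samples. The function names `ema_update` and `consistency_loss`, the smoothing factor `alpha`, and the flat-list weight representation are illustrative assumptions, not the authors' MT-FBLS implementation.

```python
# Generic Mean-Teacher building blocks (illustrative sketch, not the MT-FBLS code).
# Weights and outputs are plain lists of floats to keep the example self-contained.


def ema_update(teacher, student, alpha=0.99):
    """Return teacher weights after one exponential-moving-average step.

    alpha is an assumed smoothing factor: values near 1 make the teacher
    change slowly, values near 0 make it follow the student closely.
    """
    return [alpha * t + (1.0 - alpha) * s for t, s in zip(teacher, student)]


def consistency_loss(student_out, teacher_out):
    """Mean squared difference between student and teacher predictions."""
    n = len(student_out)
    return sum((s - t) ** 2 for s, t in zip(student_out, teacher_out)) / n


# Toy usage with hypothetical output-layer weights.
student_w = [1.0, 2.0]
teacher_w = [0.0, 0.0]
teacher_w = ema_update(teacher_w, student_w, alpha=0.5)

# Disagreement between two hypothetical prediction vectors.
loss = consistency_loss([1.0, 0.0], [0.0, 0.0])
```

In a semi-supervised setting, a term like `consistency_loss` would typically be added to the supervised loss on the labeled samples, so that unlabeled samples still contribute a training signal.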

Data availability

We made use of publicly available datasets.

Funding

This work was supported in part by the Natural Science Foundation of China (61991401, U20A20189), in part by the National Key Research and Development Project (2020YFB1710003), in part by the Jiangxi Engineering Technology Research Center of Nuclear Geoscience Data Science and System (JETRCNGDSS202206), in part by the Quzhou Science and Technology Plan Project (2023K265), and in part by the Engineering Research Center of Ministry of Education for Nuclear Technology Application (HISTYB2022-7).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Fan, Z., Huang, Y., Xi, C. et al. Semi-supervised fuzzy broad learning system based on mean-teacher model. Pattern Anal Applic 27, 18 (2024). https://doi.org/10.1007/s10044-024-01217-8

DOI: https://doi.org/10.1007/s10044-024-01217-8