Abstract

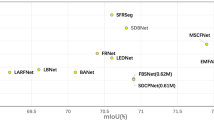

Deep neural networks have significantly improved semantic segmentation, but their accuracy often comes at the cost of heavy computation and long inference times, which fall short of the demands of real-world applications. This paper proposes a lightweight feature-enhanced fusion network (LFFNet) for real-time semantic segmentation. LFFNet adopts an asymmetric encoder–decoder structure. In the encoder, a multi-dilation rate fusion module retains local information while enlarging the receptive field, which addresses the insufficient feature extraction caused by variation in target size. In the decoder, different decoding modules are designed for spatial information and semantic information. The attentional feature enhancement module uses an attention mechanism to refine the contextual information of the high-level output, and the lightweight multi-scale feature fusion module fuses features from different stages to aggregate more spatial detail and contextual semantic information. Experiments show that LFFNet achieves 72.1% mIoU on Cityscapes at 102 FPS and 67.0% mIoU on CamVid at 244 FPS, with only 0.63M parameters and with neither pretraining nor pre-processing. Compared with existing networks, the model achieves superior segmentation performance with fewer parameters and less computation.
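
To make the idea of fusing several dilation rates concrete, the following is a minimal 1-D sketch in plain Python. It is not the LFFNet module: the function names, the summation used for fusion, and the dilation rates (1, 2, 4) are all assumptions chosen for illustration. It only shows that a dilated kernel with k taps and dilation d spans k + (k - 1)(d - 1) input positions, so parallel branches can cover both a small local window and a wide context with the same number of weights.

```python
# Illustrative sketch only. The dilation rates and the sum-based fusion are
# hypothetical choices, not the configuration used in LFFNet.

def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Input span covered by a dilated kernel: k + (k - 1) * (d - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def dilated_conv1d(signal, weights, dilation):
    """'Valid' 1-D dilated convolution (cross-correlation form, no padding)."""
    span = effective_kernel_size(len(weights), dilation)
    out = []
    for start in range(len(signal) - span + 1):
        # Taps are spaced `dilation` samples apart.
        out.append(sum(w * signal[start + i * dilation]
                       for i, w in enumerate(weights)))
    return out


def multi_dilation_fusion(signal, weights, dilations):
    """Run parallel branches with different dilation rates and sum them.

    Each branch is centre-cropped so that its outputs line up with the
    branch that has the largest dilation (assumes an odd kernel size).
    """
    widest = effective_kernel_size(len(weights), max(dilations))
    out_len = len(signal) - widest + 1
    fused = [0.0] * out_len
    for d in dilations:
        branch = dilated_conv1d(signal, weights, d)
        offset = (len(branch) - out_len) // 2  # centre alignment
        for i in range(out_len):
            fused[i] += branch[offset + i]
    return fused


if __name__ == "__main__":
    for d in (1, 2, 4):
        print(f"dilation {d}: spans {effective_kernel_size(3, d)} samples")
    print(multi_dilation_fusion(list(range(12)), [1, 1, 1], [1, 2, 4]))
```

With a 3-tap kernel, the three branches span 3, 5, and 9 samples, so the dilation-1 branch keeps the local neighbourhood while the dilation-4 branch contributes wider context at no extra parameter cost.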

Data availability statement

Data will be made available on request.

Code availability

Custom code.

Funding

We thank all the anonymous reviewers for their constructive suggestions. This work is supported by the National Natural Science Foundation of China (Project Number 62076044) and the Natural Science Foundation of Chongqing, China (Grant No. cstc2019jcyj-zdxm0011).

Author information

Contributions

Xuegang Hu contributed to resources, formal analysis, supervision, project administration, and writing (review and editing). Jing Feng contributed to conceptualization, methodology, software, validation, and writing (original draft). Juelin Gong contributed to writing (review and editing).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethics approval

Ethical approval does not apply to this article.

Consent to participate

All co-authors are aware and agree.

Consent for publication

The authors agree to the publication of personal data or images.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Hu, X., Feng, J. & Gong, J. LFFNet: lightweight feature-enhanced fusion network for real-time semantic segmentation of road scenes. Pattern Anal Applic 27, 27 (2024). https://doi.org/10.1007/s10044-024-01237-4

DOI: https://doi.org/10.1007/s10044-024-01237-4