Abstract

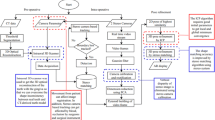

With the increasing demand for orthodontic treatment, wire bending is an increasingly important skill for orthodontists, yet traditional wire-bending training is costly in both time and resources. This paper proposes an augmented reality assisted wire-bending training system (ARAWTS). ARAWTS provides four typical wire-bending training tasks and uses gesture recognition to give the trainee feedback and improvement advice during training. Because wire-bending gestures are fine-grained and ambiguous, we develop a temporal logical relation (TLR) module that sparsely samples the dense frame sequence and learns the TLRs between gesture frames. To reduce computational cost and time, we introduce a new type of sparse optical flow called Focus Grid Optical Flow (FGOF). Experiments show that the proposed algorithm, implemented on an AR device (HoloLens), achieves a high recognition rate with low computational complexity, and that ARAWTS is reliable.
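To make the idea of sparsely sampling a dense frame sequence concrete, here is a minimal sketch. It is not the TLR module itself: the abstract does not say how frames are chosen, so the segment-centre scheme below (a common choice in video action recognition) is an assumption, and the function name `sample_sparse_indices` and its parameters are hypothetical.

```python
from typing import List


def sample_sparse_indices(num_frames: int, num_segments: int) -> List[int]:
    """Return one frame index per segment of a dense clip.

    Assumption (not from the paper): the clip is split into
    `num_segments` equal-length segments and the centre frame of
    each segment is kept.
    """
    if num_frames <= 0 or num_segments <= 0:
        raise ValueError("num_frames and num_segments must be positive")
    # Never ask for more samples than there are frames.
    num_segments = min(num_segments, num_frames)
    # Integer arithmetic gives the centre of segment i without float rounding.
    return [
        (2 * i + 1) * num_frames // (2 * num_segments)
        for i in range(num_segments)
    ]


if __name__ == "__main__":
    # A 64-frame gesture clip reduced to 8 representative frames.
    print(sample_sparse_indices(64, 8))
```

Only the sampled frames would then be passed to the relation-learning stage, so the cost of that stage grows with the number of segments rather than with the clip length.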

Acknowledgements

This study was funded by the National Natural Science Foundation of China (U2013205).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical statements

Ethical review and approval were waived for this study, due to the nature of data collected, which does not involve any personal information that could lead to the later identification of the individual participants.

About this article

Cite this article

Dong, J., Xia, Z., Zhao, Q. et al. Human–machine integration based augmented reality assisted wire-bending training system for orthodontics. Virtual Reality 27, 627–636 (2023). https://doi.org/10.1007/s10055-022-00675-x

DOI: https://doi.org/10.1007/s10055-022-00675-x