Abstract

Cybersickness (CS) is a serious usability problem in virtual reality (VR). Postural instability theory has emerged as one major hypothesis for the cause of CS. Based on this hypothesis, we present two experiments that observe the trends in users’ trained balance ability with respect to their susceptibility to CS. The first experiment (a preliminary study) evaluated the effects of 2-week balance training under three different operational conditions: training in VR (VRT), training in non-immersive media with a 2D projection display (2DT), and VR exposure without any training (VRO; Baseline). The effect on CS was tested not only with the training content but also with different VR content to observe any transfer effect. As the results clearly indicated that the non-VR 2DT was ineffective in building any significant tolerance to CS, we conducted a follow-up second experiment with 1-week balance training, comparing only the VRT and VRO conditions. Overall, the experimental findings show that, aside from the obvious improvement in balance performance itself, accompanying balance training had a stronger effect on increasing tolerance to CS than mere exposure to VR. Furthermore, the tolerance to CS developed through VR balance training exhibited a transfer effect, that is, reduced levels of CS in other VR content (not used during the training sessions).


1 Introduction

The immersive and spatial nature of 3D virtual reality (VR) makes it an attractive means for various types of training, including physical exercises (Lee and Kim 2018; Neumann et al. 2018). A typical method of physical training involves, e.g., understanding and remembering exercise instructions illustrated on paper, or watching the trainer’s motion from the third-person viewpoint and mimicking it. VR may be particularly suited for teaching physical exercises as it can provide the first-person viewpoint and convey the spatial/proprioceptive sense of the required movements, e.g., as if enacted by one’s own body parts (Yang and Kim 2002; Cannavò et al. 2018). Consequently, many VR-based physical training systems have been developed and shown to be effective (Ahir et al. 2020).

Postural instability has emerged (although it is not yet universally accepted) as one of the competing hypotheses for the cause of cybersickness (CS) or VR sickness (Riccio and Stoffregen 1991; Keshavarz et al. 2015; Stoffregen et al. 2008). This theory postulates that a VR user can become sick in provocative and unfamiliar situations (such as being immersed in a VR space) in which one does not possess (or has not yet learned) strategies or skills to maintain a stable posture and balance (Riccio and Stoffregen 1991; So et al. 2007; Dennison and D’zmura 2018). Losing balance is often seen as a consequence (rather than a cause) of CS, as one major symptom is dizziness, and it has even been used as one measure of CS (Chang et al. 2020; Weech et al. 2019). However, there is also some evidence that visually induced sickness (like CS) can be predicted by one’s postural instability (Smart et al. 2002). This led us to investigate VR-based balance training as one possible way to increase the tolerance to CS, focusing on a particular and clearly observable relevant physical ability. In this context, it is important that the proposed balance training for CS is also situated in the VR environment. If shown to be effective, the proposed method can also further corroborate the postural instability theory.

This paper presents a series of two human-subject experiments that examined the long-term trends of user balance learning and tolerance to CS under different experimental conditions. We assessed the potential inter-relationship between one’s balance ability and the level of CS. The transfer effect was also investigated by having the trained users tested for CS tolerance in new VR content. The main contributions are as follows:

- Balance training can be effective in developing the tolerance to sickness from visual motion.
- Immersive balance training is more effective for developing tolerance to CS than non-immersive training and mere extended exposure to VR.
- The effect of balance training can be transferred to other VR content (not used for training).

The structure of this paper is as follows: Sect. 2 gives an overview of the related research. Section 3 details the first experiment (as a preliminary pilot study), comparing three training methods over a 2-week period towards any change in CS. Section 4 presents the second similar experiment (as a main study), but focuses on the two effective conditions as found in Experiment 1, with 1 week of training. Section 5 provides the experimental findings and makes an in-depth discussion of the implications, and lastly, Sect. 6 concludes the paper.

2 Related works

2.1 Balance training in VR

The human body as an articulated and complex skeleton structure is inherently mechanically unstable (Pai and Patton 1997; Lee and Chou 2006). Maintaining balance (around the center of mass) is a complex process that involves multiple systems in the human body. The vestibular, proprioceptive, and visual channels are used to detect and gather balance/pose-related information and the brain integrates them to coordinate and generate the motor responses and establish the center of pressure through the muscles and joints with constant adjustments to counteract the external perturbation to the body (Peterka 2018; Lee and Farley 1998; Lafond et al. 2004).

There are numerous physical training routines to improve one’s balance by strengthening and improving the capabilities of the aforementioned subsystems (Brachman et al. 2017). Conventional means of balance training usually involve following paper or live instructions from the second/third-person point of view. Virtual reality (VR) can be an effective medium, offering the first-person perspective and a sense of personal space that enhance the understanding of the various training poses and work-outs (Cannavò et al. 2018; Pastel et al. 2022). Gamification can further provide the motivation and impetus to facilitate the training process (Dietz et al. 2022; Tuveri et al. 2016). However, the effect of balance training (VR-based or not) on CS has not been investigated much despite the fact that the two are often said to be closely related (Riccio and Stoffregen 1991; Haran and Keshner 2009; Rine et al. 1999).

2.2 Cybersickness

Cybersickness (CS) refers to the unpleasant symptoms experienced when using immersive or VR simulators, especially with navigational content. Typical symptoms include disorientation, headache, nausea, and ocular strain (LaViola 2000; Kennedy et al. 1993). The leading explanation for CS is the “sensory mismatch theory”, which attributes CS to conflicting information about the user’s motion as interpreted by the visual and vestibular senses (LaViola 2000; Rebenitsch and Owen 2016). That is, the aforementioned unpleasant symptoms arise when virtual/visual motion is perceived by the visual system while the vestibular senses detect no physical motion. Note that the visual and vestibular systems are neurally coupled (Grüsser and Grüsser-Cornehls 1972).

To combat these symptoms, several studies have focused on reducing or neutralizing the visual motion information to minimize the sensory mismatch (Fernandes and Feiner 2016; Park et al. 2022; Keshavarz et al. 2014). For instance, Fernandes and Feiner (2016) developed a dynamic, size-shifting field of view (FOV) that responds to the speed/angular velocity of users or content. When the user’s motion accelerates, the FOV is reduced, which in turn reduces the extent of the visual stimulation and, ultimately, the sickness. In a similar vein, blurring the peripheral visual field has been proposed to minimize the visual stimulation (Budhiraja et al. 2017; Lin et al. 2020; Caputo et al. 2021). Park et al. (2022) and Yang et al. (2023) have proposed neutralizing the visual motion stimuli by simultaneously presenting the reverse optical flow.

Another popular theory is the rest frame theory (Harm et al. 1998), which points to the absence of reference object(s) (objects in the VR content that are not moving with respect to the user). The rest frame is thought to help the user maintain one’s balance and be aware of the ground (or gravity) direction (Hemmerich et al. 2020; Wienrich et al. 2018; Harm et al. 1998). One interesting remedy to CS is the inclusion of the virtual nose, which can be considered as a rest frame object (Wienrich et al. 2018; Wittinghill et al. 2015; Cao 2017).

Alternatively, a potential strategy for addressing CS might involve methods to alleviate the immediate symptoms and enhance users’ physical well-being, rather than directly targeting the root cause. These can include, e.g., supplying a fresh breeze (Igoshina et al. 2022; Kim et al. 2023), providing pleasant music or calming aural feedback (Keshavarz and Hecht 2014; Kourtesis et al. 2023; Joo et al. 2023), and reducing the weight of the headset (Kim et al. 2023). These measures can be regarded as a cognitive distraction as a way to reduce CS by preventing users from focusing on the sickness-inducing VR content (Kourtesis et al. 2023).

One newer hypothesis for the cause of CS is the “postural instability theory” (Riccio and Stoffregen 1991; Li et al. 2018), which suggests that the inability to maintain balance due to external factors such as unfamiliar, provocative, and challenging situations can induce sickness. Note that this does not preclude the fact that imbalance is one typical after-effect of the sickness as well. This is based on the various studies that have observed a strong correlation between one’s balancing ability (before) and the extent of the motion sickness (after) (Riccio and Stoffregen 1991; Li et al. 2018; Smart et al. 2002). This theory is also in line with the rest frame theory, i.e., the lack of the object (indicating the direction of gravity and helping one maintain balance) could be seen as a provocative situation for the user (Hemmerich et al. 2020; Wienrich et al. 2018; Harm et al. 1998).

Based on all these studies, one can posit that balance training while navigating in immersive VR would make the user even more unstable and exacerbate the extent of the CS. In turn, this could make the balance training itself even harder (Imaizumi et al. 2020; Horlings et al. 2009). Nevertheless, given that the user can endure the training, its effect can eventually ease and break this vicious cycle. We can further hypothesize that immersive feedback will be an important factor, as maintaining and training for balance involves the visual channel and spatial awareness, which is difficult to fully provide in non-immersive and 2D-oriented media.

On a related note, the length of time exposed to a virtual environment is known to affect CS (Dużmańska et al. 2018). Stanney et al. (2003) found high correlations between exposure time and CS, with longer exposure times increasing the risk of CS. On the other hand, there is also the opposite view that people may build up a resistance or adapt to CS over time (or through frequent exposures) (Dużmańska et al. 2018). Thus, the exact relationship between extended exposure and CS symptoms is not firmly established.

To our knowledge, no prior work on applying balance training as a way to train for tolerance to CS has been reported. Note that, as with any external stimulation, mere repeated and prolonged exposure to VR can in itself lead to desensitization or habituation to CS (Palmisano and Constable 2022). However, we expect it to be a relatively time-consuming method whose effect recedes quickly (compared to active training), and little is known about whether there is any transfer effect to other VR content (Palmisano and Constable 2022; Dużmańska et al. 2018; Adhanom et al. 2022; Smither et al. 2008). Considering these aspects, we formulated the following hypotheses:

- H1: The training effect for CS through balance training (VR-based or not), if any, will be greater than that of mere extended exposure to the same VR content.
- H2: The training effect for balancing and developing CS tolerance will be greater with the use of immersive VR than with the non-VR 2D environment.
- H3: There will be a transfer effect such that tolerance to CS developed by balance training carries over to newly encountered VR content.
- H4: If balance training enhances tolerance to CS, this partly serves as evidence for the postural instability theory, which suggests that imbalance is a potential cause of CS.

3 Experiment 1: VRT versus VRO versus 2DT

The purpose of this study was to make a preliminary exploration and investigation of any effect of trained and enhanced balance ability on individuals’ tolerance to CS. As such a tolerance may be affected by a range of possible factors, including the training methods, duration, and media types, the results would be used to solidify the design of the more focused follow-up second experiment with a larger participant pool.

3.1 Experimental design

The balance training may occur in either a non-immersive environment or a VR environment, using sickness-eliciting contents (i.e., navigation). We hypothesize that, given the same content, the effects of balance training on tolerance to CS will be stronger if the training occurs in the VR environment compared to using the non-immersive environment. Furthermore, we expected that even if the given VR content may be new and different from the one used for training, this trained tolerance can be transferred to it.

On the other hand, to differentiate the effect (if any) of the training method/media type from that of mere exposure to VR, subjects were also tested with the same sickness-eliciting VR content without any balance training. Humans can become habituated, desensitized, and tolerant to CS after long exposure to various VR stimuli (Fransson et al. 2019; Dużmańska et al. 2018). Thus, EXP1 was designed as a two-factor mixed model study with repeated measures. The first factor was a between-subject factor with three training methods (see Fig. 1):

- VRO: only exposure, i.e., just watching sickness-eliciting navigation content using a VR headset without any balance training.
- VRT: watching sickness-eliciting navigation content using a VR headset while carrying out a balance training routine (i.e., the combined effect of balance training and extended VR exposure).
- 2DT: watching sickness-eliciting navigation content on a 2D projection-based display while carrying out a balance training routine.

Fig. 1 Overview of the first experiment, conducted over 2 weeks and under three conditions. Participants were divided into between-subject groups according to the three different training conditions: (a) virtual reality-based balance training (VRT); (b) virtual reality exposure only (VRO); and (c) 2D projection display-based balance training (2DT). The two VR training contents used were (d) a jet fighter flight through a forest for the first week (EW1), which was relatively less CS-inducing, and (e) a wild roller-coaster ride for the second week (EW2), which was more CS-inducing. To assess the transfer effects of the balance training, participants were exposed to two other, previously unexperienced transfer VR contents: (f) a rollercoaster ride [completely different from (e)], and (g) space exploration, on the first and last days of each respective week

As the effects of training may take time, the experiment was conducted over 2 weeks, but in two separate weekly segments: EXP1’s Week 1 (EW1) and Week 2 (EW2). Note that 2 weeks of balance training was deemed sufficient because marked progress is usually attainable in that time frame (Rasool and George 2007; Szczerbik et al. 2021). Thus, time (days) constituted the second, within-subject factor.

EW1 proceeded over 4 days, and the participants were trained while watching the sickness-eliciting navigation content, which induced only a relatively moderate/lesser degree of CS to start the overall training gently (not too abruptly). After a 3-day break, EW2 was conducted with a duration of 5 days. Due to the possible learning effect and getting accustomed to the same content after repeated exposures, a new and more dynamic content (i.e., inducing more severe CS) was used. Although it is difficult to exactly quantify the difference in the induced-sickness levels, Fig. 2, which shows the navigation motion profiles of the respective content, makes it reasonably clear that the content from EW2 is likely to induce a much more severe level of CS.

In summary, EW1 and EW2 were treated as two longitudinal mixed-model experiments, each designed as a two-factor \(3 \times 2\) study with training method (three levels) as the between-subject factor and day (two levels) as the repeated, within-subject factor. While experimental tasks were carried out and data measured daily during the 4-day/5-day periods for EW1/EW2, the analysis focused solely on the differences between the first and last days of each week (hence the two levels of the day factor).

Fig. 3 The overall process for Experiment 1: Training with EW1 spanned 4 days, whereas that with EW2 lasted for 5 days, with a 3-day rest period between them. Balance tests were conducted on the first and last days of each week to assess the effects before and after the balance training sessions, taking into account physical fatigue. Additionally, transfer content was presented on the first and last days of each week, and the test for this content was conducted last on the last day of each week

3.2 Experimental setup and task

In both EW1 and EW2, except for the training contents used, the experimental task (training/exposing procedure) was the same (see Fig. 3).

For the 2DT and VRT groups, participants engaged in a balance training routine known as the “one leg stand” (also known as the Flamingo test; Uzunkulaoğlu et al. 2019; Marcori et al. 2022). In the 2DT setup, balance training was performed while participants viewed navigational content on a 60-inch projection display from a distance of 1.5 m (see Fig. 1c). In contrast, participants in the VRT group watched the same content through a Meta Quest 2 VR headset, which has a field of view of \(104 \times 98\)°.

The balance training routine during the 3-min viewing period was structured as follows: ready/rest (30 s), training (30 s), rest (30 s), training (30 s), rest (30 s), training (30 s). Instructions for the training, such as when to raise the leg or rest, were delivered through a visible user interface integrated into the system.
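The routine described above can be sketched as a simple timed schedule. This is only an illustration of the timing, not the authors' system: the names `Phase`, `build_schedule`, and `phase_at` are hypothetical, and only the 30-s phase length and the three rest/training repetitions come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    label: str       # "rest" or "train" (one-leg stand)
    start_s: int     # offset from the start of the viewing period, in seconds
    duration_s: int  # length of the phase, in seconds


def build_schedule(phase_s: int = 30, repetitions: int = 3) -> list:
    """Alternate rest and training phases, starting with the ready/rest phase."""
    phases = []
    t = 0
    for _ in range(repetitions):
        for label in ("rest", "train"):
            phases.append(Phase(label, t, phase_s))
            t += phase_s
    return phases


def phase_at(schedule, elapsed_s):
    """Return the phase active at elapsed_s, or None once the session is over."""
    for phase in schedule:
        if phase.start_s <= elapsed_s < phase.start_s + phase.duration_s:
            return phase
    return None


schedule = build_schedule()
total_s = sum(p.duration_s for p in schedule)  # 6 phases of 30 s each
```

A user interface could poll `phase_at(schedule, elapsed_s)` to decide whether to show a "raise leg" or "rest" prompt.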

On the other hand, the VRO group did not perform any balance training, i.e., participants only experienced the same VR content using the VR headset while standing on two feet.

As already indicated, two VR contents (inducing the different levels of CS) were used—the lesser sickness eliciting one in EW1 (flying through the forest trail, Fig. 1d) and more in EW2 (wild roller coaster ride, Fig. 1e). Experimenting with the new, more difficult (sickening) content also allowed us to examine the user’s behavior and performance after a week of training.

The navigation path contained several types of motion—forward translation and pitch/yaw, rotation/turning in varied speed and acceleration (see Fig. 2). Moreover, to mitigate the learning effect as much as possible, not only were different VR contents used between EW1 and EW2, but adjustments were also implemented for the same content: e.g. the content was subtly altered by changing the mood of the surrounding environment (e.g., dawn, midday, evening, night, cloudy, and rainy) while ensuring that the sickness-inducing level remained consistent between each day.

The training was conducted twice daily for 4 days in EW1 and 5 days in EW2. Note that participants were free to put their foot back down anytime if they felt they were in danger of falling down (or for any reason, e.g., not being able to maintain balance or due to too much sickness) but were asked to resume and continue in their best way. The experiment helper stood by to prevent the participant from completely falling down. The participant was also free to stop the experiment at any time, although there was no such case.

3.3 Dependent variables

Three dependent variables of main interest were changes in (1) balance performance, (2) the level of CS over time, and (3) whether tolerance to CS was developed as a transfer effect. First, quantitative balance performance was measured in three ways:

- Maintenance time: To assess the changes in participants’ balance ability, the “one leg stand with eyes closed” test (Bohannon et al. 1984) was administered on the first and last days of each week (see Fig. 3). Maintenance time was measured in seconds until the raised foot was placed on the ground.
- Number of foot downs: The number of times the participant put their foot down during the training process was manually counted.
- Center of mass variability: The extent of the deviation of the standing body from the reference center of mass was computed by analyzing the participant’s 2D pose data extracted from recorded video using PoseNet (Papandreou et al. 2017, 2018). Specifically, the variation in the midpoint of the screen space locations of the right and left hips was used to estimate this measure.
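As a rough illustration of the center of mass variability measure, the sketch below takes per-frame left and right hip keypoints (in pixels, as a pose estimator such as PoseNet would output) and computes the spread of their midpoint. The text does not state the exact variability statistic, so the root-mean-square distance from the mean midpoint used here is an assumption, and the function names are hypothetical.

```python
import math


def hip_midpoints(frames):
    """frames: iterable of ((lx, ly), (rx, ry)) hip keypoints in screen pixels."""
    return [((lx + rx) / 2.0, (ly + ry) / 2.0) for (lx, ly), (rx, ry) in frames]


def com_variability(frames):
    """RMS distance of the hip midpoint from its mean position.

    Assumed metric: the paper only says that the variation of the hip
    midpoint was used, not which statistic summarized it.
    """
    mids = hip_midpoints(frames)
    if not mids:
        raise ValueError("at least one frame is required")
    mean_x = sum(x for x, _ in mids) / len(mids)
    mean_y = sum(y for _, y in mids) / len(mids)
    squared = [(x - mean_x) ** 2 + (y - mean_y) ** 2 for x, y in mids]
    return math.sqrt(sum(squared) / len(squared))
```

A perfectly still participant yields 0, and larger sway yields larger values. Because the value is in pixels, it depends on camera distance and resolution unless normalized.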

As for the level of CS, the 16-item Simulator Sickness Questionnaire (SSQ) was used (Kennedy et al. 1993). The SSQ gives three subscale scores, for nausea (SSQ-N), oculomotor (SSQ-O), and disorientation (SSQ-D) symptoms, plus a total score (SSQ-T). However, the SSQ only asks about the existence of certain symptoms; thus, it is not possible to assess their probable cause, e.g., whether they stem from the visual motion or the balancing act. Thus, in addition to the “Original” SSQ, two revised versions, “Visual” and “Balance”, were made and used. Each revised questionnaire asked about the same symptoms, but also about what the participants thought the source might be, i.e., the visual motion or the balancing act.
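For readers unfamiliar with SSQ scoring, the following sketch shows how the four scores are conventionally derived from the raw subscale sums, using the weights published with the original questionnaire (Kennedy et al. 1993). The item-to-subscale mapping is not reproduced here, so the function takes the raw sums as inputs. How the “Visual” and “Balance” variants were scored is not described in this section, so applying the same weights to them would be an assumption.

```python
# Conventional SSQ weights (Kennedy et al. 1993).
SSQ_WEIGHTS = {"N": 9.54, "O": 7.58, "D": 13.92}
SSQ_TOTAL_WEIGHT = 3.74


def ssq_scores(raw_n, raw_o, raw_d):
    """Convert raw subscale sums (each item rated 0-3) into weighted SSQ scores."""
    if min(raw_n, raw_o, raw_d) < 0:
        raise ValueError("raw subscale sums must be non-negative")
    return {
        "SSQ-N": raw_n * SSQ_WEIGHTS["N"],
        "SSQ-O": raw_o * SSQ_WEIGHTS["O"],
        "SSQ-D": raw_d * SSQ_WEIGHTS["D"],
        # The total is the sum of the three raw sums times its own weight,
        # not the sum of the weighted subscale scores.
        "SSQ-T": (raw_n + raw_o + raw_d) * SSQ_TOTAL_WEIGHT,
    }
```

Because the subscales are weighted differently, SSQ-N, SSQ-O, and SSQ-D values are not directly comparable with each other in magnitude.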

Lastly, to confirm the transfer effects (related to H3), namely whether the tolerance to CS developed through balance training was effective even with new VR content, the participants’ CS levels (using the “Original” SSQ) were measured using completely different, sickness-inducing VR contents. These were tested with the EW1 transfer VR content, a rollercoaster ride (see Fig. 1f), and the EW2 transfer VR content, space exploration (see Fig. 1g). It should be noted that the transfer content also lasted 3 min, and participants in all conditions viewed it while standing (on two feet) and wearing a VR headset, without engaging in any balance training.

3.4 Participants

Participants were recruited through the university’s online community. The first round of participants was surveyed for their self-reported sensitivity to motion sickness using the MSSQ-short (Golding 2006; Nesbitt et al. 2017) and familiarity or prior experiences in using the VR system. We notified the potential participants of the need to carry out balance training (one leg stand) for about 10–15 min per day for 2 weeks and asked them to excuse themselves if they deemed it to be beyond their physical capabilities. Participants in the extreme ends in terms of their reported sensitivity were also excluded, as our study targeted participants in the middle of the sensitivity spectrum.

Fifteen final participants (all male, aged 19 to 33, \(M = 25.6\), \(SD = 2.19\)) were selected and placed in the three between-subject groups (5 each) for VRT, VRO, and 2DT such that their MSSQ score variations were similar and within a reasonable range (see Table 1). All participants had at least some experience in using VR applications (mostly game playing and video watching) but did not have any prior balance training experience. The subjects were paid 16 USD per hour for their participation (a total of about $120 for the whole 2 weeks). All 15 subjects completed the 2-week experiment without dropping out.

3.5 Experimental procedure

The participants first filled out the consent form, were briefed about the procedure of the experiment, and had the experimental tasks explained to them. Five to ten minutes were given for the participants to familiarize themselves with the balancing task while watching the content through the monitor or the headset. In particular, the participants were given detailed instructions on how to carefully respond to the three types of SSQs and to think as carefully as they could about the probable causes of the symptoms. The helper assisted the subject in taking position on the floor in front of the monitor (in a space with cushioned walls) or in donning and adjusting the headset. The helper also stood by to prevent the subject from falling down.

On each day, the participants for 2DT and VRT performed balance training routines (described in Sect. 3.2). The participants selected which foot to use to stand or lift on their own. This protocol was designed considering that the similar one-leg with eyes closed test lasted around 30 s on average (Hong-sun et al. 2019), and our own pilot test (with four males) indicated that exceeding 1 min often led to muscle strain. Meanwhile, the VRO group just watched the VR content for 3 min in a normal standing pose.

After the respective treatments, participants rested and filled out the survey. After all treatments had been experienced, informal debriefings were held. It should be noted that participants were free to stop the experiment at any time for any reason, and the experiment received approval from the Institutional Review Board (No. 2023-0143-01).

3.6 Results

Considering the \(3 \times 2\) mixed design and the fact that the collected longitudinal data were continuous but not suitable for parametric tests, the nparLD method (Noguchi et al. 2012) was used to evaluate the statistical effects of the factors and their interaction. Pairwise comparisons were conducted using the Kruskal–Wallis test for the training method (the between-subject factor), while the day (the within-subject factor) was assessed using the Wilcoxon signed-rank test. Bonferroni correction was applied to all tests at a 5% significance level. As EW1 and EW2 were conducted in different settings, they were analyzed separately.
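To make the correction step concrete, the sketch below applies a Bonferroni adjustment to a family of pairwise p values. The function name and the example p values are hypothetical, and the text does not say how many comparisons formed each family in the study, so the family size here is only the length of the list passed in.

```python
def bonferroni(p_values, alpha=0.05):
    """Return (adjusted_p, is_significant) for each p value in one family.

    Each p value is multiplied by the number of comparisons and capped at 1;
    the adjusted value is then compared against alpha.
    """
    m = len(p_values)
    results = []
    for p in p_values:
        if not 0.0 <= p <= 1.0:
            raise ValueError("p values must lie in [0, 1]")
        adjusted = min(1.0, p * m)
        results.append((adjusted, adjusted < alpha))
    return results


# Hypothetical p values for three pairwise comparisons (not from the study).
example = bonferroni([0.01, 0.04, 0.5])
```

In this made-up example only the first comparison remains significant after correction, which shows how the adjustment becomes stricter as the number of comparisons grows.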

3.6.1 Change in sickness levels

The primary focus of this study was to alleviate CS caused by visual mismatch through balance training. It is possible that the physical challenges of the balancing act produce effects similar to many CS symptoms assessed by the SSQ (e.g., “disorientation” from trying to stand on one foot). We acknowledge the inherent difficulty in objectively and correctly judging the sources of the CS symptoms and any possible interaction between these factors (participants were allowed to attribute a given symptom to both visual stimulation and balance exercises). Thus, as described earlier, two additional versions of the “Original” SSQ, termed “Visual” and “Balance”, were prepared. These versions may enable participants to differentiate and report whether the CS symptoms were induced by the visual stimulation and/or the balance training. Nevertheless, given that the Original SSQ is widely used, and as such has been more thoroughly validated than our revised versions, this section primarily reports the statistical analysis results based on the Original SSQ. The results derived from the modified versions (Visual and Balance) are presented in the “Appendix”.

Trends in CS score over EW1/EW2 are shown in Table 2, Figs. 4 and 5.

In EW1, the nparLD revealed significant differences at the CS level for all SSQ items in relation to Days (\(p <.001\)), but there were no effects of Training Methods and interaction effects (\(p >.05\)), as shown in Table 3.

On the other hand, the pairwise comparisons with respect to the within-subject factor of Days (Day 1 vs. Day 4) showed significant reductions in the level of CS for both the VRT group (Day 1 > Day 4; \(p <.05\)) and the 2DT group (Day 1 > Day 4; \(p <.05\)) for all SSQ items. However, the VRO group showed a significant reduction only in Nausea (Day 1 > Day 4; \(p =.045\)*), while the reductions in the other items did not reach statistical significance (see Table 3b). It should be noted that the VRO group was only exposed to immersive/visual simulation without any balance training. These results suggest that balance training can be effective in developing CS tolerance (supporting H1). Meanwhile, the pairwise comparison for the between-subject factor of Training Methods revealed no significant differences on either the first or the last day.

For EW2, due to the high complexity of its content (see Fig. 2), overall CS levels increased as expected (see Table 2). Statistical analysis indicates that significant effects of Days were observed across all SSQ items (\(p <.001\)***), while interaction effects were significant for all SSQ items except SSQ-O (\(p =.076\)). However, Training Methods showed a significant effect only for SSQ-D (\(p =.016\)*; see Table 3a).

Regarding the pairwise comparisons on Days, the SSQ scores on the last day were significantly lower than those on the first day for all training methods (see Table 3b). Among them, increased tolerance to CS through simple sustained exposure and habituation to VR (i.e., VRO) was observed. This effect did not occur in EW1, where a significant difference was observed only in SSQ-N (\(p =.045\)*) and not in the other categories, leading us to posit that the exposure effect without balance training requires a relatively longer duration, supporting H1.

The pairwise comparison for the Training Methods showed several significant differences: SSQ-T (VRT < VRO*; 2DT < VRO**), SSQ-O (2DT < VRO**) and SSQ-D (VRT < VRO*; 2DT < VRO***). It is noteworthy that VRO, in particular, exhibited significantly higher levels compared to other training methods on the first day. This was due to the lack of prior balance training and no increased tolerance to sickness from sustained exposure in EW1. Interestingly, the VRT, which initially started with the highest CS levels in EW1, showed lower levels than VRO on the first day of EW2.

3.6.2 Balance performance

To relate the potential effect of balance training to tolerance for CS, three measures were taken: (1) the duration of participants’ balance maintenance; (2) the number of foot downs, i.e., the times participants had to place the raised foot back on the ground (indicating balance failure); and (3) the variability in their centers of mass. The first was measured before and after the training sessions, while the latter two were measured during the training sessions (see Fig. 3).

Table 4 shows the trend in balance performance during EW1 and EW2, along with the statistical results for the within-subject factor. Note that the between-subject factor, i.e., the Training Methods, is not presented due to the limited number of participants in each subject group.

Balance maintenance was measured using the “one leg stand with eyes closed” test (Hongsun et al. 2018). The Wilcoxon signed-rank test showed no statistical differences in VRT and 2DT among the tested days; however, when comparing the first day (EW1-1) and the final day (EW2-5), we observed a relatively large increase of 27 s in the average time for VRT, whereas the increase was only 2 s for 2DT. These findings suggest that the VR environment may have had a larger impact on developing balance abilities than the 2D environment.

For the number of foot downs, the Wilcoxon signed-rank test revealed that EW2-1 was significantly larger than EW2-5 in VRT (\(p =.049\)), indicating that VR environments can enhance balance performance. The lack of significance in EW1 may be attributed to the less CS-inducing content, which resulted in fewer foot downs. Moreover, in the 2DT setup, where participants did not wear a VR headset, the visible real environment (e.g., wall, floor) might have helped the participants maintain their balance.

The center of mass variability showed significant differences in all comparisons. In EW1, both groups significantly decreased in EW1-4 compared to EW1-1 (VRT: \(p =.002\)**; 2DT: \(p =.005\)**). However, in EW2, while there was a significant decrease in VRT (EW2-1 > EW2-5; \(p =.001\)**), 2DT showed an increase (EW2-1 < EW2-5; \(p =.47\)). We believe this is because EW1’s training content is quite monotonous. This simplicity helps participants maintain a stable center of balance, making it difficult for any factors to have an effect.

Overall, these findings support our second hypothesis (H2), suggesting that immersive training can be more effective in improving balance compared to a 2D or non-immersive environment.

3.6.3 Balance and sickness correlation

The Pearson correlation coefficient test was used to further investigate the relationship between balance performance and the reduction in sickness. We expected the following correlations: (1) sickness scores and balance maintenance time would be negatively correlated; (2) sickness and the number of balance fails would be positively correlated; and (3) sickness and center of mass variability would be positively correlated. The results are summarized in Table 5, and they are mostly consistent with our assumptions.

Statistically significant correlations with the SSQ’s total sickness scores over EW1 and EW2 were found for the improvements in two balance measures, the number of balance failures and the center of mass variability (for either VRT or 2DT). VRT showed higher correlation coefficients than 2DT in both measures: number of foot downs (\(r = 0.612 > 0.267\)) and center of mass variability (\(r = 0.305 > 0.269\)). For the balance maintenance time, no significant correlation was found; however, it is worth noting that while VRT showed a negative correlation as expected (\(r = -0.295\)), 2DT showed only a near-zero correlation (\(r = 0.001\)).
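For readers who want to see what such a coefficient measures, here is a minimal sketch of the Pearson correlation coefficient in plain Python. The function name `pearson_r` and the paired values are hypothetical and are not taken from Table 5.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equally long samples with at least two values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant sample has no defined correlation")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical per-session values: foot downs and SSQ total scores.
foot_downs = [5, 4, 4, 2, 1]
ssq_total = [60.0, 52.0, 48.0, 30.0, 22.0]
print(pearson_r(foot_downs, ssq_total) > 0)
```

A positive coefficient here would correspond to expectation (2): fewer balance failures going together with lower sickness scores.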

In summary, as balance performance improves, there is an associated increase in tolerance to sickness in both VRT and 2DT, which supports H4. Additionally, the correlation values suggest that the training effect in VRT was stronger than in 2DT, supporting H2.

3.6.4 Transfer effect

The true test for any effect of the balance training on CS would be observing how the balance-trained participants perform on completely different VR contents, i.e., the “transfer” contents (see Fig. 1f, g). Assessments were conducted twice: on the first and last days of each week. The descriptive and statistical results are shown in Tables 6 and 7.

The nparLD test revealed that there were no significant differences in EW1. In the case of EW2, significant effects were observed for the Days factor across all SSQ items, but no significant effects were found for Training Methods or their interaction (see Table 7a).

Pairwise comparison tests revealed no significant differences in the between-factor analysis (see Table 7b). However, significant differences were observed within factors (i.e., first day vs. last day) for VRT and 2DT. Specifically, VRT showed significant reductions in SSQ-T (\(p =.029\)*) and SSQ-D (\(p =.029\)*) on the last day compared to the first day, while 2DT demonstrated a significant reduction in SSQ-D (\(p =.049\)*).

This suggests that a transfer effect was only developed by the VR-based balance training. It aligns with the findings that the VRO group, who received no training effect in EW1, showed much higher CS levels early in EW2 (when switched to the new training content) compared to VRT. Thus, the results lend some support to our hypotheses (H2, H3) that an immersive VR environment with long-term balance training is a more effective method than simply being exposed to VR content for an equal amount of time. Note that VRT necessarily involves extended exposure to VR as well.

4 Experiment 2: VRT versus VRO

EXP1 had several limitations. The training lasted 2 weeks, but the simplicity of the path in the EW1 content hindered the effective manifestation of any training effect. Although the EW2 content was more challenging, participants could predict their path by the rollercoaster rails, leading to less engagement with the virtual/surrounding environment. The inclusion of the 2D projection display-based training (2DT) in EXP1 was perhaps not appropriate in the first place. For one, as expected and as shown in the experimental results, it was difficult to elicit CS: even though a “large” projection was used, its field of view was fixed at only 60° (i.e., the imagery was not view-angle dependent), and the real world was visible in the periphery. The balancing ability was also notably higher because the training occurred non-immersively, in other words, in the real world rather than in the unfamiliar VR space. Furthermore, the number of participants was not sufficient to establish strong validity.

Considering these issues, the second experiment (EXP2) with more participants was conducted to further explore and compare only the effects of VR-based training (VRT) and VR exposure (VRO) over a week (5 days). Specifically, EXP2 was designed as a two-factor, \(2 \times 2\) mixed model study with repeated measures between subjects.

4.1 Participants

For EXP2, 28 new male participants (ages 19 to 31, \(M = 23.96\), \(SD = 3.16\)) were recruited from the University. The recruitment process was the same as in EXP1, with no one from EXP1 involved. These participants were divided into two groups of 14 each: VRT and VRO. The division was based on their maximum balance ability, measured in seconds (VRT: \(M = 40.57\), \(SD = 26.31\); VRO: \(M = 38.64\), \(SD = 40.10\)) and their sensitivity to CS (MSSQ) (Golding 2006) (VRT: \(M = 16.20\), \(SD = 8.63\); VRO: \(M = 16.24\), \(SD = 7.84\)), ensuring the groups were as balanced as possible (see Table 8).

This experiment received another approval from the University’s IRB (No. 2023-0296-02). Participants were paid $80 for their participation.

4.2 Procedures and measures

The procedure was mostly similar to that of EXP1, but EXP2 was conducted over 1 week (refer to the second week’s procedure of EXP1 in Fig. 3). Participants were asked to perform the “one leg stand with eyes closed” test to measure their balance performance. They then experienced the transfer content in a seated position, eliminating any influence of balance effects, to more effectively evaluate the effects of the developed tolerance to CS from balance training in another VR content. The CS score levels were reported using the “Original” SSQ (before the training state for the transfer content). After a break, participants engaged in the training VR content, with or without balance training, depending on the condition, and again evaluated their level of CS using the Original, Visual, and Balance SSQ. The training (or only exposure) was repeated twice daily. On the 2nd to 4th days, participants engaged in only the training VR content twice daily and evaluated their CS levels. On the last day, they performed the balance test again, went through the training content twice as usual, and finally experienced the transfer content in a seated position (after the training state for the transfer content). Note that sufficient breaks were provided between all steps (i.e., balance test, training, and transfer test). This concluded the second experiment over 1 week.

A new VR training content was used—the “Whale belly exploration” (Joo et al. 2024), which did not have any indicator for the upcoming path (in contrast to the railed rollercoaster content used in EXP1). For the transfer content, the same space exploration content (EW2) was used. Note that both contents lasted 3 min.

4.3 Results

4.3.1 CS tolerance and transfer effects

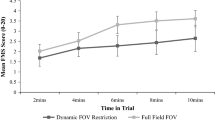

Similar statistical methods, as applied in EXP1, were used for the experimental data analysis. However, since there were two between-subject groups, VRT and VRO, the pairwise comparison was conducted using the Mann–Whitney U test. The “Original” SSQ scores over 1 week are shown in Table 9 and Fig. 6. Similarly, those from the other versions of the SSQ are presented in the “Appendix”.
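To illustrate the between-group comparison, the following is a minimal sketch of the Mann–Whitney U statistic for two independent groups. The function name and the scores are hypothetical, and the sketch stops at the U values; obtaining a p value would additionally require the null distribution of U (exact or via a normal approximation), for which a statistics package is the usual route.

```python
def mann_whitney_u(group_a, group_b):
    """Return (U_a, U_b): pairwise wins of each group, with ties counted as 0.5."""
    if not group_a or not group_b:
        raise ValueError("both groups need at least one value")
    u_a = 0.0
    for x in group_a:
        for y in group_b:
            if x > y:
                u_a += 1.0
            elif x == y:
                u_a += 0.5
    u_b = len(group_a) * len(group_b) - u_a
    return u_a, u_b


# Hypothetical SSQ total scores on one day for the two groups.
vrt_scores = [18.7, 22.4, 11.2, 29.9]
vro_scores = [33.7, 26.2, 41.1, 37.4]
print(mann_whitney_u(vrt_scores, vro_scores))
```

The smaller of the two U values is conventionally reported as the test statistic.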

Similar to EXP1, a decreasing trend was observed in both groups, with VRT showing overall lower levels of CS compared to VRO. Considering the mixed model study, the nparLD methods were also applied. Only the Days effect for all SSQ items had significance, indicating that extended exposure time to VR had an effect on decreasing CS (see Table 10). Moreover, the interaction effect was significant for the SSQ-D item (\(p =.039\)*). In the pairwise comparisons, although no significant effects were observed in the between-factor analysis, significant effects were found within factors for both the VRT and VRO groups. Specifically, CS scores on day 1 were significantly higher than those on day 5 across all SSQ items (see Table 10b).

Regarding the transfer content, the nparLD test revealed significant effects of the Days factor on all SSQ items, as well as significant interaction effects for SSQ-T (\(p =.044\)*) and SSQ-D (\(p =.049\)*). In the pairwise comparison, a significant decrease in CS was observed only in the VRT from day 1 to day 5 for all SSQ items: SSQ-T (\(p =.005\)**), SSQ-N (\(p =.006\)**), SSQ-O (\(p =.011\)*), and SSQ-D (\(p =.023\)*). This finding indicates that a significant transfer effect was only developed in VRT, which accompanied the balance training (supporting H3).

4.3.2 Balance and cybersickness

With respect to the potential relationship between the trained balance ability and the extent of the cybersickness, the statistical analysis focused particularly on such variables between the first and last days of the training experiment. As for the balance maintenance time, the Shapiro–Wilk test revealed that VRT (\(p =.119\)) and VRO (\(p =.057\)) followed a normal distribution; thus, the Student’s t-test was utilized. The VRT group showed an average time of 28.14 s (\(SD = 22.49\)) on the first day, which increased to 73.78 s (\(SD = 46.95\)) on the last day, a statistically significant change (\(p =.002\)**). In contrast, the VRO group started with an average time of 24.21 s (\(SD = 19.41\)) and showed only a slight increase to 29.90 s (\(SD = 28.05\)) by the end of the week, with no statistical significance (\(p =.243\)).
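The first-day versus last-day comparison within a group can be sketched as follows. The text does not say whether a paired or an independent t-test was used; because the same participants were measured on both days, this sketch assumes a paired test. The function name and the balance times are hypothetical, and only the t statistic and degrees of freedom are computed (the p value would come from the t distribution, e.g., via a statistics package).

```python
import math
import statistics


def paired_t_statistic(first, last):
    """Return (t, degrees of freedom) for the paired differences last - first."""
    if len(first) != len(last) or len(first) < 2:
        raise ValueError("need two equally long samples with at least two pairs")
    diffs = [b - a for a, b in zip(first, last)]
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical balance maintenance times in seconds for four participants.
first_day = [20.0, 35.0, 15.0, 40.0]
last_day = [50.0, 80.0, 30.0, 95.0]
t_value, dof = paired_t_statistic(first_day, last_day)
print(t_value > 0, dof)
```

A positive t value indicates longer balance maintenance on the last day than on the first.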

The trends in the center of mass variability, another indicator of the trained balance ability, over 1 week are summarized in Table 11 and Fig. 6b. The Shapiro–Wilk test confirmed that only the VRT (\(p =.146\)) followed a normal distribution, unlike the VRO (\(p <.001\)). The Student’s t-test revealed a significant increase in the VRT from day 1 to day 5 (\(p =.049\)*). However, the Wilcoxon signed-rank test showed no significant difference in the VRO (\(p =.526\)). These findings suggest that while the increased exposure to VR developed one’s ability to maintain bodily stability, accompanying balance training further enhanced this improvement.

Correlation analyses were performed between the center of mass variability and the level of CS. The Pearson correlation test indicated a statistically significant positive relationship in the VRT (\(r = 0.344\), \(p =.004\)**), suggesting that greater body stability is associated with lower CS. In contrast, the VRO showed a positive but relatively weak relationship (\(r = 0.090\)) without statistical significance (\(p =.459\)). These findings further support our assumption that enhancing balance ability through training improves tolerance to CS (related to H1 and H4).

5 Discussion

5.1 The effect of balance training on cybersickness

As discussed at length in Sect. 2.2, there have been several theories as to why and how CS occurs, such as the sensory mismatch (LaViola 2000; Rebenitsch and Owen 2016), lack of the rest frame (Harm et al. 1998), and postural instability (Riccio and Stoffregen 1991; Li et al. 2018; Smart et al. 2002). All such factors are plausible and debatable at the same time. While the proposal of immersive balance training for developing tolerance to CS hinges on postural instability in particular, it does not discount the effect of those other factors nor is it in conflict with them.

Our two experiments have shown significant reductions in CS symptoms in all the treatments. This trend was also observed, albeit to a lesser extent, in the VRO treatment that did not include training. These results indicate that repeated exposure to VR contents reduced sickness (Adhanom et al. 2022; Palmisano and Constable 2022), and it is difficult to deny that this effect may have influenced the other conditions as well (in terms of what contributed to the reduction). On the other hand, balance training may have reduced the sickness by acting as a cognitive distraction (Kourtesis et al. 2023; Venkatakrishnan et al. 2023). However, cognitive distraction alone can hardly explain the transfer effect. The same is true for mere exposure to a particular VR content.

Palmisano and Constable (2022) have shown that repeated exposure to VR content could significantly reduce CS. However, this improvement was observed only for the very content the participants were exposed to, and it was not shown whether the effect extended to other VR contents. In contrast, our experiment confirmed the transfer effect of the balance training to a completely different content. Only the VRT group, which engaged in immersive balance training, experienced significantly reduced CS in the transfer contents. This is the critical finding that points to the training (and the improved physical/mental capability) as the main contributor to the sickness reduction, more so than the exposure itself or distraction. This also signifies the potential practicality of the approach.

5.2 The potential of balance training on cybersickness

One representative experiment attempting to validate the postural instability theory by Riccio and Stoffregen (1991) showed that sickness levels in a provocative situation decreased when the subject adopted a wider and more stable stance (Dennison and D’Zmura 2016). In contrast, the experiment in this work took the opposite approach: the participants were purposely placed in an unstable posture (one leg stand), leading to a possible expectation that the “sickness” should increase according to the same theory. One important difference, however, is that the participants were also instructed to “learn” and train how to maintain their balance. Indeed, instead of an increased level of sickness, our results clearly show a reduction and even transfer effects, singling out the very effect of the “training”.

Interestingly, according to Menshikova et al. (2017), in a comparison of figure skaters, soccer players, and wushu fighters, figure skaters showed the most resilience to CS. Thus, innate or learned balancing capability seems related to tolerance to CS. Ritter et al. (2023) studied the VR-based (safe) training of balance beam performance with gymnastics beginners. Among others, the work showed that the participants generally performed worse in VR than in the real world. This indirectly suggests that, for CS improvement by balance training, the training environment will be important. Likewise, our results point in a similar direction, i.e., VRT being more effective than 2DT and even VRO.

As for 2DT, the level of CS arising from the visual motion must have been lower to begin with than that in VR. The visual content has a substantially smaller field of view (approximately VRT: 100° vs. 2DT: 60°), and objects such as walls and the floor may serve as potential reference points. These are aspects that can diminish the training effect in 2DT as well. On a similar note, training for a spatial task (of which balancing or even withstanding CS from visual motion could be examples) in a 2D-oriented environment has shown a negative transfer effect to the corresponding 3D VR environment (Pausch et al. 1997).

Even though our study seems to show that extended exposure to VR does have an effect on building tolerance to CS, this effect remains debatable in light of the related work. Even if it were firmly established, we believe that its effect is weaker and less long-lasting than that of balance training. In balance training, the user makes a conscious effort to encode the relevant information into one’s proprioceptive and muscular control system. How long the training effect can be sustained would be a topic of future research.

5.3 Limitations and future works

Our study is limited in several aspects. CS is a truly multifactorial issue, involving gender, age, the nature of the tasks undertaken, the type of feedback and multimodality (Feng et al. 2016; Peng et al. 2020), and the types of devices used (Kourtesis et al. 2023; Kim et al. 2008; Chang et al. 2020). Our work only investigated one such probable factor, i.e., balancing capability. While most factors mentioned above are known to influence the level of CS in one way or another, the variance from the individual difference is relatively large (Tian et al. 2022; Chang et al. 2020; Howard and Van Zandt 2021). Balancing capability can be considered a more predictable control factor (Arcioni et al. 2019; Chardonnet and Mérienne 2017). Training for it is also expected to be much less dependent on the immersive training environment (content genre). Note that the training process can be further expedited by employing multimodal feedback, guidance features, and gamification (Dietz et al. 2022; Juras et al. 2018; Prasertsakul et al. 2018).

Another limitation of our study is the relatively small number of participants in each training method group. The participant pool was limited to a specific demographic, i.e., young adult males, which further restricts the generalizability of our findings to other populations. It is, therefore, premature to extend our claims to a broader audience or diverse subject groups. Future studies should aim to address these limitations by conducting larger-scale experiments that include a more diverse participant pool in terms of age, gender, and background. Such studies should also consider employing a wider variety of sickness-inducing or “provocative” VR content to evaluate the effectiveness of the training methods under different scenarios. In addition, as there may be more fitting and proper balance training routines, these new VR contents may involve interaction techniques to guide such balancing acts more effectively, as demonstrated in Yang and Kim (2002). This would provide more comprehensive insights and increase the robustness and applicability of the findings across varied contexts.

6 Conclusion

In this paper, we conducted two experiments to observe the relationship between user balance learning and developing CS tolerance under different experimental conditions. The findings indicate that enhancing balance performance leads to an increased tolerance for CS. The study also corroborated the greater effectiveness of balance training in immersive environments compared to non-immersive settings. Furthermore, the improvement in the balance ability demonstrated sustainable effects, enabling individuals to tolerate CS in newly encountered VR content as well.

Although our findings are still preliminary, this study is the first of its kind. If further validated with continued in-depth and larger-scale studies (e.g., including various postures such as seated, supine, and prone), we hope to be able to design and recommend a standard VR-based balance training for building tolerance to CS for active yet sickness-sensitive “wannabe” VR users (while also improving one’s fitness at the same time as a bonus).

Data availability

The data generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Code availability

The code is not available.

Notes

The YouTube 360-degree video link is available at https://www.youtube.com/watch?v=eHAu8BV85vE.

The participants engaged with the transfer content while standing in Experiment 1, whereas they engaged while seated in Experiment 2. The detailed reasons are explained in Sect. 4 (which describes Experiment 2).

References

Adhanom I, Halow S, Folmer E, MacNeilage P (2022) VR sickness adaptation with ramped optic flow transfers from abstract to realistic environments. Front Virtual Real 3:848001

Ahir K, Govani K, Gajera R, Shah M (2020) Application on virtual reality for enhanced education learning, military training and sports. Augment Hum Res. https://doi.org/10.1007/s41133-019-0025-2

Arcioni B, Palmisano S, Apthorp D, Kim J (2019) Postural stability predicts the likelihood of cybersickness in active HMD-based virtual reality. Displays 58:3–11. https://doi.org/10.1016/j.displa.2018.07.001

Bohannon R, Larkin P, Cook A, Gear J, Singer J (1984) Decrease in timed balance test scores with aging. Phys Ther 64:1067–1070. https://doi.org/10.1093/ptj/64.7.1067

Brachman A, Kamieniarz A, Michalska J, Pawłowski M, Kajetan S, Juras G (2017) Balance training programs in athletes—a systematic review. J Hum Kinet. https://doi.org/10.1515/hukin-2017-0088

Budhiraja P, Miller MR, Modi AK, Forsyth D (2017) Rotation blurring: use of artificial blurring to reduce cybersickness in virtual reality first person shooters. arXiv preprint arXiv:1710.02599. https://doi.org/10.48550/arXiv.1710.02599

Cannavò A, Pratticò FG, Ministeri G, Lamberti F (2018) A movement analysis system based on immersive virtual reality and wearable technology for sport training. In: Proceedings of the 4th international conference on virtual reality. ICVR 2018. Association for Computing Machinery, New York, NY, USA, pp 26–31. https://doi.org/10.1145/3198910.3198917

Cao Z (2017) The effect of rest frames on simulator sickness reduction. PhD thesis, Duke University. https://doi.org/10.13140/RG.2.2.14954.98240

Caputo A, Giachetti A, Abkal S, Marchesini C, Zancanaro M (2021) Real vs simulated foveated rendering to reduce visual discomfort in virtual reality. In: Human–computer interaction—INTERACT 2021: 18th IFIP TC 13 international conference, Bari, Italy, August 30–September 3, 2021, proceedings, part V, pp 177–185. https://doi.org/10.48550/arXiv.2107.01669

Chang E, Kim HT, Yoo B (2020) Virtual reality sickness: a review of causes and measurements. Int J Hum Comput Interact 36(17):1658–1682

Chardonnet J-R, Mirzaei MA, Mérienne F (2017) Features of the postural sway signal as indicators to estimate and predict visually induced motion sickness in virtual reality. Int J Hum Comput Interact 33(10):771–785. https://doi.org/10.1080/10447318.2017.1286767

Dennison M, D’Zmura M (2016) Cybersickness without the wobble: experimental results speak against postural instability theory. Appl Ergon. https://doi.org/10.1016/j.apergo.2016.06.014

Dennison M, D’zmura M (2018) Effects of unexpected visual motion on postural sway and motion sickness. Appl Ergon. https://doi.org/10.1016/j.apergo.2018.03.015

Dietz D, Oechsner C, Ou C, Chiossi F, Sarto F, Mayer S, Butz A (2022) Walk this beam: impact of different balance assistance strategies and height exposure on performance and physiological arousal in VR. In: Proceedings of the 28th ACM symposium on virtual reality software and technology. VRST ’22. Association for Computing Machinery, New York, NY, USA. https://doi.org/10.1145/3562939.3567818

Dużmańska N, Strojny P, Strojny A (2018) Can simulator sickness be avoided? A review on temporal aspects of simulator sickness. Front Psychol 9:2132. https://doi.org/10.3389/fpsyg.2018.02132

Feng M, Dey A, Lindeman RW (2016) An initial exploration of a multi-sensory design space: Tactile support for walking in immersive virtual environments. In: 2016 IEEE symposium on 3D user interfaces (3DUI), pp 95–104. https://doi.org/10.1109/3DUI.2016.7460037

Fernandes AS, Feiner SK (2016) Combating VR sickness through subtle dynamic field-of-view modification. In: 2016 IEEE symposium on 3D user interfaces (3DUI), pp 201–210. https://doi.org/10.1109/3DUI.2016.7460053

Fransson P-A, Patel M, Jensen H, Lundberg M, Tjernström F, Magnusson M, Hansson EE (2019) Postural instability in an immersive virtual reality adapts with repetition and includes directional and gender-specific effects. Sci Rep 9:3168. https://doi.org/10.1038/s41598-019-39104-6

Golding JF (2006) Predicting individual differences in motion sickness susceptibility by questionnaire. Personal Individ Differ 41(2):237–248. https://doi.org/10.1016/j.paid.2006.01.012

Grüsser O-J, Grüsser-Cornehls U (1972) Interaction of vestibular and visual inputs in the visual system. Prog Brain Res 37:573–583. https://doi.org/10.1016/S0079-6123(08)63933-3

Haran FJ, Keshner E (2009) Sensory reweighting as a method of balance training for labyrinthine loss. J Neurol Phys Ther JNPT 32:186–91. https://doi.org/10.1097/NPT.0b013e31818dee39

Harm DL, Parker DE, Reschke MF, Skinner NC (1998) Relationship between selected orientation rest frame, circular vection and space motion sickness. Brain Res Bull 47(5):497–501. https://doi.org/10.1016/s0361-9230(98)00096-3

Hemmerich W, Keshavarz B, Hecht H (2020) Visually induced motion sickness on the horizon. Front Virtual Real. https://doi.org/10.3389/frvir.2020.582095

Hong-sun S, Jhin-yi S, Ki-hyuk L (2019) Reliability of balance ability testing tools: Y-balance test and standing on one leg with eyes closed test. Korean J Meas Eval Phys Educ Sports Sci 20(3):53–66

Hongsun S, Jhin-Yi S, Kihyuk L (2018) Reliability of balance ability testing tools: Y-balance test and standing on one leg with eyes closed test. Korea Ins Sport Sci 20(3):53–66. https://doi.org/10.21797/ksme.2018.20.3.005

Horlings CG, Carpenter MG, Küng UM, Honegger F, Wiederhold B, Allum JH (2009) Influence of virtual reality on postural stability during movements of quiet stance. Neurosci Lett 451(3):227–231

Howard MC, Van Zandt EC (2021) A meta-analysis of the virtual reality problem: Unequal effects of virtual reality sickness across individual differences. Virtual Real 25(4):1221–1246

Igoshina E, Russo FA, Haycock B, Keshavarz B (2022) Comparing the effect of airflow direction on simulator sickness and user comfort in a high-fidelity driving simulator. In: International conference on human-computer interaction. Springer, pp 208–220. https://doi.org/10.1007/978-3-031-06015-1_15

Imaizumi LFI, Polastri PF, Penedo T, Vieira LHP, Simieli L, Navega FRF, de Mello Monteiro CB, Rodrigues ST, Barbieri FA (2020) Virtual reality head-mounted goggles increase the body sway of young adults during standing posture. Neurosci Lett 737:135333

Joo D, Kim HY, Kim GJ (2023) The effect of false but stable heart rate feedback via sound and vibration on VR user experience. In: Proceedings of the 29th ACM symposium on virtual reality software and technology. Association for Computing Machinery, New York, NY, USA, pp 1–2. https://doi.org/10.1145/3611659.3617222

Joo D, Kim H, Kim GJ (2024) The effects of false but stable heart rate feedback on cybersickness and user experience in virtual reality. In: Proceedings of the CHI conference on human factors in computing systems. CHI ’24. Association for Computing Machinery, New York, NY, USA. https://doi.org/10.1145/3613904.3642072

Juras G, Brachman A, Michalska J, Kamieniarz-Olczak A, Pawłowski M, Hadamus A, Białoszewski D, Błaszczyk J, Kajetan S (2018) Standards of virtual reality application in balance training programs in clinical practice: a systematic review. https://doi.org/10.1089/g4h.2018.0034

Kennedy RS, Lane NE, Berbaum KS, Lilienthal MG (1993) Simulator sickness questionnaire: an enhanced method for quantifying simulator sickness. Int J Aviat Psychol 3(3):203–220. https://doi.org/10.1207/s15327108ijap0303_3

Keshavarz B, Hecht H (2014) Pleasant music as a countermeasure against visually induced motion sickness. Appl Ergon 45(3):521–527. https://doi.org/10.1016/j.apergo.2013.07.009

Keshavarz B, Riecke B, Hettinger L, Campos J (2015) Vection and visually induced motion sickness: how are they related? Front Psychol 6:472. https://doi.org/10.3389/fpsyg.2015.00472

Keshavarz B, Hecht H, Lawson B (2014) Visually induced motion sickness: characteristics, causes, and countermeasures. In: Handbook of virtual environments: design, implementation, and applications, pp 648–697. https://doi.org/10.1201/b17360-32

Kim YY, Kim EN, Park MJ, Park KS, Ko HD, Kim HT (2008) The application of biosignal feedback for reducing cybersickness from exposure to a virtual environment. Presence Teleoper Virtual Environ 17(1):1–16

Kim H, Kim J, Joo D, Kim GJ (2023) A pilot study on the impact of discomfort relief measures on virtual reality sickness and immersion. In: Proceedings of the 29th ACM symposium on virtual reality software and technology. Association for Computing Machinery, New York, NY, USA, pp 1–2. https://doi.org/10.1145/3611659.3616893

Kourtesis P, Amir R, Linnell J, Argelaguet F, MacPherson SE (2023) Cybersickness, cognition, & motor skills: the effects of music, gender, and gaming experience. IEEE Trans Visual Comput Graph 29(5):2326–2336. https://doi.org/10.48550/arXiv.2302.13055

LaViola J Jr (2000) A discussion of cybersickness in virtual environments. ACM Sigchi Bull. 32(1):47–56. https://doi.org/10.1145/333329.333344

Lafond D, Duarte M, Prince F (2004) Comparison of three methods to estimate the center of mass during balance assessment. J Biomech 37(9):1421–1426. https://doi.org/10.1016/S0021-9290(03)00251-3

Lee H-J, Chou L-S (2006) Detection of gait instability using the center of mass and center of pressure inclination angles. Arch Phys Med Rehabil 87(4):569–575. https://doi.org/10.1016/j.apmr.2005.11.033

Lee CR, Farley CT (1998) Determinants of the center of mass trajectory in human walking and running. J Exp Biol 201(Pt 21):2935–44. https://doi.org/10.1242/jeb.201.21.2935

Lee H-T, Kim YS (2018) The effect of sports VR training for improving human body composition. EURASIP J Image Video Process. https://doi.org/10.1186/s13640-018-0387-2

Li R, Peterson N, Walter H, Rath R, Curry C, Stoffregen T (2018) Real-time visual feedback about postural activity increases postural instability and visually induced motion sickness. Gait Posture. https://doi.org/10.1016/j.gaitpost.2018.08.005

Lin Y-X, Venkatakrishnan R, Venkatakrishnan R, Ebrahimi E, Lin W-C, Babu SV (2020) How the presence and size of static peripheral blur affects cybersickness in virtual reality. ACM Trans Appl Percept. https://doi.org/10.1145/3419984

Marcori A, Monteiro P, Oliveira J, Doumas M, Teixeira L (2022) Single leg balance training: A systematic review. Percept Mot Skills 129:003151252110701. https://doi.org/10.1177/00315125211070104

Menshikova G, Kovalev A, Klimova O, Barabanschikova V (2017) The application of virtual reality technology to testing resistance to motion sickness. Psychol Russia State Art 10:151–164. https://doi.org/10.11621/pir.2017.0310

Nesbitt K, Davis S, Blackmore K, Nalivaiko E (2017) Correlating reaction time and nausea measures with traditional measures of cybersickness. Displays 48:1–8. https://doi.org/10.1016/j.displa.2017.01.002

Neumann D, Moffitt R, Thomas P, Loveday K, Watling D, Lombard C, Antonova S, Tremeer M (2018) A systematic review of the application of interactive virtual reality to sport. Virtual Real. https://doi.org/10.1007/s10055-017-0320-5

Noguchi K, Gel YR, Brunner E, Konietschke F (2012) nparLD: an R software package for the nonparametric analysis of longitudinal data in factorial experiments. J Stat Softw 50(12):1–23. https://doi.org/10.18637/jss.v050.i12

Pai Y-C, Patton J (1997) Center of mass velocity-position predictions for balance control. J Biomech 30(4):347–354. https://doi.org/10.1016/S0021-9290(96)00165-0

Palmisano S, Constable R (2022) Reductions in sickness with repeated exposure to HMD-based virtual reality appear to be game-specific. Virtual Real 26(4):1373–1389

Papandreou G, Zhu T, Kanazawa N, Toshev A, Tompson J, Bregler C, Murphy K (2017) Towards accurate multi-person pose estimation in the wild. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR)

Papandreou G, Zhu T, Chen L-C, Gidaris S, Tompson J, Murphy K (2018) PersonLab: person pose estimation and instance segmentation with a bottom-up, part-based, geometric embedding model. In: Computer vision – ECCV 2018: 15th European conference, Munich, Germany, September 8–14, 2018, proceedings, part XIV, pp 282–299. https://doi.org/10.1007/978-3-030-01264-9_17

Park SH, Han B, Kim GJ (2022) Mixing in reverse optical flow to mitigate vection and simulation sickness in virtual reality. In: CHI conference on human factors in computing systems, pp 1–11. https://doi.org/10.1145/3491102.3501847

Pastel S, Petri K, Chen C-H, Cáceres A, Stirnatis M, Nübel C, Schlotter L (2022) Training in virtual reality enables learning of a complex sports movement. Virtual Real 27:3. https://doi.org/10.1007/s10055-022-00679-7

Pausch R, Proffitt D, Williams G (1997) Quantifying immersion in virtual reality. In: Proceedings of the 24th annual conference on computer graphics and interactive techniques. SIGGRAPH ’97. ACM Press/Addison-Wesley Publishing Co., USA, pp 13–18. https://doi.org/10.1145/258734.258744

Peng Y-H, Yu C, Liu S-H, Wang C-W, Taele P, Yu N-H, Chen MY (2020) WalkingVibe: reducing virtual reality sickness and improving realism while walking in VR using unobtrusive head-mounted vibrotactile feedback. In: Proceedings of the 2020 CHI conference on human factors in computing systems, pp 1–12

Peterka RJ (2018) Sensory integration for human balance control. Handb Clin Neurol 159:27–42. https://doi.org/10.1016/B978-0-444-63916-5.00002-1

Prasertsakul T, Kaimuk P, Chinjenpradit W, Limroongreungrat W, Charoensuk W (2018) The effect of virtual reality-based balance training on motor learning and postural control in healthy adults: a randomized preliminary study. BioMedical Eng OnLine 17:52. https://doi.org/10.1186/s12938-018-0550-0

Rasool J, George K (2007) The impact of single-leg dynamic balance training on dynamic stability. Phys Ther Sport 8(4):177–184. https://doi.org/10.1016/j.ptsp.2007.06.001

Rebenitsch L, Owen C (2016) Review on cybersickness in applications and visual displays. Virtual Real 20(2):101–125. https://doi.org/10.1007/s10055-016-0285-9

Riccio GE, Stoffregen TA (1991) An ecological theory of motion sickness and postural instability. Ecol Psychol 3(3):195–240. https://doi.org/10.1207/s15326969eco0303_2

Rine RM, Schubert MC, Balkany TJ (1999) Visual-vestibular habituation and balance training for motion sickness. Phys Ther 79(10):949–957. https://doi.org/10.1093/ptj/79.10.949

Ritter Y, Bürger D, Pastel S, Sprich M, Lück T, Hacke M, Stucke C, Witte K (2023) Gymnastic skills on a balance beam with simulated height. Hum Mov Sci 87:103023. https://doi.org/10.1016/j.humov.2022.103023

Smart JLJ, Stoffregen TA, Bardy BG (2002) Visually induced motion sickness predicted by postural instability. Hum Factors 44(3):451–465. https://doi.org/10.1518/0018720024497745

Smither JA-A, Mouloua M, Kennedy R (2008) Reducing symptoms of visually induced motion sickness through perceptual training. Int J Aviat Psychol 18(4):326–339

So R, Cheung R, Chow E, Li J, Lam A (2007) Final program and proceedings of the first international symposium on visually induced motion sickness, fatigue, and photosensitive epileptic seizures (vims2007)

Stanney K, Hale K, Nahmens I, Kennedy R (2003) What to expect from immersive virtual environment exposure: influences of gender, body mass index, and past experience. Hum Factors 45:504–520. https://doi.org/10.1518/hfes.45.3.504.27254

Stoffregen T, Faugloire E, Yoshida K, Flanagan M, Merhi O (2008) Motion sickness and postural sway in console video games. Hum Factors 50:322–331. https://doi.org/10.1518/001872008X250755

Szczerbik E, Kalinowska M, Swiecicka A, Graff K, Syczewska M (2021) The impact of two weeks of traditional therapy supplemented with virtual reality on balance control in neurologically-impaired children and adolescents. J Bodyw Mov Ther 28:513–520. https://doi.org/10.1016/j.jbmt.2021.09.007

Tian N, Lopes P, Boulic R (2022) A review of cybersickness in head-mounted displays: raising attention to individual susceptibility. Virtual Real 26(4):1409–1441

Tuveri E, Macis L, Sorrentino F, Spano LD, Scateni R (2016) Fitmersive games: fitness gamification through immersive VR. In: Proceedings of the international working conference on advanced visual interfaces. AVI ’16. Association for Computing Machinery, New York, NY, USA, pp 212–215. https://doi.org/10.1145/2909132.2909287

Uzunkulaoğlu A, Yıldırım İB, Aytekin MG, Ay S (2019) Effect of flamingo exercises on balance in patients with balance impairment due to senile osteoarthritis. Arch Gerontol Geriatr 81:48–52. https://doi.org/10.1016/j.archger.2018.10.012

Venkatakrishnan R, Venkatakrishnan R, Raveendranath B, Sarno DM, Robb AC, Lin W-C, Babu SV (2023) The effects of auditory, visual, and cognitive distractions on cybersickness in virtual reality. IEEE Trans Visual Comput Graph 30(8):1–16. https://doi.org/10.1109/TVCG.2023.3293405

Weech S, Kenny S, Barnett-Cowan M (2019) Presence and cybersickness in virtual reality are negatively related: a review. Front Psychol 10:158. https://doi.org/10.3389/fpsyg.2019.00158

Wienrich C, Weidner CK, Schatto C, Obremski D, Israel JH (2018) A virtual nose as a rest-frame: the impact on simulator sickness and game experience. In: 2018 10th international conference on virtual worlds and games for serious applications (VS-Games), pp 1–8. https://doi.org/10.1109/VS-Games.2018.8493408

Wittinghill D, Ziegler B, Moore J, Case T (2015) Nasum virtualis: a simple technique for reducing simulator sickness. In: 2015 games developers conference (GDC)

Yang U, Kim GJ (2002) Implementation and evaluation of “just follow me”: an immersive, VR-based, motion-training system. Presence 11(3):304–323. https://doi.org/10.1162/105474602317473240

Yang Y, Kim H, Kim GJ (2023) The effects of customized strategies for reducing VR sickness. In: Proceedings of the 29th ACM symposium on virtual reality software and technology. Association for Computing Machinery, New York, NY, USA, pp 1–2. https://doi.org/10.1145/3611659.3617208

Acknowledgements

This work was supported by the IITP/ITRC Program (IITP-2022-RS-2022-00156354), KIAT/KEA Competency Development Program (N0009999), and NRF Korea BRL Program (2022R1A4A1018869).

Author information

Contributions

Seonghoon Kang proposed the main idea of the paper, designed the methodology, conducted user experiments, performed statistical analysis, and wrote the paper. Yechan Yang and Minchae Kim conducted user experiments. Gerard Jounghyun Kim proposed the main idea, designed the methodology, wrote the paper, and provided guidance. Hanseob Kim designed the methodology, conducted user experiments, performed statistical analysis, provided guidance, and managed the overall progress of the paper.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethics approval

The experiment was approved by the Institutional Review Board (IRB No. 2023-0143-01 and No. 2023-0296-02).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Supplementary file 2 (mp4 39349 KB)

Appendix 1: Descriptive and statistical results using visual SSQ

Physical challenges arising from the balancing act may resemble certain CS symptoms measured by the SSQ, such as the “disorientation” experienced when attempting to stand on one foot. We acknowledge the inherent difficulty in objectively and accurately identifying the sources of CS symptoms and any potential interaction between them. This complexity is compounded by the fact that participants were permitted to attribute a symptom to both visual stimulation and balance exercises simultaneously. To address this issue, two additional versions of the “Original” SSQ, referred to as the “Visual” and “Balance” SSQs, were prepared. These versions aim to help participants more effectively distinguish and report whether their CS symptoms were caused by visual stimulation, balance training, or a combination of both.

To verify the effects of balance training, the main text reports the statistical analysis of the Original SSQ, which is the more thoroughly validated instrument. This appendix presents the results of the separately prepared questionnaires. Because it is uncommon for people to suffer severe sickness-like symptoms solely from performing balance exercises, only the descriptive results and statistical analysis of the “Visual” SSQ are reported.

Appendix 1.1: Experiment 1: VRT versus VRO versus 2DT

The first experiment employed a mixed design, where the between-subject factor was the training method, including the VRT, VRO, and 2DT conditions, and the within-subject factor was time, comparing the first and last days of each week. The experiment spanned two weeks, with the first week consisting of 4 days of training and the second week consisting of 5 days of training. The collected results are summarized in Table 12.

Statistical analyses were conducted separately for each week. To evaluate the effects of each factor, the nparLD method was used. For post hoc tests, the Kruskal–Wallis test was applied to between-subject factors, while the Wilcoxon signed-rank test was used for within-subject factors. All analyses applied Bonferroni corrections, with a significance level set at 5%. The results are summarized in Table 13.
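As an illustration of the Bonferroni step only, the following minimal Python sketch adjusts a set of raw p-values and compares them against the 5% level. It assumes, for the sake of the example, that the correction is applied across the three pairwise comparisons among the conditions; the source does not state the exact family of comparisons. The function name `bonferroni_adjust` and the raw p-values are hypothetical and are not results from the study.

```python
from itertools import combinations


def bonferroni_adjust(p_values):
    """Multiply each raw p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# The three training conditions of Experiment 1.
conditions = ["VRT", "VRO", "2DT"]
# Assumption: one test per pair of conditions (three comparisons).
pairs = list(combinations(conditions, 2))

# Hypothetical raw p-values, one per pair; NOT results from the study.
raw_p = [0.010, 0.030, 0.400]
adjusted = bonferroni_adjust(raw_p)

alpha = 0.05  # significance level stated in the text
for (a, b), p_raw, p_adj in zip(pairs, raw_p, adjusted):
    verdict = "significant" if p_adj < alpha else "not significant"
    print(f"{a} vs {b}: raw p = {p_raw:.3f}, adjusted p = {p_adj:.3f} ({verdict})")
```

Adjusting the p-values is equivalent to keeping them unchanged and dividing the significance level by the number of comparisons; the adjusted form is used here because it keeps a single threshold throughout.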

Appendix 1.2: Experiment 2: VRT versus VRO

The second experiment also employed a mixed design; however, unlike Experiment 1, the between-subject factor (training method) included only the VRT and VRO conditions. The within-subject factor compared the first and last days of the week. This experiment was conducted over one week, spanning 5 days. The collected data are summarized in Table 14.

For statistical analysis, the nparLD method was used to assess the effect of each factor. The Mann–Whitney U test was applied for the between-subject factor, while the Wilcoxon signed-rank test was used for the within-subject factor. The remaining analysis procedures followed the same approach as in Experiment 1. Statistical results are summarized in Table 15.
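To make the between-subject comparison concrete, the sketch below computes the Mann–Whitney U statistic for two independent groups from pooled ranks, giving tied scores the mean of their ranks. It is an illustrative pure-Python version and not the authors' analysis code; the helper names and the scores are hypothetical, and the p-value computation is omitted.

```python
def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return (U_a, U_b) for two independent samples."""
    ranks = average_ranks(list(group_a) + list(group_b))
    n_a, n_b = len(group_a), len(group_b)
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return u_a, u_b


# Hypothetical SSQ-like scores; NOT data from the study.
vrt = [11.2, 18.7, 7.5, 22.4, 14.9]
vro = [29.9, 33.7, 18.7, 41.1, 26.2]
u_vrt, u_vro = mann_whitney_u(vrt, vro)
print(f"U(VRT) = {u_vrt}, U(VRO) = {u_vro}")
```

Here U(VRT) counts the pairs in which a VRT score exceeds a VRO score, with ties counted as one half, so a small value indicates that the VRT scores tend to be lower than the VRO scores.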

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yang, Y., Kang, S., Kim, M. et al. BalanceVR: balance training to increase tolerance to cybersickness in immersive virtual reality. Virtual Reality 29, 39 (2025). https://doi.org/10.1007/s10055-024-01097-7

DOI: https://doi.org/10.1007/s10055-024-01097-7