Abstract

With the rapid advancement of innovative technologies and the increasing demand for the Metaverse, accurately replicating the real world in virtual environments is becoming crucial. When static spatial distortions are combined with motion, they produce dynamic spatial distortions, which are known to contribute to visually induced motion sickness (VIMS) symptoms. Accurately quantifying these distortions is essential for optimizing virtual environments toward greater realism. This study proposes an Index of Spatial Structure Similarity (ISSS) based on elastic potential energy for the objective quantification of static spatial distortions in virtual environments. This full-reference evaluation method uses perceived real-world objects as the benchmark. Systematic perception experiments were conducted to validate the proposed ISSS method, which demonstrated superior performance in evaluating perceived distortions in virtual environments compared to existing methods. Additionally, dynamic spatial distortion indexes, defined as the first and second derivatives of the ISSS with respect to time (representing velocity and acceleration of spatial distortion), were introduced. This objective quantification method considers human factors comprehensively and may help mitigate the VIMS-related effects of spatial distortions.

1 Introduction

The Metaverse, supported by technologies such as extended reality (XR), blockchain, artificial intelligence (AI), sensors, digital twins, and governance, creates a virtual universe that extends beyond the physical world (Carrión 2024; Gronowski et al. 2024). As the third wave of the internet revolution, it enables the establishment of virtual twins of the real world. Characterized by immersion, interactivity, decentralization, and persistence, the Metaverse offers new possibilities across various fields, allowing users to perform nearly all real-world activities within virtual environments (Wang et al. 2023). The growing interest in the Metaverse highlights the demand for digital platforms that offer more immersive and interactive experiences. Among the critical factors for evaluating Metaverse platforms, Virtual-Real convergence is deemed the most significant, as it blurs the boundaries between virtual and real experiences (Shin et al. 2024). Ensuring perceived spatial consistency between the virtual and real worlds is crucial for enhancing realism in the Metaverse, particularly through devices that support three-dimensional (3D) content rendering (Yaita et al. 2023; Murakami et al. 2017).

Spatial distortions in virtual environments undermine the realism of the virtual world. From the perspective of virtual 3D content perception, perceived spatial distortions are induced by three fundamental processes: acquisition, display, and perception (Xia and Hwang 2020). When the real world is converted to the virtual world, three pairs of potential mismatches may distort the reconstructed virtual world: (1) baseline length between the cameras vs. baseline length between the eyes, (2) camera field of view (FOV) vs. eye FOV, and (3) convergence distance of the 3D camera system vs. viewing distance of the 3D display system (Woods et al. 1993; Gao et al. 2018; Hwang and Peli 2019). In addition, distortions originating from camera lenses (Durgut and Maraş 2023) and display systems (Murakami et al. 2017; Bang et al. 2021) contribute to the perceived spatial distortions in virtual environments. Any parameter mismatch or two-dimensional (2D) geometric distortion may ultimately affect the reconstruction of 3D virtual space, including distance estimation (Kellner et al. 2012), depth perception (Pollock et al. 2012), and slant perception of surface shape (Tong et al. 2020). Personalized virtual environments, and interaction with them, are necessary for immersive experiences and precise applications (Valmorisco et al. 2024). When static geometric distortions are combined with motion, dynamic spatial distortions result. According to the sensory rearrangement theory, dynamic spatial distortions in the virtual world conflict with the expected stability and rigidity of the real world and ultimately cause visually induced motion sickness (VIMS) (Hwang and Peli 2014; Chang et al. 2020; Stanney et al. 2020; Mittelstaedt et al. 2024; Vlahovic et al. 2024). However, a definition of dynamic spatial distortions in virtual environments is currently lacking. Building on our previous study (Xia et al. 2023), we argue that objectively quantifying dynamic spatial distortions is important for enhancing realism in virtual environments and for eliminating the potential VIMS aggravation effect.

Dynamic spatial distortion refers to the relative distortion of objects within virtual environments during motion, and it is defined in the context of static spatial distortion. The speed at which a user moves through a virtual environment (or the speed of objects in the environment) has a direct impact on the degree of perceived dynamic spatial distortion. Faster movements tend to increase the apparent magnitude of distortion, as rapid changes in perspective or position amplify the mismatch between the visual input and the user’s spatial understanding. The direction of motion can also play a crucial role in determining the extent of dynamic spatial distortion. For instance, movements along the horizontal axis (e.g., left-right, forward-backward) may result in different types of visual distortions compared to vertical motions (e.g., up-down). Distortions might be more pronounced in some directions due to the specific visual cues involved in depth perception and perspective changes. Acceleration refers to changes in the rate of movement over time. The presence of acceleration can exacerbate spatial distortions by creating sudden shifts in perspective or object position that are more difficult for the human visual system to process.

Several metrics for evaluating point cloud distortion can be applied to define static spatial distortion. Most existing point cloud distortion evaluation methods were developed to measure geometric impairment caused by lossy compression, including point-to-point (Cignoni et al. 1998), point-to-plane (Tian et al. 2017), projection Peak Signal-to-Noise Ratio (PSNR) (Durgut and Maraş 2023), geometric PSNR (Torlig et al. 2018), and Elastic Potential Energy Similarity (EPES) (Xu et al. 2022). Given the specific requirements for objectively quantifying static spatial distortions in virtual environments, the proposed method should focus on spatial information while disregarding colors, luminance, or other image attributes. Additionally, it is crucial that the proposed objective quantification method exhibits high subjective consistency.

In this study, we propose an Index of Spatial Structure Similarity (ISSS) based on elastic potential energy to evaluate static spatial distortion, inspired by the Elastic Potential Energy Similarity (EPES) used for point cloud quality modeling. We compare the proposed method with several classical point cloud distortion evaluation techniques through systematic perception experiments. The results demonstrate that the proposed method exhibits the highest subjective consistency. Incorporating a time attribute, we define dynamic spatial distortions as the first and second derivatives of the ISSS with respect to time.

2 Spatial distortions in virtual environments

2.1 Influence factors

Dynamic spatial distortions are influenced by static spatial distortions and time. Static spatial distortions arise during 3D content acquisition, display, and perception processes (Xia and Hwang 2020). Figure 1 illustrates the fundamental processes: a real-world or computer-generated scene is captured using two parallel cameras (Fig. 1a) and then presented on a 3D display for observer perception (Fig. 1b). In this model, \(\alpha \) represents the single-camera FOV, \(\beta \) denotes the single-eye FOV, \(C_d\) is the convergence distance of the 3D camera system, \(V_d\) is the viewing distance of the 3D display system, \(b_c\) is the baseline length between cameras, and \(b_e\) is the baseline length between eyes. The virtual environment is considered distortion-free when the reconstruction process satisfies Eqs. 1 and 2.

During the processes of 3D content acquisition and display, static spatial distortions are typically caused by optical lenses (see Fig. 2). To achieve an extended FOV, stereoscopic images can be captured using a wide-angle fisheye lens 3D camera (Pockett et al. 2010). The imaging process with a wide-angle fisheye lens distorts the center of the image and shifts pixel locations, leading to barrel distortions (Lee et al. 2019) (see Fig. 2b). For 3D content presentation, near-eye Head-Mounted Displays (HMDs) use magnifying lenses to focus and position images at a desired optical distance, which results in radial distortions known as pincushion distortions (Tong et al. 2020) (see Fig. 2c). Typically, the displayed content is pre-warped to counteract barrel distortion before presentation (Martschinke et al. 2019). Both optical barrel and pincushion distortions are non-uniform and depend on the eccentricity from the optical axis. The radial distortion can be described using the simple model given by Eq. 3:

where d is the radial distance from the optical axis to a point in the original image, and D is the radial distance of the corresponding point from the optical axis in the processed image. The coefficient k determines the magnitude and type of distortion (positive for barrel distortion and negative for pincushion distortion). Despite various correction methods, insufficiently corrected 2D distortions from lenses can affect the 3D space during content perception and fusion.

Parameter mismatches during the 3D content acquisition, display, and perception processes, combined with 2D distortions introduced by optical lenses, ultimately contribute to the perceived 3D spatial distortions from the observer’s perspective. Examples of these distortions are illustrated in Fig. 3. To model spatial distortions, a simulation is conducted using a 3D point cloud representation of a horse. In the simulation, the midpoint between two cameras/eyes is used as the origin for the X and Y coordinates, with the cameras/eyes aligned along the X-axis. The Z-axis extends in front of the observer, with positive values representing distances closer to the observer. In the visual representation, the red color indicates the non-distorted original horse in the real world, while the blue color represents the distorted horse in the virtual space. Under the same mismatch conditions, increased distance in the virtual space results in more pronounced distortions.

Fig. 3 Static spatial distortion simulation. (a) Parameter mismatch simulation with single-camera FOV smaller than single-eye FOV; (b) parameter mismatch simulation with single-camera FOV larger than single-eye FOV. Red represents the non-distorted original objects, and blue represents the distorted objects

2.2 Objective quantification

The Index of Spatial Structure Similarity (ISSS), based on elastic potential energy, is proposed to objectively quantify static spatial distortions in virtual environments. This full-reference evaluation method uses perceived spatial structures from the real world as a benchmark. To assess spatial structure distortion, objects in both real and virtual environments are rendered as colorless point clouds. Although objects can be positioned anywhere within virtual environments, their location does not affect the distortion quantification. The registration of objects in virtual environments is performed using the iterative closest point (ICP) algorithm (Besl and McKay 1992) through a cost function (Eq. 4).

where \(S_r\) represents the point cloud samples from the real world, and \(S_v\) denotes the point cloud samples from the virtual environment. R is the rotation matrix obtained after registration, and T is the translation vector after registration. The point cloud samples \(S_v\) are adjusted prior to quantification through rotation and translation by minimizing the above cost function, i.e., \(\arg \min _{(R, T)} E(R, T)\).
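
The registration step can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the cost in Eq. 4 has the usual least-squares form (mean squared distance between each transformed point of \(S_v\) and its nearest point in \(S_r\)), uses brute-force nearest-neighbour matching, and solves each iteration with the SVD-based (Kabsch) closed form. The function names and the synthetic grid data are hypothetical.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares R, T such that R @ src_i + T is close to dst_i (Kabsch/SVD)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)  # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    T = dst_c - R @ src_c
    return R, T


def icp(s_v, s_r, iterations=20):
    """Register virtual samples s_v onto real samples s_r; returns R, T, cost."""
    R_total, T_total = np.eye(3), np.zeros(3)
    moved = s_v.copy()
    for _ in range(iterations):
        # Brute-force nearest neighbour in s_r for every moved point.
        d2 = ((moved[:, None, :] - s_r[None, :, :]) ** 2).sum(axis=2)
        matches = s_r[d2.argmin(axis=1)]
        R, T = best_rigid_transform(moved, matches)
        moved = moved @ R.T + T
        # Compose the incremental transform with the accumulated one.
        R_total, T_total = R @ R_total, R @ T_total + T
    d2 = ((moved[:, None, :] - s_r[None, :, :]) ** 2).sum(axis=2)
    cost = d2.min(axis=1).mean()  # assumed form of E(R, T)
    return R_total, T_total, cost


# Synthetic check: a 4x4x4 grid, slightly rotated and shifted (hypothetical data).
axis = np.arange(4) - 1.5
s_r = np.array([[x, y, z] for x in axis for y in axis for z in axis])
angle = np.radians(2.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
s_v = s_r @ R_true.T + np.array([0.1, -0.05, 0.02])

R_est, T_est, cost = icp(s_v, s_r)
print("residual cost:", cost)
```

In this toy case the estimated rotation is the inverse of the applied one, because the registration maps \(S_v\) back onto \(S_r\). For large point clouds a spatial index (for example a k-d tree) would normally replace the brute-force matching.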

As illustrated in Fig. 4, the point cloud is segmented into multiple local neighborhoods, with an origin \(P_k\) assigned as the initial aggregation point for the points within each neighborhood. Virtual springs are then defined as connections between the points and their origins. Each virtual spring has a specific elastic coefficient, which is a function of the point’s coordinates. The elastic coefficients were selected based on a balance between computational efficiency and the accuracy of distortion quantification. In the current implementation, the coefficients were empirically determined to provide a reasonable approximation of the spatial deformations in the context of virtual environments. The elastic coefficient of the spring connecting \(X_i\) and its origin \(P_k\) is defined by Eq. 5. The elastic work required to move \(X_i\) to its final destination is expressed by Eq. 6, where l represents the elongation of the spring.

Based on the above definition, the total elastic potential energy for both the real-world and virtual environment objects can be computed by hypothetically moving all points within the point cloud. For two sets of local neighborhoods from point cloud samples of the real world and the virtual environment, the total elastic potential energy can be calculated using Eqs. 7 and 8, respectively.
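
The energy accumulation described above can be illustrated with a short sketch. It assumes that the elastic work in Eq. 6 follows the Hooke's-law form \(\frac{1}{2} k l^2\); because the coefficient definition of Eq. 5 is not reproduced in this text, the coefficient is passed in as a caller-supplied function, and `unit_coefficient` is only a hypothetical placeholder. The neighborhoods and coordinates are toy values.

```python
import math


def spring_energy(point, origin, coefficient):
    """Work needed to stretch a virtual spring from `origin` to `point`."""
    elongation = math.dist(point, origin)  # l in the text
    return 0.5 * coefficient(point, origin) * elongation ** 2


def total_energy(neighborhoods, coefficient):
    """Sum spring energies over all (origin, points) local neighborhoods."""
    return sum(
        spring_energy(p, origin, coefficient)
        for origin, points in neighborhoods
        for p in points
    )


def unit_coefficient(point, origin):
    """Hypothetical stand-in for Eq. 5: every spring has stiffness 1."""
    return 1.0


# One neighborhood per cloud; the virtual copy is stretched along X (toy data).
real = [((0.0, 0.0, 0.0), [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)])]
virtual = [((0.0, 0.0, 0.0), [(2.0, 0.0, 0.0), (0.0, 1.0, 0.0)])]

e_real = total_energy(real, unit_coefficient)
e_virtual = total_energy(virtual, unit_coefficient)
print(e_real, e_virtual)
```

The stretched virtual cloud stores more energy than the real one, and the gap between the two totals is the quantity a similarity index can then compare.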

Finally, the total ISSS can be calculated using the method outlined in Eq. 9.

where \(E_{S_r}\) is the total elastic potential energy of the point cloud samples in the real world; \(E_{S_v}\) is the total elastic potential energy of the point cloud samples in the perceived virtual environment; \(\rho \) is a very small positive constant used to prevent zero denominators.

3 Experimental verification

3.1 Experiment setup

To validate the proposed method for evaluating static spatial distortions in virtual environments, a perception experiment was conducted. Six 3D point clouds (Fig. 5) were selected, and ten distorted versions of each point cloud were simulated by varying types and levels of mismatches. The 20-second video stimuli were generated by employing a virtual 3D camera within Unreal Engine (Version 4) by Epic Games, which captured the point cloud models from a dynamic perspective. Specifically, the virtual camera was programmed to revolve around each point cloud along a circular trajectory, completing a full 360-degree orbit in exactly 20 s. This setup ensured a consistent and uniform observation of the spatial characteristics of the point clouds from all angles. The camera movement was carefully calibrated to maintain a constant angular velocity, providing a smooth and stable viewing experience. Additionally, lighting and environmental settings in Unreal Engine were optimized to enhance the visibility of spatial distortions and ensure that the videos accurately represented the point cloud data under evaluation. A total of sixty such videos were created, each showcasing the point cloud from this rotating perspective to facilitate comprehensive analysis and perception-based evaluation.
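
As a rough illustration of the camera path, a full 360-degree orbit in 20 s corresponds to a constant angular velocity of 18 degrees per second. The sketch below computes positions on such a circular trajectory; the orbit radius, the Y-up axis convention, and the function name are assumptions made for the example and are not taken from the Unreal Engine setup.

```python
import math

ORBIT_PERIOD_S = 20.0  # one full revolution, as stated in the text


def camera_position(t, radius, height=0.0):
    """Camera position at time t (seconds) on a circular orbit around the origin."""
    angle = 2.0 * math.pi * t / ORBIT_PERIOD_S
    # Assumed convention: Y is up, the orbit lies in the X-Z plane.
    return (radius * math.cos(angle), height, radius * math.sin(angle))


angular_velocity_deg = 360.0 / ORBIT_PERIOD_S
print(angular_velocity_deg)          # degrees per second
print(camera_position(5.0, 2.0))     # a quarter of the way around
```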

The parallel camera configuration was used to ensure linearity during content acquisition, with the 3D camera system encircling the paired point clouds to produce the videos. The perception experiment was divided into two parts that differed in how the stimuli were designed. In Experiment Part I, the stimuli were identical for all participants and were generated with a default inter-pupillary distance (IPD) of 63 mm; because IPDs vary among participants, the perceived distortion levels differed. Consequently, subjective scores were adjusted based on each participant’s specific IPD. In Experiment Part II, stimuli were customized to each participant’s IPD, so that the 3D content presented was tailored to individual visual perception.

Since the comfortable monocular horizontal FOV of the human eye is \({60}^\circ \) (Marieb and Hoehn 2022), the single-eye FOV in this experiment was set to \({60}^\circ \). A \({60}^\circ \) single-eye FOV is commonly used in virtual reality (VR) and perceptual studies, as it represents a moderate view angle that balances visual immersion and practical experimental control. To minimize distortion caused by the display, a desktop polarized stereoscopic display (PHILIPS, 278G4DHSD) with a 27-inch screen and a resolution of 1920\(\times \)1080 pixels was used. Participants were seated at a distance of approximately 1.54 times the display height so that the single-eye FOV remained around \({60}^\circ \) (see Fig. 6). The sequence of the sixty stimuli was randomized for each participant. Participants were instructed to view each 20-second stimulus and then rate the perceptual distortion level based on the differences between the paired point clouds, using a five-grade impairment scale (ITU-R BT.500-15 2023) as detailed in Table 1.
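
The 1.54 factor can be checked with simple geometry. The sketch below assumes a 16:9 aspect ratio (inferred from the 1920\(\times \)1080 resolution with square pixels) and that the \({60}^\circ \) horizontal FOV is subtended by the full screen width; the function name is hypothetical.

```python
import math


def viewing_distance_in_heights(fov_deg, aspect_ratio):
    """Distance, in display heights, at which the screen width subtends fov_deg."""
    half_width = aspect_ratio / 2.0  # display height is taken as 1
    return half_width / math.tan(math.radians(fov_deg) / 2.0)


ratio = viewing_distance_in_heights(60.0, 16.0 / 9.0)
print(round(ratio, 2))
```

Under these assumptions the ratio comes out at about 1.54 display heights, in line with the seating distance reported above.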

Twenty-one participants, including seven females, volunteered for the experiment. Their ages ranged from 21 to 30 years, with a mean age of 24 years. All participants had either normal or corrected-to-normal visual acuity (20/20 or better, as measured by the Test Chart 2000 Pro, Thomson Software Solutions, London) and normal stereo acuity (\(\le 70''\)), as assessed with a clinical stereo vision testing chart (Stereo Optical Co., Inc., Chicago, IL). The experimental procedure adhered strictly to the Declaration of Helsinki. Prior to the experiment, participants completed an Informed Consent Form and were informed of their right to withdraw from the study at any time.

3.2 Result

The results of Experiment Part I and Experiment Part II were analyzed separately. In Experiment Part I, the designed stimuli were consistent across all participants, but varying Inter-Pupillary Distances (IPDs) among participants resulted in different perceived distortion levels. Consequently, subjective scores were adjusted based on each participant’s specific IPD information. Following the virtual space acquisition, display, and perception model proposed by Xia and Hwang (2020), the adjustment was performed by fitting the relationship between objective evaluation metrics and subjective ratings for each participant. This model accounts for the participant’s IPD and recalculates their subjective ratings under conditions where the distance between the stereoscopic cameras matches their IPD. The subjective distortion scores from all participants were then aligned with the same objective distortions for evaluation. In contrast, in Experiment Part II, the designed stimuli were customized based on each participant’s IPD, ensuring that the subjective scores could be used directly without further adjustments. To assess the consistency between subjective and objective performances, three performance indicators were utilized: Pearson Linear Correlation Coefficient (PLCC), Spearman Rank-Order Correlation Coefficient (SROCC), and Root Mean Square Error (RMSE). PLCC measures the linear correlation between subjective ratings and objective distortion values, assessing the degree of agreement between subjective perception and the objective metrics. SROCC evaluates the monotonic relationship between subjective scores and objective distortion measures, providing insight into how well the rankings of the distortions match. RMSE quantifies the average deviation between subjective and objective distortion scores, providing a measure of the overall accuracy of the distortion evaluation.
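
For reference, the three indicators can be computed as in the following sketch, written in plain Python. The score lists are hypothetical illustrations, not data from the experiment, and the objective scores are assumed to have already been mapped onto the subjective rating scale before PLCC and RMSE are computed.

```python
import math


def pearson(x, y):
    """Pearson linear correlation coefficient (PLCC)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank-order correlation (SROCC): Pearson on the ranks."""
    return pearson(ranks(x), ranks(y))


def rmse(x, y):
    """Root mean square error between paired scores."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)) / len(x))


# Hypothetical scores on the five-grade scale.
predicted = [1.2, 2.1, 2.9, 4.6]   # objective metric after mapping
subjective = [1.0, 2.0, 3.0, 5.0]  # mean participant ratings

plcc = pearson(predicted, subjective)
srocc = spearman(predicted, subjective)
error = rmse(predicted, subjective)
print(plcc, srocc, error)
```

Higher PLCC and SROCC and lower RMSE indicate closer agreement between the objective metric and the subjective ratings.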

The results of both Experiment Part I and Experiment Part II are summarized in Table 2, which compares the performance of the proposed ISSS method with four existing methods. Bold text indicates the best performance, while underlined text denotes the second-best. In Experiment Part I, the ISSS method demonstrated superior performance in terms of PLCC and RMSE, and it achieved the second-best performance for SROCC. The PLCC results in Experiment Part I indicate a strong linear relationship between the subjective distortion ratings and the objective distortions, suggesting that the ISSS method effectively quantifies static distortions. The RMSE analysis showed that the ISSS method yielded the smallest deviation from the objective values, further supporting its accuracy. In Experiment Part II, the ISSS method outperformed all other methods on all three performance indicators, showing its robustness in assessing both static and dynamic spatial distortions. The SROCC results for Experiment Part II indicated that the ISSS method had the best rank-order consistency between subjective and objective evaluations, suggesting that it accurately captures the perceptual rankings of distortion severity.

Overall, the ISSS method proves advantageous for evaluating perceived distortions in virtual environments, providing a more comprehensive and reliable measure of visual consistency. Unlike other methods that typically focus on specific distortion types (e.g., geometric or texture-based distortions), the ISSS method integrates various aspects of spatial structure. The use of an elastic potential energy model in ISSS enables it to capture the interactions between virtual objects and the user’s perceptual system, offering a more nuanced representation of distortion effects. In addition, ISSS explicitly incorporates human visual perception in its evaluation process. It uses benchmarks based on real-world objects, which are aligned with human perceptual standards, resulting in more accurate and perceptually meaningful distortion measurements.

4 Quantification of dynamic spatial distortions

Visually Induced Motion Sickness (VIMS) is a psychophysiological condition triggered by motion-related visual stimuli (Zhang et al. 2016). Dynamic spatial distortions arise from static spatial distortions combined with motion characteristics. The indexes of dynamic spatial distortions are defined with respect to time. The velocity of spatial distortions captures how quickly an object’s distorted shape or position changes over time as a user navigates the virtual environment. This is particularly relevant in dynamic scenes where fast-moving objects or rapid changes in perspective can exacerbate visual discomfort. A higher rate of distortion velocity is hypothesized to cause a greater mismatch between visual cues and the user’s sense of spatial consistency, leading to increased discomfort. Acceleration refers to how the rate of distortion velocity changes over time. Rapid acceleration in spatial distortions is especially problematic in environments where objects move unpredictably or at high speeds, which is often the case in virtual environments that aim to simulate real-world dynamics. Acceleration can amplify the feeling of disorientation and increase the likelihood of VIMS as it further distorts the sensory input received by the user. Specifically, the velocity of spatial distortion \( D_v \) is the first derivative of the ISSS over time, as given in Eq. 10, and the acceleration of spatial distortion \( D_a \) is the second derivative of the ISSS over time, as shown in Eq. 11.
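
Written out from the definitions in the preceding paragraph (a reconstruction from the wording, since the typeset equations are not reproduced in this text), the two indexes take the form:

\[ D_v = \frac{\mathrm{d}\,\mathrm{ISSS}}{\mathrm{d}t}, \qquad D_a = \frac{\mathrm{d}^2\,\mathrm{ISSS}}{\mathrm{d}t^2} = \frac{\mathrm{d}D_v}{\mathrm{d}t} \]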

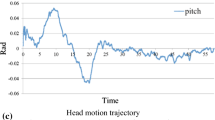

To enhance intuition, a horse modeled as a point cloud (see Fig. 3) was simulated moving at a constant speed of 5 ms per second within a distorted virtual environment. This environment was created with a mismatch in the field of view (FOV) between the camera and the eye. As shown in Fig. 7, both static and dynamic spatial distortions of the horse were quantified during its motion, with the results displayed in subplots. Solid lines depict the horse moving horizontally from left to right in front of the observer, while dotted lines illustrate vertical movement from near to far. Due to the spatial distortion characteristics of the simulated virtual environment, distortions become more pronounced as the distance increases in the \(Z\) direction, and they are symmetrical in the \(X\) and \(Y\) directions. The spatial distortions in the virtual environment appear symmetrical and change smoothly; however, the perceived asymmetry and fluctuations depicted in Fig. 7 arise from the inherent asymmetry of the quantified horse.

The fluctuations observed in the dynamic spatial distortion measurements are more pronounced compared to those in static spatial distortions. This pattern is primarily due to the fact that dynamic distortions are sensitive to changes in motion characteristics such as speed and acceleration, which introduce more variability over time. In contrast, the static spatial distortion index, which quantifies the overall spatial structure similarity, tends to exhibit smoother trends as it reflects more stable spatial relationships over time. The increased fluctuations in dynamic distortions imply that virtual environments with greater motion variability (e.g., rapid changes in motion speed or direction) may lead to stronger perceptual distortions, which are a significant factor contributing to VIMS symptoms. This suggests that mitigating VIMS in virtual environments requires controlling the rate and magnitude of dynamic distortions to maintain user comfort.
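
When the ISSS is only available as samples over time, the two dynamic indexes can be approximated numerically. The sketch below uses central finite differences; the sampling interval, the quadratic ISSS trace, and the function name are hypothetical and only serve to make the result easy to check by hand.

```python
def derivative(samples, dt):
    """Central differences in the interior, one-sided at the two ends."""
    n = len(samples)
    out = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        out.append((samples[hi] - samples[lo]) / ((hi - lo) * dt))
    return out


dt = 0.1                                   # hypothetical sampling interval (s)
isss = [(i * dt) ** 2 for i in range(11)]  # toy trace: ISSS(t) = t^2

d_v = derivative(isss, dt)  # velocity of spatial distortion, about 2t
d_a = derivative(d_v, dt)   # acceleration of spatial distortion, about 2
print(d_v[5], d_a[5])
```

Numerical differentiation amplifies small sample-to-sample variations, which is consistent with the larger fluctuations seen in the velocity and acceleration indexes than in the ISSS itself.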

Fig. 7 Simulation of the proposed objective quantification of static and dynamic spatial distortions for a horse running at a constant speed of 5 ms per second. The horse runs from near to far in the Z direction and from left to right in the X direction, respectively. (a) Index of spatial structure similarity; (b) velocity of spatial distortion; (c) acceleration of spatial distortion

5 Discussion

Virtual worlds within the Metaverse face inherent limitations in replicating the complexities of the real world. One significant challenge is the presence of perceived spatial distortions, which can greatly affect user experience, particularly leading to visually induced motion sickness (VIMS) symptoms. Addressing these distortions is crucial for enhancing the quality of virtual environments and ensuring user comfort. The proposed Index of Spatial Structure Similarity (ISSS), grounded in elastic potential energy, serves as a comprehensive evaluation method. By utilizing real-world objects as benchmarks, the ISSS allows for the objective quantification of spatial distortions. This methodology not only considers the overall spatial structure but also introduces dynamic spatial distortion indexes that account for motion speed, providing a more nuanced assessment of virtual environments.

Validation through perceptual experiments demonstrates the advantages of the ISSS method over existing techniques, particularly its ability to accurately assess perceived distortions. However, it is essential to acknowledge the limitations of the ISSS approach. Currently, the evaluation primarily focuses on the overall distortions of objects, which may overlook critical surface-level variations from the observer’s perspective. This highlights the need for further refinement to capture the subtleties of spatial distortions that directly influence user perception. Additionally, while ISSS aligns well with subjective evaluations in most cases, occasional discrepancies highlight the complexity of human perception, which is influenced by factors beyond what can be captured by objective metrics alone.

Future research should aim to integrate more detailed human factor considerations into the quantification of spatial distortions. Incorporating user feedback and subjective experiences could enhance the relevance of the ISSS method. Additionally, exploring advanced techniques such as machine learning could further improve the accuracy and adaptability of spatial distortion assessments across diverse environments. Moreover, the ISSS method holds promise for various applications beyond gaming, including training simulations and medical environments. By demonstrating its effectiveness in these contexts, we can emphasize the broader implications of our research, paving the way for enhanced user experiences in the Metaverse.

While the participants in this study all had normal binocular vision, future studies could explore the effects of spatial distortions on individuals with binocular vision imbalance (e.g., strabismus or differing refractive errors). It is possible that individuals with such vision characteristics may experience different levels of sensitivity to virtual spatial distortions, potentially leading to increased susceptibility to visually induced motion sickness (VIMS). This would be an important area for further research to assess the generalizability of our method.

This study’s findings extend beyond static and dynamic spatial distortions, offering insights that can be directly applied to the field of 3D imaging and reconstruction. By evaluating how dynamic distortions impact visual perception, we provide a framework for improving 3D model accuracy in virtual environments. This work lays the foundation for future research in the development of real-time perceptual correction algorithms, which can be integrated into 3D reconstruction pipelines to ensure a higher degree of realism and user comfort in applications across multiple domains.

6 Conclusions

With the rapid development of innovative technologies and the increasing demand for the Metaverse, accurately replicating the real world within virtual environments is crucial for enhanced realism. Dynamic spatial distortions, resulting from the integration of static spatial distortions and motion, are a known source of visually induced motion sickness (VIMS) symptoms. Effective quantification is essential for optimization. This study proposes an Index of Spatial Structure Similarity (ISSS) based on elastic potential energy for objectively quantifying static spatial distortions in virtual environments. The ISSS method, a full-reference evaluation approach, uses real-world objects as the benchmark. Systematic perception experiments were conducted to validate the method, and its performance was compared with four existing methods for measuring geometrical impairment. The consistency between subjective and objective evaluations was assessed using Pearson Linear Correlation Coefficient (PLCC), Spearman Rank-Order Correlation Coefficient (SROCC), and Root Mean Square Error (RMSE). The results demonstrate that the ISSS method excels in evaluating perceived distortions in virtual environments. Additionally, dynamic spatial distortion indexes, defined as the first and second derivatives of the ISSS with respect to time (velocity and acceleration), were introduced. The method’s consideration of human factors is critical in mitigating VIMS-related effects of spatial distortions.

References

Bang K, Jo Y, Chae M, Lee B (2021) Lenslet VR: Thin, flat and wide-Fov virtual reality display using Fresnel lens and Lenslet array. IEEE Trans Visual Comput Graphics 27:2545–2554. https://doi.org/10.1109/TVCG.2021.3067758

Besl PJ, McKay ND (1992) A method for registration of 3-d shapes. IEEE Trans Pattern Anal Mach Intell 14:239–256. https://doi.org/10.1109/34.121791

Carrión C (2024) Research streams and open challenges in the metaverse. J Supercomput 80:1598–1639. https://doi.org/10.1007/s11227-023-05544-1

Chang E, Kim HT, Yoo B (2020) Virtual reality sickness: a review of causes and measurements. Int J Human-Comput Interact 36:1658–1682. https://doi.org/10.1080/10447318.2020.1778351

Cignoni P, Rocchini C, Scopigno R (1998) Metro: measuring error on simplified surfaces. Comput Graph Forum 17:167–174. https://doi.org/10.1111/1467-8659.00236

De Queiroz RL, Chou PA (2017) Motion-compensated compression of dynamic voxelized point clouds. IEEE Trans Image Process 26:3886–3895. https://doi.org/10.1109/TIP.2017.2707807

Durgut T, Maraş EE (2023) Principles of self-calibration and visual effects for digital camera distortion. Open Geosci 15:20220552. https://doi.org/10.1515/geo-2022-0552

Gao Z, Hwang A, Zhai G, Peli E (2018) Correcting geometric distortions in stereoscopic 3d imaging. PLoS ONE 13:e0205032. https://doi.org/10.1371/journal.pone.0205032

Gronowski A, Arness DC, Ng J et al (2024) The impact of virtual and augmented reality on presence, user experience and performance of information visualisation. Virtual Reality 28:133. https://doi.org/10.1007/s10055-024-01032-w

Hwang AD, Peli E (2019) Stereoscopic three-dimensional optic flow distortions caused by mismatches between image acquisition and display parameters. J Imag Sci Technol 63:060412-1–060412-7. https://doi.org/10.2352/J.ImagingSci.Technol.2019.63.6.060412

Hwang AD, Peli E (2014) Instability of the perceived world while watching 3d stereoscopic imagery: a likely source of motion sickness symptoms. Iperception 5:515–535. https://doi.org/10.1068/i0647

ITU-R (2023) Methodologies for the subjective assessment of the quality of television images. BT.500-15

Kellner F, Bolte B, Bruder G et al (2012) Geometric calibration of head-mounted displays and its effects on distance estimation. IEEE Trans Visual Comput Graphics 18:589–596. https://doi.org/10.1109/TVCG.2012.45

Lee M, Kim H, Paik J (2019) Correction of barrel distortion in fisheye lens images using image-based estimation of distortion parameters. IEEE Access 7:45723–45733. https://doi.org/10.1109/ACCESS.2019.2908451

Marieb EN, Hoehn K (2022) Human anatomy & physiology, 12th edn. Pearson, London

Martschinke J, Martschinke J, Stamminger M, Bauer F (2019) Gaze-dependent distortion correction for thick lenses in HMDs. In: 2019 IEEE Conference on virtual reality and 3D user interfaces (VR), pp. 1848–1851

Mittelstaedt J, Huelmann G, Marggraf-Micheel C et al (2024) Cybersickness with passenger VR in the aircraft: influence of turbulence and VR content. Virtual Reality 28:112. https://doi.org/10.1007/s10055-024-01008-w

Murakami E, Oguro Y, Sakamoto Y (2017) Study on compact head-mounted display system using electro-holography for augmented reality. IEICE Trans Electr E100-C:965–971. https://doi.org/10.1587/transele.E100.C.965

Pockett L, Salmimaa M, Pölönen M, Häkkinen J (2010) The impact of barrel distortion on perception of stereoscopic scenes. In: SID Symposium digest of technical papers, pp. 526–529

Pollock B, Burton M, Kelly JW et al (2012) The right view from the wrong location: depth perception in stereoscopic multi-user virtual environments. IEEE Trans Visual Comput Graphics 18:581–588. https://doi.org/10.1109/TVCG.2012.58

Shin J, Park J, Kim H et al (2024) Evaluating metaverse platforms: status, direction, and challenges. IEEE Cons Electr Magazine. https://doi.org/10.1109/MCE.2024.3350898

Stanney K, Lawson BD, Rokers B et al (2020) Identifying causes of and solutions for cybersickness in immersive technology: reformulation of a research and development agenda. Int J Human-Comput Interact 36:1783–1803. https://doi.org/10.1080/10447318.2020.1828535

Tian D, Ochimizu H, Feng C et al (2017) Geometric distortion metrics for point cloud compression. In: IEEE International Conference on Image Processing, pp. 3460–3464

Tong J, Park M, Arney T et al (2020) Optical distortions in VR displays: addressing the differences between perceptual and optical metrics. In: 2020 IEEE Conference on virtual reality and 3D user interfaces (VR), pp. 848–855

Torlig EM, Alexiou E, Fonseca TA et al (2018) A novel methodology for quality assessment of voxelized point clouds. In: Applications of Digital Image Processing XLI. SPIE, Vol. 10752, pp. 174–190

Valmorisco S, Raya L, Sanchez A (2024) Enabling personalized VR experiences: a framework for real-time adaptation and recommendations in VR environments. Virtual Reality 28:128. https://doi.org/10.1007/s10055-024-01020-0

Vlahovic S, Skorin-Kapov L, Suznjevic M, Pavlin-Bernardic N (2024) Not just cybersickness: short-term effects of popular VR game mechanics on physical discomfort and reaction time. Virtual Reality 28:108. https://doi.org/10.1007/s10055-024-01007-x

Wang Y, Su Z, Zhang N et al (2023) A survey on metaverse: fundamentals, security, and privacy. IEEE Commun Surv Tutor 25:319–352. https://doi.org/10.1109/COMST.2022.3202047

Woods A, Docherty T, Koch R (1993) Image distortions in stereoscopic video systems. In: Proceedings of SPIE 1915, Stereoscopic Displays and Applications IV, pp. 36–49, San Jose, California, USA. SPIE

Xia Z, Hwang A (2020) Self-position awareness-based presence and interaction in virtual reality. Virtual Reality 24:255–262. https://doi.org/10.1007/s10055-019-00396-8

Xia Z, Zhang Y, Ma F et al (2023) Effect of spatial distortions in head-mounted displays on visually induced motion sickness. Opt Express 31:1737–1754. https://doi.org/10.1364/OE.478455

Xu Y, Yang Q, Yang L, Hwang JN (2022) EPES: point cloud quality modeling using elastic potential energy similarity. IEEE Trans Broadcast 68:33–42. https://doi.org/10.1109/TBC.2021.3114510

Yaita M, Shibata Y, Ishinabe T, Fujikake H (2023) Suppression effect of randomly-disturbed LC alignment fluctuation on speckle noise for electronic holography imaging. IEICE Trans Electr E106-C:26–33. https://doi.org/10.1587/transele.2022DII0003

Zhang LL, Wang JQ, Qi RR et al (2016) Motion sickness: current knowledge and recent advance. CNS Neurosci Therapeut 22:15–24. https://doi.org/10.1111/cns.12468

Acknowledgements

This research is supported by the National Natural Science Foundation of China (No. 62002254) and the Natural Science Foundation of Jiangsu Province, China (No. BK20200988). The authors are grateful to the volunteers for participating in the perception experiments.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Xia, Z., Han, Q., Zhang, Y. et al. Objective quantification of dynamic spatial distortions for enhanced realism in virtual environments. Virtual Reality 29, 30 (2025). https://doi.org/10.1007/s10055-025-01110-7

DOI: https://doi.org/10.1007/s10055-025-01110-7