Abstract

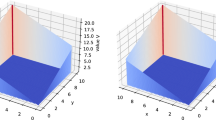

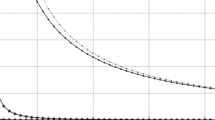

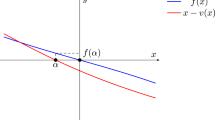

Stochastic optimization/approximation algorithms are widely used to recursively estimate the optimum of a function, or its root, from noisy observations when that optimum or root is constant or evolves randomly along slowly time-varying continuous sample paths. In contrast, this paper analyzes the asymptotic properties of such algorithms when the optimum or root evolves rapidly with nonsmooth (jump-changing) sample paths. The resulting problem falls into the category of regime-switching stochastic approximation algorithms with two time scales, and is motivated by emerging applications in wireless communications and system identification. Our analysis assumes that the noisy observations contain a (nonsmooth) jump process modeled by a discrete-time Markov chain whose transitions occur on a much faster time scale than the adaptation rate of the stochastic optimization algorithm. Using stochastic averaging, we prove convergence of the algorithm. The rate of convergence is obtained via bounds on the estimation errors and diffusion approximations. Remarks on improving the convergence rate through iterate averaging, and on limit mean dynamics represented by differential inclusions, are also presented.
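The two-time-scale averaging effect described in the abstract can be illustrated with a toy scalar simulation. This is a minimal sketch under stated assumptions, not the algorithm or model analyzed in the paper: the two-state transition matrix `P`, the per-regime roots `roots`, the linear observation `roots[state] - theta + noise`, the constant step size `mu`, and the tail average used as a stand-in for iterate averaging are all hypothetical choices made for illustration. Because the chain jumps at every iteration while the iterate moves only by order `mu`, the iterate should settle near the root averaged under the chain's stationary distribution.

```python
import numpy as np

def stationary_distribution(P):
    """Return pi with pi @ P = pi, from the left eigenvector for eigenvalue 1."""
    eigvals, eigvecs = np.linalg.eig(P.T)
    idx = np.argmin(np.abs(eigvals - 1.0))
    pi = np.real(eigvecs[:, idx])
    return pi / pi.sum()  # normalizing by the sum also fixes the sign

def track_switching_root(P, roots, mu=0.01, n_steps=40_000, noise_std=0.5, seed=0):
    """Constant-step stochastic approximation with a fast Markov-switching root.

    Returns the final iterate and the average of the iterates over the
    second half of the run (a simple illustration of iterate averaging).
    """
    rng = np.random.default_rng(seed)
    n_states = len(roots)
    state, theta = 0, 0.0
    burn_in = n_steps // 2
    tail_sum = 0.0
    for n in range(n_steps):
        # Fast time scale: the regime may jump at every iteration.
        state = rng.choice(n_states, p=P[state])
        # Noisy observation whose root is the current regime's value (assumed form).
        obs = (roots[state] - theta) + noise_std * rng.standard_normal()
        # Slow time scale: small constant step size mu.
        theta += mu * obs
        if n >= burn_in:
            tail_sum += theta
    return theta, tail_sum / (n_steps - burn_in)

# Hypothetical example values.
P = np.array([[0.3, 0.7],
              [0.6, 0.4]])
roots = np.array([-1.0, 2.0])

if __name__ == "__main__":
    pi = stationary_distribution(P)
    theta_final, theta_avg = track_switching_root(P, roots)
    print("stationary-averaged root:", pi @ roots)
    print("final iterate:", theta_final)
    print("tail-averaged iterate:", theta_avg)
```

In this sketch the final iterate fluctuates around the averaged root with a spread that shrinks as `mu` decreases, while the tail average is typically closer to it. The sketch says nothing about the diffusion approximations or differential-inclusion limits mentioned in the abstract.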

Additional information

Dedicated to Professor Boris Polyak on the occasion of his 70th birthday.

The research of G. Yin was supported in part by the National Science Foundation under DMS-0603287, in part by the National Security Agency under MSPF-068-029, and in part by the National Natural Science Foundation of China under #60574069. The research of C. Ion was supported in part by the Wayne State University Rumble Fellowship. The research of V. Krishnamurthy was supported in part by NSERC (Canada).

About this article

Cite this article

Yin, G., Ion, C. & Krishnamurthy, V. How does a stochastic optimization/approximation algorithm adapt to a randomly evolving optimum/root with jump Markov sample paths. Math. Program. 120, 67–99 (2009). https://doi.org/10.1007/s10107-007-0145-1

DOI: https://doi.org/10.1007/s10107-007-0145-1

Keywords

- Stochastic approximation

- Stochastic optimization

- Nonsmooth-jump component

- Two-time scale

- Convergence and rate of convergence