Abstract

We propose a modeling and optimization framework to cast a broad range of fundamental multi-product pricing problems as tractable convex optimization problems. We consider a retailer offering an assortment of differentiated substitutable products to a population of customers that are price-sensitive. The retailer selects prices to maximize profits, subject to constraints on sales arising from inventory and capacity availability, market share goals, bounds on allowable prices and other considerations. Consumers’ response to price changes is represented by attraction demand models, which subsume the well-known multinomial logit (MNL) and multiplicative competitive interaction demand models. Our approach transforms seemingly non-convex pricing problems (both in the objective function and constraints) into convex optimization problems that can be solved efficiently with commercial software. We establish a condition which ensures that the resulting problem is convex, prove that it can be solved in polynomial time under MNL demand, and show computationally that our new formulations reduce the solution time from days to seconds. We also propose an approximation of demand models with multiple overlapping customer segments, and show that it falls within the class of demand models we are able to solve. Such mixed demand models are highly desirable in practice, but yield a pricing problem which appears computationally challenging to solve exactly.


Notes

Although the inverse attraction functions always exist, they may not have a closed form for some complex demand models. In Sect. 4.2, we show that the objective function’s derivatives can nevertheless be computed efficiently, allowing general purpose algorithms to be used.

Even in the absence of these price bounds, Assumption 1 ensures that (COP) has an optimal solution that is strictly positive in each component, provided that (COP) satisfies the convexity condition of the next section. This follows from Proposition 3, which characterizes its dual in “Appendix D”.

Because of this negation, the values of \(M\) and \(p^*\) defined below are also the negation of the corresponding values in [6]. Therefore the phase I minimization problem is unchanged, but the objective function of the phase II minimization problem is the negation of the objective of (CMNL).

References

Ahipasaoglu, S.D., Sun, P., Todd, M.J.: Linear convergence of a modified Frank-Wolfe algorithm for computing minimum-volume enclosing ellipsoids. Optim. Methods Softw. 23(1), 5–19 (2008)

Aydin, G., Porteus, E.L.: Joint inventory and pricing decisions for an assortment. Oper. Res. 56(5), 1247–1255 (2008)

Bertsekas, D.P.: Nonlinear Programming, vol. 2. Athena Scientific, Belmont (1999)

Bitran, G., Caldentey, R.: An overview of pricing models for revenue management. Manuf. Serv. Oper. Manag. 5(3), 203–229 (2003)

Boyd, J.H., Mellman, R.E.: The effect of fuel economy standards on the U.S. automotive market: an hedonic demand analysis. Transp. Res. Part A Gen. 14(5–6), 367–378 (1980)

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2004)

Brent, R.: Algorithms for Minimization without Derivatives. Prentice-Hall, Englewood Cliffs, NJ (1973)

Calafiore, G.C.: Ambiguous risk measures and optimal robust portfolios. SIAM J. Optim. 18(3), 853–877 (2007)

Cardell, N.S., Dunbar, F.C.: Measuring the societal impacts of automobile downsizing. Transp. Res. Part A Gen. 14(5–6), 423–434 (1980)

den Hertog, D., Jarre, F., Roos, C., Terlaky, T.: A sufficient condition for self-concordance, with application to some classes of structured convex programming problems. Math. Program. 69(1), 75–88 (1995)

Dong, L., Kouvelis, P., Tian, Z.: Dynamic pricing and inventory control of substitute products. Manuf. Serv. Oper. Manag. 11(2), 317–339 (2008)

Elmaghraby, W., Keskinocak, P.: Dynamic pricing in the presence of inventory considerations: research overview, current practices, and future directions. Manag. Sci. 49(10), 1287–1309 (2003)

Fourer, R., Gay, D.M., Kernighan, B.W.: A modeling language for mathematical programming. Manag. Sci. 36(5), 519–554 (1990)

Gallego, G., Stefanescu, C.: Upgrades, upsells and pricing in revenue management (2009) (Under review)

Gallego, G., Iyengar, G., Phillips, R., Dubey, A.: Managing Flexible Products on a Network. CORS Technical Report TR-2004-01 (2004)

Gallego, G., van Ryzin, G.: A multiproduct dynamic pricing problem and its applications to network yield management. Oper. Res. 45(1), 24–41 (1997)

Hanson, W., Martin, K.: Optimizing multinomial logit profit functions. Manag. Sci. 42(7), 992–1003 (1996)

Hruschka, H.: Market share analysis using semi-parametric attraction models. Eur. J. Oper. Res. 138(1), 212–225 (2002)

Liu, Q., van Ryzin, G.: On the choice-based linear programming model for network revenue management. Manuf. Serv. Oper. Manag. 10(2), 288–310 (2008)

McFadden, D.: Conditional logit analysis of qualitative choice behavior. In: Zarembka, P. (ed.) Frontiers in Econometrics, pp. 105–142. Academic Press, New York (1974)

McFadden, D., Train, K.: Mixed MNL models for discrete response. J. Appl. Econom. 15, 447–470 (2000)

Miranda Bront, J.J., Mendez-Diaz, I., Vulcano, G.: A column generation algorithm for choice-based network revenue management. Oper. Res. 57(3), 769–784 (2009)

Nakanishi, M., Cooper, L.G.: Simplified estimation procedures for MCI models. Mark. Sci. 1(3), 314–322 (1982)

Schaible, S.: Parameter-free convex equivalent and dual programs of fractional programming problems. Math. Methods Oper. Res. 18, 187–196 (1974)

Schön, C.: On the product line selection problem under attraction choice models of consumer behavior. Eur. J. Oper. Res. 206(1), 260–264 (2010)

Shanno, D.F., Vanderbei, R.J.: Interior-point methods for nonconvex nonlinear programming: orderings and higher-order methods. Math. Program. 87(2), 303–316 (2000)

Song, J.S., Xue, Z.: Demand management and inventory control for substitutable products. Working paper (2007)

Talluri, K.T., van Ryzin, G.J.: An analysis of bid-price controls for network revenue management. Manag. Sci. 44(11-Part-1), 1577–1593 (1998)

Talluri, K.T., van Ryzin, G.J.: The Theory and Practice of Revenue Management. Springer, Berlin (2004)

Urban, G.L.: A mathematical modeling approach to product line decisions. J. Mark. Res. 6(1), 40–47 (1969)

Vanderbei, R.J., Shanno, D.F.: An interior-point algorithm for nonconvex nonlinear programming. Comput. Optim. Appl. 13(1), 231–252 (1999)

Acknowledgments

The authors would like to thank the Associate Editor and two anonymous referees for their valuable comments, which helped improve both the content and exposition of this work. Preparation of this paper was partially supported, for the second author, by grants CMMI-0846554 (CAREER Award) and DMS-0732175 from the National Science Foundation, AFOSR awards FA9550-08-1-0369 and FA9550-08-1-0369, an SMA grant and the Buschbaum Research Fund from MIT; and, for the third author, by the CMMI-0758061 Award, the EFRI-0735905 Award and the CMMI-0824674 Award from the National Science Foundation and an SMA grant.


Appendices

Appendix A: Approximation for multiple customer segments

Proof of Theorem 4

Assumption 1 holds since (i) the sum of decreasing functions is decreasing and the sum of differentiable functions is differentiable, and (ii) the limit of a finite sum is the sum of the limits.

Since \(\sum _{\ell } \gamma _\ell = 1\) by the choice of coefficients, we can rewrite

where we use fact (5) in the fourth equality. The ratios appearing in the last expression can be expressed as

where \(d_0^\ell (\varvec{x^0}) < 1\). Then, using assumption (26), we obtain

Note that the lower bound is non-negative by the definition of \(\mathcal X \). Since \(\sum _{\ell } \varGamma _\ell = 1\), we obtain from (30)

Defining the shorthand \(a=B \Vert \varvec{x} -\varvec{x^0} \Vert _1<1\), notice that

The statement regarding the profit function follows immediately if the prices are also positive, by bounding each term of \(\bar{\varPi }(\varvec{x})\) individually. \(\square \)

Proof of Proposition 2

The quantities in the statement are obtained by summing

with the non-zero elements of the terms \(\nabla \varPi _i(\varvec{\theta })\) and \(\nabla ^2 \varPi _i(\varvec{\theta })\) given in (14) and (15). (By a slight abuse of notation, we now consider the terms \(\varPi _i(\varvec{\theta })\) to be functions of the entire market share vector \(\varvec{\theta }\) instead of only the variables \(\theta _0\) and \(\theta _i\) on which they each depend.) Then, we substitute in \(f_i(x_i)\) and its first and second derivatives using (16) and (17). \(\square \)

Appendix B: Background on attraction demand models

The class of attraction demand models subsumes a number of important customer choice models by retaining only their fundamental properties. Namely, the form of the demands (1) ensures that they are positive and sum to one. A related feature is the well-known independence of irrelevant alternatives (IIA) property, which implies that the demand lost from increasing the price of one product is distributed to the other alternatives in proportion to their initial demands.

The attraction functions \(f_i(\cdot ),i=1,\ldots ,n\) may depend on a number of product attributes in general, but we limit our attention to the effect of price. The requirements of Assumption 1 are mild. The positivity assumption and (i) imply that demand for a product is smoothly decreasing in its price but always positive. The requirement (ii) implies that the demand grows to 1 if the price is sufficiently negative, and ensures that increasing the price eventually becomes unprofitable for a seller. As we demonstrate for specific instances below, if the latter two assumptions are not satisfied, the attraction functions can be suitably modified.

Though the class of attraction demand models is very general, certain instances are well studied and admit straightforward estimation methods to calibrate their parameters. This is the case for the MNL and MCI demand models [20, 23]. On the other hand, if assumptions have been made about customers’ responses to price changes, appropriate attraction functions can be defined to model the desired behavior. Examples of this approach include the linear attraction demand model and the mixtures of attraction functions discussed in Sect. 4.

B.1 The multinomial logit (MNL) demand model

The MNL demand model is a discrete choice model founded on utility theory, where \(d_i(\varvec{x})\) is interpreted as the probability that a utility-maximizing consumer will elect to purchase product \(i\). The utility a customer derives from buying product \(i\) is \(U_i = V_i + \epsilon _i\), whereas making no purchase has utility \(U_0 = \epsilon _0\). The \(V_i\) terms are deterministic quantities depending on the product characteristics (including price), and the random variables \(\epsilon _i\) are independent with a standard Gumbel distribution. It can be shown that the probability of product \(i\) having the highest realized utility is then given by \(d_i(\varvec{x})\) in (1), with \(f_i(x_i)\) replaced by \(e^{V_i}\). To model the impact of pricing, we let, for each alternative \(i=1,\ldots ,n\),

$$V_i(\hat{x}_i) = \beta _{0,i} - \beta _{1,i}\hat{x}_i, \qquad (31)$$

where \(\beta _{0,i} > 0\) represents the quality of product \(i\) and \(\beta _{1,i} > 0\) determines how sensitive a customer is to its price, denoted here by \(\hat{x}_i\). When there is a population of consumers with independent utilities, the fractions \(d_i(\varvec{x})\) represent the expected portion of the population opting for each product. For ease of notation, we re-scale the true price \(\hat{x}_i\) by \(\beta _{1,i}\) to obtain the single-parameter attraction functions (2), \(f_i(x_i) = v_i e^{-x_i}\), with \(v_i = e^{\beta _{0,i}}\) and \(x_i = \beta _{1,i} \hat{x}_i\), rather than using the form of the exponents (31) directly. These functions clearly satisfy Assumption 1.
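As a minimal sketch of the utility interpretation, the Python code below evaluates the attraction demands, assuming that (1) has the standard attraction form \(d_i(\varvec{x}) = f_i(x_i)/(1+\sum _j f_j(x_j))\) with the MNL attraction \(f_i(x_i) = v_i e^{-x_i}\), and compares them with the empirical choice shares of simulated utility-maximizing customers with standard Gumbel noise. The function names and parameter values are illustrative only.

```python
import numpy as np

def mnl_attraction(x, v):
    """Single-parameter MNL attraction f_i(x_i) = v_i * exp(-x_i) on scaled prices x."""
    return v * np.exp(-x)

def attraction_demand(x, v):
    """Demands d_i(x) = f_i(x_i) / (1 + sum_j f_j(x_j)) and the no-purchase share d_0."""
    f = mnl_attraction(x, v)
    denom = 1.0 + f.sum()
    return f / denom, 1.0 / denom

def simulate_choices(beta0, beta1, x_hat, n_customers, rng):
    """Empirical choice shares when U_i = beta0_i - beta1_i * x_hat_i + eps_i and U_0 = eps_0,
    with i.i.d. standard Gumbel noise; index 0 of the result is the no-purchase option."""
    V = np.concatenate(([0.0], beta0 - beta1 * x_hat))
    eps = rng.gumbel(loc=0.0, scale=1.0, size=(n_customers, V.size))
    choices = np.argmax(V + eps, axis=1)
    return np.bincount(choices, minlength=V.size) / n_customers

# Illustrative parameters: two products, prices in original (unscaled) units.
beta0 = np.array([1.0, 0.5])
beta1 = np.array([0.8, 1.2])
x_hat = np.array([1.5, 1.0])
d, d0 = attraction_demand(beta1 * x_hat, np.exp(beta0))   # scaled model: v = e^beta0, x = beta1 * x_hat
shares = simulate_choices(beta0, beta1, x_hat, 200_000, np.random.default_rng(0))
```

With 200,000 simulated customers the empirical shares agree with the closed-form demands to roughly two decimal places.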

Parameters for the demand model used in the experiments of Sect. 6 are generated by sampling the mean linear utilities \(V_i(\hat{x}_i)\) in Eq. (31) for each product \(i\). Specifically, \(V_i(0)\) and \(V_i(x_{max})\) are chosen uniformly over \([2\sigma ,4\sigma ]\) and \([-4\sigma ,-2\sigma ]\) respectively, where \(\sigma =\pi /\sqrt{6}\) is the standard deviation of the random Gumbel-distributed customer utility terms \(\epsilon _i\). The parameters \(\beta _{0,i}\) and \(\beta _{1,i}\) are set accordingly. Recall that the mean utility of the outside alternative is fixed at \(V_0=0\). The choice of parameters thus ensures that purchasing each product is preferred with large probability when its price is set to \(0\), and that no purchase is made with large probability when the (unscaled) prices \(\hat{x}_i\) are near \(x_{max}\).
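The sampling scheme above can be sketched as follows. Assuming the mean utility (31) is linear in price, \(V_i(\hat{x}_i) = \beta _{0,i} - \beta _{1,i}\hat{x}_i\), the two sampled utilities determine \(\beta _{0,i} = V_i(0)\) and \(\beta _{1,i} = (V_i(0) - V_i(x_{max}))/x_{max}\). The value of \(x_{max}\) is not given here, so it is left as a parameter; the function name and the use of NumPy's random generator are illustrative assumptions.

```python
import numpy as np

SIGMA = np.pi / np.sqrt(6.0)  # standard deviation of a standard Gumbel variable

def sample_mnl_parameters(n, x_max, rng):
    """Draw V_i(0) ~ U[2σ, 4σ] and V_i(x_max) ~ U[-4σ, -2σ] for n products and return
    (beta0, beta1) of the assumed linear utility V_i(x) = beta0_i - beta1_i * x."""
    v_zero = rng.uniform(2.0 * SIGMA, 4.0 * SIGMA, size=n)
    v_max = rng.uniform(-4.0 * SIGMA, -2.0 * SIGMA, size=n)
    beta0 = v_zero                      # intercept: utility at price 0
    beta1 = (v_zero - v_max) / x_max    # slope: positive price sensitivity
    return beta0, beta1

rng = np.random.default_rng(1)
beta0, beta1 = sample_mnl_parameters(n=10, x_max=100.0, rng=rng)
v = np.exp(beta0)  # attraction weights v_i of the scaled model
```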

B.2 The multiplicative competitive interaction (MCI) demand model

Another common choice is the Cobb-Douglas attraction function \( \hat{f}_i(x_i) = \alpha _i x_i^{-\beta _i}, \) with parameters \(\alpha _i>0\) and \(\beta _i>1\), which yields the multiplicative competitive interaction (MCI) model. Since this attraction is not defined for negative prices, we use its linear extension below a small price \(\epsilon \). Let

This is a mathematical convenience, since one would expect problems involving MCI demand to enforce positivity of the prices. The approximation can be made arbitrarily precise by reducing \(\epsilon \).
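The displayed definition (32) is not reproduced in this section. A natural reading of "linear extension below \(\epsilon \)", assumed in the sketch below rather than taken from (32), is the tangent line of the Cobb-Douglas attraction at \(\epsilon \). It keeps the attraction positive, strictly decreasing and continuously differentiable, and it coincides with \(\alpha _i x^{-\beta _i}\) for all \(x \ge \epsilon \).

```python
import numpy as np

def mci_attraction(x, alpha, beta, eps=1e-3):
    """Cobb-Douglas attraction alpha * x**(-beta) for x >= eps; below eps it is continued by
    its tangent line at eps (assumed form of the extension), which stays positive and decreasing."""
    x = np.asarray(x, dtype=float)
    f_eps = alpha * eps ** (-beta)                        # value at the switch point
    slope = -alpha * beta * eps ** (-beta - 1.0)          # derivative at the switch point
    power_part = alpha * np.maximum(x, eps) ** (-beta)    # clipped to avoid powers of x <= 0
    return np.where(x >= eps, power_part, f_eps + slope * (x - eps))
```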

B.3 The linear attraction demand model

This demand model approximates a linear relationship between prices and demands, while ensuring that the demands remain positive and sum to less than one. The attraction function for the \(i\)th product is \( \hat{f}_i(x_i) = \alpha _i - \beta _i x_i, \) with parameters \(\alpha _i,\beta _i > 0\). An appropriate extension is needed to ensure positivity. For instance, by choosing the upper bound \(\bar{x}_i = \alpha _i/\beta _i-\epsilon \), the following attraction function satisfies our assumptions:
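The extension (33) is not shown here. The proof of Corollary 1 indicates that above \(\overline{x}_i\) the attraction behaves like an MNL attraction, so the sketch below assumes an exponential tail \(\beta _i \epsilon \, e^{-(x - \overline{x}_i)/\epsilon }\), chosen to match both the value \(\beta _i\epsilon \) and the slope \(-\beta _i\) of the line at \(\overline{x}_i\). This is one consistent choice, not necessarily the one used in (33).

```python
import numpy as np

def linear_attraction(x, alpha, beta, eps=1e-2):
    """alpha - beta * x up to x_bar = alpha / beta - eps, then an exponential (MNL-type) tail
    beta * eps * exp(-(x - x_bar) / eps) matching value and slope at x_bar (assumed form)."""
    x = np.asarray(x, dtype=float)
    x_bar = alpha / beta - eps
    # np.maximum keeps the exponent non-positive, so the unused branch never overflows
    tail = beta * eps * np.exp(-np.maximum(x - x_bar, 0.0) / eps)
    return np.where(x <= x_bar, alpha - beta * x, tail)
```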

Appendix C: Pricing under attraction demand models

C.1 Non-convexity of the naive pricing problem under MNL demand

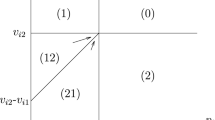

This section illustrates why the pricing problem (P) is difficult to solve directly in terms of prices, as claimed in Sect. 2. Figure 1 shows the profit as a function of the prices under an MNL demand model when the number of products is \(n=1\) and \(n=2\). The dashed line in the first plot shows the demand as a function of the price. The profit function is not concave even for a single product. With multiple products, the upper level sets of the objective are not convex, i.e., the objective is not even quasi-concave.
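The single-product claim can be checked numerically. In this sketch the unit cost is assumed to be zero and \(x\) is the scaled price, so the profit is \(x\,d(x)\) with \(d(x) = v e^{-x}/(1+v e^{-x})\). Its second difference is negative near the optimal price, but positive at high prices, where the profit behaves like \(x e^{-x}\), whose second derivative \((x-2)e^{-x}\) is positive for \(x>2\).

```python
import numpy as np

def mnl_profit_single(x, v=1.0):
    """Profit x * d(x) of one product with MNL demand d(x) = v e^{-x} / (1 + v e^{-x});
    x is the scaled price and the unit cost is taken as zero (illustrative assumption)."""
    f = v * np.exp(-x)
    return x * f / (1.0 + f)

def second_difference(fun, x, h=1e-2):
    """Central finite-difference approximation of fun''(x)."""
    return (fun(x + h) - 2.0 * fun(x) + fun(x - h)) / h**2

grid = np.linspace(0.0, 10.0, 1001)
curvature = second_difference(mnl_profit_single, grid)  # changes sign along the grid
```

For \(v=1\) the maximizer satisfies \(x = 1 + e^{-x}\) (about 1.28), where the curvature is negative, while at \(x=6\) it is positive, so no concavity argument applies in the price space.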

Furthermore, combining nonlinear constraints with a non-quasi-concave objective function introduces additional complications. First, because the objective is not quasi-concave, even a linear inequality constraint in terms of prices could exclude the global maximum in the right panel of Fig. 1, and thus give rise to a local maximum on each of the ridges leading to the peak. Second, the feasible region of (P) is in general not convex. Figure 2 illustrates the constraints of problem (P) with data

The left panel shows the feasible region in terms of the prices, and the right panel shows the polyhedral feasible region in terms of the demands. Observe that the last two constraints are clearly non-convex in the space of prices. On the other hand, the first constraint happens to belong to the class of convex constraints characterized by Proposition 1.

C.2 Representing joint price constraints

This section shows how to incorporate certain joint price constraints into the formulations we have proposed. Under MNL demand models, it is natural to assume that the consumer’s utility (31) is equally sensitive to the price regardless of the alternative she considers. That is, \(\beta _{1,i} = \beta _{1,j}\). Then the constraint (4) can be expressed as

The assumption regarding the sensitivity to price is required so that the same scaling described in “Appendix B.1” is used to relate \(x_i\) and \(x_j\) with \(\hat{x}_i\) and \(\hat{x}_j\) of Eq. (31), respectively. This allows \(f_i\) to be replaced with \(f_j\) in the preceding equation. The resulting constraint is evidently linear in terms of the demands and is captured by the formulation (P). This transformation depends on the relationship between the attraction functions for different products and is thus specific to the MNL model. A similar transformation is possible for the linear attraction demand model with the analogous uniform price sensitivity assumption, \(\beta _i = \beta _j\) in (33). From (4), we then have

where the \(d_0(\varvec{x})\) terms can be substituted out using the simplex constraint (8).
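Constraint (4) is not reproduced in this section. Assuming, for illustration only, that it bounds a price difference, \(x_i - x_j \le \delta _{ij}\), the sketch below checks on random prices that under MNL demand with a common price sensitivity the constraint is equivalent to the linear market-share constraint \(v_j\theta _i - e^{-\delta _{ij}} v_i\theta _j \ge 0\). For the linear attraction model with \(\beta _i = \beta _j = \beta \) and prices below \(\overline{x}_i\), it is equivalent to \((\alpha _i - \alpha _j - \beta \delta _{ij})\theta _0 - \theta _i + \theta _j \le 0\), where \(\theta _0 = 1 - \sum _k \theta _k\). The helper names and `delta` are hypothetical.

```python
import numpy as np

def mnl_shares(x, v):
    """Market shares theta_i = v_i e^{-x_i} / (1 + sum_j v_j e^{-x_j}) at scaled prices x."""
    f = v * np.exp(-x)
    return f / (1.0 + f.sum())

def linear_shares(x, alpha, beta):
    """Shares under linear attractions alpha_i - beta * x_i (prices assumed below alpha_i / beta)."""
    f = alpha - beta * x
    return f / (1.0 + f.sum())

def mnl_gap_constraint(theta, v, i, j, delta):
    """x_i - x_j <= delta written linearly in the MNL shares (common price sensitivity)."""
    return v[j] * theta[i] - np.exp(-delta) * v[i] * theta[j] >= 0.0

def linear_gap_constraint(theta, alpha, beta, i, j, delta):
    """x_i - x_j <= delta written linearly in the shares, with theta_0 = 1 - sum(theta)."""
    theta0 = 1.0 - theta.sum()
    return (alpha[i] - alpha[j] - beta * delta) * theta0 - theta[i] + theta[j] <= 0.0
```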

C.3 Convexity of (COP) under common attraction demand models

Corollary 1

Under the linear, MNL and MCI attraction demand models, the objective of (COP) is a concave function and any local maximum of either (COP) or (P) is also a global maximum.

Proof

For each model, we verify the condition (12). For the MNL model (2), we have

Now consider the attractions (33) for the linear model. For \(x>\overline{x}_i\) (i.e., \(y < f_i(\overline{x}_i)\)) we have the MNL attraction function so the condition (12) holds as shown above. Elsewhere, when \(x \le \overline{x}_i\),

as desired. For the MCI attraction functions (32), we have the linear attraction function for \(x_i < \epsilon \), otherwise

where the inequality uses that \(\beta _i > 1\). So the condition (12) is also satisfied. \(\square \)
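For the MNL case the concavity can also be read off directly. Assuming zero costs, with \(g_i(y) = -\log (y/v_i)\) and \(\theta _0 = 1 - \sum _i \theta _i\), the objective \(\sum _i \theta _i g_i(\theta _i/\theta _0)\) has gradient \(\log v_k - \log \theta _k - 1 + \log \theta _0 - (1-\theta _0)/\theta _0\) and Hessian \(-\mathrm{diag}(1/\theta _i) - (1/\theta _0 + 1/\theta _0^2)\mathbf 1\mathbf 1^\top \), which is negative definite on the interior of the simplex. The sketch below implements these three quantities, which a Newton-type solver would use; the function names are illustrative.

```python
import numpy as np

def cop_objective_mnl(theta, v):
    """sum_i theta_i * g_i(theta_i / theta_0) with g_i(y) = -log(y / v_i), theta_0 = 1 - sum(theta)."""
    theta0 = 1.0 - theta.sum()
    return float(np.sum(theta * (np.log(v) - np.log(theta / theta0))))

def cop_gradient_mnl(theta, v):
    """Partial derivatives with theta_0 eliminated through the simplex constraint."""
    theta0 = 1.0 - theta.sum()
    return np.log(v) - np.log(theta) - 1.0 + np.log(theta0) - theta.sum() / theta0

def cop_hessian_mnl(theta, v):
    """-diag(1/theta_i) - (1/theta_0 + 1/theta_0**2) * ones((n, n)): negative definite."""
    theta0 = 1.0 - theta.sum()
    n = theta.size
    return -np.diag(1.0 / theta) - (1.0 / theta0 + 1.0 / theta0**2) * np.ones((n, n))
```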

Appendix D: The dual market share problem

Proposition 3

The dual of (COP) is given by (DCOP). For any \(\varvec{\lambda }\in \mathbb R ^{m}\) and \(\mu \in \mathbb R \), there exist optimal \(y_i^* > 0\), for \(i=1,\ldots ,n\), so that \(\phi _i(y_i^*,\varvec{\lambda },\mu )>0\) in each of the inner maximization problems that appear in the equality constraint of (DCOP). Furthermore, when condition (12) (or equivalently, condition (13)) is satisfied and \((\varvec{\lambda },\mu )\) is an optimal solution of (DCOP), a primal optimal solution \(\varvec{\theta }^*\) of (COP) is given by

Proof

For each \(k=1,\ldots , m^{\prime }\), let \(\lambda _k\) be the Lagrange multiplier associated with the \(k\)th constraint in (COP). Let \(\mu \) be the multiplier associated with the equality constraint. The Lagrangian is

Taking the supremum successively over the different variables, we obtain the dual function

where \(\phi _i(y,\varvec{\lambda },\mu )\) is defined as in (28). The value of \(\theta _0\) has no impact on the value of the inner suprema in (35), since the optimization is over the ratio \(\frac{\theta _i}{\theta _0}\) with the numerator free to take any positive value. Thus we may write the dual problem as

At optimality, the quantity in the inner parentheses must be non-positive, so we may write

The inequality constraint is tight at optimality, because \(\phi _i(y_i,\varvec{\lambda },\mu )\) are strictly decreasing in \(\mu \).

We now show that \(\phi _i(y_i,\varvec{\lambda },\mu )\) achieves a maximum at some \(y_i=y_i^*>0\), for any fixed \(\varvec{\lambda }\) and \(\mu \). For ease of notation, we fix \(i\) and drop the subscript. Let

where we define \(g(y) \triangleq a_i g_i(y)\) and \(\nu \triangleq \sum _{k=1}^{m^{\prime }} \lambda _k A_{ki} + \mu \). By Assumption 1, there exists a value \(\hat{y}>0\) for which \(g(\hat{y}) = \nu \), since the attraction \(f_i(\cdot )\) is defined everywhere on \(\mathbb R \) and \(g(\cdot )\) is its inverse. Moreover, \(\phi (\cdot )\) is strictly positive on the interval \((0,\hat{y})\) and strictly negative on \((\hat{y},\infty )\) since \(g(\cdot )\) is strictly decreasing. Also, by Assumption 1 (ii),

We consider the continuous extension of \(\phi \) with \(\phi (0)=0\), without loss of generality. Then, by Weierstrass’ Theorem, the continuous function \(\phi (\cdot )\) attains its maximum over the closed interval \([0,\hat{y}]\) at some point \(y_i^*\). We have \(0<y_i^* < \hat{y}\) and \(\phi (y_i^*)>0\), since \(\phi (0) = \phi (\hat{y}) = 0\) and \(\phi \) is strictly positive on the open interval \((0,\hat{y})\). Because \(\phi \) is negative on \((\hat{y},\infty )\), \(y_i^*\) is also a maximizer over all \(y>0\).

Suppose now that condition (12) (equivalently, condition (13)) holds. Then (COP) has a concave objective, a bounded polyhedral feasible set and a finite maximum (because the feasible set is bounded). Then the dual (DCOP) has an optimal solution and there is no duality gap. Consider now an optimal dual solution \((\varvec{\lambda }^*, \mu ^*)\) and corresponding maximizers \(y_1^*, \ldots , y_n^*\). Then (34) is a primal optimal solution, since it maximizes the Lagrangian \(L(\varvec{\theta }; \varvec{\lambda }, \mu )\) by definition of the dual: we have only made the change of variable \(y_i = \frac{\theta _i}{\theta _0}\). \(\square \)

D.1 The dual problem under MNL demand models

Proposition 4

The dual problem (DCOP) for the special case of MNL attraction functions (2) is given by (DMNL).

Proof

The inverse attraction functions for the MNL model (2) and their derivatives are \(g_i(y) = -\log \frac{y}{v_i}\), and \(g_i^{\prime }(y) = \frac{-1}{y}\), respectively. Then the first order necessary optimality condition for the \(i\)th inner maximization in (DCOP) is

The preceding line gives the unique maximizer since one exists by Proposition 3. Substituting the optimal value of \(y\) back into (28) yields that

which can in turn be substituted into (DCOP) to obtain (DMNL). The constraint may be relaxed to an inequality which is tight at optimality, since the right hand side is decreasing in \(\mu \). \(\square \)
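The closed form behind Proposition 4 can be checked numerically. This sketch assumes that (28) has the form \(\phi _i(y,\varvec{\lambda },\mu ) = y\,(g_i(y) - \nu _i)\) with \(\nu _i = \sum _k \lambda _k A_{ki} + \mu \), which matches the sign pattern used in the proof of Proposition 3 (taking the weight \(a_i\) there as 1). The first order condition \(-\log (y/v_i) - 1 - \nu _i = 0\) gives \(y_i^* = v_i e^{-1-\nu _i}\), the optimal value \(\phi _i(y_i^*) = y_i^*\), and the corresponding price \(x_i^* = g_i(y_i^*) = 1 + \nu _i\).

```python
import math

def phi_mnl(y, v, nu):
    """Assumed inner objective phi(y) = y * (g(y) - nu) with MNL inverse attraction g(y) = -log(y / v)."""
    return y * (-math.log(y / v) - nu)

def mnl_inner_max(v, nu):
    """Closed-form solution of max_{y > 0} phi(y): returns (y*, phi(y*), price x* = g(y*))."""
    y_star = v * math.exp(-1.0 - nu)   # root of the first order condition
    return y_star, y_star, 1.0 + nu    # phi(y*) = y* * (1 + nu - nu) = y*
```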

D.2 Solving the dual problem in general

More generally, there may not exist a closed form solution for the values \(\phi _i(y_i^*,\varvec{\lambda },\mu )\). Then the dual problem may not reduce to a tractable optimization problem. If there is no closed form inverse for the attraction functions, not even the primal market share problem (COP) can be solved directly, even if it has a concave objective function. This is notably the case for the demand models discussed in Sect. 4 (although we have shown that the primal objective function’s gradient and Hessian can nevertheless be computed efficiently).

In this section, we present a column generation algorithm to solve the dual which avoids both of these difficulties. It is more general than solving either of the formulations (COP) and (DCOP) directly, since it does not require the convexity of the primal objective function assumed in Theorem 1, and it does not require a closed form solution for the inner maximizations of the dual problem.

In the dual (DCOP), fixing the variables \(\varvec{\lambda }\) uniquely determines the value of the remaining variable \(\mu \), because of the equality constraint. Notice that the right hand side of the constraint is decreasing in \(\mu \), because all functions \(\phi _i(y,\varvec{\lambda },\mu )\) are decreasing in \(\mu \) for any value of \(y\). Furthermore, any feasible \(\mu \) is positive since the maxima \(\phi _i(y_i^*,\varvec{\lambda },\mu )\) are positive by Proposition 3. We define \(\mu (\varvec{\lambda })\) as the unique root of equation

Its value may be computed by a line search which computes the maximizers \(y_i^*\) at each evaluation. When these one-dimensional maximizations are tractable, it is possible to evaluate the dual objective efficiently, and the Dantzig-Wolfe column generation scheme can be applied to solve (COP). (See, for instance, [3] for details.) Specifically, we propose the following algorithm:

1. Initialization: Set \(L := 1\) and the lower and upper bounds \(LB=-\infty \) and \(UB = \infty \), given an initial feasible market share vector \(\varvec{\theta }^0\).

2. Master Problem: Given market share vectors \(\varvec{\theta }^0,\varvec{\theta }^1,\ldots ,\varvec{\theta }^{L-1}\), solve the following linear program over the variables \(\xi ^0, \xi ^1, \ldots , \xi ^{L-1}\):

$$\begin{aligned} \gamma ^L = \max \quad & \sum _{\ell =0}^{L-1} \xi ^\ell \varPi ( \varvec{\theta }^\ell ) \\ \text{ s.t. } \quad & \sum _{i=1}^n A_{ki} \left( \sum _{\ell =0}^{L-1} \xi ^\ell \theta _i^\ell \right) \le u_k, \quad k=1, \ldots , m^{\prime }, \\ & \sum _{\ell =0}^{L-1} \xi ^\ell = 1, \quad \xi ^\ell \ge 0, \quad \ell =0,\ldots ,L-1. \end{aligned} \qquad \text{(LP)}$$

Let \(\varvec{\lambda }^L\) be the vector of optimal dual variables associated with the inequality constraints. The master problem solves (COP) with the feasible region restricted to the convex hull of the market share vectors \(\varvec{\theta }^0,\varvec{\theta }^1,\ldots ,\varvec{\theta }^{L-1}\), and with the objective replaced by the corresponding convex combination of the values \(\varPi (\varvec{\theta }^\ell )\). If the optimal value \(\gamma ^L\) of (LP) exceeds the lower bound \(LB\), update \(LB:=\gamma ^L\).

3. Dual Function Evaluation: Compute the root \(\mu (\varvec{\lambda }^L)\) of the dual equality constraint \(F_{\varvec{\lambda }^L}( \mu )\) shown in (37), and let \(\varvec{\theta }^L\) be the primal solution (34) corresponding to the maximizers \(\{y_i^*, i=1,\ldots ,n\}\). If the dual objective value \(L( \varvec{\theta }^L; \varvec{\lambda }^L, \mu (\varvec{\lambda }^L) )\) is less than the upper bound, set \(UB:=L( \varvec{\theta }^L; \varvec{\lambda }^L, \mu (\varvec{\lambda }^L) )\).

4. Termination: If \((UB-LB)\) is below a pre-specified tolerance, stop. Otherwise, let \(L := L+1\) and go to Step 2.
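The four steps can be sketched in Python for the MNL case, where the inner maximizations have the closed form \(y_i^* = v_i e^{-1-\nu _i}\). This is a minimal sketch under stated assumptions, not the authors' implementation: zero costs; \(\phi _i(y) = y\,(g_i(y) - \nu _i)\) with \(\nu _i = \sum _k \lambda _k A_{ki} + \mu \); the primal recovery \(\theta _0 = 1/(1+\sum _i y_i^*)\), \(\theta _i = y_i^*\theta _0\) for (34); and SciPy's HiGHS-based `linprog` for the master problem, whose `ineqlin.marginals` (sensitivities of the minimized objective) are negated to obtain \(\varvec{\lambda }^L \ge 0\). When the dual equality constraint holds, the dual objective reduces to \(\varvec{\lambda }^\top u + \mu \).

```python
import numpy as np
from scipy.optimize import linprog

def profit(theta, v):
    """(COP) objective for MNL attractions and zero costs: sum_i theta_i * (log v_i - log(theta_i / theta_0))."""
    theta0 = 1.0 - theta.sum()
    return float(np.sum(theta * (np.log(v) - np.log(theta / theta0))))

def dual_evaluation(lam, A, v, tol=1e-12):
    """Step 3 for MNL: solve mu = sum_i y_i*(mu) with y_i* = v_i exp(-1 - nu_i) and
    nu_i = (A^T lam)_i + mu by bisection, then recover the market shares."""
    c = v * np.exp(-1.0 - A.T @ lam)      # y_i* = c_i * exp(-mu)

    def F(mu):                            # strictly decreasing in mu, F(0) > 0
        return c.sum() * np.exp(-mu) - mu

    lo, hi = 0.0, 1.0
    while F(hi) > 0.0:
        lo, hi = hi, 2.0 * hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if F(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    mu = 0.5 * (lo + hi)
    y = c * np.exp(-mu)
    theta = y / (1.0 + y.sum())           # assumed (34): theta_0 = 1/(1 + sum y), theta_i = y_i theta_0
    return mu, theta

def column_generation(A, u, v, theta_init, tol=1e-6, max_iter=200):
    """Dantzig-Wolfe loop of Steps 1-4; returns (LB, UB, primal market shares)."""
    columns = [np.asarray(theta_init, dtype=float)]   # Step 1: theta^0 must be feasible and positive
    LB, UB = -np.inf, np.inf
    for _ in range(max_iter):
        Theta = np.column_stack(columns)               # n x L matrix of columns theta^l
        values = np.array([profit(t, v) for t in columns])
        res = linprog(-values, A_ub=A @ Theta, b_ub=u,  # Step 2: master LP as a minimization
                      A_eq=np.ones((1, len(columns))), b_eq=[1.0],
                      bounds=(0, None), method="highs")
        LB = max(LB, -res.fun)
        lam = -res.ineqlin.marginals                   # shadow prices, >= 0 for the max problem
        mu, theta_new = dual_evaluation(lam, A, v)     # Step 3
        UB = min(UB, float(lam @ u) + mu)              # dual objective when the constraint is tight
        if UB - LB < tol:                              # Step 4
            break
        columns.append(theta_new)
    return LB, UB, Theta @ res.x
```

With a non-binding share constraint, the first dual evaluation already returns the unconstrained optimum, so the bounds meet after two master problems; with a binding constraint the gap closes more gradually, as is typical of column generation.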

This algorithm requires at least one initial feasible solution \(\varvec{\theta }^0\), which can be found by solving any linear program with the constraints of (COP). It does not require (COP) to have a concave objective, since it computes an optimal solution to its dual, which is always a convex minimization problem. Moreover, it can be used even if there is no closed form for the inverse attraction functions \(g_i(\cdot )\). Indeed, we can equivalently represent the functions \(\phi _i(y,\varvec{\lambda },\mu )\) in terms of the original attraction functions \(f_i(x_i)\), as

Then the maximization can be performed over the price \(x_i\), and the optimal price for given dual variables \((\varvec{\lambda },\mu )\) is

The maximum is guaranteed to exist since \(y_i^*\) exists by Proposition 3. It can be computed via a line search if it is the unique local maximum. The unimodality of \(\phi _i\) (and equivalently, of \(\psi _i\)) is guaranteed, for instance, by the assumption of Theorem 1, or more generally, by the assumption of Proposition 5 below. In the column generation algorithm, the objective of (LP) depends on the prices \(x_i = g_i \left( {\theta _i^0}/{\theta _0^0} \right) \) corresponding to the initial feasible point. Because they must satisfy \(f_i(x_i) = {\theta _i^0}/{\theta _0^0} \) and \(f_i(x_i)\) is monotone, they can also be found using line search procedures in practice. For each new point \(\varvec{\theta }^L\), corresponding prices \(x_i^*\) are computed in the maximizations of \(\psi _i\) over \(x_i\).
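For attraction functions without a closed-form inverse, the line searches mentioned above can be sketched with simple bracketing methods. The code below is a sketch, not the paper's implementation: it uses bisection to solve \(f_i(x_i) = y\) for a strictly decreasing attraction; golden-section search (a simpler stand-in for the Brent-based routine used in the experiments) to maximize \(\psi _i(x) = f_i(x)\,(x - \nu _i)\), assumed unimodal with its maximizer in a user-chosen window \([\nu _i, \nu _i + \text{span}]\); and bisection again for the root \(\mu (\varvec{\lambda })\), using that the sum of the maxima minus \(\mu \) is decreasing in \(\mu \). The expression for \(\psi _i\) assumes \(\phi _i(y) = y\,(g_i(y) - \nu _i)\) evaluated at \(y = f_i(x)\); all function names and the `span` parameter are hypothetical.

```python
import math

def inverse_attraction(f, y, lo=-1.0, hi=1.0, tol=1e-12):
    """Solve f(x) = y by bisection, assuming f is strictly decreasing with
    f(x) -> infinity as x -> -infinity and f(x) -> 0 as x -> infinity."""
    if y <= 0.0:
        raise ValueError("attraction values must be positive")
    step = hi - lo
    while f(lo) < y:                 # move left until f(lo) >= y
        lo -= step
        step *= 2.0
    step = hi - lo
    while f(hi) > y:                 # move right until f(hi) <= y
        hi += step
        step *= 2.0
    while hi - lo > tol * max(1.0, abs(lo), abs(hi)):
        mid = 0.5 * (lo + hi)
        if f(mid) > y:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def golden_max(fun, a, b, tol=1e-10):
    """Golden-section search for the maximizer of a unimodal function on [a, b]."""
    r = (math.sqrt(5.0) - 1.0) / 2.0
    c, d = b - r * (b - a), a + r * (b - a)
    fc, fd = fun(c), fun(d)
    while b - a > tol:
        if fc > fd:                  # maximizer lies in [a, d]
            b, d, fd = d, c, fc
            c = b - r * (b - a)
            fc = fun(c)
        else:                        # maximizer lies in [c, b]
            a, c, fc = c, d, fd
            d = a + r * (b - a)
            fd = fun(d)
    x = 0.5 * (a + b)
    return x, fun(x)

def mu_of_lambda(attractions, shifts, span=50.0, tol=1e-10):
    """Root mu of F(mu) = sum_i max_x psi_i(x) - mu with psi_i(x) = f_i(x) * (x - nu_i),
    nu_i = shifts[i] + mu and shifts[i] = sum_k lambda_k A_ki (assumed forms)."""
    def F(mu):
        total = 0.0
        for f, s in zip(attractions, shifts):
            nu = s + mu
            _, best = golden_max(lambda x: f(x) * (x - nu), nu, nu + span)
            total += best
        return total - mu
    lo, hi = 0.0, 1.0
    while F(hi) > 0.0:               # F is decreasing in mu; double until the root is bracketed
        lo, hi = hi, 2.0 * hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if F(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

The argmax returned by `golden_max` at the final \(\mu \) is the price \(x_i^*\) for the given duals; price bounds, if enforced here rather than dualized, would simply shrink the search window.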

Finally, we remark that it is not necessary to dualize the price bounds represented by the constraints \(k = (m+1), \ldots , m^{\prime }\) defined in (9). These constraints may be omitted if the price bounds \( \underline{x}_i \le x_i \le \overline{x}_i\) are instead enforced when computing the maximizers \(x_i^*\) (or, equivalently, the bounds \( f_i(\underline{x}_i) \ge y_i \ge f_i(\overline{x}_i)\) are enforced when computing \(y_i^*\)). This modification reduces the number of constraints from \(m^{\prime }=(m+2n)\) to \(m\).

The algorithm just described may also be viewed as a generalization of the procedure presented by Gallego and Stefanescu [14] to general attraction demand models and arbitrary linear inequality constraints, although they arrive at their method by taking the dual of the price-based formulation (P) for the special case of MNL demand. Because convergence of column generation algorithms is often slow near the optimum, we expect that directly solving (COP) or (DCOP) will be more efficient when it is possible. This is the case, for example, with the MNL demand models considered by Gallego and Stefanescu [14]. However, the column generation algorithm applies to demand models for which the other formulations cannot be solved. It can provide an upper bound on the optimal profit when the objective function of (COP) is not concave, and can often compute an approximate solution quickly (accurate to within a few percent in relatively few iterations, as shown in our experiments).

We end this section with the following proposition providing a sufficient condition on the inverse attractions guaranteeing unique maximizers \(y_i^*\). It requires that the inverse attraction functions are “sufficiently concave” (though not necessarily concave) up until some \(\bar{y}\), and then “sufficiently convex” afterward. Omitting the ratio \(\frac{x}{y}\), conditions (39) and (40) below correspond to strict concavity and strict convexity, respectively. However, the first requirement is weaker, and the second is stronger, because this ratio is less than one. (Recall that \(g_i^{\prime }(x)<0,\forall x\) since \(f_i\) and \(g_i\) are decreasing.) We note that the proposition allows \(\bar{y} = 0\) or \(\bar{y} = \infty \), in which case one of the assumptions holds trivially.

Proposition 5

If for each \(i=1,2,\ldots ,n\), there exists a point \(\bar{y}_i \in [ 0,\infty ]\) such that

then the maximizers \(\left\{ y_i^*, i=1,\ldots ,n\right\} \) are unique for any values of \(\varvec{\lambda }\) and \(\mu \).

Proof

We fix \(i\) and use the simplified notation defined in (36). From Proposition 3, the maximizer \(y_i^*>0\) exists, and it must be a stationary point of \(\phi \). We will show that the rightmost stationary point to the left of \(\bar{y}_i\) maximizes \(\phi (y)\) over \((0,\bar{y}_i]\), and that the leftmost stationary point to the right of \(\bar{y}_i\) maximizes \(\phi (y)\) over \([\bar{y}_i,\infty )\), if they exist. At least one of them must exist since we know a maximum is attained. If both exist, we deduce that there is an additional stationary point between them by applying the mean value theorem. This contradicts the fact that they are the rightmost and leftmost stationary points on their respective intervals, proving uniqueness of the maximizer.

Suppose \(y \in (0,\bar{y}_i]\) is a stationary point of \(\phi (\cdot )\), i.e.

We will show that for any other point \(x \in (0,y)\), whether or not it is a stationary point,

where we used (41). Having fixed \(y\), we denote the left hand side as a function of \(x\) by

and note that \(\lim _{x \uparrow y} h(x) = g^{\prime }(y)\). Thus, to prove the inequality, it is sufficient to show that the continuous function \(h(x)\) is decreasing in \(x\) on the interval \((0,y)\). We consider the derivative with respect to \(x\)

The assumption (39) implies that the above derivative is negative, where we have substituted \(g_i(\cdot )\) back in, and thus \(h(x)\) is decreasing.

A similar argument shows the analogous result for stationary points to the right of \(\bar{y}_i\). Take instead \(x \in (\bar{y}_i,\infty )\) to be the leftmost stationary point in the half-line, and let \(y \in (x,\infty )\) be some other stationary point. We still have that \(x<y\), but now \(\phi (x) > \phi (y) \Leftrightarrow h(x) < g^{\prime }(y)\), because \(h(x)\) is increasing in \(x\). This is implied by the assumption (40), which shows that the derivative in (42) is now positive. \(\square \)

D.3 Performance of the column generation algorithm

Table 3 shows the accuracy achieved and the running time in seconds after a fixed number of iterations of the column generation algorithm, when applied to randomly generated problem instances with four overlapping customer segments, using the approximation of Sect. 4. Only the most recently active 512 columns are retained in the master problem (LP). We have no closed form for the inner maximizers \(y_i^*\) and instead use a numerical minimization algorithm based on Brent’s method to compute them. Brent’s method (see [7]) is also used to solve (37) numerically. The algorithm was halted if six significant digits of accuracy were achieved.

As is often the case for column generation algorithms, we observe fast convergence early on. After 500 iterations, most of the instances are solved to within 10 % of the optimal objective value. Quadrupling the number of iterations further reduces the duality gap to a few percentage points in all but the largest instances. The solution times compare favorably with the price formulation (P) and the dual formulation (DCOP) for the single-segment case, but are significantly slower than for the market-share formulation (COP). Of course, the latter formulation requires the custom objective evaluation code described in the preceding section when multiple segments are being approximated. We conclude that the column generation method offers a viable alternative when the other formulations cannot be applied easily, and only limited accuracy is needed.

Cite this article

Keller, P.W., Levi, R. & Perakis, G. Efficient formulations for pricing under attraction demand models. Math. Program. 145, 223–261 (2014). https://doi.org/10.1007/s10107-013-0646-z

DOI: https://doi.org/10.1007/s10107-013-0646-z