Abstract

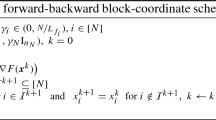

In this paper, we propose a class of block coordinate proximal gradient (BCPG) methods for solving large-scale nonsmooth separable optimization problems. The proposed BCPG methods are based on Bregman functions, which may vary at each iteration. These methods include many well-known optimization methods, such as the quasi-Newton method, the block coordinate descent method, and the proximal point method. For the proposed methods, we establish their global convergence properties when the blocks are selected by the Gauss–Seidel rule. Further, under some additional appropriate assumptions, we show that the convergence rate of the proposed methods is R-linear. We also present numerical results for a new BCPG method with variable kernels for a convex problem with separable simplex constraints.
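To give a rough feel for the kind of iteration the abstract refers to, the following Python sketch runs a block coordinate proximal gradient loop with a Bregman kernel on a problem with separable simplex constraints. It is an illustration under stated assumptions, not the authors' algorithm: the kernel is the fixed negative-entropy function (the paper allows the kernel to vary per iteration), the objective is an assumed quadratic f(x) = 0.5 xᵀQx − qᵀx, the step size is a fixed constant, and all function names and data are hypothetical. With the entropy kernel, the block subproblem on a simplex has a closed-form multiplicative update, which is why it is used here.

```python
import math

def grad_block(Q, q, x, block):
    """Partial gradient of the assumed objective 0.5 x^T Q x - q^T x on `block`."""
    n = len(x)
    return [sum(Q[i][j] * x[j] for j in range(n)) - q[i] for i in block]

def entropy_block_step(x, block, g, step):
    """Bregman proximal step with the entropy kernel on one simplex block.

    Closed form: x_i <- x_i * exp(-step * g_i), then renormalize the block.
    """
    shift = min(g)  # stabilizes exp(); the common factor cancels on renormalizing
    w = [x[i] * math.exp(-step * (gi - shift)) for i, gi in zip(block, g)]
    total = sum(w)
    for i, wi in zip(block, w):
        x[i] = wi / total

def objective(Q, q, x):
    n = len(x)
    quad = sum(x[i] * Q[i][j] * x[j] for i in range(n) for j in range(n))
    return 0.5 * quad - sum(q[i] * x[i] for i in range(n))

def bcpg_entropy(Q, q, blocks, step=0.1, sweeps=200):
    """Cyclic (Gauss-Seidel) sweeps over the blocks, one entropy step per block."""
    x = [0.0] * len(q)
    for block in blocks:  # start at the center of each simplex
        for i in block:
            x[i] = 1.0 / len(block)
    for _ in range(sweeps):
        for block in blocks:
            g = grad_block(Q, q, x, block)  # uses the latest values of all blocks
            entropy_block_step(x, block, g, step)
    return x

# Hypothetical data: two blocks of two variables, each constrained to a simplex.
Q = [[2.0, 0.0, 1.0, 0.0],
     [0.0, 1.0, 0.0, 0.0],
     [1.0, 0.0, 2.0, 0.0],
     [0.0, 0.0, 0.0, 1.0]]
q = [1.0, 0.0, 0.0, 1.0]
blocks = [[0, 1], [2, 3]]
x_final = bcpg_entropy(Q, q, blocks)
```

The step size 0.1 is chosen so that its product with the largest entry of Q stays below 1, which is a standard sufficient condition for an entropy-kernel step to decrease a quadratic; a different problem would need a different step or a line search.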

Acknowledgments

We would like to thank the associate editor and the two anonymous reviewers for their constructive comments, which improved this paper significantly. In particular, they encouraged us to present the inexact block coordinate descent method in Sect. 6 and to propose a new method for the convex problem with separable simplex constraints in Sect. 7.

Cite this article

Hua, X., Yamashita, N. Block coordinate proximal gradient methods with variable Bregman functions for nonsmooth separable optimization. Math. Program. 160, 1–32 (2016). https://doi.org/10.1007/s10107-015-0969-z

DOI: https://doi.org/10.1007/s10107-015-0969-z