Abstract

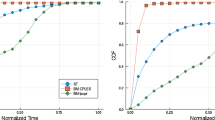

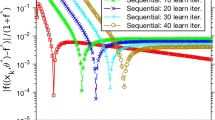

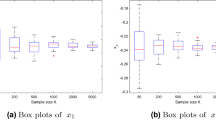

Traditionally, most stochastic approximation (SA) schemes for stochastic variational inequality (SVI) problems have required the underlying mapping to be either strongly monotone or monotone and Lipschitz continuous. In contrast, we consider SVIs with merely monotone and non-Lipschitzian maps. We develop a regularized smoothed SA (RSSA) scheme wherein the stepsize, smoothing, and regularization parameters are reduced after every iteration at a prescribed rate. Under suitable assumptions on these sequences, we show that the algorithm generates iterates that converge almost surely to the least-norm solution, extending the results in Koshal et al. (IEEE Trans Autom Control 58(3):594–609, 2013) to the non-Lipschitzian regime. Additionally, we provide rate estimates that relate the iterates to their counterparts derived from a smoothed Tikhonov trajectory associated with a deterministic problem. To derive non-asymptotic rate statements, we develop a variant of the RSSA scheme, denoted by aRSSA\(_r\), which employs weighted iterate-averaging parameterized by a scalar \(r\), where \(r = 1\) recovers the standard averaging scheme. The main contributions are threefold: (i) when \(r<1\) and the parameter sequences are chosen appropriately, we show that the averaged sequence converges almost surely to the least-norm solution and that a suitably defined gap function diminishes at an approximate rate \(\mathcal{O}(1/\sqrt[6]{k})\) after \(k\) steps; (ii) when \(r<1\) and smoothing and regularization are suppressed, the gap function admits the rate \(\mathcal{O}(1/\sqrt{k})\), improving on the rate \(\mathcal{O}(\ln(k)/\sqrt{k})\) obtained under standard averaging; and (iii) we develop a window-based variant of this scheme that also attains the optimal rate for \(r < 1\).
Notably, we prove that the scheme with \(r < 1\) is superior to its counterpart with \(r=1\) in terms of the constant factor of the error bound when the averaging window is sufficiently large. We demonstrate the performance of the developed schemes on a stochastic Nash–Cournot game with merely monotone and non-Lipschitzian maps.
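The following is a minimal sketch of an RSSA-style update with aRSSA\(_r\)-style weighted averaging, for illustration only. Several pieces are our assumptions rather than the paper's specification: the update \(x_{k+1} = \Pi_X(x_k - \gamma_k(F(x_k + z_k, \xi_k) + \eta_k x_k))\) with \(z_k\) drawn uniformly from the cube \([-\epsilon_k, \epsilon_k]^n\); averaging weights \(\gamma_t^r\) (so \(r = 1\) gives stepsize-weighted averaging); the hypothetical `window_frac` argument as a stand-in for the window-based variant; and the polynomial decay exponents, which are illustrative and not the conditions derived in the paper. The toy map is a discontinuous selection of the subdifferential of \(\max(0, 1 - x_1 - x_2)\) on the box \([-2, 2]^2\). It is monotone but not Lipschitz, its solution set is the part of the box with \(x_1 + x_2 \ge 1\), and its least-norm solution is \((0.5, 0.5)\).

```python
import random


def project_box(x, lo=-2.0, hi=2.0):
    """Euclidean projection onto the box [lo, hi]^n (componentwise clipping)."""
    return [min(max(xi, lo), hi) for xi in x]


def toy_map(x):
    """Hypothetical monotone, discontinuous (hence non-Lipschitz) map on R^2:
    a selection of the subdifferential of f(x) = max(0, 1 - x1 - x2)."""
    return [-1.0, -1.0] if x[0] + x[1] < 1.0 else [0.0, 0.0]


def rssa(x0, iters=20000, r=0.5, gamma0=0.5, eta0=1.0, eps0=0.5,
         a=0.75, b=0.2, c=0.2, sigma=0.1, window_frac=0.0, seed=0):
    """Regularized smoothed SA with gamma_k^r-weighted averaging (a sketch).

    Returns (last iterate, weighted average of iterates whose index is at
    least window_frac * iters). All defaults are illustrative assumptions.
    """
    rng = random.Random(seed)
    x = list(x0)
    start = int(window_frac * iters)  # first index included in the average
    num = [0.0] * len(x)
    den = 0.0
    for k in range(iters):
        gamma = gamma0 / (k + 1) ** a  # stepsize
        eta = eta0 / (k + 1) ** b      # Tikhonov regularization parameter
        eps = eps0 / (k + 1) ** c      # smoothing radius
        if k >= start:
            w = gamma ** r             # averaging weight gamma_k^r
            num = [ni + w * xi for ni, xi in zip(num, x)]
            den += w
        # Local randomized smoothing: evaluate the map at a perturbed point.
        z = [rng.uniform(-eps, eps) for _ in x]
        fx = toy_map([xi + zi for xi, zi in zip(x, z)])
        # Stochastic sample F(x + z, xi): map value plus Gaussian noise.
        sample = [fi + rng.gauss(0.0, sigma) for fi in fx]
        # Regularized projected step.
        x = project_box([xi - gamma * (gi + eta * xi)
                         for xi, gi in zip(x, sample)])
    return x, [ni / den for ni in num]


if __name__ == "__main__":
    last, avg = rssa([1.5, -1.0])
    print("last iterate:", last)
    print("weighted average (r = 0.5):", avg)
```

Both outputs should land near \((0.5, 0.5)\), typically slightly above it along the diagonal, because the smoothing radius and regularization parameter are still positive after finitely many steps. The regularization term \(\eta_k x_k\) is what steers the iterates toward the least-norm point of the solution set rather than an arbitrary solution.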

Notes

In [38], we considered a convex nondifferentiable optimization problem in which the smoothing was effectively applied to the subdifferential set of the objective function. Part of that proof applies here.
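As a one-dimensional illustration of smoothing applied to a subdifferential (our own example, not taken from [38]): for \(f(x) = |x|\), averaging any subgradient selection over \(z\) uniform on \([-\epsilon, \epsilon]\) gives \(\mathbb{E}[\operatorname{sign}(x+z)] = x/\epsilon\) for \(|x| < \epsilon\) and \(\operatorname{sign}(x)\) otherwise, a map that is Lipschitz with constant \(1/\epsilon\) even though the unsmoothed selection is discontinuous at 0. The sketch below compares this closed form with a Monte Carlo estimate; the function names are hypothetical.

```python
import random


def smoothed_subgradient_abs(x, eps):
    """Closed form of E[s(x + z)] for z ~ Uniform[-eps, eps], where s is any
    selection of the subdifferential of |.| (the value at 0 has probability 0)."""
    if x >= eps:
        return 1.0
    if x <= -eps:
        return -1.0
    return x / eps  # linear ramp, Lipschitz with constant 1/eps


def monte_carlo_estimate(x, eps, n=200000, seed=1):
    """Sample average of sign(x + z) over n uniform perturbations z."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        y = x + rng.uniform(-eps, eps)
        total += 1.0 if y > 0 else (-1.0 if y < 0 else 0.0)
    return total / n


if __name__ == "__main__":
    for x in (-0.3, 0.05, 0.2):
        print(x, smoothed_subgradient_abs(x, 0.1), monte_carlo_estimate(x, 0.1))
```

Shrinking \(\epsilon\) recovers the discontinuous subgradient but inflates the Lipschitz constant \(1/\epsilon\), which is why the smoothing parameter is driven to zero gradually rather than fixed.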

References

Bertsekas, D.P.: Stochastic optimization problems with nondifferentiable cost functionals. J. Optim. Theory Appl. 12(2), 218–231 (1973)

Birge, J.R., Louveaux, F.: Introduction to Stochastic Programming. Springer Series in Operations Research. Springer, Berlin (1997)

Cicek, D., Broadie, M., Zeevi, A.: General bounds and finite-time performance improvement for the Kiefer–Wolfowitz stochastic approximation algorithm. Oper. Res. 59, 1211–1224 (2011)

Duchi, J.C., Bartlett, P.L., Wainwright, M.J.: Randomized smoothing for stochastic optimization. SIAM J. Optim. 22(2), 674–701 (2012)

Ermoliev, Y.M.: Stochastic quasigradient methods and their application to system optimization. Stochastics 9, 1–36 (1983)

Facchinei, F., Pang, J.-S.: Finite-Dimensional Variational Inequalities and Complementarity Problems. Vols. I, II, Springer Series in Operations Research. Springer, New York (2003)

Ghadimi, S., Lan, G.: Optimal stochastic approximation algorithms for strongly convex stochastic composite optimization, part I: a generic algorithmic framework. SIAM J. Optim. 22(4), 1469–1492 (2012)

Golshtein, E.G., Tretyakov, N.V.: Modified Lagrangians and Monotone Maps in Optimization. Wiley-Interscience Series in Discrete Mathematics and Optimization. Wiley, New York (1996). Translated from the 1989 Russian original by Tretyakov

Jiang, H., Xu, H.: Stochastic approximation approaches to the stochastic variational inequality problem. IEEE Trans. Autom. Control 53(6), 1462–1475 (2008)

Juditsky, A., Nemirovski, A., Tauvel, C.: Solving variational inequalities with stochastic mirror-prox algorithm. Stoch. Syst. 1(1), 17–58 (2011)

Kannan, A., Shanbhag, U.V.: Distributed computation of equilibria in monotone Nash games via iterative regularization techniques. SIAM J. Optim. 22(4), 1177–1205 (2012)

Kannan, A., Shanbhag, U.V., Kim, H.M.: Addressing supply-side risk in uncertain power markets: stochastic Nash models, scalable algorithms and error analysis. Optim. Methods Softw. 28(5), 1095–1138 (2013)

Knopp, K.: Theory and Applications of Infinite Series. Blackie & Son Ltd., Glasgow, Great Britain (1951)

Koshal, J., Nedić, A., Shanbhag, U.V.: Regularized iterative stochastic approximation methods for variational inequality problems. IEEE Trans. Autom. Control 58(3), 594–609 (2013)

Kushner, H.J., Yin, G.G.: Stochastic Approximation and Recursive Algorithms and Applications. Springer, New York (2003)

Lakshmanan, H., Farias, D.: Decentralized resource allocation in dynamic networks of agents. SIAM J. Optim. 19(2), 911–940 (2008)

Larsson, T., Patriksson, M.: A class of gap functions for variational inequalities. Math. Program. 64, 53–79 (1994)

Lu, S.: Symmetric confidence regions and confidence intervals for normal map formulations of stochastic variational inequalities. SIAM J. Optim. 24(3), 1458–1484 (2014)

Lu, S., Budhiraja, A.: Confidence regions for stochastic variational inequalities. Math. Oper. Res. 38(3), 545–568 (2013)

Marcotte, P., Zhu, D.: Weak sharp solutions of variational inequalities. SIAM J. Optim. 9(1), 179–189 (1998)

Mayne, D.Q., Polak, E.: Nondifferential optimization via adaptive smoothing. J. Optim. Theory Appl. 43(4), 601–613 (1984)

Metzler, C., Hobbs, B.F., Pang, J.-S.: Nash–Cournot equilibria in power markets on a linearized DC network with arbitrage: formulations and properties. Netw. Spatial Econ. 3(2), 123–150 (2003)

Nedić, A., Lee, S.: On stochastic subgradient mirror-descent algorithm with weighted averaging. SIAM J. Optim. 24(1), 84–107 (2014)

Nemirovski, A., Juditsky, A., Lan, G., Shapiro, A.: Robust stochastic approximation approach to stochastic programming. SIAM J. Optim. 19(4), 1574–1609 (2009)

Norkin, V.I.: The analysis and optimization of probability functions. Technical Report WP-93-6, International Institute for Applied Systems Analysis (1993)

Polyak, B.T.: Introduction to Optimization. Optimization Software Inc, New York (1987)

Polyak, B.T., Juditsky, A.B.: Acceleration of stochastic approximation by averaging. SIAM J. Control Optim. 30(4), 838–855 (1992)

Ravat, U., Shanbhag, U.V.: On the characterization of solution sets in smooth and nonsmooth stochastic convex Nash games. SIAM J. Optim. 21(3), 1046–1081 (2011)

Robbins, H., Monro, S.: A stochastic approximation method. Ann. Math. Stat. 22, 400–407 (1951)

Rockafellar, R.T., Wets, R.J.-B.: Variational Analysis. Springer, Berlin (1998)

Shapiro, A.: Monte Carlo sampling methods. In: Handbooks in Operations Research and Management Science, vol. 10. Elsevier Science, Amsterdam (2003)

Shapiro, A., Dentcheva, D., Ruszczynski, A.: Lectures on Stochastic Programming: Modeling and Theory. Society for Industrial and Applied Mathematics and Mathematical Programming Society, Philadelphia (2009)

Steklov, V.A.: Sur les expressions asymptotiques de certaines fonctions définies par les équations différentielles du second ordre et leurs applications au problème du développement d’une fonction arbitraire en séries procédant suivant les diverses fonctions. Commun. Charkov Math. Soc. 2(10), 97–199 (1907)

Xu, H.: Adaptive smoothing method, deterministically computable generalized Jacobians, and the Newton method. J. Optim. Theory Appl. 109(1), 215–224 (2001)

Xu, H.: Sample average approximation methods for a class of stochastic variational inequality problems. Asia-Pacific J. Oper. Res. 27(1), 103–119 (2010)

Yousefian, F., Nedić, A., Shanbhag, U.V.: On smoothing, regularization, and averaging in stochastic approximation methods for stochastic variational inequalities. arXiv:1411.0209v2

Yousefian, F., Nedić, A., Shanbhag, U.V.: Self-tuned stochastic approximation schemes for non-Lipschitzian stochastic multi-user optimization and Nash games. IEEE Trans. Autom. Control 61(7), 1753–1766 (2016). doi:10.1109/TAC.2015.2478124

Yousefian, F., Nedić, A., Shanbhag, U.V.: On stochastic gradient and subgradient methods with adaptive steplength sequences. Automatica 48(1), 56–67 (2012). An extended version is available at arXiv:1105.4549

Yousefian, F., Nedić, A., Shanbhag, U.V.: A regularized smoothing stochastic approximation (RSSA) algorithm for stochastic variational inequality problems. In: Proceedings of the 2013 Winter Simulation Conference, pp. 933–944 (2013)

Additional information

Nedić and Shanbhag gratefully acknowledge the support of the NSF through awards CMMI-0948905 ARRA (Nedić and Shanbhag) and CMMI-1246887 (Shanbhag). Part of this paper has appeared in [39].

About this article

Cite this article

Yousefian, F., Nedić, A. & Shanbhag, U.V. On smoothing, regularization, and averaging in stochastic approximation methods for stochastic variational inequality problems. Math. Program. 165, 391–431 (2017). https://doi.org/10.1007/s10107-017-1175-y

DOI: https://doi.org/10.1007/s10107-017-1175-y