Abstract

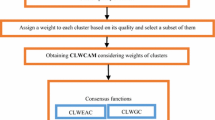

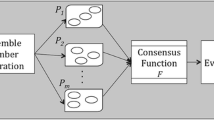

Ensemble clustering has attracted increasing attention in recent years. Its goal is to combine multiple base clusterings into a single consensus clustering of increased quality. Most existing ensemble clustering methods treat each base clustering and each object as equally important, while some approaches make use of weights associated with clusters or with clusterings when combining the different base clusterings. Boosting algorithms developed for classification have led to the idea of considering weighted objects during the clustering process. However, little effort has been devoted to incorporating weighted objects into the consensus process. To fill this gap, we propose a framework called Weighted-Object Ensemble Clustering (WOEC). We first estimate how difficult it is to cluster an object by constructing the co-association matrix that summarizes the base clustering results, and we then embed the corresponding information as weights associated with objects. We propose three different consensus techniques to leverage the weighted objects. All three reduce the ensemble clustering problem to a graph partitioning problem. We experimentally demonstrate the gain in performance that our WOEC methodology achieves with respect to state-of-the-art ensemble clustering methods, as well as its stability and robustness.
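As a rough illustration of the first step described above, the following Python sketch builds a co-association matrix from a few base clusterings and derives a per-object difficulty score from it. The function names, the toy labelings, and in particular the scoring function 4a(1 - a) are assumptions made for this example; this excerpt does not state the exact weighting function, and the graph partitioning step is not shown.

```python
import numpy as np

def co_association(labelings):
    """Fraction of base clusterings that put each pair of objects in the same cluster."""
    labelings = np.asarray(labelings)  # shape: (n_clusterings, n_objects)
    # Compare every pair of objects within each base clustering via broadcasting.
    same = labelings[:, :, None] == labelings[:, None, :]
    return same.mean(axis=0)

def object_weights(A):
    """Hypothetical difficulty score: row mean of 4*a*(1-a).

    The score is 0 when the base clusterings fully agree on a pair (a = 0 or 1)
    and 1 when they are evenly split (a = 0.5). This choice is an assumption
    for illustration only.
    """
    confusion = 4.0 * A * (1.0 - A)
    return confusion.mean(axis=1)

# Toy ensemble: three base clusterings of four objects (made-up labels).
base = [
    [0, 0, 1, 1],
    [0, 0, 0, 1],
    [1, 1, 0, 0],
]
A = co_association(base)
w = object_weights(A)
print(A)
print(w)
```

In this toy ensemble, the third object is grouped inconsistently across the base clusterings, so it receives the largest score; objects whose pairwise assignments are stable receive smaller ones.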

Notes

Other functions satisfying these properties can be used as well.

In real applications, classes may be multimodal, and thus \(k^*\) should be larger than the number of classes. In other cases, there may be fewer clusters than classes. These scenarios are not considered in this paper, and we simply set \(k^*\) equal to the number of classes.

As shown in Fig. 5a, the circled points are far away from the mean points of the classes and their density is considerably lower than that of the other points. These points can bias the computation of the mean vector. They are generated from the same distribution as the other points in the same class, and should be grouped in the same cluster, but behave like outliers for the purpose of this discussion. For this reason, we call them “pseudo-outliers”.

Acknowledgments

This work was supported in part by grants from the Fundamental Research Funds for the Central Universities of China (No. A03012023601042), the Natural Science Foundation of China (Nos. 61572111, 61402378), and the Natural Science Foundation of CQ CSTC (Nos. cstc2014jcyjA40031, cstc2016jcyjA0351).

Cite this article

Ren, Y., Domeniconi, C., Zhang, G. et al. Weighted-object ensemble clustering: methods and analysis. Knowl Inf Syst 51, 661–689 (2017). https://doi.org/10.1007/s10115-016-0988-y

DOI: https://doi.org/10.1007/s10115-016-0988-y