Abstract

Local community detection (or local clustering) is of fundamental importance in large network analysis. Random walk-based methods have been routinely used in this task. Most existing random walk methods are based on the single-walker model. However, without any guidance, a single walker may not be adequate to effectively capture the local cluster. In this paper, we study a multi-walker chain (MWC) model, which allows multiple walkers to explore the network. Each walker is influenced (or pulled back) by all other walkers when deciding its next step. This helps the walkers stay together as a group and within the cluster. We introduce two measures based on the mean and standard deviation of the visiting probabilities of the walkers. These measures not only accurately identify the local cluster, but also help detect the cluster center and boundary, which existing single-walker methods cannot achieve. We provide a rigorous theoretical foundation for MWC and devise efficient algorithms to compute it. Extensive experimental results on a variety of real-world and synthetic networks demonstrate that MWC outperforms the state-of-the-art local community detection methods by a large margin.

Notes

We use local clustering and local community detection interchangeably in this paper.

This is the same set of conditions used to prove the convergence of RWR by the Perron–Frobenius theorem [23].

For the well-structured top-5000 clusters in LiveJournal that the original paper [34] suggests using, the average community size is 28.

References

Alamgir M, Von Luxburg U (2010) Multi-agent random walks for local clustering on graphs. In: ICDM

Alon N, Avin C, Koucky M, Kozma G, Lotker Z, Tuttle MR (2008) Many random walks are faster than one. In: SPAA

Andersen R, Chung F, Lang K (2006) Local graph partitioning using PageRank vectors. In: FOCS

Andersen R, Lang KJ (2006) Communities from seed sets. In: WWW

Barnard K, Duygulu P, Forsyth D, de Freitas N, Blei DM, Jordan MI (2003) Matching words and pictures. J Mach Learn Res 3:1107–1135

Brandes U (2001) A faster algorithm for betweenness centrality. J Math Sociol 25(2):163–177

Cooper C, Frieze A, Radzik T (2009) Multiple random walks and interacting particle systems. In: International colloquium on automata, languages and programming. Springer, New York, pp 399–410

Guan Z, Wu J, Zhang Q, Singh A, Yan X (2011) Assessing and ranking structural correlations in graphs. In: SIGMOD

Guillaumin M, Mensink T, Verbeek J, Schmid C (2009) Tagprop: discriminative metric learning in nearest neighbor models for image auto-annotation. In: ICCV

Hajnal J, Bartlett M (1958) Weak ergodicity in non-homogeneous Markov chains. In: Mathematical proceedings of the Cambridge Philosophical Society

Haveliwala TH (2002) Topic-sensitive PageRank. In: WWW

He K, Shi P, Hopcroft J, Bindel D (2016) Local spectral diffusion for robust community detection. In: SIGKDD twelfth workshop on mining and learning with graphs

He K, Sun Y, Bindel D, Hopcroft J, Li Y (2015) Detecting overlapping communities from local spectral subspaces. In: ICDM

Isaacson DL, Madsen RW (1976) Markov chains, theory and applications, vol 4. Wiley, New York

Jeh G, Widom J (2002) SimRank: a measure of structural-context similarity. In: KDD

Kloster K, Gleich DF (2014) Heat kernel based community detection. In: KDD

Kloumann IM, Kleinberg JM (2014) Community membership identification from small seed sets. In: KDD

Lancichinetti A, Fortunato S, Radicchi F (2008) Benchmark graphs for testing community detection algorithms. Phys Rev E 78(4):046110

Langville AN, Meyer CD (2004) Deeper inside PageRank. Intern Math 1(3):335–380

Lee C, Jang W-D, Sim J-Y, Kim C-S (2015) Multiple random walkers and their application to image cosegmentation. In: CVPR

Lee S-H, Jang W-D, Park BK, Kim C-S (2016) RGB-D image segmentation based on multiple random walkers. In: ICIP

Li Y, He K, Bindel D, Hopcroft JE (2015) Uncovering the small community structure in large networks: a local spectral approach. In: WWW

Lu D, Zhang H (1986) Stochastic process and applications. Tsinghua University Press, Beijing

Pan J-Y, Yang H-J, Faloutsos C, Duygulu P (2004) Automatic multimedia cross-modal correlation discovery. In: KDD

Sarkar P, Moore A (2007) A tractable approach to finding closest truncated-commute-time neighbors in large graphs. In: UAI

Schaeffer SE (2007) Graph clustering. Comput Sci Rev 1(1):27–64

Spielman DA, Teng S-H (2013) A local clustering algorithm for massive graphs and its application to nearly-linear time graph partitioning. SIAM J Comput 42(1):1–26

Tong H, Faloutsos C, Pan J-Y (2006) Fast random walk with restart and its applications. In: ICDM

Whang JJ, Gleich DF, Dhillon IS (2013) Overlapping community detection using seed set expansion. In: CIKM

Wolfowitz J (1963) Products of indecomposable, aperiodic, stochastic matrices. Proc Am Math Soc 14(5):733–737

Wu CW (2005) On bounds of extremal eigenvalues of irreducible and m-reducible matrices. Linear Algebra Appl 402:29–45

Wu X-M, Li Z, So AM, Wright J, Chang S-F (2012) Learning with partially absorbing random walks. In: NIPS

Wu Y, Jin R, Li J, Zhang X (2015) Robust local community detection: on free rider effect and its elimination. In: VLDB

Yang J, Leskovec J (2012) Defining and evaluating network communities based on ground-truth. In: ICDM

Yin H, Benson AR, Leskovec J, Gleich DF (2017) Local higher-order graph clustering. In: KDD

Yu W, Lin X, Zhang W, Chang L, Pei J (2013) More is simpler: effectively and efficiently assessing node-pair similarities based on hyperlinks. In: VLDB

Zhang J, Tang J, Ma C, Tong H, Jing Y, Li J (2015) Panther: fast top-k similarity search on large networks. In: KDD

Zhou D, Zhang S, Yildirim MY, Alcorn S, Tong H, Davulcu H, He J (2017) A local algorithm for structure-preserving graph cut. In: KDD

Zhu X, Goldberg AB (2009) Introduction to semi-supervised learning. Synth Lect Artif Intell Mach Learn 3(1):1–130

Acknowledgements

This work was partially supported by the National Science Foundation Grants IIS-1664629 and CAREER.

Appendix A

A.1 The Proof of Theorem 1

Before proving Theorem 1, we first prove the following lemma. Without loss of generality, we use \(W_k\) as an example and omit the subscript k in our discussion.

Lemma 1

For any walker in MWC, if \( {\mathbf {P}}\) is stochastic, irreducible and aperiodic (SIA), then for any \(\tau \ge 1\), \({\mathbb {P}}^{(1,\tau )}={\mathbb {P}}^{(1)}{\mathbb {P}}^{(2)}\ldots {\mathbb {P}}^{(\tau )}\) is also SIA.

Proof

Based on Eq. (5), \({\mathbb {P}}^{(\tau )}\) is stochastic for any \(\tau \ge 1\), and so is \({\mathbb {P}}^{(1,\tau )}\), since the product of stochastic matrices is also stochastic.

Furthermore, we have

Due to the last term, \((1-\alpha ){\mathbf {1}}({\mathbf {v}}^{(\tau -1)})^\intercal \), there is at least one column of \({\mathbb {P}}^{(1,\tau )}\) whose entries are all positive. Thus, \({\mathbb {P}}^{(1,\tau )}\) is irreducible (see Corollary 4 in [31]).
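The expansion behind this step can be written out explicitly. The following is a sketch that assumes Eq. (5) has the restart form \({\mathbb {P}}^{(\tau )}=\alpha {\mathbf {P}}+(1-\alpha ){\mathbf {1}}({\mathbf {v}}^{(\tau -1)})^\intercal \), which is consistent with the last term quoted above. Because \({\mathbb {P}}^{(1,\tau -1)}\) is stochastic, \({\mathbb {P}}^{(1,\tau -1)}{\mathbf {1}}={\mathbf {1}}\), so for \(\tau \ge 2\)

\[
{\mathbb {P}}^{(1,\tau )}={\mathbb {P}}^{(1,\tau -1)}\,{\mathbb {P}}^{(\tau )}
=\alpha \,{\mathbb {P}}^{(1,\tau -1)}{\mathbf {P}}+(1-\alpha )\,{\mathbf {1}}({\mathbf {v}}^{(\tau -1)})^\intercal .
\]

Under this assumption, every column j with \(v_j^{(\tau -1)}>0\) has all entries at least \((1-\alpha )v_j^{(\tau -1)}>0\).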

The self-loops given by the diagonal of \((1-\alpha ){\mathbf {1}}({\mathbf {v}}^{(\tau -1)})^\intercal \) guarantee that \({\mathbb {P}}^{(1,\tau )}\) is aperiodic. \(\square \)
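The two properties used in the proof of Lemma 1 can be checked numerically on a toy graph. The sketch below is illustrative only: it assumes Eq. (5) has the restart form \(\alpha {\mathbf {P}}+(1-\alpha ){\mathbf {1}}{\mathbf {v}}^\intercal \), and the graph, the value of \(\alpha \), the influence vectors and all function names are hypothetical.

```python
def modified_matrix(P, v, alpha):
    """Assumed form of Eq. (5): alpha * P + (1 - alpha) * 1 v^T."""
    n = len(P)
    return [[alpha * P[i][j] + (1 - alpha) * v[j] for j in range(n)]
            for i in range(n)]


def matmul(A, B):
    """Plain dense product of two square matrices."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def is_row_stochastic(A, tol=1e-9):
    """True if all entries are non-negative and every row sums to 1."""
    return all(min(row) >= 0 and abs(sum(row) - 1.0) < tol for row in A)


def positive_columns(A):
    """Indices of columns whose entries are all strictly positive."""
    return [j for j in range(len(A)) if all(row[j] > 0 for row in A)]


# Toy graph: triangle 0-1-2 with a pendant node 3 attached to node 2.
# It is connected and non-bipartite, so its transition matrix P is SIA.
P = [[0, 1 / 2, 1 / 2, 0],
     [1 / 2, 0, 1 / 2, 0],
     [1 / 3, 1 / 3, 0, 1 / 3],
     [0, 0, 1, 0]]
alpha = 0.9
# Hypothetical influence vectors v^(0), v^(1), v^(2), one per group iteration.
influence = [[1, 0, 0, 0], [0, 0.5, 0.5, 0], [0, 0, 0, 1]]

product = modified_matrix(P, influence[0], alpha)
for v in influence[1:]:
    product = matmul(product, modified_matrix(P, v, alpha))

print(is_row_stochastic(product))  # the product stays stochastic
print(positive_columns(product))   # contains column 3, where the last v is positive
```

The last influence vector puts all its mass on node 3, so column 3 of the product is bounded below by \(1-\alpha \) in every row, which is the positive column the irreducibility argument relies on.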

If T exists, \({\mathbb {P}}^{(\tau +1)}={\mathbb {P}}^{(\tau +T+1)}\) for \(\tau \ge \tau _p\), since \({\mathbf {v}}^{(\tau )}={\mathbf {v}}^{(\tau +T)}\) in Eq. (3). Thus, \({\mathbb {P}}^{(\tau +1,\tau +T)} ={\mathbb {P}}^{(\tau +T+1,\tau +2T)}\). We now consider only the sequence \(\{{\mathbb {P}}^{(\tau )}\}_{\tau =\tau _p+1}^\infty \) after \(\tau _p\), and define

\[
{\mathcal {P}}={\mathbb {P}}^{(\tau _p+1,\tau _p+T)}={\mathbb {P}}^{(\tau _p+1)}{\mathbb {P}}^{(\tau _p+2)}\ldots {\mathbb {P}}^{(\tau _p+T)}.
\]

Then the chain \(\{{\mathcal {P}}\}\) is homogeneous. Suppose the graph is undirected and connected, has a finite node set, and its corresponding \({\mathbf {P}}\) is SIA. Then, by Lemma 1, the modified transition matrices \({\mathbb {P}}^{(\tau )}\) and \({\mathcal {P}}\) in MWC are also SIA, and hence \(\lim \limits _{n\rightarrow \infty }|| {\mathcal {P}}^{n+1}-{\mathcal {P}}^{n}||_\infty =0\) [10, 14].

Proof of Theorem 1

For \(1\le \mu \le T\), we have

For a sufficiently large n such that \({\mathcal {P}}^{n+1}={\mathcal {P}}^{n}={\mathcal {Q}}\), let \(\tau '_c=\tau _p+nT\).

Then we have \({\mathbf {x}}^{(\tau '_c+T+\mu )}={\mathbf {x}}^{(\tau '_c+\mu )}\). That is, for any \(\tau \ge \tau '_c, {\mathbf {x}}^{(\tau +T)}={\mathbf {x}}^{(\tau )}\).

Next, we estimate \(\tau _c'\). To reach a computational tolerance \(\epsilon >0\), i.e., \(|| {\mathcal {P}}^{n+1}-{\mathcal {P}}^{n}||_\infty <\epsilon \), a rough estimate of n is \(\log \epsilon /\log (\alpha ^T)\) [19], because

Then \(\tau '_c=\tau _p+nT\le \tau _p+\lfloor \log \epsilon /\log \alpha \rfloor \).

For the entire group of K walkers, we set \(\tau _c\) as the largest \(\tau '_c\) of all walkers and we have \(\tau _p\le \tau _c\le \tau _p+\lfloor \log \epsilon /\log \alpha \rfloor \). \(\square \)
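The arithmetic behind this bound is short enough to check by hand: with \(n=\log \epsilon /\log (\alpha ^T)\), the product \(nT\) equals \(\log \epsilon /\log \alpha \) regardless of the period T. The sketch below illustrates this with hypothetical values for \(\tau _p\), \(\epsilon \), \(\alpha \) and T; the function names are not from the paper.

```python
import math


def periods_needed(eps, alpha, T):
    """Rough number n of periods with (alpha**T)**n <= eps."""
    return math.log(eps) / math.log(alpha ** T)


def convergence_upper_bound(tau_p, eps, alpha):
    """Upper bound tau_p + floor(log(eps) / log(alpha)) from Theorem 1."""
    return tau_p + math.floor(math.log(eps) / math.log(alpha))


eps, alpha, tau_p = 1e-6, 0.9, 10  # hypothetical tolerance, decay factor, tau_p

for T in (1, 3, 7):
    # n shrinks as T grows, but n * T stays at log(eps) / log(alpha)
    print(T, periods_needed(eps, alpha, T) * T)

print(convergence_upper_bound(tau_p, eps, alpha))  # 141
```

With these values \(\log \epsilon /\log \alpha \approx 131.1\), so the bound adds 131 iterations to \(\tau _p\).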

A.2 The Proof of Theorem 2

Theorem 2 directly follows from the following lemma.

Lemma 2

[30] Let \(\Omega = \{{\mathbb {A}}^{(1)},\ldots ,{\mathbb {A}}^{(\tau )}\}\) be a set of square stochastic matrices of the same order such that for any \(m\ge 1\), the product \({\mathbb {B}}={\mathbb {A}}^{(i_1)}\ldots {\mathbb {A}}^{(i_m)}\) (\(1\le i_j\le \tau \) for \(1\le j\le m\)) is SIA. Then for any \(\epsilon >0\), there exists an integer \(\nu (\epsilon )\) such that any \({\mathbb {B}}\) of length \(n\ge \nu (\epsilon )\) satisfies \(\delta ({\mathbb {B}})<\epsilon \).

Proof of Theorem 2

Let \(\Omega \) be the set that contains all possible modified transition matrices in MWC. From Lemma 1, we know that the product of matrices from \(\Omega \) with any length and order is SIA. Based on Lemma 2, we know that for any \(\epsilon >0\), there exists an integer \(\nu (\epsilon )\) such that when \(\tau _s\ge \nu (\epsilon )\), \(\delta ({\mathbb {P}}^{(1,\tau _s)})<\epsilon \).

For \(\nu (\epsilon )\), based on Lemma 2 in [30], we know that \(\delta ({\mathbb {P}}^{(1,\tau _s)})\le \prod _{\tau =1}^{\tau _s}\lambda ({\mathbb {P}}^{(\tau )})\). Because the rows in \({\mathbf {1}}({\mathbf {v}}^{(\tau -1)})^\intercal \) are identical, then

So \(\delta ({\mathbb {P}}^{(1,\tau _s)})\le (\alpha \lambda ({\mathbf {P}}))^{\tau _s}\). To satisfy the tolerance \(\epsilon \), we can set \(\nu (\epsilon )=\log \epsilon /\log (\alpha \lambda ({\mathbf {P}}))\). \(\square \)
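The identity behind the factor \(\alpha \lambda ({\mathbf {P}})\) can be verified directly. The derivation below is a sketch under two assumptions that are not displayed in this appendix: the standard Hajnal and Wolfowitz coefficient \(\lambda ({\mathbf {A}})=1-\min _{i,k}\sum _j\min (a_{ij},a_{kj})\), and the restart form \({\mathbb {P}}^{(\tau )}=\alpha {\mathbf {P}}+(1-\alpha ){\mathbf {1}}({\mathbf {v}}^{(\tau -1)})^\intercal \) for Eq. (5). Writing \(v_j\) for the j-th entry of \({\mathbf {v}}^{(\tau -1)}\) and using \(\sum _j v_j=1\), for any two rows i and k,

\[
\sum _j\min \bigl (\alpha p_{ij}+(1-\alpha )v_j,\ \alpha p_{kj}+(1-\alpha )v_j\bigr )
=\alpha \sum _j\min (p_{ij},p_{kj})+(1-\alpha ),
\]

\[
\lambda ({\mathbb {P}}^{(\tau )})=1-(1-\alpha )-\alpha \min _{i,k}\sum _j\min (p_{ij},p_{kj})=\alpha \,\lambda ({\mathbf {P}}).
\]

The common term \((1-\alpha )v_j\) shifts both rows equally, which is why identical rows in \({\mathbf {1}}({\mathbf {v}}^{(\tau -1)})^\intercal \) contribute nothing to the row difference.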

For a stochastic matrix \({\mathbf {P}}\), the measure \(\lambda ({\mathbf {P}})\) quantifies how much its row vectors differ, and we have \(0\le \lambda ({\mathbf {P}})\le 1\). In practice, the transition matrix \({\mathbf {P}}\) is sparse and has block structure, so we can select two rows of \({\mathbf {P}}\) that have no overlapping nonzero entries, which makes \(\lambda ({\mathbf {P}})\) reach its maximum value 1. So in the worst case, we can set \(\tau _s=\lfloor \log \epsilon /\log \alpha \rfloor \) to stop the algorithm.
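The worst case described here is easy to reproduce on a small graph. The sketch below assumes the standard definitions \(\lambda ({\mathbf {A}})=1-\min _{i,k}\sum _j\min (a_{ij},a_{kj})\) and \(\delta ({\mathbf {A}})=\max _j\max _{i,k}|a_{ij}-a_{kj}|\), as well as the restart form of Eq. (5); the graph, the uniform influence vector and the function names are hypothetical.

```python
from itertools import combinations


def lam(A):
    """Assumed definition: 1 - min over row pairs of sum_j min(a_ij, a_kj)."""
    overlap = min(sum(min(a, b) for a, b in zip(r, s))
                  for r, s in combinations(A, 2))
    return 1.0 - overlap


def delta(A):
    """Assumed definition: largest spread within any single column."""
    n = len(A)
    return max(max(row[j] for row in A) - min(row[j] for row in A)
               for j in range(n))


def modified_matrix(P, v, alpha):
    """Assumed form of Eq. (5): alpha * P + (1 - alpha) * 1 v^T."""
    n = len(P)
    return [[alpha * P[i][j] + (1 - alpha) * v[j] for j in range(n)]
            for i in range(n)]


def matmul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


# Triangle 0-1-2 with pendant node 3: rows 2 and 3 of P share no nonzero column.
P = [[0, 1 / 2, 1 / 2, 0],
     [1 / 2, 0, 1 / 2, 0],
     [1 / 3, 1 / 3, 0, 1 / 3],
     [0, 0, 1, 0]]
alpha, tau_s = 0.9, 5
M = modified_matrix(P, [0.25] * 4, alpha)  # hypothetical uniform influence vector

product = M
for _ in range(tau_s - 1):
    product = matmul(product, M)

print(lam(P))                          # worst case: rows with disjoint support
print(lam(M))                          # equals alpha * lam(P) up to rounding
print(delta(product), alpha ** tau_s)  # delta stays below the product bound
```

Even on this four-node graph \(\lambda ({\mathbf {P}})\) already reaches 1, so the bound reduces to \(\alpha ^{\tau _s}\), matching the worst-case stopping rule.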

A.3 The Proof of Theorem 3

In this section, we analyze the error bound on the difference between the exact and estimated probability vectors for \(W_k\) in the \((\tau +1)\)th group iteration. We first define the probability flow, and provide a tight error bound in Theorem 4. The proof of Theorem 3 then follows. For simplicity, we omit the subscript “k” and use \({\mathbf {x}}^{(\tau +1)}\) and \(\hat{{\mathbf {x}}}^{(\tau +1)}\) to represent the exact and estimated probability vectors, respectively.

Let \(U^{(\tau )}\) represent the set of nodes whose probability will be updated in the \((\tau +1)\)th iteration, i.e., \(U^{(\tau )} = C^{(\tau )}\cup \varGamma (C^{(\tau )})\), where \(\varGamma (C)\) represents the direct neighbors of the nodes in C, and let \(S^{(\tau )}\) represent the remaining nodes. We only update the scores for nodes in \(U^{(\tau )}\) in the \((\tau +1)\)th iteration, i.e.,

The estimation error is thus \(\Delta =||\frac{\hat{{\mathbf {x}}}^{(\tau +1)}}{||\hat{{\mathbf {x}}}^{(\tau +1)}||_1}-{\mathbf {x}}^{(\tau +1)}||_1\).
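As a small illustration of this error measure, the sketch below normalizes an estimated vector and takes the \(L_1\) distance to the exact one. The two vectors are made-up numbers, not results from the paper, and the function name is hypothetical.

```python
def estimation_error(x_hat, x):
    """L1 distance between the normalized estimate and the exact vector."""
    norm = sum(abs(p) for p in x_hat)
    return sum(abs(h / norm - e) for h, e in zip(x_hat, x))


x_exact = [0.5, 0.3, 0.15, 0.05]  # hypothetical exact probabilities
x_partial = [0.5, 0.3, 0.1, 0.0]  # hypothetical estimate; mass outside U is dropped

print(estimation_error(x_partial, x_exact))      # about 0.1778 (= 8/45)
print(estimation_error([2 * p for p in x_exact], x_exact))  # ~0: scaling is removed
```

Because the estimate is normalized first, rescaling \(\hat{{\mathbf {x}}}\) does not change \(\Delta \); only the redistribution of mass between nodes does.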

Definition 5

The probability flow from a set of nodes B to another set A in the \((\tau +1)\)th group iteration is defined as

where \(N_g\) represents the direct neighbors of g.

The probability flows during the \((\tau +1)\)th iteration are (we omit the superscript “\({(\tau )}\)” for \(U^{(\tau )}\) and \(S^{(\tau )}\) below):

Theorem 4

where \(\gamma =1-\parallel \hat{{\mathbf {x}}}^{(\tau +1)} \parallel _1=a+b-\beta \) and \(\beta =(c+b)/\alpha \).

Proof

According to Eqs. (11) and (12), we have

where \({\mathbf {x}}_{U}\) and \({\mathbf {x}}_{S}\) are the sub-vector projections of \({\mathbf {x}}\) on U and S, respectively, and \(\beta =(c+b)/\alpha \).

Thus, we have

\(\square \)

The proof of Theorem 3 is as follows.

Proof

(1) If \(\gamma \le 0\), we have

(2) If \(\gamma >0\), we have \(\Delta \le 2(\beta +\gamma )=2(a+b)\).

Let \(\delta U=\{g\in U|\exists i\in S, s.t., (i,g)\in E\}\) be the set of boundary nodes of U. We have \(a+b\le F_{\delta U\cup S\rightarrow S}\le F_{V\backslash C^{(\tau )}\rightarrow V}\le 1-\theta \).

Thus, \(\Delta \le 2(1-\theta )\). \(\square \)

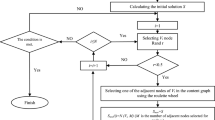

Algorithm 4 shows the overall process of the partial node set updating strategy for walker \(W_k\), which can be used to replace the UPDATE in Algorithm 2. The algorithm first finds the core node set \(C_{k}^{(\tau )}\) by a breadth-first search starting from the influential nodes of walker \(W_k\) (Line 1). In Line 2, the updating node set \(U_k^{(\tau )}\) is obtained as the union of the nodes in \(C_{k}^{(\tau )}\) and their direct neighbors. Lines 3 and 4 update the node visiting probabilities according to Eq. (11). Line 5 returns the normalized probability vector.

The breadth-first search costs \(O(d|U_k^{(\tau )}|)\), where d is the average degree of nodes in \(U_k^{(\tau )}\). In each iteration, the updating time is reduced from \(O(|V|+|E|)\) to \(O(d|U_k^{(\tau )}|)\) for \(W_k\).
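The five steps described for Algorithm 4 can be sketched as follows. This is not the authors' implementation: Eq. (11) and the exact stopping rule of the search are not reproduced in this appendix, so the sketch assumes that the search stops once the visited nodes hold at least \(\theta \) of the probability mass, and that the update is a restart-style step restricted to in-flow from nodes in U. The graph, seeds and all names are hypothetical.

```python
from collections import deque


def core_set(adj, x, seeds, theta):
    """BFS from the seed nodes until the visited mass reaches theta (assumed rule)."""
    visited = set(seeds)
    queue = deque(visited)
    mass = sum(x[s] for s in visited)
    while queue and mass < theta:
        g = queue.popleft()
        for h in adj[g]:
            if h not in visited:
                visited.add(h)
                queue.append(h)
                mass += x[h]
                if mass >= theta:
                    break
    return visited


def update_set(adj, core):
    """U = C together with the direct neighbors of the nodes in C."""
    U = set(core)
    for g in core:
        U.update(adj[g])
    return U


def partial_update(adj, x, v, seeds, alpha, theta):
    """One partial update for a single walker; only nodes in U are touched."""
    U = update_set(adj, core_set(adj, x, seeds, theta))
    x_hat = [0.0] * len(x)
    for i in U:
        # Assumed stand-in for Eq. (11): in-flow from neighbors inside U only.
        inflow = sum(x[g] / len(adj[g]) for g in adj[i] if g in U)
        x_hat[i] = alpha * inflow + (1 - alpha) * v[i]
    total = sum(x_hat)
    return [p / total for p in x_hat], U


# Toy undirected graph: triangle 0-1-2 followed by the path 2-3-4-5.
adj = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}
x = [0.4, 0.3, 0.2, 0.06, 0.03, 0.01]  # hypothetical current probabilities
v = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]     # hypothetical influence vector

x_new, U = partial_update(adj, x, v, seeds=[0], alpha=0.9, theta=0.85)
print(sorted(U))  # [0, 1, 2, 3]
print(x_new)
```

Each node in U is processed once together with its adjacency list, which is where the \(O(d|U_k^{(\tau )}|)\) cost per iteration comes from; nodes 4 and 5 are never visited in this example.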

Cite this article

Bian, Y., Ni, J., Cheng, W. et al. The multi-walker chain and its application in local community detection. Knowl Inf Syst 60, 1663–1691 (2019). https://doi.org/10.1007/s10115-018-1247-1
