Abstract

In this paper, we propose a single-agent Monte Carlo-based reinforced feature selection method, together with two efficiency-improvement strategies: an early stopping strategy and a reward-level interactive strategy. Feature selection is one of the most important techniques in data preprocessing; it aims to find the optimal feature subset for a given downstream machine learning task. Extensive research has been conducted to improve its effectiveness and efficiency. Recently, multi-agent reinforced feature selection (MARFS) has achieved great success in improving feature selection performance. However, MARFS suffers from a heavy computational cost, which greatly limits its application in real-world scenarios. We therefore propose an efficient reinforced feature selection method that uses a single agent to traverse the whole feature set and decide, one feature at a time, whether to select it. Specifically, we first develop a behavior policy and use it to traverse the feature set and generate training data. We then evaluate the target policy on this training data and improve it using the Bellman equation. In addition, we perform importance sampling incrementally and propose an early stopping strategy that improves training efficiency by removing skewed data: the behavior policy stops traversing with a probability inversely proportional to the importance sampling weight. We also propose a reward-level and training-level interactive strategy that improves training efficiency through external advice. Moreover, we propose an incremental descriptive statistics method that represents the state at low computational cost. Finally, we design extensive experiments on real-world data to demonstrate the superiority of the proposed method.
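The traverse, importance-sampling and early-stopping ideas summarized above can be illustrated with a small sketch. It is not the authors' implementation: it swaps in standard off-policy Monte Carlo control with weighted importance sampling (Sutton and Barto, Ch. 5), and every name in it is hypothetical. Several further assumptions apply. The state is a tabular pair (feature position, number selected so far) rather than the paper's descriptive-statistics state. Both policies are epsilon-greedy over the same Q table. The stopping rule is read as `min(1, c / weight)` for an assumed constant `c`, and early-stopped trajectories are simply discarded. `toy_reward` stands in for downstream model accuracy.

```python
import random

SKIP, SELECT = 0, 1  # the two actions available at every feature


def policy_probs(q, state, epsilon):
    """epsilon-greedy probabilities [P(SKIP), P(SELECT)] for one state."""
    values = q.get(state, [0.0, 0.0])
    greedy = SELECT if values[SELECT] > values[SKIP] else SKIP  # ties -> SKIP
    probs = [epsilon / 2, epsilon / 2]
    probs[greedy] += 1.0 - epsilon
    return probs


def stop_probability(weight, c):
    """Stop with probability inversely proportional to the running
    importance-sampling weight (c is an assumed scale), clipped to [0, 1]."""
    return 1.0 if weight <= 0 else min(1.0, c / weight)


def generate_episode(q, n_features, eps_b, eps_t, c, rng):
    """Traverse features 0..n-1 once with the behavior policy.

    Returns (state, action, behavior_prob) triples, or None when the
    early-stopping rule fires and the truncated trajectory is discarded."""
    steps, weight, n_selected = [], 1.0, 0
    for i in range(n_features):
        state = (i, n_selected)  # hypothetical tabular state: position, count
        b = policy_probs(q, state, eps_b)
        action = SELECT if rng.random() < b[SELECT] else SKIP
        pi = policy_probs(q, state, eps_t)
        weight *= pi[action] / b[action]  # incremental importance-sampling weight
        steps.append((state, action, b[action]))
        n_selected += action
        if i < n_features - 1 and rng.random() < stop_probability(weight, c):
            return None
    return steps


def update(q, counts, steps, reward, eps_t, gamma=1.0):
    """Backward weighted importance-sampling update of Q (Sutton & Barto, ch. 5).
    The only reward is the subset score, received after the last feature."""
    g, w = 0.0, 1.0
    for t in reversed(range(len(steps))):
        state, action, b_prob = steps[t]
        g = gamma * g + (reward if t == len(steps) - 1 else 0.0)
        q.setdefault(state, [0.0, 0.0])
        counts.setdefault(state, [0.0, 0.0])
        counts[state][action] += w
        q[state][action] += (w / counts[state][action]) * (g - q[state][action])
        w *= policy_probs(q, state, eps_t)[action] / b_prob  # improved target


def toy_reward(selected, useful):
    """Hypothetical stand-in for downstream accuracy."""
    return sum(1.0 if f in useful else -0.5 for f in selected)


def train(n_features, useful, episodes=3000, eps_b=0.3, eps_t=0.05, c=0.02, seed=0):
    rng = random.Random(seed)
    q, counts, kept = {}, {}, 0
    for _ in range(episodes):
        steps = generate_episode(q, n_features, eps_b, eps_t, c, rng)
        if steps is None:
            continue  # early-stopped: the costly reward evaluation is skipped
        selected = [state[0] for state, action, _ in steps if action == SELECT]
        update(q, counts, steps, toy_reward(selected, useful), eps_t)
        kept += 1
    return q, kept


def greedy_subset(q, n_features):
    """Read the learned subset off the greedy target policy."""
    chosen = []
    for i in range(n_features):
        values = q.get((i, len(chosen)), [0.0, 0.0])
        if values[SELECT] > values[SKIP]:
            chosen.append(i)
    return chosen
```

For example, `q, kept = train(6, {0, 2, 5})` followed by `greedy_subset(q, 6)` returns the subset favored by the learned target policy. Under these assumptions, each non-greedy choice multiplies the weight by `eps_t / eps_b`. As a result, the stopping rule mostly discards trajectories that contain several non-greedy selections, which is one possible reading of "removing skewed data". Each discarded trajectory also avoids one expensive reward evaluation.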


References

Yang Y, Pedersen JO (1997) A comparative study on feature selection in text categorization. Icml 97:412–420

Forman G (2003) An extensive empirical study of feature selection metrics for text classification. J Mach Learn Res 3(3):1289–1305

Hall MA (1999) Feature selection for discrete and numeric class machine learning

Yu L, Liu H (2003) Feature selection for high-dimensional data: a fast correlation-based filter solution. In: Proceedings of the 20th International conference on machine learning (ICML-03), pp 856–863

Yang J, Honavar V (1998) Feature subset selection using a genetic algorithm, pp 117–136

Kim Y, Street WN, Menczer F (2000) Feature selection in unsupervised learning via evolutionary search. In: Proceedings of the Sixth ACM SIGKDD International conference on knowledge discovery and data mining, pp 365–369 ACM

Narendra PM, Fukunaga K (1977) A branch and bound algorithm for feature subset selection. IEEE Trans Comput 9:917–922

Kohavi R, John GH (1997) Wrappers for feature subset selection. Artif Intell 97(1–2):273–324

Tibshirani R (1996) Regression shrinkage and selection via the lasso. J R Stat Soc Series B (Methodol), pp 267–288

Sugumaran V, Muralidharan V, Ramachandran K (2007) Feature selection using decision tree and classification through proximal support vector machine for fault diagnostics of roller bearing. Mech Syst Signal Process 21(2):930–942

Liu K, Fu Y, Wang P, Wu L, Bo R, Li X (2019) Automating feature subspace exploration via multi-agent reinforcement learning. In: Proceedings of the 25th ACM SIGKDD International conference on knowledge discovery and data mining, pp 207–215

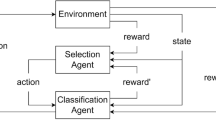

Fan W, Liu K, Liu H, Wang P, Ge Y, Fu Y (2020) Autofs: automated feature selection via diversity-aware interactive reinforcement learning. arXiv preprint arXiv:2008.12001

Sutton RS, Barto AG (2018) Reinforcement learning: an introduction

Schaul T, Quan J, Antonoglou I, Silver D (2015) Prioritized experience replay. arXiv preprint arXiv:1511.05952

Bellman R (1957) Kalaba R Dynamic programming and statistical communication theory. Proc Natl Acad Sci USA 43(8):749

Suay HB, Chernova S (2011) Effect of human guidance and state space size on interactive reinforcement learning. In: 2011 Ro-Man, pp 1–6. IEEE

Torrey L, Taylor M (2013) Teaching on a budget: agents advising agents in reinforcement learning. In: Proceedings of the 2013 International conference on autonomous agents and multi-agent systems, pp 1053–1060

MacEachern SN, Clyde M, Liu JS (1999) Sequential importance sampling for nonparametric bayes models: the next generation. Can J Stat 27(2):251–267

Mnih V, Kavukcuoglu K, Silver D, Graves A, Antonoglou I, Wierstra D, Riedmiller M (2013) Playing atari with deep reinforcement learning. arXiv preprint arXiv:1312.5602

Gupta P, Doermann D, DeMenthon D (2002) Beam search for feature selection in automatic svm defect classification. In: 2002 International conference on pattern recognition, vol 2, pp 212–215 . IEEE

Fraiman N, Li Z (2022) Beam search for feature selection. arXiv preprint arXiv:2203.04350

Liu K, Fu Y, Wu L, Li X, Aggarwal C, Xiong H (2021) Automated feature selection: a reinforcement learning perspective. IEEE Trans Knowl Data Eng. https://doi.org/10.1109/TKDE.2021.3115477

Blackard JA (2015) Kaggle forest cover type prediction. [EB/OL]. https://www.kaggle.com/c/forest-cover-type-prediction/data

Dua D, Graff C (2017) UCI machine learning repository. http://archive.ics.uci.edu/ml

Van Der Putten P, van Someren M (2000) Coil challenge 2000: the insurance company case

Guvenir HA, Acar B, Demiroz G, Cekin A (1997) A supervised machine learning algorithm for arrhythmia analysis. Comput Cardiol 1997:433–436

Stiglic G, Kokol P (2010) Stability of ranked gene lists in large microarray analysis studies. J Biomed Biotechnol. https://doi.org/10.1155/2010/616358

Baldi P, Sadowski P (2014) Whiteson D Searching for exotic particles in high-energy physics with deep learning. Nat Commun 5(1):1–9

Leardi R (1996) Genetic algorithms in feature selection, pp 67–86

Peng H, Long F, Ding C (2005) Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans Pattern Anal Mach Intell 27(8):1226–1238

Granitto PM, Furlanello C, Biasioli F (2006) Gasperi F Recursive feature elimination with random forest for ptr-ms analysis of agroindustrial products. Chemom Intell Lab Syst 83(2):83–90

Liu JS, Chen R, Wong WH (1998) Rejection control and sequential importance sampling. J Am Stat Assoc 93(443):1022–1031

Xie T, Ma Y, Wang Y-X (2019) Towards optimal off-policy evaluation for reinforcement learning with marginalized importance sampling. In: Advances in Neural Information Processing Systems, pp 9668–9678

Fortunato M, Azar MG, Piot B, Menick J, Osband I, Graves A, Mnih V, Munos R, Hassabis D, Pietquin O, et al (2017) Noisy networks for exploration. arXiv preprint arXiv:1706.10295

Yu Y (2018) Towards sample efficient reinforcement learning. In: IJCAI, pp 5739–5743

Raginsky M, Rakhlin A, Telgarsky M (2017) Non-convex learning via stochastic gradient langevin dynamics: a nonasymptotic analysis. arXiv preprint arXiv:1702.03849

Wang D, Wang P, Zhou J, Sun L, Du B, Fu Y (2020) Defending water treatment networks: Exploiting spatio-temporal effects for cyber attack detection. In: 2020 IEEE International conference on data mining (ICDM), pp 32–41. IEEE

Zhao X, Liu K, Fan W, Jiang L, Zhao X, Yin M, Fu Y (2020) Simplifying reinforced feature selection via restructured choice strategy of single agent. In: 2020 IEEE International conference on data mining (ICDM), pp 871–880 . IEEE

Lin LJ (1991) Programming robots using reinforcement learning and teaching. In: AAAI, pp 781–786

Schaal S (1999) Is imitation learning the route to humanoid robots? Trends Cogn Sci 3(6):233–242

Ho J, Ermon S (2016) Generative adversarial imitation learning. In: Advances in neural information processing systems, pp 4565–4573

Knox WB, Stone P, Breazeal C (2013) Teaching agents with human feedback: a demonstration of the tamer framework. In: Proceedings of the companion publication of the 2013 international conference on intelligent user interfaces companion, pp 65–66

Wang D, Wang P, Liu K, Zhou Y, Hughes CE, Fu Y (2021) Reinforced imitative graph representation learning for mobile user profiling: an adversarial training perspective. Proc AAAI Conf Artif Intell 35:4410–4417


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Liu, K., Wang, D., Du, W. et al. Interactive reinforced feature selection with traverse strategy. Knowl Inf Syst 65, 1935–1962 (2023). https://doi.org/10.1007/s10115-022-01812-3


DOI: https://doi.org/10.1007/s10115-022-01812-3