Abstract

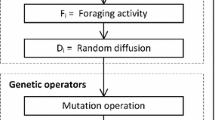

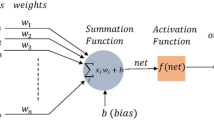

In recent years, artificial neural network models have advanced significantly and have been applied to a variety of real-world problems. However, one limitation of artificial neural networks is that they can get stuck in local minima during the training phase, a consequence of their reliance on gradient descent-based techniques. This negatively impacts the generalization performance of the network. This study proposes a new hybrid artificial neural network model called COOT-ANN which, for the first time, uses the coot optimization algorithm, a metaheuristic-based approach, to optimize the parameters of an artificial neural network. Because training relies on a metaheuristic optimization algorithm rather than on gradients, the COOT-ANN model avoids getting stuck in local minima during the training phase. The results of the study demonstrate that the proposed method performs well in terms of accuracy, cross-entropy, F1-score, and Cohen’s Kappa when compared with the gradient descent, scaled conjugate gradient, and Levenberg–Marquardt optimization techniques.
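
As a rough illustration of the general idea described above, the following sketch trains a tiny one-hidden-layer network on the XOR problem by searching the weight space with a simple population-based optimizer instead of gradients, using cross-entropy as the fitness value. It is not the coot update rule and not the authors' implementation: the network size, the function names (`forward`, `cross_entropy`, `train`), the move-toward-best step with Gaussian noise, and all parameter values are assumptions chosen only for illustration.

```python
import math
import random

N_IN, N_HIDDEN = 2, 3                              # assumed toy architecture
DIM = N_HIDDEN * (N_IN + 1) + N_HIDDEN + 1         # total number of weights and biases


def forward(weights, x):
    """One-hidden-layer network: tanh hidden units, sigmoid output."""
    idx = 0
    hidden = []
    for _ in range(N_HIDDEN):
        s = weights[idx + N_IN]                    # hidden bias
        for j in range(N_IN):
            s += weights[idx + j] * x[j]
        hidden.append(math.tanh(s))
        idx += N_IN + 1
    out = weights[idx + N_HIDDEN]                  # output bias
    for h in range(N_HIDDEN):
        out += weights[idx + h] * hidden[h]
    out = max(-60.0, min(60.0, out))               # guard against overflow in exp
    return 1.0 / (1.0 + math.exp(-out))


def cross_entropy(weights, data):
    """Mean binary cross-entropy of the network over (input, label) pairs."""
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = min(max(forward(weights, x), eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(data)


def train(data, pop_size=20, iters=200, bound=5.0, seed=0):
    """Gradient-free search: each candidate drifts toward the best one found.

    This generic update is an assumption standing in for a real metaheuristic.
    """
    rng = random.Random(seed)
    pop = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(pop_size)]
    fit = [cross_entropy(w, data) for w in pop]
    best_i = min(range(pop_size), key=fit.__getitem__)
    best, best_fit = pop[best_i][:], fit[best_i]
    history = [best_fit]                           # best fitness per iteration
    for _ in range(iters):
        for i in range(pop_size):
            r = rng.random()
            cand = [w + r * (b - w) + rng.gauss(0, 0.3)
                    for w, b in zip(pop[i], best)]
            cand = [max(-bound, min(bound, c)) for c in cand]
            f = cross_entropy(cand, data)
            if f < fit[i]:                         # greedy replacement
                pop[i], fit[i] = cand, f
                if f < best_fit:
                    best, best_fit = cand[:], f
        history.append(best_fit)
    return best, history


XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
best, history = train(XOR)
print(f"cross-entropy: {history[0]:.4f} -> {history[-1]:.4f}")
```

Because a candidate is only accepted when it lowers the cross-entropy, the best fitness never increases from one iteration to the next. No derivative of the loss is required, which is the property that lets metaheuristic trainers sidestep the gradient-based updates discussed in the abstract.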

Data Availability

The datasets generated and/or analyzed during the current study are available in the UCI Machine Learning Repository [46].

Code Availability

The code is available from the corresponding author on reasonable request.

References

Abdolrasol MG, Hussain SS, Ustun TS, Sarker MR, Hannan MA, Mohamed R, Ali JA, Mekhilef S, Milad A (2021) Artificial neural networks based optimization techniques: a review. Electronics 10(21):2689

Dietterich TG (1997) Machine-learning research. AI Mag 18(4):97–136

Turkoglu B, Uymaz SA, Kaya E (2022) Clustering analysis through artificial algae algorithm. Int J Mach Learn Cybern 13(4):1179–1196

McCulloch WS, Pitts W (1943) A logical calculus of the ideas immanent in nervous activity. Bull Math Biophys 5(4):115–133

Si T, Bagchi J, Miranda PBC (2022) Artificial neural network training using metaheuristics for medical data classification: An experimental study. Expert Syst Appl. https://doi.org/10.1016/j.eswa.2021.116423

Faramarzi A, Heidarinejad M, Stephens B, Mirjalili S (2020) Equilibrium optimizer: a novel optimization algorithm. Knowledge-Based Syst 191:105190. https://doi.org/10.1016/j.knosys.2019.105190

Abualigah L, Diabat A, Mirjalili S, Abd Elaziz M, Gandomi AH (2021) The arithmetic optimization algorithm. Comput Methods Appl Mech Eng 376:113609

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007

Yang X-S (2010) Nature-inspired metaheuristic algorithms. Luniver press

Karaboga D, et al (2005) An idea based on honey bee swarm for numerical optimization. Technical report TR06, Erciyes University, Engineering Faculty, Computer

Yang X-S, Gandomi AH (2012) Bat algorithm: a novel approach for global engineering optimization. Engineering computations

Mirjalili S (2016) Dragonfly algorithm: a new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput Appl 27(4):1053–1073

Karaboga D, Akay B, Ozturk C (2007) Artificial bee colony (abc) optimization algorithm for training feed-forward neural networks. In: International conference on modeling decisions for artificial intelligence, pp 318–329. Springer

Ghaleini EN, Koopialipoor M, Momenzadeh M, Sarafraz ME, Mohamad ET, Gordan B (2019) A combination of artificial bee colony and neural network for approximating the safety factor of retaining walls. Eng Comput 35(2):647–658

Shah H, Ghazali R, Nawi NM (2011) Using artificial bee colony algorithm for mlp training on earthquake time series data prediction. arXiv preprint arXiv:1112.4628

Blum C, Socha K (2005) Training feed-forward neural networks with ant colony optimization: an application to pattern classification. In: Fifth international conference on hybrid intelligent systems (HIS’05), p 6. IEEE

Yamany W, Tharwat A, Hassanin MF, Gaber T, Hassanien AE, Kim T-H (2015) A new multi-layer perceptrons trainer based on ant lion optimization algorithm. In: 2015 Fourth international conference on information science and industrial applications (ISI), pp 40–45. IEEE

Mirjalili S, Mirjalili SM, Lewis A (2014) Let a biogeography-based optimizer train your multi-layer perceptron. Inf Sci 269:188–209

Askarzadeh A, Rezazadeh A (2013) Artificial neural network training using a new efficient optimization algorithm. Appl Soft Comput 13(2):1206–1213

Tran-Ngoc H, Khatir S, De Roeck G, Bui-Tien T, Wahab MA (2019) An efficient artificial neural network for damage detection in bridges and beam-like structures by improving training parameters using cuckoo search algorithm. Eng Struct 199:109637

Valian E, Mohanna S, Tavakoli S (2011) Improved cuckoo search algorithm for feedforward neural network training. Int J Artif Intell Appl 2(3):36–43

Yasen M, Al-Madi N, Obeid N (2018) Optimizing neural networks using dragonfly algorithm for medical prediction. In: 2018 8th international conference on computer science and information technology (CSIT), pp 71–76. IEEE

Xu J, Yan F (2019) Hybrid nelder-mead algorithm and dragonfly algorithm for function optimization and the training of a multilayer perceptron. Arab J Sci Eng 44(4):3473–3487

Mandal S, Saha G, Pal RK (2015) Neural network training using firefly algorithm. Glob J Adv Eng Sci 1(1):7–11

Dutta RK, Karmakar NK, Si T (2016) Artificial neural network training using fireworks algorithm in medical data mining. Int J Comput Appl 137(1):1–5

Heidari AA, Faris H, Aljarah I, Mirjalili S (2019) An efficient hybrid multilayer perceptron neural network with grasshopper optimization. Soft Comput 23(17):7941–7958

Mirjalili S (2015) How effective is the grey wolf optimizer in training multi-layer perceptrons. Appl Intell 43(1):150–161

Kumar N, Kumar D (2020) Classification using artificial neural network optimized with bat algorithm

Meissner M, Schmuker M, Schneider G (2006) Optimized particle swarm optimization (opso) and its application to artificial neural network training. BMC Bioinf 7(1):1–11

Abusnaina AA, Ahmad S, Jarrar R, Mafarja M (2018) Training neural networks using salp swarm algorithm for pattern classification. In: Proceedings of the 2nd international conference on future networks and distributed systems, pp 1–6

Bairathi D, Gopalani D (2021) Numerical optimization and feed-forward neural networks training using an improved optimization algorithm: multiple leader salp swarm algorithm. Evol Intell 14(3):1233–1249

Gulcu Ş (2020) Training of the artificial neural networks using states of matter search algorithm. Int J Intell Syst Appl Eng 8(3):131–136

Turkoglu B, Kaya E (2020) Training multi-layer perceptron with artificial algae algorithm. Eng Sci Technol Int J 23(6):1342–1350

Kulluk S, Ozbakir L, Baykasoglu A (2012) Training neural networks with harmony search algorithms for classification problems. Eng Appl Artif Intell 25(1):11–19

Aljarah I, Faris H, Mirjalili S (2018) Optimizing connection weights in neural networks using the whale optimization algorithm. Soft Comput 22(1):1–15

Irmak B, Gülcü Ş (2021) Training of the feed-forward artificial neural networks using butterfly optimization algorithm. MANAS J Eng 9(2):160–168

Alwaisi S, Baykan OK (2017) Training of artificial neural network using metaheuristic algorithm. Int J Intell Syst Appl Eng 5:12–16

Agushaka JO, Ezugwu AE, Abualigah L (2022) Dwarf mongoose optimization algorithm. Comput Methods Appl Mech Eng 391:114570

Abualigah L, Yousri D, Abd Elaziz M, Ewees AA, Al-Qaness MA, Gandomi AH (2021) Aquila optimizer: a novel meta-heuristic optimization algorithm. Comput Indus Eng 157:107250

Abualigah L, Abd Elaziz M, Sumari P, Geem ZW, Gandomi AH (2022) Reptile search algorithm (rsa): a nature-inspired meta-heuristic optimizer. Expert Syst Appl 191:116158

Oyelade ON, Ezugwu AE-S, Mohamed TI, Abualigah L (2022) Ebola optimization search algorithm: a new nature-inspired metaheuristic optimization algorithm. IEEE Access 10:16150–16177

Xie L, Han T, Zhou H, Zhang Z-R, Han B, Tang A (2021) Tuna swarm optimization: a novel swarm-based metaheuristic algorithm for global optimization. Comput Intell Neurosci 2021

Naruei I, Keynia F (2021) A new optimization method based on coot bird natural life model. Expert Syst Appl 183:115352. https://doi.org/10.1016/j.eswa.2021.115352

Hussien AM, Turky RA, Alkuhayli A, Hasanien HM, Tostado-Véliz M, Jurado F, Bansal RC (2022) Coot bird algorithms-based tuning pi controller for optimal microgrid autonomous operation. IEEE Access 10:6442–6458. https://doi.org/10.1109/ACCESS.2022.3142742

Memarzadeh G, Keynia F (2021) A new optimal energy storage system model for wind power producers based on long short term memory and coot bird search algorithm. J Energy Storag 44:103401. https://doi.org/10.1016/j.est.2021.103401

Dua D, Graff C (2017) UCI machine learning repository. http://archive.ics.uci.edu/ml

Adamowski J, Chan HF (2011) A wavelet neural network conjunction model for groundwater level forecasting. J Hydrol 407(1–4):28–40

Pineda LR, Serpa AL (2021) Determination of confidence bounds and artificial neural networks in non-linear optimization problems. Neurocomputing 463:495–504

Kayhan G, İşeri İ (2022) Counter propagation network based extreme learning machine. Neural Process Lett 1–16

Sharma V, Pratap Singh Chouhan A, Bisen D (2022) Prediction of activation energy of biomass wastes by using multilayer perceptron neural network with weka. Mater Today Proc 57:1944–1949. https://doi.org/10.1016/j.matpr.2022.03.051

Kayhan G, Ozdemir AE, Eminoglu İ (2013) Reviewing and designing pre-processing units for rbf networks: initial structure identification and coarse-tuning of free parameters. Neural Comput Appl 22(7):1655–1666

Wilson DR, Martinez TR (2003) The general inefficiency of batch training for gradient descent learning. Neural Netw 16(10):1429–1451. https://doi.org/10.1016/S0893-6080(03)00138-2

Møller M (1993) A scaled conjugate gradient algorithm for fast supervised learning. Neural Netw 6:525–533. https://doi.org/10.1016/S0893-6080(05)80056-5

Othman KMZ, Salih AM (2021) Scaled conjugate gradient ann for industrial sensors calibration. Bull Electr Eng Inf 10(2):680–688

Saeed W, Ghazali R, Deris MM (2015) Content-based sms spam filtering based on the scaled conjugate gradient backpropagation algorithm. In: 2015 12th international conference on fuzzy systems and knowledge discovery (FSKD), pp 675–680

Dokht Shakibjoo A, Moradzadeh M, Moussavi SZ, Mohammadzadeh A, Vandevelde L (2022) Load frequency control for multi-area power systems: a new type-2 fuzzy approach based on levenberg-marquardt algorithm. ISA Trans 121:40–52. https://doi.org/10.1016/j.isatra.2021.03.044

Wilamowski BM, Irwin JD (2011) The industrial electronics handbook. In: Bogdan MW, Irwin JD (eds) Control and mechatronics. CRC Press, Boca Raton

Çavuslu MA, Becerikli Y, Karakuzu C (2012) Levenberg-marquardt algoritması ile ysa eǧitiminin donanımsal gerçeklenmesi (hardware implementation of neural network training with levenberg-marquardt algorithm)

Alan A (2020) Makine öğrenmesi sınıflandırma yöntemlerinde performans metrikleri ile test tekniklerinin farklı veri setleri üzerinde değerlendirilmesi. In: Master’s thesis, Fen Bilimleri Enstitüsü

Koşan MA, Coşkun A, Karacan H (2019) Yapay zekâ yöntemlerinde entropi. J Inf Syst Manag Res 1(1):15–22

Japkowicz N, Shah M (2011) Evaluating learning algorithms: a classification perspective. Cambridge University Press

Cohen J (1960) A coefficient of agreement for nominal scales. Educ Psychol Measur 20(1):37–46. https://doi.org/10.1177/001316446002000104

Delgado R, Tibau X-A (2019) Why cohen’s kappa should be avoided as performance measure in classification. PLOS ONE 14(9):1–26. https://doi.org/10.1371/journal.pone.0222916

Funding

No funding was received for conducting this study.

Author information

Contributions

All authors have contributed equally to the work.

Ethics declarations

Conflict of interest

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Özden, A., İşeri, İ. COOT optimization algorithm on training artificial neural networks. Knowl Inf Syst 65, 3353–3383 (2023). https://doi.org/10.1007/s10115-023-01859-w

DOI: https://doi.org/10.1007/s10115-023-01859-w