Abstract

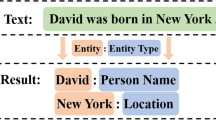

Entity linking is the task of resolving ambiguous mentions in documents to their referent entities in a knowledge graph (KG). Existing solutions rely mainly on three kinds of information: local contextual similarity, global coherence, and prior probability. The type information of mentions, although helpful for precise entity linking, is rarely used. If a mention classifier supplies the type of a mention, candidate entities of a different type can be excluded. Two challenges stand in the way: obtaining type labels of appropriate granularity, and performing entity linking despite the errors propagated from the mention classifier. To address these challenges, we propose a model named type-oriented multi-task entity linking (TMTEL). First, we select types of appropriate granularity from the taxonomy of a KG, a selection we model as a nonlinear integer programming problem. Second, we use a multi-task learning framework to incorporate the selected types into entity linking. The type information is used to enhance the representation of each mention's context, which makes the model more robust to the errors of the mention classifier. Experimental results show that our model outperforms multiple existing solutions.
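The hard type filter mentioned in the abstract, and the way a classifier error propagates through it, can be illustrated with a minimal sketch. The entity names, type labels, and the helper `filter_candidates_by_type` are hypothetical and chosen only for illustration; this is not the TMTEL model itself.

```python
from typing import Dict, List


def filter_candidates_by_type(
    candidates: List[str],
    entity_types: Dict[str, str],
    predicted_type: str,
) -> List[str]:
    """Keep only the candidates whose KG type equals the predicted mention type."""
    return [e for e in candidates if entity_types.get(e) == predicted_type]


# Hypothetical candidates for the mention "Jordan" and their KG types.
entity_types = {
    "Jordan_(country)": "Location",
    "Michael_Jordan": "Person",
    "Jordan_River": "Location",
}
candidates = list(entity_types)

# Suppose the gold entity is Michael_Jordan.
# A correct type prediction narrows the candidates to the gold entity.
correct = filter_candidates_by_type(candidates, entity_types, "Person")
print(correct)  # ['Michael_Jordan']

# A wrong type prediction removes the gold entity, and no later step can recover it.
wrong = filter_candidates_by_type(candidates, entity_types, "Location")
print(wrong)  # ['Jordan_(country)', 'Jordan_River']
```

The second call shows why a hard filter is fragile: one misclassified mention discards the correct entity outright, which is the error-propagation problem the abstract sets out to mitigate.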


Notes

In this paper, entity linking refers to the task of disambiguating an already detected mention. Some works use the same term for the joint task of detecting and disambiguating mentions.

If we use 1/0 to indicate whether type \(t_l \in T\) is selected or not, then all variables of this problem are integers. In addition, the objective function \(C(\cdot )\) and the constraint \(\varGamma (T^*,R)\) are nonlinear. Therefore, this is a nonlinear integer programming problem.
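To make the 0/1 formulation concrete, the following sketch solves a toy selection problem by exhaustive enumeration. The taxonomy, the `cost` function, and the `coverage` constraint are hypothetical stand-ins; they are not the paper's actual \(C(\cdot )\) and \(\varGamma (T^*,R)\), which are not defined in this section. Both stand-ins are nonlinear in the selection vector, so the toy problem has the same nonlinear integer character.

```python
from itertools import product

# Hypothetical toy taxonomy: each type maps to the entities it contains.
taxonomy = {
    "Person": {"e1", "e2", "e3"},
    "Athlete": {"e1", "e2"},
    "Location": {"e4", "e5"},
    "City": {"e4"},
}
types = list(taxonomy)
entities = sorted(set().union(*taxonomy.values()))


def coverage(x):
    """Fraction of entities that belong to at least one selected type."""
    covered = set().union(*(taxonomy[t] for t, xi in zip(types, x) if xi == 1))
    return len(covered) / len(entities)


def cost(x, type_penalty=1.5):
    """Hypothetical nonlinear objective: entity pairs that the selected types
    cannot tell apart, plus a penalty for every selected type."""
    groups = {}
    for e in entities:
        signature = tuple(xi == 1 and e in taxonomy[t] for t, xi in zip(types, x))
        groups[signature] = groups.get(signature, 0) + 1
    confusable_pairs = sum(n * (n - 1) // 2 for n in groups.values())
    return confusable_pairs + type_penalty * sum(x)


# Enumerate every 0/1 assignment and keep those meeting the coverage constraint.
feasible = [x for x in product((0, 1), repeat=len(types)) if coverage(x) >= 0.9]
best = min(feasible, key=cost)
selected = [t for t, xi in zip(types, best) if xi == 1]
print(selected, cost(best))  # ['Person', 'Athlete', 'Location'] 6.5
```

Enumeration visits all \(2^{|T|}\) assignments, so it is only workable for a handful of types. A realistic taxonomy would need a heuristic or approximate search instead.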

The TAC-KBP dataset is no longer available, and the ACE2004 dataset is relatively small, so we do not test on either of them in this paper.

All the datasets can be obtained from https://github.com/lephong/mulrel-nel.

Six types are manually selected from the taxonomy by volunteers.



About this article

Cite this article

Wang, X., Chen, L., Zhu, W. et al. Multi-task entity linking with supervision from a taxonomy. Knowl Inf Syst 65, 4335–4358 (2023). https://doi.org/10.1007/s10115-023-01905-7


DOI: https://doi.org/10.1007/s10115-023-01905-7