Abstract

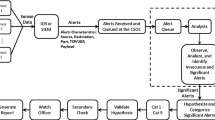

The level of operational effectiveness (LOE) is a color-coded performance metric monitored by the cybersecurity operations center (CSOC). It is determined from the average time to analyze alerts (AvgTTA) in every hour of shift operation, where the time to analyze an alert (TTA) is the sum of the alert's waiting time in the queue and its investigation time by the analysts. Ideally, CSOC managers would set a predetermined baseline target for AvgTTA to be maintained in every hour of shift operation. However, adverse events can create an imbalance in which the alert arrival rate far exceeds the service rate, resulting in a high AvgTTA and therefore a low LOE. Once all analyst resources are exhausted, the only option left to a CSOC manager for restoring the LOE is to discard alerts. The paper proposes two strategies: a value-based strategy developed using a static optimization model, and a reinforcement learning (RL)-based strategy developed using a dynamic optimization model. The paper compares various strategies for discarding alerts against the following desiderata: (1) minimize the number of alerts discarded, (2) ensure the highest utilization of analysts, (3) determine the optimal time in a shift at which alerts must be discarded, and (4) maintain an LOE as close as possible to the baseline target LOE. Results indicate that, overall, the RL strategy performs best among all strategies: it guarantees an AvgTTA below the threshold value in every hour of shift operation while discarding the fewest alerts under adverse events.
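To make the metric definitions concrete, the following is a minimal Python sketch, under stated assumptions, of how TTA, hourly AvgTTA, and a color-coded LOE relate. It assumes a first-come, first-served queue served by identical analysts, assigns each alert to the shift hour in which it arrives, and maps the ratio of AvgTTA to the baseline target onto color bands whose cut-offs are hypothetical. The paper's actual LOE bands and simulation model are not reproduced here, and the names `simulate_tta`, `hourly_avg_tta`, `loe_color`, and `LOE_BANDS` are illustrative only.

```python
import heapq
from collections import defaultdict

# Hypothetical LOE bands keyed on AvgTTA / baseline target.
# These cut-offs are illustrative assumptions, not the paper's values.
LOE_BANDS = [(1.0, "green"), (1.5, "yellow"), (2.0, "orange")]


def simulate_tta(arrivals, service_times, num_analysts):
    """Return each alert's TTA under an assumed FIFO queue with identical analysts.

    arrivals: alert arrival times in hours since shift start, sorted ascending.
    service_times: investigation time of each alert, in hours.
    TTA = waiting time in the queue + investigation time.
    """
    if len(arrivals) != len(service_times):
        raise ValueError("arrivals and service_times must have the same length")
    free_at = [0.0] * num_analysts  # time at which each analyst becomes free
    heapq.heapify(free_at)
    ttas = []
    for arrive, service in zip(arrivals, service_times):
        earliest = heapq.heappop(free_at)      # first analyst to become free
        start = max(arrive, earliest)          # alert waits if all analysts are busy
        finish = start + service
        heapq.heappush(free_at, finish)
        ttas.append(finish - arrive)           # (start - arrive) wait + service
    return ttas


def hourly_avg_tta(arrivals, ttas):
    """AvgTTA per shift hour, assuming each alert counts toward its arrival hour."""
    buckets = defaultdict(list)
    for arrive, tta in zip(arrivals, ttas):
        buckets[int(arrive)].append(tta)
    return {hour: sum(v) / len(v) for hour, v in sorted(buckets.items())}


def loe_color(avg_tta, target):
    """Map an hourly AvgTTA to a hypothetical LOE color relative to the target."""
    ratio = avg_tta / target
    for limit, color in LOE_BANDS:
        if ratio <= limit:
            return color
    return "red"


# Usage: two analysts, six alerts, 0.75 h of investigation each.
arrivals = [0.0, 0.25, 0.5, 0.75, 1.0, 1.25]
ttas = simulate_tta(arrivals, [0.75] * len(arrivals), num_analysts=2)
avg = hourly_avg_tta(arrivals, ttas)
print(avg)                                                    # {0: 0.875, 1: 1.25}
print({h: loe_color(a, target=1.0) for h, a in avg.items()})  # {0: 'green', 1: 'yellow'}
```

In this example the queue builds up faster than two analysts can clear it, so the second hour's AvgTTA exceeds the target and its LOE color degrades; raising the arrival rate further with the same staffing pushes it toward red, which is the imbalance described above. The sketch does not model any alert-discarding strategy.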

Notes

This observation is based on our numerous conversations with cybersecurity analysts and CSOC managers.

Acknowledgements

The authors would like to thank Dr. Cliff Wang of the Army Research Office for the many discussions which served as the inspiration for this research.

Funding

This study was funded by the Army Research Office (grant number W911NF-13-1-0421), by the Office of Naval Research (grant numbers N00014-18-1-2670 and N00014-20-1-2407), and by the National Science Foundation (grant number CNS-1822094).

Ethics declarations

Conflicts of interest

Authors Shah, Ganesan, Jajodia, and Cam declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

About this article

Cite this article

Shah, A., Ganesan, R., Jajodia, S. et al. Maintaining the level of operational effectiveness of a CSOC under adverse conditions. Int. J. Inf. Secur. 21, 637–651 (2022). https://doi.org/10.1007/s10207-021-00573-4

DOI: https://doi.org/10.1007/s10207-021-00573-4