Abstract

The paper studies the steepest descent method applied to the minimization of a twice continuously differentiable function. Under certain conditions, choosing the step length parameter at random, independently of the current iteration, generates a process that is almost surely R-convergent for quadratic functions. The convergence properties of this random procedure are characterized by the mean value function associated with the distribution of the step length parameter. The distribution of the random step length that guarantees the maximum asymptotic convergence rate, independently of the detailed properties of the Hessian matrix of the minimized function, is found, and its uniqueness is proved. The asymptotic convergence rate of this optimally constructed random procedure equals the convergence rate of the Chebyshev polynomial method. Under practical conditions, the efficiency of the proposed random steepest descent method is degraded by numerical noise, particularly for ill-conditioned problems; furthermore, the asymptotic convergence rate is not attained because only a finite number of steps is actually performed. The proposed random procedure is also applied to the minimization of a general non-quadratic function, and an algorithm for estimating the relevant bounds of the Hessian spectrum is constructed. In certain cases, the random procedure may outperform the conjugate gradient method. Interesting results are obtained when minimizing functions with a large number of local minima. Preliminary numerical experiments show that some modifications of the basic method presented here may significantly improve its properties.


Notes

Except when the Hessian matrix at the local minimum of \(V(\mathsf {x})\) is singular.

The indirect way of minimizing \(V(\mathsf {x}_{j+s})\) by s steps of the conjugate gradient method is not considered here because it differs from the steepest descent method (1.1).

The possibility that the Barzilai–Borwein algorithm can converge linearly, with the logarithmic convergence rate \(\sim \frac{2}{\kappa (\mathsf {A})+1}\), when an inappropriate starting point is chosen was already mentioned in [2]. Nevertheless, this situation occurs with zero probability.

In the first step, the vectors \(\mathsf {r}_{j-1}-\mathsf {r}_j\) and the values \(\gamma _j\) for \(j\le 0\) are not defined, so the values \(\overline{\lambda }^{(1)}\), \(\underline{\Lambda }^{(1)}\) are expressed directly using the vector \(\mathsf {Ar}_0\).

The importance of the deviations relative to the R-linear decrease of the residual norm diminishes as the number of steps increases, but the limited accuracy of the computation does not allow us to observe this.

The convergence factor (3.15) is applicable only to the eigenspaces with \(\lambda _i\in \langle \overline{\lambda }^{(n)},\underline{\Lambda }^{(n)}\rangle \).

Narrowing the interval of possible values \(l_j\) suppresses the coordinates \(\beta _i^{(n)}\) more efficiently for \(\lambda _i\) in the current interval \(\langle \overline{\lambda }^{(n)},\underline{\Lambda }^{(n)}\rangle \). The number of eigenvalues \(\lambda _i\not \in \langle \overline{\lambda }^{(n)},\underline{\Lambda }^{(n)}\rangle \) is much smaller than the problem dimension M, so the lower efficiency of the process on the related eigenspaces does not significantly affect the total value \(\Vert \mathsf {r}_n\Vert \) while the decrease in the norm \(\Vert \mathsf {r}_n\Vert \) is small.

The algorithm itself does not require second derivatives; nevertheless, they are implicitly present in the considerations of Appendix 6.

For the other methods studied, the number of steps equals the number of computed gradients.

A “safety” parameter of this kind is implicitly present in the algorithm as a consequence of the inexact estimates \(\overline{\lambda }^{(n)}>\lambda \), \(\underline{\Lambda }^{(n)}<\Lambda \).

Algorithm 2 is a special realization of this idea.

In fact, the attempt to interpret the Barzilai–Borwein method in a nonstandard way was the origin of the work resulting in this article.

The mean value \(\widehat{E}_R(\lambda _i)\) exists by the definition of the point of the first type relative to the measure \(\phi \) and the condition \(\beta ^{(0)}_i\ne 0\) is included in the assumption \(\lambda _i\in \mathcal {S}_1^{(\phi )}(\mathsf {A,b})\).

We avoid computing the gradient \(\mathsf {g}(\mathsf {x}_0-\gamma _1\mathsf {g}_0)\) until it is needed to construct the next step.

References

H. Akaike: On a successive transformation of probability distribution and its application to the analysis of the optimum gradient method. Ann. Inst. Statist. Math. Tokyo 11 (1959), 1–16

J. Barzilai and J. M. Borwein: Two-point step size gradient methods. IMA Journal of Numerical Analysis 8 (1988), 141–148

A. Cauchy: Méthode générale pour la résolution des systèmes d'équations simultanées. Comptes Rendus Hebd. Séances Acad. Sci. 25 (1847), 536–538

Y. H. Dai and R. Fletcher: Projected Barzilai-Borwein methods for large-scale box-constrained quadratic programming. Numerische Mathematik 100 (2005), No. 1, 21–47

Y. H. Dai and L. Z. Liao: \(R\)-linear convergence of the Barzilai-Borwein gradient method. IMA Journal of Numerical Analysis 22 (2002), 1–10

R. Fletcher and C. M. Reeves: Function minimization by conjugate gradients. The Computer Journal (1964), 149–154

G. E. Forsythe: On the asymptotic directions of the \(s\)-dimensional optimum gradient method. Numerische Mathematik 11 (1968), 57–76

A. Friedlander, J. M. Martínez, N. Molina and M. Raydan: Gradient method with retards and generalizations. SIAM J. Numer. Anal. 36 (1999), 275–289

Z. Kalousek: Appeal of inexact calculations. In: Proceedings of the conference “Modern mathematical methods in engineering”, VŠB-TU Ostrava, 2013

F. Luengo and M. Raydan: Gradient method with dynamical retards for large-scale optimization problems. Electronic Transactions on Numerical Analysis 16 (2003), 186–193

Y. Narushima, T. Wakamatsu and H. Yabe: Extended Barzilai-Borwein method for unconstrained minimization problems. Pacific Journal of Optimization 6 (2010), 591–613

L. Pronzato, P. Wynn and A. A. Zhigljavsky: A dynamical-system analysis of the optimum \(s\)-gradient algorithm. In: Optimal Design and Related Areas in Optimization and Statistics (L. Pronzato and A. A. Zhigljavsky, eds.), Springer, 2009, 39–80

L. Pronzato and A. A. Zhigljavsky: Gradient algorithms for quadratic optimization with fast convergence rates. Computational Optimization and Applications 50 (2011), 597–617

M. Raydan: The Barzilai and Borwein gradient method for the large scale unconstrained minimization problem. SIAM Journal on Optimization 7 (1997), 26–33

M. Raydan and B. F. Svaiter: Relaxed steepest descent and Cauchy-Barzilai-Borwein method. Computational Optimization and Applications 21 (2002), 155–167


Additional information

Communicated by Michael Overton.

Appendices

Appendix 1: Proof of Theorem 1

First, we prove an important property of the points of the second type:

Lemma 3

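If \(\hat{x}\in \langle \lambda ,\Lambda \rangle \) is a point of the second type relative to the measure \(\phi \), then
$$\begin{aligned} \lim _{\delta \rightarrow 0+}\ \int \limits _{\langle \lambda ,\Lambda \rangle \setminus (\hat{x}-\delta ,\hat{x}+\delta )} \ln \left| 1-\frac{\hat{x}}{l}\right| \mathrm {d}\phi (l)=-\infty \ . \end{aligned}$$

Proof

Since \(\phi (\{\hat{x}\})=0\), it holds, at least formally,
$$\begin{aligned} \widehat{E}_R(\hat{x})=\int \limits _{\langle \lambda ,\Lambda \rangle } \ln \left| 1-\frac{\hat{x}}{l}\right| \mathrm {d}\phi (l) =\lim _{\delta \rightarrow 0+}\ \int \limits _{\langle \lambda ,\Lambda \rangle \setminus (\hat{x}-\delta ,\hat{x}+\delta )} \ln \left| 1-\frac{\hat{x}}{l}\right| \mathrm {d}\phi (l)\ . \end{aligned}\tag{8.1}$$

The value \(\widehat{E}_R(\hat{x})\) is undefined; therefore, the limit on the right-hand side of (8.1) is either infinite or does not exist at all. The function
$$\begin{aligned} g(\delta )=\int \limits _{\langle \lambda ,\Lambda \rangle \setminus (\hat{x}-\delta ,\hat{x}+\delta )} \ln \left| 1-\frac{\hat{x}}{l}\right| \mathrm {d}\phi (l) \end{aligned}$$

is non-decreasing on \((0,\frac{\hat{x}}{2})\), as follows from
$$\begin{aligned} g(\delta _1)-g(\delta _2)=\int \limits _{\langle \lambda ,\Lambda \rangle \cap \left[ (\hat{x}-\delta _2,\hat{x}-\delta _1\rangle \cup \langle \hat{x}+\delta _1,\hat{x}+\delta _2)\right] } \ln \left| 1-\frac{\hat{x}}{l}\right| \mathrm {d}\phi (l)\le 0 \end{aligned}\tag{8.2}$$

for \(0<\delta _1<\delta _2<\frac{\hat{x}}{2}\), because on the integration domain in (8.2) the argument of the logarithm satisfies
$$\begin{aligned} \left| 1-\frac{\hat{x}}{l}\right| =\frac{|l-\hat{x}|}{l}<\frac{\delta _2}{\hat{x}-\delta _2}<1\ , \end{aligned}$$

so a non-positive function is integrated in (8.2). The limit of the non-decreasing function \(g(\delta )\) in (8.1) exists (possibly as \(-\infty \)), and since it is not finite by assumption, it must be equal to \(-\infty \). \(\square \)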

We now prove the individual statements of Theorem 1. The process converges to the solution if and only if the residuals \(\mathsf {r}_n\) converge to zero, so we work with the sequence of residuals.

1. We follow the coordinates \(\beta _i^{(j)}\) of the residual \(\mathsf {r}_j\) according to (2.1). The set \(\mathcal {S}_3^{(\phi )}(\mathsf {A,b})\) is finite; therefore, the value

$$\begin{aligned} s_{\phi ,\mathsf {A,b}} =\min _{\lambda _i\in \mathcal {S}_3^{(\phi )}(\mathsf {A,b})} \phi \left( \{\lambda _i\}\right) >0 \end{aligned}$$exists. This result implies the inequality

$$\begin{aligned} \Pr \left( l_j\ne \lambda _i\right) \le 1-s_{\phi ,\mathsf {A,b}} \end{aligned}$$for any eigenvalue \(\lambda _i\in \mathcal {S}(\mathsf {A,b})\). If \(l_j=\lambda _i\) for at least one \(j\in \{1,2,\dots ,n\}\), then \(\beta _i^{(n)}=0\) as a consequence of (2.2). Since the values \(l_j\) are independent for different j, we obtain

$$\begin{aligned} \Pr \left( \beta _i^{(n)}\ne 0\right) =\Pr \biggl (\bigcap _{j=1}^n\left[ l_j\ne \lambda _i\right] \biggr ) =\prod _{j=1}^n\Pr \left( l_j\ne \lambda _i\right) \le (1-s_{\phi ,\mathsf {A,b}})^n\ . \end{aligned}\tag{8.3}$$
The residual \(\mathsf {r}_n\) is nonzero if and only if at least one of its coordinates \(\beta _i^{(n)}\) is nonzero, so

$$\begin{aligned} \Pr \left( \mathsf {r}_n\ne 0\right) =\Pr \biggl (\bigcup _{i=1}^N\left[ \beta _i^{(n)}\ne 0\right] \biggr ) \le \sum _{i=1}^N\Pr \left( \beta _i^{(n)}\ne 0\right) \le N(1-s_{\phi ,\mathsf {A,b}})^n\ , \end{aligned}$$
and this probability converges to zero as \(n\rightarrow \infty \).

2. In the case \(\mathcal {S}_1^{(\phi )}(\mathsf {A,b})\ne \emptyset \), relations (2.3) and (2.4) together with the strong law of large numbers give

$$\begin{aligned} \lim _{n\rightarrow \infty }\frac{1}{n}\ln \frac{|\beta ^{(n)}_i|}{|\beta ^{(0)}_i|} =\lim _{n\rightarrow \infty }\frac{1}{n}\sum _{j=1}^n\widetilde{R}_i^{(j)} =\widehat{E}_R(\lambda _i) \end{aligned}\tag{8.4}$$
for any \(\lambda _i\in \mathcal {S}_1^{(\phi )}(\mathsf {A,b})\) with probability one.\(^{13}\)

(a) Let \(E_{\phi ,\mathsf {A,b}}>0\). Then there exists an eigenvalue \(\lambda _{\hat{\imath }}\in \mathcal {S}_1^{(\phi )}(\mathsf {A,b})\) such that \(\widehat{E}_R(\lambda _{\hat{\imath }})=E_{\phi ,\mathsf {A,b}}>0\). We estimate the probability that there exists an infinite bounded subsequence of coordinates \(\{\beta _{\hat{\imath }}^{(n_k)}\}_{k>0}\). This event occurs if, for some \(C\in \mathcal {R}\) and all \(k\in \mathcal {N}\),

$$\begin{aligned} \frac{1}{n_k}\ln \frac{|\beta ^{(n_k)}_{\hat{\imath }}|}{|\beta ^{(0)}_{\hat{\imath }}|} <\frac{1}{n_k}\ln \frac{C}{|\beta ^{(0)}_{\hat{\imath }}|}\ . \end{aligned}\tag{8.5}$$
A necessary condition for (8.5) is

$$\begin{aligned} \limsup _{k\rightarrow \infty }\frac{1}{n_k}\ln \frac{|\beta ^{(n_k)}_{\hat{\imath }}|}{|\beta ^{(0)}_{\hat{\imath }}|} \le \limsup _{k\rightarrow \infty }\frac{1}{n_k}\ln \frac{C}{|\beta ^{(0)}_{\hat{\imath }}|} =0\ . \end{aligned}\tag{8.6}$$
Relation (8.4) applied to \(\lambda _{\hat{\imath }}\),

$$\begin{aligned} \lim _{n\rightarrow \infty }\frac{1}{n}\ln \frac{|\beta ^{(n)}_{\hat{\imath }}|}{|\beta ^{(0)}_{\hat{\imath }}|} =\widehat{E}_R(\lambda _{\hat{\imath }})>0 \end{aligned}$$characterizes an event that is incompatible with the event characterized by (8.6). Therefore, the probability of the existence of an infinite bounded subsequence of coordinates \(\{\beta _{\hat{\imath }}^{(n_k)}\}_{k>0}\) is

$$\begin{aligned} \Pr \left( \forall k\in \mathcal {N}:|\beta ^{(n_k)}_{\hat{\imath }}|<C\right)\le & {} \Pr \biggl (\limsup _{k\rightarrow \infty } \frac{1}{n_k}\ln \frac{|\beta ^{(n_k)}_{\hat{\imath }}|}{|\beta ^{(0)}_{\hat{\imath }}|}\le 0\biggr )\ \le \\\le & {} 1-\Pr \biggl (\lim _{n\rightarrow \infty }\frac{1}{n}\ln \frac{|\beta ^{(n)}_{\hat{\imath }}|}{|\beta ^{(0)}_{\hat{\imath }}|}>0\biggr )\ =\ 0, \end{aligned}$$and it implies

$$\begin{aligned} \lim _{n\rightarrow \infty }|\beta ^{(n)}_{\hat{\imath }}|=\infty \end{aligned}$$with probability one. Since

$$\begin{aligned} \Vert \mathsf {r}_n\Vert \ge \max _{\lambda _i\in \mathcal {S}^{(\phi )}(\mathsf {A,b})}|\beta ^{(n)}_i|\ , \end{aligned}$$the sequence of residuals and consequently the sequence of the iterations are almost surely divergent.

(b) We prove the convergence of the process in cases 2b) and 3) together. We study the convergence of the sequence \(\{\beta _i^{(n)}\}_{n\ge 0}\) for every \(i=1,2,\dots ,N\) such that \(\lambda _i\in \mathcal {S}^{(\phi )}(\mathsf {A,b})\). If \(\lambda _i\in \mathcal {S}_3^{(\phi )}(\mathsf {A,b})\), relation (8.3) implies

$$\begin{aligned} \Pr \left( \lim _{n\rightarrow \infty }\beta _i^{(n)}=0\right) =1\ . \end{aligned}\tag{8.7}$$
For \(\lambda _i\in \mathcal {S}_1^{(\phi )}(\mathsf {A,b})\), the mean value \(\widehat{E}_R(\lambda _i)\) exists, and analogously to (8.4),

$$\begin{aligned} \lim _{n\rightarrow \infty }\frac{1}{n}\ln \frac{|\beta ^{(n)}_i|}{|\beta ^{(0)}_i|} =\lim _{n\rightarrow \infty }\frac{1}{n}\sum _{j=1}^n\widetilde{R}_i^{(j)} =\widehat{E}_R(\lambda _i)\le E_{\phi ,\mathsf {A,b}}<0 \end{aligned}\tag{8.8}$$
with probability one. Let us suppose

$$\begin{aligned} \lim _{n\rightarrow \infty }\beta _i^{(n)}\ne 0\ . \end{aligned}$$We formulate this assumption (that \(\beta _i^{(n)}\) does not converge to zero) in the standard way: there exist \(\varepsilon >0\) and an infinite subsequence \(\{n_k\}_{k\ge 1}\subset \mathcal {N}\) such that \(|\beta _i^{(n_k)}|\ge \varepsilon \) for all \(k\in \mathcal {N}\). The technique applied above gives

$$\begin{aligned} \Pr \left( \lim _{n\rightarrow \infty }\beta _i^{(n)}\ne 0\right)= & {} \Pr \left( \forall k\in \mathcal {N}:|\beta _i^{(n_k)}|\ge \varepsilon \right) \nonumber \\\le & {} \Pr \biggl (\liminf _{k\rightarrow \infty } \frac{1}{n_k}\ln \frac{|\beta _i^{(n_k)}|}{|\beta ^{(0)}_i|}\ge 0\biggr )\\\le & {} 1-\Pr \biggl (\lim _{n\rightarrow \infty }\frac{1}{n}\ln \frac{|\beta ^{(n)}_i|}{|\beta ^{(0)}_i|}<0\biggr )\ =\ 0\ . \end{aligned}$$Thus, relation (8.7) holds for \(\lambda _i\in \mathcal {S}_1^{(\phi )}(\mathsf {A,b})\) as well. Let \(\lambda _i\in \mathcal {S}_2^{(\phi )}(\mathsf {A,b})\). Then Lemma 3 implies that for any \(K<0\) there exists \(\delta _K>0\) such that

$$\begin{aligned} \int \limits _{ \langle \lambda ,\Lambda \rangle \setminus (\lambda _i-\delta _K,\lambda _i+\delta _K)} \ln \left| 1-\frac{\lambda _i}{l}\right| \mathrm {d}\phi (l)\le K\ , \end{aligned}\tag{8.9}$$
this integral being finite. Because the limit in Lemma 3 equals \(-\infty \), (8.9) holds for all sufficiently small \(\delta _K\), so we may assume \(\delta _K<\frac{\lambda _i}{2}\). We define the random variable

$$\begin{aligned} Q_i^{(j)}=\left\{ \begin{array}{ll} \ln \left| 1-\frac{\lambda _i}{l_j}\right| &{}\hbox {for} \quad l_j\in \langle \lambda ,\Lambda \rangle \setminus (\lambda _i-\delta _K,\lambda _i+\delta _K)\ ,\\ 0&{}\hbox {for}\quad l_j\in \langle \lambda ,\Lambda \rangle \cap (\lambda _i-\delta _K,\lambda _i+\delta _K) \end{array}\right. \end{aligned}$$The variable \(Q_i^{(j)}\) has the mean value \(E(Q_i)\le K\) due to (8.9), and for every realized value \(l_j\) of the random variable L, \(\widetilde{R}_i^{(j)}\le Q_i^{(j)}\), since \(\ln \left| 1-\frac{\lambda _i}{l_j}\right| <0\) whenever \(|l_j-\lambda _i|<\delta _K<\frac{\lambda _i}{2}\). Therefore,

$$\begin{aligned} \limsup _{n\rightarrow \infty }\frac{1}{n}\ln \frac{|\beta ^{(n)}_i|}{|\beta ^{(0)}_i|} =\limsup _{n\rightarrow \infty }\frac{1}{n}\sum _{j=1}^n\widetilde{R}_i^{(j)} \le \lim _{n\rightarrow \infty }\frac{1}{n}\sum _{j=1}^n Q_i^{(j)} =E(Q_i)\le K<0 \end{aligned}\tag{8.10}$$
almost surely (by the strong law of large numbers applied to the independent variables \(Q_i^{(j)}\)), and relation (8.7) can be proved by the same method as for \(\lambda _i\in \mathcal {S}_1^{(\phi )}(\mathsf {A,b})\). We define the vectors

$$\begin{aligned} \mathsf {r}_n^{(k)}=\sum _{i=1}^k\beta _i^{(n)}\mathsf {e}_i \end{aligned}$$for \(k=0,1,\dots ,N\); thus, \(\mathsf {r}_n^{(N)}=\mathsf {r}_n\), \(\mathsf {r}_n^{(0)}=\mathsf {0}\). We express the probabilities

$$\begin{aligned} \Pr \left( \lim _{n\rightarrow \infty }\Vert \mathsf {r}_n^{(k)}-\mathsf {r}_n^{(k-1)}\Vert =0\right) =\Pr \left( \lim _{n\rightarrow \infty }|\beta _k^{(n)}|=0\right) =1 \end{aligned}$$as a consequence of (8.7) for any \(k=1,2,\dots ,N\). It implies

$$\begin{aligned} \lim _{n\rightarrow \infty }\mathsf {r}_n =\lim _{n\rightarrow \infty }\mathsf {r}_n^{(N)} =\lim _{n\rightarrow \infty }\mathsf {r}_n^{(N-1)} =\dots =\lim _{n\rightarrow \infty }\mathsf {r}_n^{(0)}=0 \end{aligned}$$with probability one. As for the rate of convergence, we evaluate the limits \(\displaystyle \lim _{n\rightarrow \infty }\root n \of {|\beta _i^{(n)}|}\). If \(\beta _i^{(0)}=0\), all terms of this sequence are zero; otherwise,

$$\begin{aligned} \lim _{n\rightarrow \infty }\root n \of {|\beta _i^{(n)}|} =\lim _{n\rightarrow \infty }\root n \of {\frac{|\beta _i^{(n)}|}{|\beta _i^{(0)}|}}\ . \end{aligned}\tag{8.11}$$
If \(\lambda _i\in \mathcal {S}_3^{(\phi )}(\mathsf {A,b})\), relation (8.3) implies that the limit (8.11) is almost surely zero, because \(\beta _i^{(n)}\) vanishes for all sufficiently large n with probability one. For \(\lambda _i\in \mathcal {S}_2^{(\phi )}(\mathsf {A,b})\), relation (8.10) gives

$$\begin{aligned} \limsup _{n\rightarrow \infty }\root n \of {|\beta _i^{(n)}|} =e^{~\limsup \limits _{n\rightarrow \infty }\frac{1}{n}\ln \frac{|\beta _i^{(n)}|}{|\beta _i^{(0)}|}} \le e^K \end{aligned}\tag{8.12}$$
with probability one for arbitrary \(K<0\); therefore, the limit (8.11) is almost surely zero in this case. Analogously, the relation (8.8) implies for \(\lambda _i\in \mathcal {S}_1^{(\phi )}(\mathsf {A,b})\)

$$\begin{aligned} \lim _{n\rightarrow \infty }\root n \of {|\beta _i^{(n)}|} =e^{\widehat{E}_R(\lambda _i)}\ . \end{aligned}$$with probability one. Now, we can evaluate

$$\begin{aligned} \lim _{n\rightarrow \infty }\root n \of {\frac{\Vert \mathsf {r}_n\Vert }{\Vert \mathsf {r}_0\Vert }}= & {} \lim _{n\rightarrow \infty }\root 2n \of {\sum _{i=1}^N\left( \beta _i^{(n)}\right) ^2}\nonumber \\\ge & {} \lim _{n\rightarrow \infty }\root 2n \of {\max _{i\le N}\left( \beta _i^{(n)}\right) ^2} =\max _{i\le N}\lim _{n\rightarrow \infty }\root n \of {|\beta _i^{(n)}|}\ ; \end{aligned}\tag{8.13}$$
the last equality holds provided that all the limits on the right-hand side of (8.13) exist, and their almost sure existence follows from the preceding results. Analogously,

$$\begin{aligned} \lim _{n\rightarrow \infty }\root n \of {\frac{\Vert \mathsf {r}_n\Vert }{\Vert \mathsf {r}_0\Vert }} \le \lim _{n\rightarrow \infty }\root 2n \of { N\cdot \max _{i\le N}\left( \beta _i^{(n)}\right) ^2} =\max _{i\le N}\lim _{n\rightarrow \infty }\root n \of {|\beta _i^{(n)}|} \end{aligned}$$with probability one, thus

$$\begin{aligned} \lim _{n\rightarrow \infty }\root n \of {\frac{\Vert \mathsf {r}_n\Vert }{\Vert \mathsf {r}_0\Vert }} =\max _{i\le N}\lim _{n\rightarrow \infty }\root n \of {|\beta _i^{(n)}|} \end{aligned}\tag{8.14}$$
almost surely. In the case \(\mathcal {S}_1^{(\phi )}(\mathsf {A,b})\ne \emptyset \), the limits for \(\lambda _i\notin \mathcal {S}_1^{(\phi )}(\mathsf {A,b})\) vanish, and definition (2.5) gives

$$\begin{aligned} \lim _{n\rightarrow \infty }\root n \of {\frac{\Vert \mathsf {r}_n\Vert }{\Vert \mathsf {r}_0\Vert }} =\max _{\lambda _i\in \mathcal {S}_1^{(\phi )}(\mathsf {A,b})}e^{\widehat{E}_R(\lambda _i)} =e^{E_{\phi ,\mathsf {A,b}}} \end{aligned}\tag{8.15}$$
with probability one. Taking the logarithm of (8.15) yields (2.6).

(c) In the case \(E_{\phi ,\mathsf {A,b}}=0\), the technique used in the proofs of statements 2a) and 2b) cannot be applied; nevertheless, if the process converges to the solution at all, then, according to (8.14),

$$\begin{aligned} \lim _{n\rightarrow \infty }\root n \of {\frac{\Vert \mathsf {r}_n\Vert }{\Vert \mathsf {r}_0\Vert }} =\max _{\lambda _i\in \mathcal {S}_1^{(\phi )}(\mathsf {A,b})}e^{\widehat{E}_R(\lambda _i)}=1, \end{aligned}$$and the convergence is almost surely R-sublinear.


3. When \(\mathcal {S}_1^{(\phi )}(\mathsf {A,b})=\emptyset \), relations (8.3) and (8.12) imply

$$\begin{aligned} \lim _{n\rightarrow \infty }\root n \of {|\beta _i^{(n)}|}=0 \end{aligned}$$for all the eigenvalues \(\lambda _i\in \mathcal {S}(\mathsf {A,b})\). Therefore, according to (8.14),

$$\begin{aligned} \lim _{n\rightarrow \infty }\root n \of {\frac{\Vert \mathsf {r}_n\Vert }{\Vert \mathsf {r}_0\Vert }} =\max _{i\le N}(0)=0 \end{aligned}$$almost surely, which proves statement 3.
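Two of the almost-sure statements proved above can be illustrated by a small simulation. The sketch below is our own illustration, not the author's code. It assumes a quadratic \(\frac{1}{2}\mathsf{x}^T\mathsf{Ax}-\mathsf{b}^T\mathsf{x}\) with the Hessian already diagonal, so that a step \(\mathsf{x}\leftarrow \mathsf{x}-\mathsf{g}/l_j\) maps the gradient to \((\mathsf{I}-\mathsf{A}/l_j)\mathsf{g}\), i.e. multiplies each eigen-coordinate \(\beta_i\) by \((1-\lambda_i/l_j)\). The function names, eigenvalues, and sample sizes are hypothetical. For statement 1, it draws \(l_j\) uniformly from the eigenvalues themselves (each atom then has mass \(s=1/N\)) and compares the empirical frequency of \(\mathsf{r}_n\ne 0\) with the bound \(N(1-s)^n\) from the proof. For statement 2b), it draws \(l_j\) from the arcsine law with density \(1/(\pi\sqrt{(\Lambda-l)(l-\lambda)})\), an assumed choice whose weight matches the function f used in Appendix 4, and compares \(\frac{1}{n}\ln(\Vert\mathsf{r}_n\Vert/\Vert\mathsf{r}_0\Vert)\) with \(\ln\frac{\sqrt{\Lambda}-\sqrt{\lambda}}{\sqrt{\Lambda}+\sqrt{\lambda}}\). That value, which \(\widehat{E}_R(x)\) takes for this law at every \(x\in\langle\lambda,\Lambda\rangle\), comes from classical logarithmic-potential theory and is our addition, not a result quoted from the text.

```python
import math
import random


def prob_nonzero_residual(eigs, n_steps, trials, seed=0):
    """Monte Carlo estimate of Pr(r_n != 0) when every l_j is drawn uniformly
    from the (distinct) eigenvalues, so each atom has mass s = 1/len(eigs)."""
    rng = random.Random(seed)
    nonzero = 0
    for _ in range(trials):
        beta = [1.0] * len(eigs)  # eigen-coordinates of r_0, all nonzero
        for _ in range(n_steps):
            l = rng.choice(eigs)
            # x <- x - g/l maps beta_i to (1 - lambda_i/l) * beta_i;
            # 1 - l/l is exactly 0.0 in IEEE arithmetic, so a hit coordinate vanishes
            beta = [(1.0 - x / l) * b for x, b in zip(eigs, beta)]
        nonzero += any(b != 0.0 for b in beta)
    return nonzero / trials


def arcsine_sample(rng, lam, Lam):
    """l = (Lam+lam)/2 - (Lam-lam)/2 * cos(alpha) with alpha ~ U(0, pi) has
    density 1 / (pi * sqrt((Lam - l)(l - lam))) on (lam, Lam)."""
    alpha = math.pi * rng.random()
    return 0.5 * (Lam + lam) - 0.5 * (Lam - lam) * math.cos(alpha)


def empirical_rate(eigs, lam, Lam, n_steps, seed=0):
    """(1/n) ln(||r_n|| / ||r_0||) for r_0 = (1, ..., 1) in the eigenbasis,
    accumulated as log|beta_i| to avoid overflow and underflow."""
    rng = random.Random(seed)
    log_beta = [0.0] * len(eigs)
    for _ in range(n_steps):
        l = arcsine_sample(rng, lam, Lam)
        for i, x in enumerate(eigs):
            f = abs(1.0 - x / l)
            log_beta[i] += math.log(f) if f > 0.0 else -math.inf
    m = max(log_beta)
    if m == -math.inf:  # every coordinate was annihilated exactly
        return -math.inf
    log_norm = m + 0.5 * math.log(sum(math.exp(2.0 * (b - m)) for b in log_beta))
    return (log_norm - 0.5 * math.log(len(eigs))) / n_steps


# Statement 1: atoms on the eigenvalues, bound N (1 - s)^n
eigs1, n = [1.0, 4.0, 10.0], 10
estimate = prob_nonzero_residual(eigs1, n, trials=20000, seed=42)
bound = len(eigs1) * (1.0 - 1.0 / len(eigs1)) ** n
print(f"Pr(r_n != 0) ~ {estimate:.4f}   bound N(1-s)^n = {bound:.4f}")

# Statement 2b): continuous arcsine law on [lam, Lam]
lam, Lam = 1.0, 100.0
eigs2 = [1.0, 3.7, 25.0, 61.0, 100.0]
predicted = math.log((math.sqrt(Lam) - math.sqrt(lam)) / (math.sqrt(Lam) + math.sqrt(lam)))
observed = empirical_rate(eigs2, lam, Lam, n_steps=40000, seed=1)
print(f"(1/n) ln(||r_n||/||r_0||) ~ {observed:.4f}   predicted E = {predicted:.4f}")
```

Accumulating \(\ln|\beta_i|\) instead of \(\beta_i\) avoids overflow and underflow over tens of thousands of steps; because each coordinate is updated separately, the sketch does not model the round-off coupling between eigenspaces that degrades real floating-point runs.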

Appendix 2: Proofs of Lemma 1 and Lemma 2

The integrals \(I_c\) and \(I_d\) exist for any \(q\in \langle \lambda ,\Lambda \rangle \), since the singularities of the integrands are integrable. After the standard substitution

we get

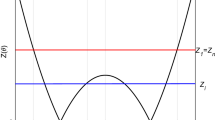

The second integral on the right-hand side of (8.17) is finite and does not depend on the parameter q, so we can neglect it. The first integral (we will denote it as \(I_{c1}\)) can be expressed using the variable \(\varphi \) (see Fig. 12) defined by:

As follows from Fig. 12, the mapping \(\varphi \longleftrightarrow \alpha \) is one-to-one for \(\alpha \in \langle -\frac{\pi }{2},\frac{\pi }{2}\rangle \), \(\varphi \in \langle -\frac{\pi }{2},\frac{\pi }{2}\rangle \) at \(q\in (\lambda ,\Lambda )\); for \(q=\lambda \), a one-to-one correspondence is ensured by the mapping \(\alpha \in (-\frac{\pi }{2},\frac{\pi }{2}\rangle \longleftrightarrow \varphi \in (0,\frac{\pi }{2}\rangle \) together with \(\alpha =-\frac{\pi }{2}\longleftrightarrow \varphi =0\); an analogous one-to-one mapping exists for \(q=\Lambda \). Therefore, we can define the functions \(\varphi (\alpha )\) and \(\alpha (\varphi )\) and use them as needed.

The integral \(I_{c1}\) may be expressed as

The first integral on the right-hand side of (8.20) is again finite and independent of the parameter q, so we neglect it. The remaining integral (denoted by \(I_{c2}\) below) may depend on q through the function \(\varphi (\alpha )\). We express it using \(\varphi \) as the integration variable. The geometric interpretation of the substitution \(\alpha \rightarrow \varphi \) simply gives (see Fig. 12; the angle \(\angle QAC=|\varphi -\alpha |\))

We must distinguish three situations now. For \(q\in (\lambda ,\Lambda )\),

From Fig. 12, it follows that the triangles QCB and \(QCB_1\) are congruent. The points \(B_1\), Q, and A lie on the same straight line, and the triangle \(CAB_1\) is isosceles; therefore, \(\angle QAC=\angle QBC\) and

Moreover, the base of the triangle \(B_1CA\) has the length

thus

and the integral \(I_c\) does not depend on the parameter q.

If \(q=\lambda \), then

In this case, the point Q coincides with the point \(B_1\), and we can directly express the value

and we get

again. We can finish the proof of Lemma 1 for \(q=\Lambda \) analogously.
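Lemma 1 asserts that \(I_c\) does not depend on q. Its defining formula is not reproduced in this excerpt, so the following numerical check rests on an assumption: that \(I_c\) is the integral of \(\ln|1-q/l|\) against the weight \(1/\sqrt{(\Lambda-l)(l-\lambda)}\). The sketch divides by \(\pi\); an unnormalized \(I_c\) would be \(\pi\) times the printed values. The substitution \(l=\frac{\Lambda+\lambda}{2}-\frac{\Lambda-\lambda}{2}\cos\alpha\) (the one used in Appendix 4) turns the weight into \(\mathrm{d}\alpha\), and the midpoint rule in \(\alpha\) (Gauss–Chebyshev nodes) copes adequately with the logarithmic singularity at \(l=q\). The closed-form comparison value \(\ln\frac{\sqrt{\Lambda}-\sqrt{\lambda}}{\sqrt{\Lambda}+\sqrt{\lambda}}\) comes from classical potential theory and is our addition; the function name is hypothetical.

```python
import math


def chebyshev_mean_log(q, lam, Lam, n=100_000):
    """Approximate (1/pi) * integral_{lam}^{Lam} ln|1 - q/l| / sqrt((Lam - l)(l - lam)) dl.

    With l = (Lam+lam)/2 - (Lam-lam)/2 * cos(alpha), the weight becomes d(alpha),
    so the value is the mean of ln|1 - q/l(alpha)| over alpha in (0, pi).
    The midpoint rule in alpha (Gauss-Chebyshev nodes) is used; q should not
    coincide exactly with one of the nodes l(alpha_k)."""
    c, r = 0.5 * (Lam + lam), 0.5 * (Lam - lam)
    total = 0.0
    for k in range(n):
        alpha = (k + 0.5) * math.pi / n
        l = c - r * math.cos(alpha)
        total += math.log(abs(1.0 - q / l))
    return total / n


lam, Lam = 1.0, 100.0
# classical value of the normalized integral for q in [lam, Lam] (our addition)
closed_form = math.log((math.sqrt(Lam) - math.sqrt(lam)) / (math.sqrt(Lam) + math.sqrt(lam)))
values = {q: chebyshev_mean_log(q, lam, Lam) for q in (lam, 7.3, 50.5, 83.0, Lam)}
for q, v in values.items():
    print(f"q = {q:6.2f}:  {v:.5f}   closed form {closed_form:.5f}")
```

The values agree with each other to quadrature accuracy for q in the interior and at both endpoints, which is the content of Lemma 1 under the stated assumption on \(I_c\).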

For the integral (3.2), introducing the variable \(\alpha \) according to (8.16) gives

and the expression inside the absolute value in the numerator may be substituted using the variable \(\varphi \) defined by (8.18), (8.19)

The first integral on the right-hand side is finite and evidently does not depend on the parameter q. Therefore, we deal only with the second integral (denoted by \(I_{d1}\)). We change the integration variable to \(\varphi \). For \(q\in (\lambda ,\Lambda )\), we get, according to (8.21),

(We have used the results (8.22), (8.23) during the calculations.)

If we use the law of cosines for the triangle QCA in Fig. 12, we get the relation

this relation also holds for \(q>\frac{\Lambda +\lambda }{2}\) and may be interpreted as a quadratic equation for the unknown variable \(v(\varphi )\). This quadratic equation always has two different solutions with opposite signs, the positive solution being the value \(v(\varphi )\) itself.

The law of cosines applied to the triangle QCB gives the equation

and so v solves Eq. (8.25) if and only if \((-v)\) satisfies Eq. (8.26). This implies that the negative solution of Eq. (8.25) is \(-v(-\varphi )\). By Vieta's formulas, we can therefore write

After the substitution of these results into (8.24), we get

Let us define the variable \(\psi \in \langle 0,\frac{\pi }{2})\) by the relation

The integral (8.27) expressed using this substitution is

i.e., its value does not depend on the parameter q.

If \(q=\lambda \), the relation (8.24) reduces to

Since the angle \(\angle PCA=2\varphi \) in this case, the value \(v(\varphi )\) can be expressed as

and

The substitution \(\frac{\tan \varphi }{\sqrt{\lambda }}=\frac{\tan \psi }{\sqrt{\Lambda }}\) gives the result (8.28) again; similarly, we get the same result for \(q=\Lambda \).

Appendix 3: Existence of the Integral J

Let us define

The sets \(\Omega _k\) for \(k\in \mathcal {N}\) consist of two V-shaped strips of width \(\frac{\Lambda }{2^k(\kappa (\mathsf {A})-1)}\); these are shown in Fig. 13. Note that if the inequality

is satisfied for some k, then the set \(\Omega _k\) is empty; geometrically, this means that the point \(L_k\) in Fig. 13 lies to the right of the point \(R_k\). Conversely, when \(\Omega _k\ne \emptyset \), then

this information will be used later.

The intersection \(\Omega _i\cap \Omega _j=\emptyset \) for \(i\ne j\) and

Now, we find the bounds for the values f(x, l), when \([x,l]\in \Omega _0\). The argument of the logarithm in the definition of the function f(x, l) is

at the same time

on the whole square \(\langle \lambda ,\Lambda \rangle \times \langle \lambda ,\Lambda \rangle \), so

on \(\Omega _0\). For \(k>0\), the same argument that led to (8.32) gives

on \(\Omega _k\). If \(\Omega _k\ne \emptyset \), then \(\ln \left[ 2^k(\kappa (\mathsf {A})-1)\right] >0\) due to (8.30), and we can estimate

The denominator of the expression (3.3) is bounded from below on \(\Omega _k\ne \emptyset \) by the value

the last inequality is valid as a consequence of (8.30).

We can further estimate the measures

All these results follow easily from Fig. 13. The estimate (8.37) together with (8.31) implies

A sufficient condition for the existence of the integral J defined by (3.4) is the existence of the integral

The condition \(k\ge \log _2\frac{2\kappa (\mathsf {A})}{(\kappa (\mathsf {A})-1)^2}\) follows from the relation (8.29). It holds

for any \(k\ge 0\). The first integral on the right-hand side of (8.38) is zero if \(\Omega _0=\emptyset \); otherwise, according to (8.39),

in any case, its value is finite. For \(k\ge k_0=\min (\log _2\frac{2\kappa (\mathsf {A})}{(\kappa (\mathsf {A})-1)^2},1)\), the terms in the sum on the right-hand side of (8.38) may be estimated by (8.39) using (8.34), (8.35), and (8.36)

The sum

So, the series on the right-hand side of (8.38) is absolutely convergent, and the integral J exists.

Appendix 4: Construction of the Optimal Step Length Distribution

First, we prove

Lemma 4

If a distribution function \(F_L(l)\) generates the function \(\widehat{E}_R(x)\) satisfying the conditions of Theorem 3, then the function \(\widehat{E}_R(x)\) is defined for all \(x\in \langle \lambda ,\Lambda \rangle \).

Proof

We show that for any point \(\hat{x}\) of the second or third type relative to the measure \(\phi \), there exists \(\delta >0\) such that \(\widehat{E}_R(x)<\overline{E}_{\phi }-1\) for every point \(x\in \mathcal {D}_{\delta }(\hat{x})=(\hat{x}-\delta ,\hat{x}+\delta )\cap \langle \lambda ,\Lambda \rangle \) of the first type relative to \(\phi \). Since almost all points \(x\in \mathcal {D}_{\delta }(\hat{x})\) are of the first type relative to \(\phi \) (a consequence of Theorem 2) and \(\nu \left( x\in \mathcal {D}_{\delta }(\hat{x})\right) \ge \frac{2\delta }{\Lambda (\Lambda -\lambda )}>0\), the conditions of Theorem 3 are not satisfied in this case.

Suppose that \(\hat{x}\in \langle \lambda ,\Lambda \rangle \) is a point of the third type relative to the measure \(\phi \). By definition, \(\phi (\{\hat{x}\})=m>0\). If \(x\in \langle \lambda ,\Lambda \rangle \) is a point of the first type relative to \(\phi \), then the mean value \(\widehat{E}_R(x)\) exists, and

due to (8.33). Let us denote, using the symbols applied in the proof of Theorem 3

For any \(x\in \mathcal {D}_{\delta }(\hat{x})\) that is a point of the first type relative to \(\phi \), the right-hand side of (8.40) can be estimated

according to (3.10).

If \(\hat{x}\) is a point of the second type relative to \(\phi \), then, by Lemma 3, there exists \(\delta \in (0,\frac{\hat{x}}{2})\) such that

For any \(x\in \mathcal {D}_{\delta }(\hat{x})\) that is a point of the first type relative to \(\phi \), the mean value

We can express the value x in the form \(x=\hat{x}+\alpha \delta \) for some \(\alpha \in (-1,1)\). Suppose first \(\alpha >0\). Then,

The integrand in the second integral on the right-hand side is always non-positive, and the denominator of the fraction in the first integral is always greater than or equal to \(\delta \); therefore, \(1+\frac{\alpha \delta }{\hat{x}-l}\le 1+\alpha <2\). This implies

and in (8.41)

The case \(\alpha <0\) is analogous. \(\square \)

By Lemma 4, the sought distribution function \(F_L(l)\) is continuous; in particular, \(F_L(\lambda )=0\). This fact will be used later.

The function \(\ln \left| 1-\frac{x}{l}\right| \) can be expanded in a Fourier series in Chebyshev polynomials of the first kind in the variable l; the Fourier coefficients \(c_n(x)\) are calculated in Appendix 5. We show that the partial sums of this series are bounded by an integrable function for all \(x\in \langle \lambda ,\Lambda \rangle \) if the measure \(\phi \) generates a function \(\widehat{E}_R(x)\) satisfying condition (3.6). Indeed, using the notation

and applying the results (8.61), (8.62), we get (the parameters \(\xi \), \(\zeta \) defined by (8.63) and (8.64), respectively, are used)

The integrand in the last integral on the right-hand side of (8.43) is odd and bounded (it is a sum of k sines); therefore, it is integrable on any bounded interval. Because the integrand is odd, the last integral equals the integral of the same function over the interval \((\alpha ,|\alpha -\xi |)\). Now we can estimate the sum \(Z_k(l,x)\)

The absolute value of the last integral in (8.44) is necessary because the order of its limits depends on the relation between \(\alpha \) and \(\xi \). Nevertheless, the integrand is always positive; since \((\alpha ,|\alpha -\xi |)\subset (\alpha ,\alpha +\xi )\) when \(\alpha <|\alpha -\xi |\), and \((|\alpha -\xi |,\alpha )\subset (|\alpha -\xi |,\alpha +\xi )\) when \(\alpha \ge |\alpha -\xi |\), we can estimate

The integrals on the right-hand side of (8.45) have their limits in ascending order, because \(\alpha \in \langle 0,\pi \rangle \), \(\xi \in \langle 0,\pi \rangle \). Furthermore, the last mentioned fact implies \((\alpha ,\alpha +\xi )\subset (\alpha ,\alpha +\pi )\), and we can continue estimating as follows:

The procedure resulting in (8.46) is fully correct only when \(\alpha >0\); in the case \(\alpha =0\), the integration of the term \(\frac{\cosh \frac{t}{2}\sin \frac{\alpha }{2}}{\sinh ^2\frac{t}{2}+\sin ^2\frac{\alpha }{2}}\) in (8.44) does not give the antiderivative \(2\arctan \frac{\sinh \frac{t}{2}}{\sin \frac{\alpha }{2}}\). The integrand is, however, zero except for the point \(t=0\) (where it is undefined) in this case; therefore, the corresponding integral is zero, and the estimate (8.46) holds too.

As follows from Lemma 4, if the measure \(\phi \) satisfies our requirements, then the integral

exists for any \(x\in \langle \lambda ,\Lambda \rangle \), in particular for \(x=\lambda \) and \(x=\Lambda \). Hence, for any \(x\in \langle \lambda ,\Lambda \rangle \), the partial sums \(\{Z_k(l,x)\}_{k\ge 0}\) are dominated by a common integrable function \(z_x(l)\), and by the Lebesgue dominated convergence theorem,

for all \(x\in \langle \lambda ,\Lambda \rangle \).

The integrals on the right-hand side of (8.47) may be modified using integration by parts

(here, the basic properties of the distribution function \(F_L\) are used: \(F_L(\Lambda )=1\), and \(F_L(\lambda )=0\) due to its necessary continuity). We again use the substitution (8.42); the distribution function \(F_L(l)\) becomes the function \(\widetilde{F}_L(\alpha )=F_L(\frac{\Lambda +\lambda }{2}-\frac{\Lambda -\lambda }{2}\cos \alpha )\) and

The function \(\widetilde{F}_L(\alpha )\) may be extended to the interval \(\langle -\pi ,\pi \rangle \) as an odd function by defining

(\(\breve{F}_L(0)=\widetilde{F}_L(0)=0\) by definition). The function \(\breve{F}_L\) is continuous, bounded on \(\langle -\pi ,\pi \rangle \), and odd; therefore, \(\breve{F}_L\in L_2(-\pi ,\pi )\), and, being odd, it may be expressed in the form of a trigonometric (sine) series

The expansion (8.48) may also be used on the interval \(\langle 0,\pi \rangle \), where \(\widetilde{F}_L(\alpha )=\breve{F}_L(\alpha )\); then

Substituting the results (8.49) and (8.61) into (8.47), we get

The expression on the right-hand side of (8.50) may be written in the form

The coefficient \(b_0\) is finite, because \(|d_n|\le \frac{\Vert \breve{F}_L\Vert }{\sqrt{\pi }}\le \sqrt{2}\) for any \(n\in \mathcal {N}\) due to (8.48) and

Moreover, since \(b_n=-\frac{(-1)^n}{n}-\frac{\pi }{2}d_n\) and both sequences \(\{\frac{(-1)^n}{n}\}_{n\ge 1}\), \(\{d_n\}_{n\ge 1}\) belong to the space \(l_2\), the sequence \(\{b_n\}_{n\ge 0}\) also belongs to \(l_2\), and the series (8.51) is the Fourier expansion of a function \(\widehat{E}_R(x)\in L_{2,f}(\lambda ,\Lambda )\) with the weight function \(f=\frac{1}{\sqrt{(\Lambda -x)(x-\lambda )}}\).

The condition necessary to satisfy (3.6) is \(\widehat{E}_R(x)=const\) almost everywhere on \(\langle \lambda ,\Lambda \rangle \). The Fourier series representation (8.51) of such a function must have all coefficients \(b_n=0\) for \(n\ge 1\), so

for all \(n\in \mathcal {N}\). Therefore, the Fourier series of the distribution function \(\widetilde{F}_L(\alpha )\) is uniquely determined.

Suppose there exist two distribution functions \(\widetilde{F}_1(\alpha )\), \(\widetilde{F}_2(\alpha )\) satisfying our requirements, and let \(\breve{F}_1(\alpha )\), \(\breve{F}_2(\alpha )\) be their extensions. The functions \(\breve{F}_i(\alpha )\) are continuous, as proved above, and they have bounded variation (equal to 2, since \(\widetilde{F}_i(\alpha )\) are distribution functions). The trigonometric series (8.48) of both functions has the coefficients (8.52). According to the Dirichlet-Jordan theorem, this series converges uniformly to both \(\breve{F}_1(\alpha )\) and \(\breve{F}_2(\alpha )\) on any closed interval \(\langle a,b\rangle \subset (-\pi ,\pi )\); therefore, \(\breve{F}_1(\alpha )=\breve{F}_2(\alpha )\) for any \(\alpha \in (-\pi ,\pi )\). The equality \(\breve{F}_1(\pi )=\breve{F}_2(\pi )\) follows from the fact that \(\widetilde{F}_L(\pi )=F_L(\Lambda )=1\) for any distribution function \(F_L\). Hence, there exists at most one distribution function \(F_L(l)\) that generates a function \(\widehat{E}_R(x)\) satisfying the condition (3.6).

The last task is to find the distribution function \(F_L(l)\) corresponding to the series (8.48) with the coefficients (8.52). It is known that the series

is the trigonometric expansion of the function x on the interval \((-\pi ,\pi )\). Using this fact and the requirement \(\widetilde{F}_L(\pi )=1\), we get
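The last step can be checked numerically. Setting \(b_n=0\) in \(b_n=-\frac{(-1)^n}{n}-\frac{\pi }{2}d_n\) gives \(d_n=\frac{2(-1)^{n+1}}{\pi n}\). Assuming, as the bound on \(|d_n|\) suggests, that (8.48) is the sine series \(\breve{F}_L(\alpha )=\sum _{n\ge 1}d_n\sin n\alpha \), these are exactly the coefficients of \(\alpha /\pi \), and undoing the substitution (8.42) yields the candidate \(F_L(l)=\frac{1}{\pi }\arccos \frac{\Lambda +\lambda -2l}{\Lambda -\lambda }\). The Python sketch below illustrates this under those assumptions; it is not the paper's own computation, and the function names are hypothetical.

```python
import math


def sawtooth_partial_sum(alpha: float, n_terms: int) -> float:
    """Partial sum of sum_{n>=1} 2 (-1)^(n+1) sin(n alpha) / n.

    On (-pi, pi) the series converges to alpha, uniformly on closed
    subintervals, as the Dirichlet-Jordan theorem guarantees.
    """
    return sum(2.0 * (-1) ** (n + 1) * math.sin(n * alpha) / n
               for n in range(1, n_terms + 1))


def sine_coefficient(n: int) -> float:
    """d_n obtained from b_n = 0, i.e. d_n = 2 (-1)^(n+1) / (pi n)."""
    return 2.0 * (-1) ** (n + 1) / (math.pi * n)


def optimal_step_cdf(l: float, lam: float, Lam: float) -> float:
    """Candidate F_L(l) whose transform under (8.42) is alpha / pi."""
    u = (Lam + lam - 2.0 * l) / (Lam - lam)
    u = max(-1.0, min(1.0, u))  # clamp rounding noise into the domain of acos
    return math.acos(u) / math.pi


# Partial sums of sum d_n sin(n alpha) approach alpha / pi away from +-pi.
alpha = 1.0
approx = sum(sine_coefficient(n) * math.sin(n * alpha) for n in range(1, 2001))
print(approx, alpha / math.pi)
print(optimal_step_cdf(1.0, 1.0, 9.0), optimal_step_cdf(5.0, 1.0, 9.0),
      optimal_step_cdf(9.0, 1.0, 9.0))
```

The clamp in `optimal_step_cdf` only guards against rounding; in exact arithmetic the argument of the arccosine stays in \(\langle -1,1\rangle \) for \(l\in \langle \lambda ,\Lambda \rangle \).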

Appendix 5: Variance of the Process with Optimal Step Length Distribution

The calculations in this appendix are technically involved, and their details would require considerable space. Therefore, we mostly present only their basic principles and the final results.

Considering the distribution (3.13) of the random variable L, we express the variance \(\widehat{\sigma }^2_R(x)\) using the second moment

The integral in (8.54) exists because all singularities present are integrable. The probability density f defined by (3.13) may be regarded as a weight function on \((\lambda ,\Lambda )\); the existence of the integral in (8.54) implies

and we can write

If \(\mathcal {B}=\{\mathsf {b}_i\}_{i\ge 0}\) is an orthonormal basis of a real Hilbert space \(\mathcal {H}\), \(\mathsf {u}\in \mathcal {H}\), then we can express the vector \(\mathsf {u}\) using the Fourier series

and its norm satisfies Parseval’s identity

A standard orthonormal basis of the Hilbert space \(L_{2,f}(\lambda ,\Lambda )\) with the weight (3.13) is formed by the Chebyshev polynomials of the first kind, transformed to the interval \(\langle \lambda ,\Lambda \rangle \) and normalized

Thus, expressing the variance reduces to calculating the Fourier coefficients

The relation (8.54) may be then written, using (8.56) and (8.57), in the form
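The expansion in this basis can be illustrated with a short Python sketch. It assumes that (3.13) is the arcsine density \(f(l)=\frac{1}{\pi \sqrt{(\Lambda -l)(l-\lambda )}}\), which is consistent with the integrable singularity noted below for \(C_n(\varepsilon ,x)\) and with the factor \(\sqrt{2}\) that distinguishes \(c_n\), \(n\ge 1\), from \(c_0\). Under this assumption the orthonormal basis is \(\{1,\sqrt{2}\,T_n(u)\}_{n\ge 1}\) with \(u=\frac{2l-\Lambda -\lambda }{\Lambda -\lambda }\), and inner products can be evaluated by Gauss–Chebyshev quadrature, which is exact for polynomial integrands of degree at most \(2m-1\). The helper names are hypothetical.

```python
import math


def chebyshev_angles(m: int) -> list:
    """Angles theta_k of the m-point Gauss-Chebyshev rule; nodes are u_k = cos(theta_k)."""
    return [(2 * k - 1) * math.pi / (2 * m) for k in range(1, m + 1)]


def phi(n: int, theta: float) -> float:
    """Orthonormal basis function c_n T_n(u) at u = cos(theta): c_0 = 1, c_n = sqrt(2)."""
    scale = 1.0 if n == 0 else math.sqrt(2.0)
    return scale * math.cos(n * theta)  # T_n(cos theta) = cos(n theta)


def weighted_inner(i: int, j: int, m: int = 64) -> float:
    """<phi_i, phi_j> w.r.t. the arcsine density; exact when i + j <= 2m - 1."""
    thetas = chebyshev_angles(m)
    return sum(phi(i, t) * phi(j, t) for t in thetas) / m


def fourier_coefficients(func, lam: float, Lam: float, n_max: int, m: int = 64) -> list:
    """Coefficients <func, phi_n>, n = 0..n_max, in L_{2,f}(lam, Lam)."""
    mid, half = 0.5 * (Lam + lam), 0.5 * (Lam - lam)
    thetas = chebyshev_angles(m)
    return [sum(func(mid + half * math.cos(t)) * phi(n, t) for t in thetas) / m
            for n in range(n_max + 1)]


# Parseval check for g(l) = l on <1, 9>: sum of c_n^2 should equal E[L^2].
coeffs = fourier_coefficients(lambda l: l, 1.0, 9.0, n_max=5)
print(sum(c * c for c in coeffs))
```

For \(g(l)=l\) on \(\langle 1,9\rangle \), the only nonzero coefficients are \(c_0=5\) and \(c_1=2\sqrt{2}\), so Parseval's identity gives \(\mathrm{E}[L^2]=25+8=33\), matching the mean 5 and the arcsine variance \((\Lambda -\lambda )^2/8=8\).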

Let \(\Omega _{\varepsilon }(x)=(\lambda ,\Lambda )\times \langle 0,x\rangle \setminus \{[l,t]:|l-t|<\varepsilon \}\) for \(x\in \langle \lambda ,\Lambda \rangle \), \(\varepsilon \in (0,\min (\lambda ,\frac{\Lambda -\lambda }{3}))\), and

for integer \(n\ge 0\). We express the integral

The integral \(C_n(\varepsilon ,x)\) exists for arbitrary \(x\in \langle \lambda ,\Lambda \rangle \) and any \(\varepsilon \in (0,\min (\lambda ,\frac{\Lambda -\lambda }{3}))\), because the singularity \(\frac{1}{\sqrt{(\Lambda -\lambda )^2-(2l-\Lambda -\lambda )^2}}\) is integrable. So, according to Fubini’s theorem,

where

Evaluating the integral (8.59), we get

for \(n\in \mathcal {N}\); an analogous result, without the factor \(\sqrt{2}\), holds for \(c_0\).

Changing the order of integration in \(C_n(\varepsilon ,x)\) gives

where

The recurrence formula for the Chebyshev polynomials implies

where \(U_n\) denote the Chebyshev polynomials of the second kind and

After carrying out all the required calculations (in the process, many of the integrals turn out to converge to zero as \(\varepsilon \rightarrow 0^+\)), we obtain the Fourier coefficients \(c_n(x)\) according to (8.60)
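The specific identity obtained from the recurrence is not reproduced here, so the following Python sketch only illustrates standard facts about the two families that such manipulations typically rely on: both satisfy \(P_{k+1}(x)=2xP_k(x)-P_{k-1}(x)\) (with \(T_0=U_0=1\), \(T_1=x\), \(U_1=2x\)), and they are linked by \(T_n'=nU_{n-1}\) and \(T_n=\frac{1}{2}(U_n-U_{n-2})\). Which of these identities the derivation actually uses is an assumption, and the function name is hypothetical.

```python
import math


def chebyshev_t_u(n: int, x: float) -> tuple:
    """Return (T_n(x), U_n(x)) via the common recurrence P_{k+1} = 2x P_k - P_{k-1}."""
    if n == 0:
        return 1.0, 1.0
    t_prev, t_cur = 1.0, x          # T_0, T_1
    u_prev, u_cur = 1.0, 2.0 * x    # U_0, U_1
    for _ in range(n - 1):
        t_prev, t_cur = t_cur, 2.0 * x * t_cur - t_prev
        u_prev, u_cur = u_cur, 2.0 * x * u_cur - u_prev
    return t_cur, u_cur


x, n, h = 0.3, 5, 1e-6
t_plus, _ = chebyshev_t_u(n, x + h)
t_minus, _ = chebyshev_t_u(n, x - h)
dT = (t_plus - t_minus) / (2.0 * h)       # central-difference estimate of T_n'(x)
_, u_nm1 = chebyshev_t_u(n - 1, x)
t_n, u_n = chebyshev_t_u(n, x)
_, u_nm2 = chebyshev_t_u(n - 2, x)
print(dT, n * u_nm1)                      # T_n' = n U_{n-1}
print(t_n, 0.5 * (u_n - u_nm2))           # T_n = (U_n - U_{n-2}) / 2
```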

The Fourier coefficients \(c_n(x)\) were calculated in order to express the variance \(\widehat{\sigma }^2_R(x)\) according to (8.58). Before evaluating this sum, we define the parameters

The sum (8.58) may be written using (8.61) in the form

We have not found a closed-form expression for the sum (8.65); nevertheless, it can be approximated quite accurately, particularly for small values of \(\zeta \), which correspond to large condition numbers \(\kappa (\mathsf {A})\).

The contribution of the first term in the square bracket in (8.65) to the total sum is

We describe the integration domain \([t,u]\in \langle 0,\zeta \rangle \times \langle 0,\zeta \rangle \) using the variables

Then, the integral (8.66) may be transformed into the form

We can estimate the remaining integral on the right-hand side of (8.67) using the approximation

This (upper) estimate is very accurate for typical condition numbers \(\kappa (\mathsf {A})\); the resulting errors are listed in Table 2.

The error is proportional to \([\kappa (\mathsf {A})]^{-3}\) for sufficiently large \(\kappa (\mathsf {A})\) and is negligible in comparison with the total sum in (8.65).

After the integration in (8.67) using the approximation (8.68), we get

The second term in the square bracket in (8.65) may be expressed in a similar way (without using the variables v, w). Applying Euler’s formula and the approximation (8.68) gives

the error introduced by this approximation may be estimated, using the Schwarz inequality, as

values of the integral \(I(\kappa (\mathsf {A}))\) are listed in Table 3.

The value \(I(\kappa (\mathsf {A}))\) is proportional to \([\kappa (\mathsf {A})]^{-2}\) for sufficiently large \(\kappa (\mathsf {A})\), and it is therefore still negligible.

The third term in the square bracket in (8.65) may be expressed exactly, because the series \(\sum \frac{1}{n^2}\cos nx\) is the trigonometric series representation of the function \(\frac{1}{4}(x-\pi )^2-\frac{\pi ^2}{12}\) on the interval \(\langle 0,2\pi \rangle \). Since \(\xi \in \langle 0,\pi \rangle \), the doubled argument \(2\xi \) stays in \(\langle 0,2\pi \rangle \), so we can also use this result for the sum containing \(\cos ^2\xi =\frac{1}{2}(1+\cos 2\xi )\), and consequently
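The trigonometric series used for the third term can be checked numerically. The Python sketch below compares partial sums of \(\sum _{n\ge 1}\frac{\cos nx}{n^2}\) with the closed form on \(\langle 0,2\pi \rangle \) and applies the same formula at the doubled argument \(2\xi \) to sum \(\sum _{n\ge 1}\frac{\cos ^2 n\xi }{n^2}\) for \(\xi \in \langle 0,\pi \rangle \). Reading the "sum containing \(\cos ^2\)" in the text as this particular sum is an assumption, and the function names are hypothetical. The truncation error of an N-term partial sum is below 1/N.

```python
import math


def cos_series_partial(x: float, n_terms: int) -> float:
    """Partial sum of sum_{n>=1} cos(n x) / n^2; the tail is bounded by 1 / n_terms."""
    return sum(math.cos(n * x) / n ** 2 for n in range(1, n_terms + 1))


def cos_series_closed_form(x: float) -> float:
    """(x - pi)^2 / 4 - pi^2 / 12, valid for x in [0, 2 pi]."""
    return 0.25 * (x - math.pi) ** 2 - math.pi ** 2 / 12.0


def cos_squared_series(xi: float) -> float:
    """sum_{n>=1} cos^2(n xi) / n^2 for xi in [0, pi], using cos^2 = (1 + cos(2 .)) / 2.

    The doubled argument 2 xi stays in [0, 2 pi], where the closed form holds.
    """
    return 0.5 * (math.pi ** 2 / 6.0) + 0.5 * cos_series_closed_form(2.0 * xi)


for x in (0.0, 1.0, math.pi, 5.0, 2.0 * math.pi):
    print(x, cos_series_partial(x, 5000), cos_series_closed_form(x))
```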

We can substitute the results (8.69), (8.70), (8.71) into (8.65); however, the resulting expression is very complicated and hard to interpret. Therefore, we prefer the graphical illustration in Fig. 14. When the matrix \(\mathsf {A}\) is sufficiently ill-conditioned (\(\kappa (\mathsf {A})>10^4\)), the contributions of the expressions (8.69), (8.70) to the sum (8.65) are negligible except for values \(x\sim \lambda \); therefore, we can write

The same method also allows us to calculate the correlation of the random variables \(R_x\), \(R_y\). Their covariance may be written in a form analogous to (8.55)

and the results (8.61) may be used. Analogously to (8.65)

where

The first three terms in the square bracket in (8.72) were summed above; the last term may be expressed using the trigonometric sum applied in (8.71). When applying it, we must take into account that this formula is valid only for arguments \(x\ge 0\):

If \(\kappa (\mathsf {A})\gg 1\) and \(\min (x,y)\gtrsim \sqrt{\lambda \Lambda }\), this term significantly exceeds the remaining ones in (8.72), and the correlation

Appendix 6: Estimates of the Hessian Matrix Eigenvalues

If \(V(\mathsf {x})\) is a quadratic function, then the estimate \(\hat{\lambda }^{(j+1)}\) given by (4.4) represents the second derivative of \(V(\mathsf {x})\) in the direction \(\mathsf {r}_j\) (independently of the point \(\mathsf {x}\)). For a non-quadratic \(V(\mathsf {x})\), we can proceed analogously: as the estimate \(\hat{\lambda }^{(j+1)}\), we propose a suitable value of the second derivative of \(V(\mathsf {x})\) along the jth step of the iterative procedure.
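For a quadratic function \(V(\mathsf {x})=\frac{1}{2}\langle \mathsf {x}|\mathsf {A}\mathsf {x}\rangle -\langle \mathsf {b}|\mathsf {x}\rangle \), the second derivative along the unit direction \(\mathsf {r}/\Vert \mathsf {r}\Vert \) is the Rayleigh quotient \(\langle \mathsf {r}|\mathsf {A}\mathsf {r}\rangle /\Vert \mathsf {r}\Vert ^2\); it is the same at every point and lies between the extreme eigenvalues of \(\mathsf {A}\). The following Python sketch checks this with a central second difference on a hypothetical \(2\times 2\) example; it does not reproduce the exact form of (4.4), which is not shown here.

```python
import math


def quad_value(A, b, x):
    """V(x) = 1/2 <x|Ax> - <b|x> for a symmetric 2x2 matrix A."""
    ax = (A[0][0] * x[0] + A[0][1] * x[1], A[1][0] * x[0] + A[1][1] * x[1])
    return 0.5 * (x[0] * ax[0] + x[1] * ax[1]) - (b[0] * x[0] + b[1] * x[1])


def directional_second_derivative(A, b, x, r, h=1e-3):
    """Central second difference of V at x along the unit vector r / ||r||."""
    nr = math.hypot(r[0], r[1])
    d = (r[0] / nr, r[1] / nr)
    xp = (x[0] + h * d[0], x[1] + h * d[1])
    xm = (x[0] - h * d[0], x[1] - h * d[1])
    return (quad_value(A, b, xp) - 2.0 * quad_value(A, b, x) + quad_value(A, b, xm)) / h ** 2


def rayleigh_quotient(A, r):
    """<r|Ar> / <r|r>."""
    ar = (A[0][0] * r[0] + A[0][1] * r[1], A[1][0] * r[0] + A[1][1] * r[1])
    return (r[0] * ar[0] + r[1] * ar[1]) / (r[0] ** 2 + r[1] ** 2)


def eigen_bounds_2x2(A):
    """(smallest, largest) eigenvalue of a symmetric 2x2 matrix."""
    tr = A[0][0] + A[1][1]
    disc = math.sqrt((A[0][0] - A[1][1]) ** 2 + 4.0 * A[0][1] ** 2)
    return 0.5 * (tr - disc), 0.5 * (tr + disc)


A, b, r = [[4.0, 1.0], [1.0, 2.0]], (1.0, 0.0), (1.0, 2.0)
for x in ((0.5, -1.0), (3.0, 2.0)):  # the second derivative does not depend on x
    print(directional_second_derivative(A, b, x, r), rayleigh_quotient(A, r))
print(eigen_bounds_2x2(A))
```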

Let \(V(\mathsf {x}_{j-1})\), \(V(\mathsf {x}_j)\) and \(\mathsf {g}_{j-1}=\nabla V(\mathsf {x}_{j-1})\), \(\mathsf {g}_j=\nabla V(\mathsf {x}_j)\) be given. We define a function of one variable

where \(\mathsf {p}_j=\mathsf {x}_j-\mathsf {x}_{j-1}=-\gamma _j\mathsf {g}_{j-1}\). The second derivative of V at the point \(\mathsf {x}_{j-1}+t\mathsf {p}_j\) in the direction \(\mathsf {p}_j\) is

The values

are known; therefore, we can interpolate the function \(\widehat{V}(t)\) by a cubic polynomial

where

satisfying the relations (8.74). Substituting the polynomial \(\widehat{P}(t)\) for \(\widehat{V}(t)\) in (8.73) gives

The step \(\mathsf {p}_j\) corresponds to the values \(t\in \langle 0,1\rangle \). In accordance with the considerations above, the largest suitable value provided by (8.79) is selected. Depending on the values a, b, c, we proceed as follows:

1. If \(a\le 0\), then the maximum is attained at \(t=0\), so \(\hat{\lambda }^{(j+1)}=\frac{2b}{\Vert \mathsf {p}_j\Vert ^2}\). Nevertheless, the substitute cubic polynomial \(\widehat{P}(t)\) may be decreasing on the whole of \(\mathcal {R}\); in that case, it gives no information about the behavior of the function V around its potential local minimum. This situation occurs when the derivative of \(\widehat{P}(t)\) has at most one real root, i.e.,

   $$\begin{aligned} b^2-3ac\le 0\ . \end{aligned}$$(8.80)

   In this case, the result is not accepted. If \(b\le 0\), then the function \(\widehat{V}(t)\) is not convex in the observed region, and its behavior bears no relation to its properties in the neighborhood of its local minimum; therefore, the result is discarded as well.

2. If \(a>0\), then the value (8.79) increases with t. Since \(\widehat{V}'(0)<0\) (the vectors \(\mathsf {g}_{j-1}\) and \(\mathsf {p}_j\) point in opposite directions), there exists \(t_m>0\) at which the function \(\widehat{V}(t)\) attains its local minimum; evidently, \(\widehat{V}''(t_m)>0\). Two situations are now possible:

   (a) If \(t_m\le 1\) (which is equivalent to \(\widehat{V}'(1)=\langle \mathsf {g}_j|\mathsf {p}_j\rangle \ge 0\)), then \(t=1\) provides the maximum relevant value of \(\widehat{V}''(t)\) associated with the jth step, and \(\hat{\lambda }^{(j+1)}=\frac{6a+2b}{\Vert \mathsf {p}_j\Vert ^2}\).

   (b) If \(t_m>1\), then the step \(\mathsf {p}_j\) is too short and does not reach the local minimum of the function \(\widehat{V}\). Since we are primarily interested in the behavior near minima, we prefer to work with the value \(\widehat{V}''(t_m)>\widehat{V}''(1)\). The value \(t_m\) is the positive root of the equation

   $$\begin{aligned} 3at^2+2bt+c=0\ , \end{aligned}$$

   thus

   $$\begin{aligned} \hat{\lambda }^{(j+1)}=\frac{6at_m+2b}{\Vert \mathsf {p}_j\Vert ^2} =\frac{2\sqrt{b^2-3ac}}{\Vert \mathsf {p}_j\Vert ^2}\ . \end{aligned}$$
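The case analysis for \(\hat{\lambda }^{(j+1)}\) can be sketched in Python. Here the coefficients of \(\widehat{P}(t)=at^3+bt^2+ct+d\) are obtained from the Hermite conditions \(\widehat{P}(0)=V(\mathsf {x}_{j-1})\), \(\widehat{P}(1)=V(\mathsf {x}_j)\), \(\widehat{P}'(0)=\langle \mathsf {g}_{j-1}|\mathsf {p}_j\rangle \), \(\widehat{P}'(1)=\langle \mathsf {g}_j|\mathsf {p}_j\rangle \). We assume that these reproduce (8.76), (8.77), (8.78); they agree with the relation \(a+b=V(\mathsf {x}_1)-V(\mathsf {x}_0)+\gamma _1\Vert \mathsf {g}_0\Vert ^2\) used later for (8.84). The value (8.79) is taken as \(\widehat{P}''(t)/\Vert \mathsf {p}_j\Vert ^2=(6at+2b)/\Vert \mathsf {p}_j\Vert ^2\). The function names are hypothetical.

```python
import math


def hermite_cubic_coefficients(v0, v1, dv0, dv1):
    """(a, b, c, d) of P(t) = a t^3 + b t^2 + c t + d with
    P(0) = v0, P(1) = v1, P'(0) = dv0, P'(1) = dv1."""
    delta = v1 - v0
    a = dv0 + dv1 - 2.0 * delta
    b = 3.0 * delta - 2.0 * dv0 - dv1
    return a, b, dv0, v0


def estimate_lambda_min(v_prev, v_cur, g_prev_dot_p, g_cur_dot_p, p_norm_sq):
    """Candidate hat-lambda^{(j+1)} following cases 1, 2(a), 2(b); None if rejected.

    v_prev, v_cur : V(x_{j-1}), V(x_j)
    g_prev_dot_p  : <g_{j-1}|p_j>, negative for a steepest descent step
    g_cur_dot_p   : <g_j|p_j>
    p_norm_sq     : ||p_j||^2
    """
    a, b, c, _ = hermite_cubic_coefficients(v_prev, v_cur, g_prev_dot_p, g_cur_dot_p)
    disc = b * b - 3.0 * a * c
    if a <= 0.0:
        # Case 1: P''(t) = 6at + 2b is largest at t = 0.
        if disc <= 0.0 or b <= 0.0:
            return None  # condition (8.80) or non-convex region: discard
        return 2.0 * b / p_norm_sq
    if g_cur_dot_p >= 0.0:
        # Case 2(a): the minimizer t_m lies in (0, 1], use P''(1) = 6a + 2b.
        return (6.0 * a + 2.0 * b) / p_norm_sq
    if disc <= 0.0:
        return None  # cannot occur when c < 0; guards against non-descent input
    # Case 2(b): t_m > 1, use P''(t_m) = 2 sqrt(b^2 - 3ac).
    return 2.0 * math.sqrt(disc) / p_norm_sq


# Quadratic example: A = diag(2, 1), x_{j-1} = (1, 1), gamma_j = 0.5, p_j = (-1, -0.5).
print(estimate_lambda_min(1.5, 0.125, -2.5, -0.25, 1.25))
```

For a quadratic function, a vanishes and the estimate reduces to the Rayleigh quotient of the Hessian in the direction \(\mathsf {p}_j\); in the example above this is \(2.25/1.25=1.8\).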


As for the estimate \(\hat{\Lambda }^{(j+1)}\), in the case of a quadratic function \(V(\mathsf {x})\) bounded from below, the formula (4.4) based on (4.2) represents the second derivative of the function V in the direction \(\sqrt{\mathsf {H}}\mathsf {p}_j\); in that case, the Hessian matrix \(\mathsf {H}\) is positive, and its square root exists. Therefore, we estimate the value \(\hat{\Lambda }^{(j+1)}\) corresponding to the jth step as the minimum relevant value

The vector \(\mathsf {H}(\mathsf {x}_{j-1}+t\mathsf {p}_j)\mathsf {p}_j\) may be expressed in the form

We decompose the gradients \(\mathsf {g}(\mathsf {x})\) into two parts: the component \(\mathsf {g}_{\parallel }\) parallel to \(\mathsf {p}_j\) and the component \(\mathsf {g}_{\perp }\) perpendicular to \(\mathsf {p}_j\):

Since we do not have enough information about the gradients \(\mathsf {g}(\mathsf {x}_{j-1}+t\mathsf {p}_j)\), we must approximate them. For the component \(\mathsf {g}_{\parallel }\), the cubic interpolation (8.75) of the function \(\widehat{V}(t)\) is the most readily available one, since

For the perpendicular component, only two values are available: \(\mathsf {g}_{j-1\perp }=\mathsf {0}\) (because \(\mathsf {g}_{j-1}\) is parallel to \(\mathsf {p}_j=-\gamma _j\mathsf {g}_{j-1}\)), corresponding to \(t=0\), and \(\mathsf {g}_{j\perp }\), corresponding to \(t=1\). Therefore, the linear interpolation

is applied. The derivatives of the individual gradient components are then

and according to (8.82), we get the interpolation

Using this result in (8.81), we get

for an appropriate t.

If \(6at+2b>0\), the expression (8.83) attains its minimum at

assuming that \(\mathsf {g}_j\) is not parallel to \(\mathsf {p}_j\). The choice of the parameter t depends on the values a, b, c:

1. If \(a\le 0\) and \(b\le 0\), then no value \(\hat{\Lambda }^{(j+1)}\) is proposed.

2. If \(a\le 0\) and \(b\in (0,\frac{1}{2}\sqrt{\Vert \mathsf {g}_j\Vert ^2\Vert \mathsf {p}_j\Vert ^2-\langle \mathsf {g}_j|\mathsf {p}_j\rangle ^2}\rangle \), or \(a\ge 0\) and \(b\ge \frac{1}{2}\sqrt{\Vert \mathsf {g}_j\Vert ^2\Vert \mathsf {p}_j\Vert ^2-\langle \mathsf {g}_j|\mathsf {p}_j\rangle ^2}\), then the expression (8.83) increases for \(t\ge 0\), so the value \(t=0\) is used in (8.83).

3. If \(a>0\) and \(b\le \frac{1}{2}\sqrt{\Vert \mathsf {g}_j\Vert ^2\Vert \mathsf {p}_j\Vert ^2-\langle \mathsf {g}_j|\mathsf {p}_j\rangle ^2}\), or \(a<0\) and \(b\ge \frac{1}{2}\sqrt{\Vert \mathsf {g}_j\Vert ^2\Vert \mathsf {p}_j\Vert ^2-\langle \mathsf {g}_j|\mathsf {p}_j\rangle ^2}\), then the value

   $$\begin{aligned} t_0=\frac{1}{6a}\left[ \sqrt{\Vert \mathsf {g}_j\Vert ^2\Vert \mathsf {p}_j\Vert ^2 -\langle \mathsf {g}_j|\mathsf {p}_j\rangle ^2}-2b\right] \ge 0 \end{aligned}$$

   is computed. Now:

   (a) If \(t_0\le 1\), then the minimum of the expression (8.83) is attained within the step \(\mathsf {p}_j\), so the value \(t_0\) is used in (8.83).

   (b) If \(t_0>1\), then the expression (8.83) is a decreasing function of the parameter \(t\in \langle 0,t_0\rangle \); therefore, the choice \(t=1\) is recommended. Nevertheless, if, in addition, \(a>0\), then we can also consider all values \(t\le t_m\) (\(t_m\) is the parameter minimizing \(\widehat{V}(t)\); see above). The possibility \(t_m>1\) is characterized by the condition \(\langle \mathsf {p}_j|\mathsf {g}_j\rangle <0\), and in this case, the value \(t=\min (t_0,t_m)\) is used in (8.83).

4. Analogously to the calculation of \(\hat{\lambda }^{(j+1)}\), the results are discarded when the condition (8.80) is satisfied.
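The choice of t for \(\hat{\Lambda }^{(j+1)}\) can be sketched the same way. Since (8.83) is not reproduced here, the sketch assumes the form that follows from the interpolations above: with \(s(t)=6at+2b\) and \(S=\sqrt{\Vert \mathsf {g}_j\Vert ^2\Vert \mathsf {p}_j\Vert ^2-\langle \mathsf {g}_j|\mathsf {p}_j\rangle ^2}\), the quantity to minimize is \(\left( s(t)+S^2/s(t)\right) /\Vert \mathsf {p}_j\Vert ^2\). This form is minimized at \(s(t)=S\), which reproduces the stated \(t_0\), and for a quadratic V it reduces to \(\langle \mathsf {p}_j|\mathsf {H}^2\mathsf {p}_j\rangle /\langle \mathsf {p}_j|\mathsf {H}\mathsf {p}_j\rangle \). Treat it as a reconstruction rather than the paper's formula; the helper names are hypothetical, and the cubic coefficients again come from the Hermite conditions.

```python
import math


def hermite_cubic_coefficients(v0, v1, dv0, dv1):
    """(a, b, c, d) of the cubic Hermite interpolant on [0, 1]."""
    delta = v1 - v0
    return dv0 + dv1 - 2.0 * delta, 3.0 * delta - 2.0 * dv0 - dv1, dv0, v0


def estimate_lambda_max(v_prev, v_cur, g_prev_dot_p, g_cur_dot_p, g_cur_norm_sq, p_norm_sq):
    """Candidate hat-Lambda^{(j+1)} following cases 1 to 4; None if rejected.

    Assumed form of (8.83): (s + S^2 / s) / ||p_j||^2 with s = 6at + 2b and
    S = sqrt(||g_j||^2 ||p_j||^2 - <g_j|p_j>^2).
    """
    a, b, c, _ = hermite_cubic_coefficients(v_prev, v_cur, g_prev_dot_p, g_cur_dot_p)
    disc = b * b - 3.0 * a * c
    if disc <= 0.0:
        return None  # case 4: condition (8.80)
    if a <= 0.0 and b <= 0.0:
        return None  # case 1
    S = math.sqrt(max(g_cur_norm_sq * p_norm_sq - g_cur_dot_p ** 2, 0.0))
    if S == 0.0:
        return None  # g_j parallel to p_j is excluded in the text
    if (a <= 0.0 and 2.0 * b <= S) or (a >= 0.0 and 2.0 * b >= S):
        t = 0.0  # case 2: the expression increases for t >= 0
    else:
        t0 = (S - 2.0 * b) / (6.0 * a)  # case 3: a != 0 here
        if t0 <= 1.0:
            t = t0  # case 3(a)
        elif a > 0.0 and g_cur_dot_p < 0.0:
            t_m = (-b + math.sqrt(disc)) / (3.0 * a)  # minimizer of P, here t_m > 1
            t = min(t0, t_m)  # case 3(b) with a > 0
        else:
            t = 1.0  # case 3(b)
    s = 6.0 * a * t + 2.0 * b
    if s <= 0.0:
        return None
    return (s + S * S / s) / p_norm_sq


# Quadratic example: A = diag(2, 1), x_{j-1} = (1, 1), gamma_j = 0.5, ||g_j||^2 = 0.25.
print(estimate_lambda_max(1.5, 0.125, -2.5, -0.25, 0.25, 1.25))
```

In the quadratic example, the result is \(4.25/2.25\approx 1.889\), which, together with the corresponding \(\hat{\lambda }^{(j+1)}=1.8\), lies inside the spectrum \(\langle 1,2\rangle \) of \(\mathrm{diag}(2,1)\).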

The values \(\hat{\lambda }^{(j+1)}\), \(\hat{\Lambda }^{(j+1)}\) cannot be computed when \(a\le 0\) and \(b\le 0\), or in the case (8.80); in all remaining situations, the estimates are available. The unavailability of \(\hat{\lambda }^{(j+1)}\), \(\hat{\Lambda }^{(j+1)}\) may be handled in two different ways:

1. In the first step (\(j=0\)), we need to initialize the estimates \(\overline{\lambda }^{(1)}\), \(\underline{\Lambda }^{(1)}\). First, we propose a step length parameter \(\gamma _1\). If the unwanted situation mentioned above occurs, the easiest way to obtain some results is to increase the value \(\gamma _1\). Indeed, if \(\mathsf {p}_1=-\gamma _1\mathsf {g}_0\), then adding Eqs. (8.76) and (8.77) results in

   $$\begin{aligned} a+b=V(\mathsf {x}_1)-V(\mathsf {x}_0)+\gamma _1\Vert \mathsf {g}_0\Vert ^2 \ge V_0-V(\mathsf {x}_0)+\gamma _1\Vert \mathsf {g}_0\Vert ^2\ , \end{aligned}$$(8.84)

   since the function \(V(\mathsf {x})\) is assumed to be bounded from below by a value \(V_0\). Therefore, if \(\mathsf {g}_0\ne \mathsf {0}\), then there exists \(\gamma _1>0\) for which the right-hand side of (8.84) is positive, which implies \(a>0\) or \(b>0\). However, the possibility (8.80) is not yet excluded. The opposite requirement, \(b^2-3ac>0\), may be transformed, using (8.76), (8.77), (8.78), into the form

   $$\begin{aligned}&\langle \mathsf {g}_1|\mathsf {p}_1\rangle ^2 -\left[ 6\left( V(\mathsf {x}_1)-V(\mathsf {x}_0)\right) +\gamma _1\Vert \mathsf {g}_0\Vert ^2\right] \langle \mathsf {g}_1|\mathsf {p}_1\rangle \\&\quad +\left[ 3\left( V(\mathsf {x}_1)-V(\mathsf {x}_0)\right) +\gamma _1\Vert \mathsf {g}_0\Vert ^2\right] ^2 >0\ . \end{aligned}$$

   This quadratic inequality is satisfied for any value of \(\langle \mathsf {g}_1|\mathsf {p}_1\rangle \) (Footnote 14) when its discriminant, which equals \(-3\gamma _1\Vert \mathsf {g}_0\Vert ^2\left[ 4\left( V(\mathsf {x}_1)-V(\mathsf {x}_0)\right) +\gamma _1\Vert \mathsf {g}_0\Vert ^2\right] \), is negative; thus, for \(\gamma _1>0\), the condition is

   $$\begin{aligned} 4\left( V(\mathsf {x}_1)-V(\mathsf {x}_0)\right) +\gamma _1\Vert \mathsf {g}_0\Vert ^2>0\ ; \end{aligned}$$(8.85)

   since \(V(\mathsf {x}_1)\ge V_0\), this certainly holds when

   $$\begin{aligned} \gamma _1>\frac{4\left( V(\mathsf {x}_0)-V_0\right) }{\Vert \mathsf {g}_0\Vert ^2}\ . \end{aligned}$$(8.86)

   The condition (8.86) is stronger than the condition following from (8.84); therefore, for any \(\gamma _1\) satisfying (8.86), the initial estimates \(\overline{\lambda }^{(1)}=\hat{\lambda }^{(1)}>0\), \(\underline{\Lambda }^{(1)}=\hat{\Lambda }^{(1)}>0\) exist. The value \(V_0\) is, however, generally unknown; nevertheless, all our requirements guaranteeing the existence of the estimates \(\hat{\lambda }^{(1)}>0\), \(\hat{\Lambda }^{(1)}>0\) are met as soon as the condition (8.85), which contains only computable quantities, is satisfied.

2. For \(j\ge 1\), the procedure described above may be used as well, or the results for \(\hat{\lambda }^{(j+1)}\), \(\hat{\Lambda }^{(j+1)}\) are simply not employed.
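The algebra behind (8.84), (8.85) and (8.86) can be checked numerically. Writing \(D=V(\mathsf {x}_1)-V(\mathsf {x}_0)\), \(G=\gamma _1\Vert \mathsf {g}_0\Vert ^2\) and \(s=\langle \mathsf {g}_1|\mathsf {p}_1\rangle \), the Python sketch below computes \(b^2-3ac\) from the Hermite coefficients (the same assumption about the form of (8.76), (8.77), (8.78) as in the \(\hat{\lambda }^{(j+1)}\) sketch), compares it with the quadratic in s stated in item 1, and confirms that this quadratic stays positive whenever \(4D+G>0\). The function names are hypothetical.

```python
import random


def b2_minus_3ac(dv, gamma_g2, s):
    """b^2 - 3ac for the first step from the Hermite coefficients.

    dv = V(x_1) - V(x_0), gamma_g2 = gamma_1 ||g_0||^2, s = <g_1|p_1>.
    """
    c = -gamma_g2                 # P'(0) = <g_0|p_1> = -gamma_1 ||g_0||^2
    a = c + s - 2.0 * dv          # a = P'(0) + P'(1) - 2 (P(1) - P(0))
    b = 3.0 * dv - 2.0 * c - s    # so that a + b = dv + gamma_g2, cf. (8.84)
    return b * b - 3.0 * a * c


def quadratic_in_s(dv, gamma_g2, s):
    """Left-hand side of the inequality that precedes (8.85)."""
    return s * s - (6.0 * dv + gamma_g2) * s + (3.0 * dv + gamma_g2) ** 2


def gamma_lower_bound(v_x0, v_lower, g0_norm_sq):
    """Right-hand side of (8.86); V_0 = v_lower is usually unknown in practice."""
    return 4.0 * (v_x0 - v_lower) / g0_norm_sq


random.seed(0)
max_gap = 0.0
for _ in range(1000):
    D, G, s = random.uniform(-5, 5), random.uniform(0.01, 5), random.uniform(-20, 20)
    max_gap = max(max_gap, abs(b2_minus_3ac(D, G, s) - quadratic_in_s(D, G, s)))
print(max_gap)
```

If \(4D+G<0\), the quadratic can become negative; for instance, with \(D=-1\), \(G=2\) and \(s=-2\) it equals \(-3\), so (8.80) cannot be excluded for such a \(\gamma _1\).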

Since the largest possible estimate of the minimal eigenvalue of the Hessian matrix is selected, while the estimate of the maximal eigenvalue is minimized, the possibility \(\hat{\lambda }^{(j+1)}>\hat{\Lambda }^{(j+1)}\) is not excluded. In general, shorter steps cause less damage in the steepest descent method, so in the inconsistent case \(\overline{\lambda }^{(j)}>\underline{\Lambda }^{(j)}\), we prefer to use the value \(l_j=\overline{\lambda }^{(j)}\); this choice is also supported by the finer interpolation used in its calculation.


Cite this article

Kalousek, Z. Steepest Descent Method with Random Step Lengths. Found Comput Math 17, 359–422 (2017). https://doi.org/10.1007/s10208-015-9290-8
