Abstract

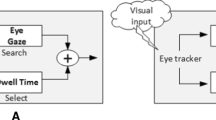

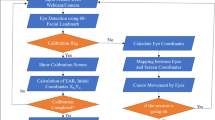

People without physical disabilities typically control a computer with a keyboard and mouse, but these input devices are usually unsuitable for people with severe physical disabilities. This study aims to design and implement a suitable and reliable human–computer interaction (HCI) interface for disabled users by integrating eye tracking technology and lip motion recognition. Eye movements control the cursor position on the computer screen, and an eye gaze followed by a mouth-opening lip motion serves as a mouse click. Seven lip motion features were extracted to discriminate mouth openings from mouth closures with the cumulative sum (CUSUM) control chart algorithm. A novel smoothing technique, the threshold-based Savitzky–Golay smoothing filter, is proposed to stabilize cursor movement against the inherent jitter of eye motion and to reduce eye tracking latency. A fixation experiment with nine dots was carried out to evaluate the efficacy of eye gaze data smoothing, and a Chinese text entry experiment using an on-screen keyboard with four keypad sizes was designed to evaluate the influence of keypad size on the Chinese text entry rate. The fixation experiment indicated that the threshold-based Savitzky–Golay smoothing filter with a threshold of two standard deviations, a polynomial order of 3, and a window length of 61 significantly improved the stability of eye cursor movements, by 44.86% on average. The average Chinese text entry rate reached 4.41 wpm with the dynamically enlargeable keypads. These results encourage the use of the proposed HCI interface for disabled users in the future.
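To make the mouth-opening detection step more concrete, the following is a minimal sketch of a two-sided cumulative sum (CUSUM) change detector applied to a single lip feature. It is an illustration under stated assumptions, not the authors' implementation: the abstract does not list the seven features or the chart parameters, so the feature (a hypothetical mouth-opening height), the function name `cusum_events`, the baseline length, and the values of `k` and `h` are all assumed here.

```python
from statistics import mean, stdev


def cusum_events(signal, baseline_n=10, k=0.5, h=5.0):
    """Two-sided tabular CUSUM on one lip feature (assumed: mouth-opening height).

    baseline_n, k (slack) and h (decision threshold) are illustrative values,
    not parameters reported in the paper.
    Returns a list of (sample_index, label), where label is "open" for an
    upward shift and "close" for a downward shift.
    """
    # Estimate the resting level and noise scale from the first samples.
    mu = mean(signal[:baseline_n])
    sigma = stdev(signal[:baseline_n]) or 1.0  # avoid dividing by zero

    pos = neg = 0.0  # upper and lower cumulative sums
    events = []
    for i, x in enumerate(signal):
        z = (x - mu) / sigma          # standardized deviation from reference
        pos = max(0.0, pos + z - k)   # accumulates upward drift
        neg = max(0.0, neg - z - k)   # accumulates downward drift
        if pos > h or neg > h:
            events.append((i, "open" if pos > h else "close"))
            # Crude re-referencing: treat the current sample as the new level
            # and restart both sums (an assumption made for this sketch).
            mu = x
            pos = neg = 0.0
    return events


# Synthetic feature trace: mouth closed, opened for five frames, closed again.
closed = [2.0, 2.1, 1.9, 2.0, 2.1, 1.9, 2.0, 2.1, 1.9, 2.0]
trace = closed + [10.0] * 5 + [2.0] * 5
print(cusum_events(trace))
```

In an interface of the kind described above, an "open" event would be mapped to a mouse click at the current gaze position. A detector over all seven features would need a rule for combining them, which the abstract does not specify.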

Funding

Funding was provided by the Ministry of Science and Technology, Taiwan (Grant No. MOST 104-2221-E-214-052).

Ethics declarations

Conflict of interest

The authors declare that no financial or personal relationships with other people or organizations have inappropriately influenced the work submitted here.

About this article

Cite this article

Hwang, IS., Tsai, YY., Zeng, BH. et al. Integration of eye tracking and lip motion for hands-free computer access. Univ Access Inf Soc 20, 405–416 (2021). https://doi.org/10.1007/s10209-020-00723-w

DOI: https://doi.org/10.1007/s10209-020-00723-w